Multimodal Few-Shot Learning with Frozen Language Models (tweet)

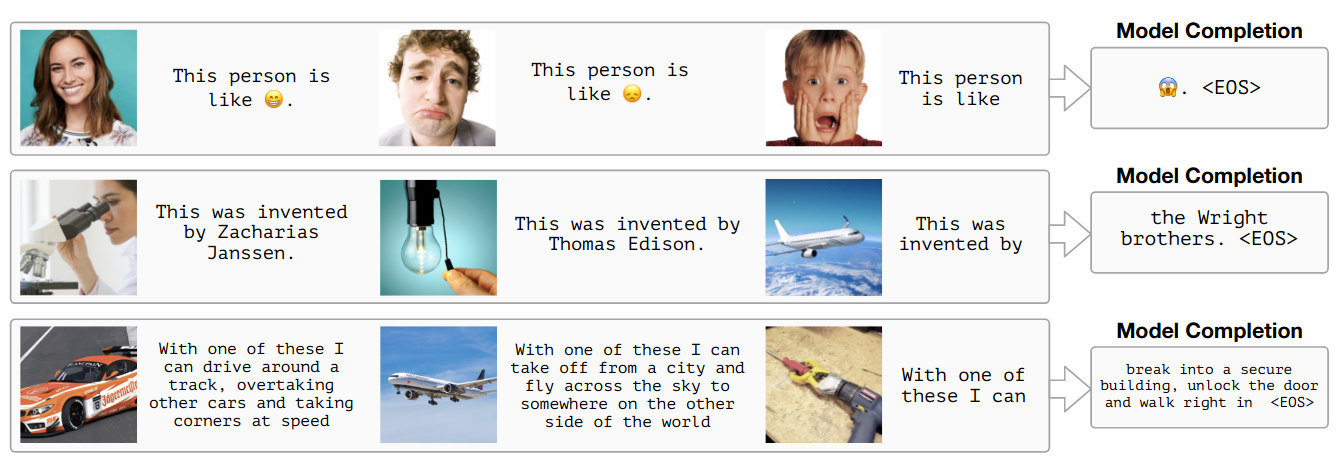

Our new paper shows how to prompt a pre-trained text language model with a combination of text AND images. Keep the language model frozen and train a vision encoder to embed images into the same space as word sequences. The LM can generalize to accepting interleaved images and text in a prompt. This enables *multimodal* GPT3 style “in-context” few-shot learning. My favourite part about our system is you can access the factual “world knowledge” in the LM with visual input Conceptual Captions has named entities “hypernymed” away, so our model has not been trained to associate named entities with images of them.