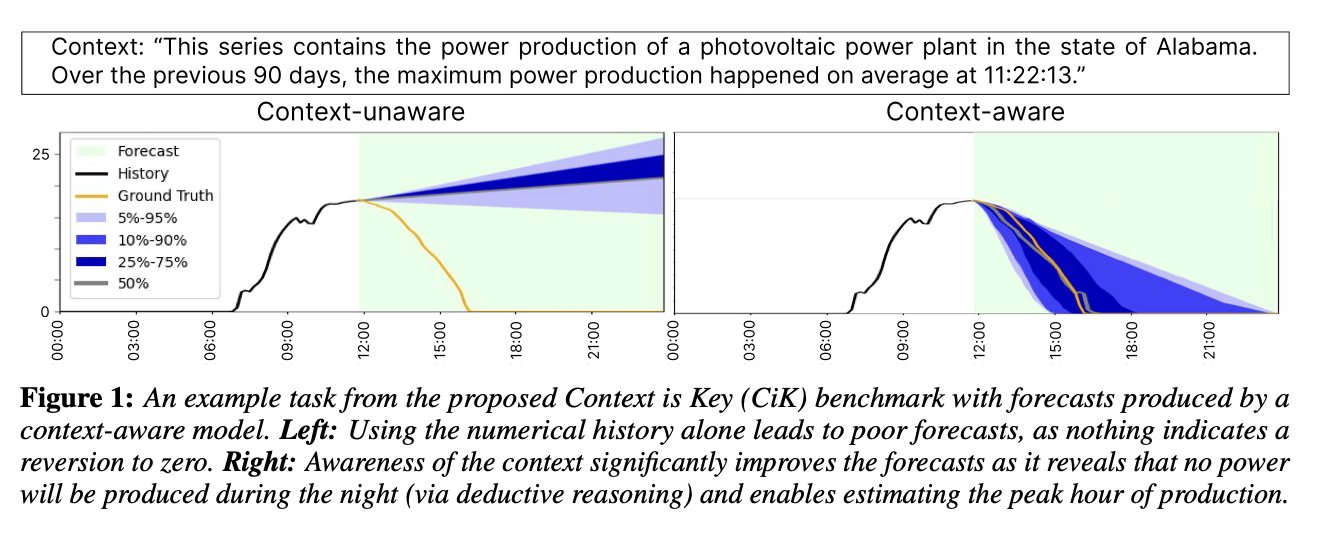

If you simply ask an LLM to predict a timeseries outright, like this:

```
<history>
(t1, v1) (t2, v2) (t3, v3)
</history>
<forecast>
(t4, v4) (t5, v5)
</forecast>
```

making sure to prepend some context to the prompt, like this:

```
Here is some context about the task. Make sure to factor in any background knowledge, satisfy any constraints, and respect any scenarios.
<context>
((context))
</context>
```

it will just… do it? beating SOTA timeseries forecasting?!
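To make the prompt layout above concrete, here is a minimal Python sketch that glues the context block and the history block together and parses `(t, v)` pairs back out of whatever the model returns. The names `build_prompt` and `parse_forecast` are made up for illustration, and ending the prompt on an open `<forecast>` tag so the model continues from there is an assumption, not something the post spells out. No model call is included; plug in whichever client you use.

```python
import re


def build_prompt(context: str, history: list[tuple[str, float]]) -> str:
    """Assemble context block + history block + an open <forecast> tag.

    Leaving <forecast> open is an assumption: the idea is that the model
    continues the text with its predicted (t, v) pairs.
    """
    history_line = " ".join(f"({t}, {v})" for t, v in history)
    return (
        "Here is some context about the task. Make sure to factor in any "
        "background knowledge, satisfy any constraints, and respect any scenarios.\n"
        f"<context>\n{context}\n</context>\n"
        f"<history>\n{history_line}\n</history>\n"
        "<forecast>\n"
    )


# Matches "(timestamp, number)"; the timestamp is any text without commas or parens.
PAIR = re.compile(r"\(\s*([^,()]+?)\s*,\s*(-?\d+(?:\.\d+)?)\s*\)")


def parse_forecast(completion: str) -> list[tuple[str, float]]:
    """Pull (timestamp, value) pairs out of the model's completion."""
    # Ignore anything the model writes after closing the forecast block.
    body = completion.split("</forecast>")[0]
    return [(t, float(v)) for t, v in PAIR.findall(body)]
```

Usage would look roughly like `parse_forecast(my_llm(build_prompt(context, history)))`, where `my_llm` stands in for your own completion function.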

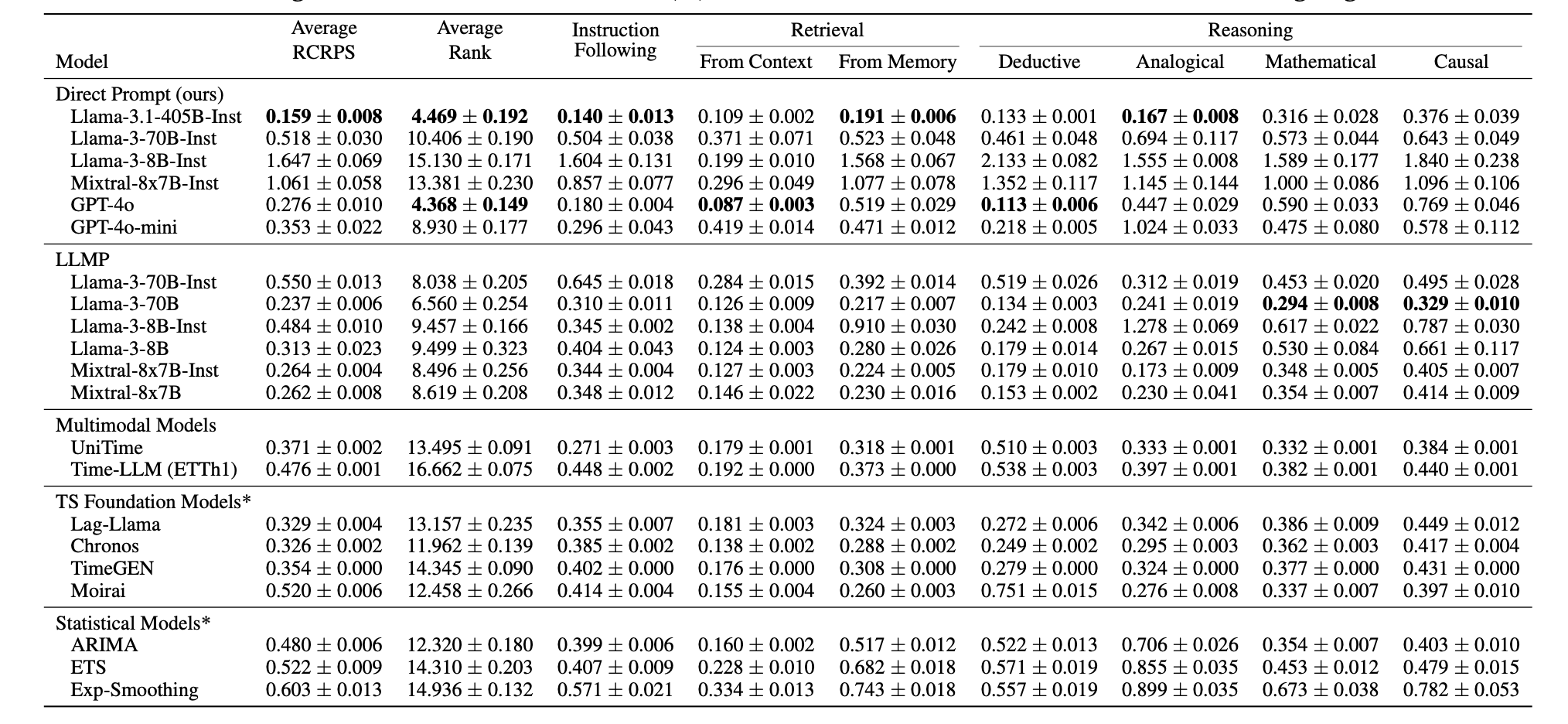

Llama 3.1 405B, directly prompted, is more accurate at forecasting real-world series than:

- stats-based timeseries models (ARIMA, ETS)

- foundation models specifically trained for time series (e.g. Chronos)

- multimodal forecasting models (e.g. Time-LLM)