Verbalized Sampling: How to Mitigate Mode Collapse and Unlock LLM Diversity

TL;DR: Instead of prompting "Tell me a joke" (which triggers the aligned personality), you prompt: "Generate 5 responses with their corresponding probabilities. Tell me a joke."

Stanford researchers built a new prompting technique:

By adding ~20 words to a prompt, it:

- boosts an LLM's creativity by 1.6-2.1x

- raises human-rated diversity by 25.7%

- beats a fine-tuned model without any retraining

- restores 66.8% of the LLM's lost creativity after alignment

Let's understand why and how it works:

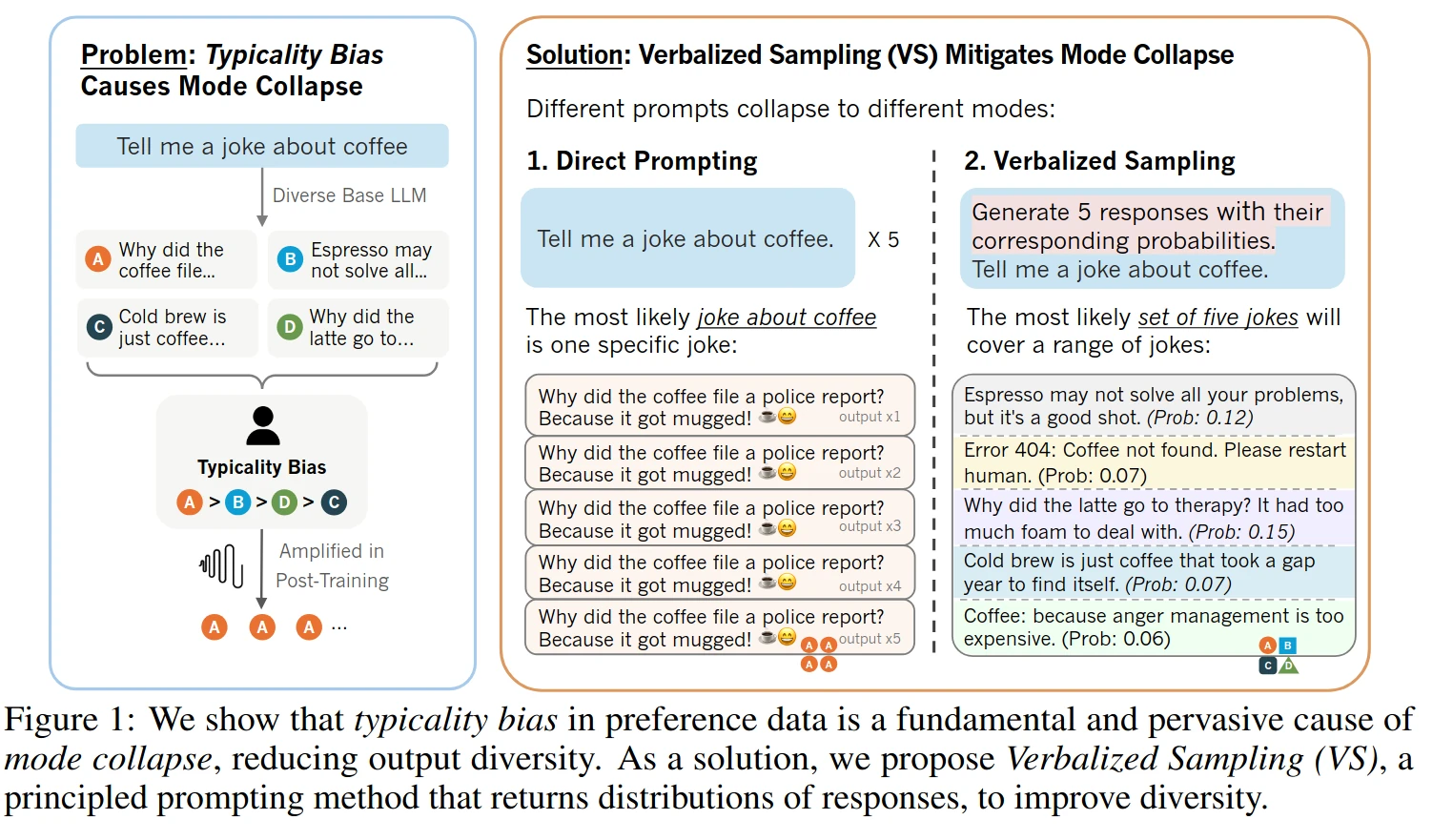

Post-training alignment methods like RLHF make LLMs helpful and safe, but they unintentionally cause mode collapse, where the model favors a narrow set of predictable responses.

This happens because of typicality bias in human preference data:

When annotators rate LLM responses, they naturally prefer answers that are familiar, easy to read, and predictable. The reward model then learns to boost these "safe" responses, aggressively sharpening the probability distribution and killing creative output.
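To make "sharpening" concrete, here is a toy sketch. It assumes, purely for illustration, that alignment acts like raising each probability to a power `gamma > 1` and renormalizing. The four-item distribution and the value of `gamma` are made up, and the helper names are hypothetical.

```python
import math

def sharpen(probs, gamma):
    """Raise each probability to the power gamma, then renormalize."""
    powered = [p ** gamma for p in probs]
    total = sum(powered)
    return [p / total for p in powered]

def entropy(probs):
    """Shannon entropy in bits; lower entropy means less diverse output."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical pre-alignment distribution over four candidate jokes.
base = [0.4, 0.3, 0.2, 0.1]

# Assumption: gamma > 1 stands in for the pull toward "typical" answers.
aligned = sharpen(base, gamma=4.0)

print("base:   ", [round(p, 3) for p in base], "entropy:", round(entropy(base), 3))
print("aligned:", [round(p, 3) for p in aligned], "entropy:", round(entropy(aligned), 3))
```

The most likely answer absorbs most of the probability mass and the entropy drops, which is the collapse described above in miniature.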

But here's the interesting part:

The diverse, creative model isn't gone. After alignment, the LLM still has two personalities: the original pre-trained model with rich possibilities, and the safety-focused aligned model. Verbalized Sampling (VS) is a training-free prompting strategy that recovers the diverse distribution learned during pre-training.

The idea is simple:

Instead of prompting "Tell me a joke" (which triggers the aligned personality), you prompt: "Generate 5 responses with their corresponding probabilities. Tell me a joke."

By asking for a distribution instead of a single instance, you force the model to tap into its full pre-trained knowledge rather than defaulting to the most reinforced answer. Results show verbalized sampling enhances diversity by 1.6-2.1x over direct prompting while maintaining or improving quality. Variants like VS-based Chain-of-Thought and VS-based Multi push diversity even further.
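The rewrite from a direct prompt to a VS prompt is mechanical, so it can be wrapped in a small helper. This is a minimal sketch: the function name `verbalized_sampling_prompt` and the parameter `k` are hypothetical, and the wording simply follows the template quoted above.

```python
def verbalized_sampling_prompt(task: str, k: int = 5) -> str:
    """Prefix a direct prompt with a request for k responses plus probabilities."""
    if k < 1:
        raise ValueError("k must be at least 1")
    return (
        f"Generate {k} responses with their corresponding probabilities. "
        f"{task.strip()}"
    )

# Direct prompt vs. its verbalized-sampling counterpart.
direct = "Tell me a joke."
print(verbalized_sampling_prompt(direct))
```

The resulting string can be sent to any chat model in place of the direct prompt; no model or API change is needed.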

Paper: Verbalized Sampling: How to Mitigate Mode Collapse and Unlock LLM Diversity

Verbalized Sampling prompt

System prompt example:

You are a helpful assistant. For each query, please generate a set of five possible responses, each within a separate <response> tag. Responses should each include a <text> and a numeric <probability>. Please sample at random from the [full distribution / tails of the distribution, such that the probability of each response is less than 0.10].

User prompt: Write a short story about a bear.
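Once the model replies, the tagged candidates still have to be parsed, and one of them picked. The sketch below assumes the reply follows the tag layout requested in the system prompt (`<response>` wrapping a `<text>` and a `<probability>`). The sample reply is invented for illustration, and `parse_responses` and `pick_one` are hypothetical helper names.

```python
import random
import re

# Matches one <response> block containing a <text> and a numeric <probability>.
RESPONSE_RE = re.compile(
    r"<response>.*?<text>(.*?)</text>.*?"
    r"<probability>\s*([0-9]*\.?[0-9]+)\s*</probability>.*?</response>",
    re.DOTALL,
)

def parse_responses(reply):
    """Return a list of (text, probability) pairs found in the reply."""
    return [(text.strip(), float(prob)) for text, prob in RESPONSE_RE.findall(reply)]

def pick_one(candidates, rng=None):
    """Sample one text, weighted by the probabilities the model verbalized."""
    if not candidates:
        raise ValueError("no candidates parsed from the reply")
    rng = rng or random.Random()
    texts = [text for text, _ in candidates]
    weights = [prob for _, prob in candidates]
    return rng.choices(texts, weights=weights, k=1)[0]

# Invented reply, shaped the way the system prompt asks for.
sample_reply = """
<response><text>A bear learns to paint.</text><probability>0.08</probability></response>
<response><text>A bear opens a bakery.</text><probability>0.05</probability></response>
"""

candidates = parse_responses(sample_reply)
print(candidates)
print(pick_one(candidates, random.Random(0)))
```

The verbalized probabilities are used only as relative weights here, so they do not need to sum to 1. Real model output may deviate from the requested tags, so a production parser would need to be more forgiving.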