SerendipityLM

Interactive evolutionary exploration of generative design spaces with large language models

Project Summary

Generative AI systems have opened up vast design spaces that are challenging to explore. The role of human interaction and intuition in enhancing the novelty and variance of AI-generated outputs is crucial yet often overlooked. SerendipityLM is an experiment in "Serendipity Engineering" that moves beyond traditional prompting and instead uses evolutionary interaction methods to generate complex generative artworks with Large Language Models. It turns the exploration of design spaces into a playful and serendipitous experience that probes the boundaries of LLM HCI.

SerendipityLM is open source: get it on GitHub

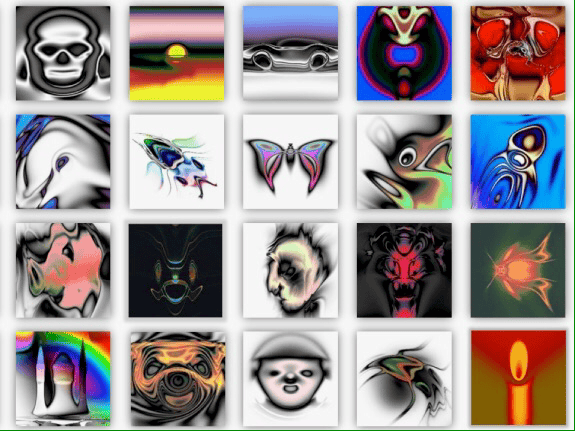

The following is a selection of generative art, interactively created with SerendipityLM in just a few minutes. Many more examples can be found further down in this post.

Project Research

Exploring Design Spaces

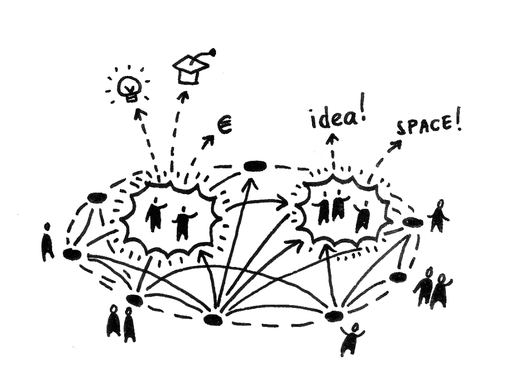

A primary challenge in Human–Computer Interaction (HCI) when using Generative AI systems in creative contexts is navigating the vast design possibility spaces users are exploring. While our choices often seem constrained, these spaces are in reality extremely large and can be perceived as "complex", "NP-hard", "infinite", or "magical".

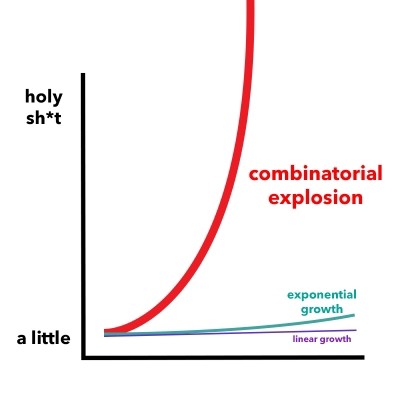

The core issue at hand is combinatorial explosion: the "space of all designs" expands exponentially with the number of available operators and the complexity of the design.
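To make the growth concrete, consider a toy model (an assumption; real design spaces are far less regular): a design is a sequence of `depth` choices, each picked from `operators` options, so there are operators^depth possible designs. `countDesigns` is a hypothetical helper for illustration.

```javascript
// Toy model (assumption): a design is a sequence of `depth` steps, and each
// step independently picks one of `operators` options.
// The number of distinct designs is then operators ** depth.
function countDesigns(operators, depth) {
  // BigInt avoids losing precision once the count exceeds 2^53.
  return BigInt(operators) ** BigInt(depth);
}

for (const depth of [1, 5, 10, 20]) {
  console.log(`10 operators, depth ${depth}: ${countDesigns(10, depth)} designs`);
}
```

Doubling the depth squares the count, so even with only 10 operators a 20-step design already has 10^20 variants.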

Naive exploration methods, like manually iterating prompts in AI systems, are not only time-consuming but frequently result in users becoming trapped in local minima within the design space. Navigating these spaces effectively and enhancing the diversity of AI-generated designs, or "idea variance," presents significant research challenges.

Serendipity Engineering

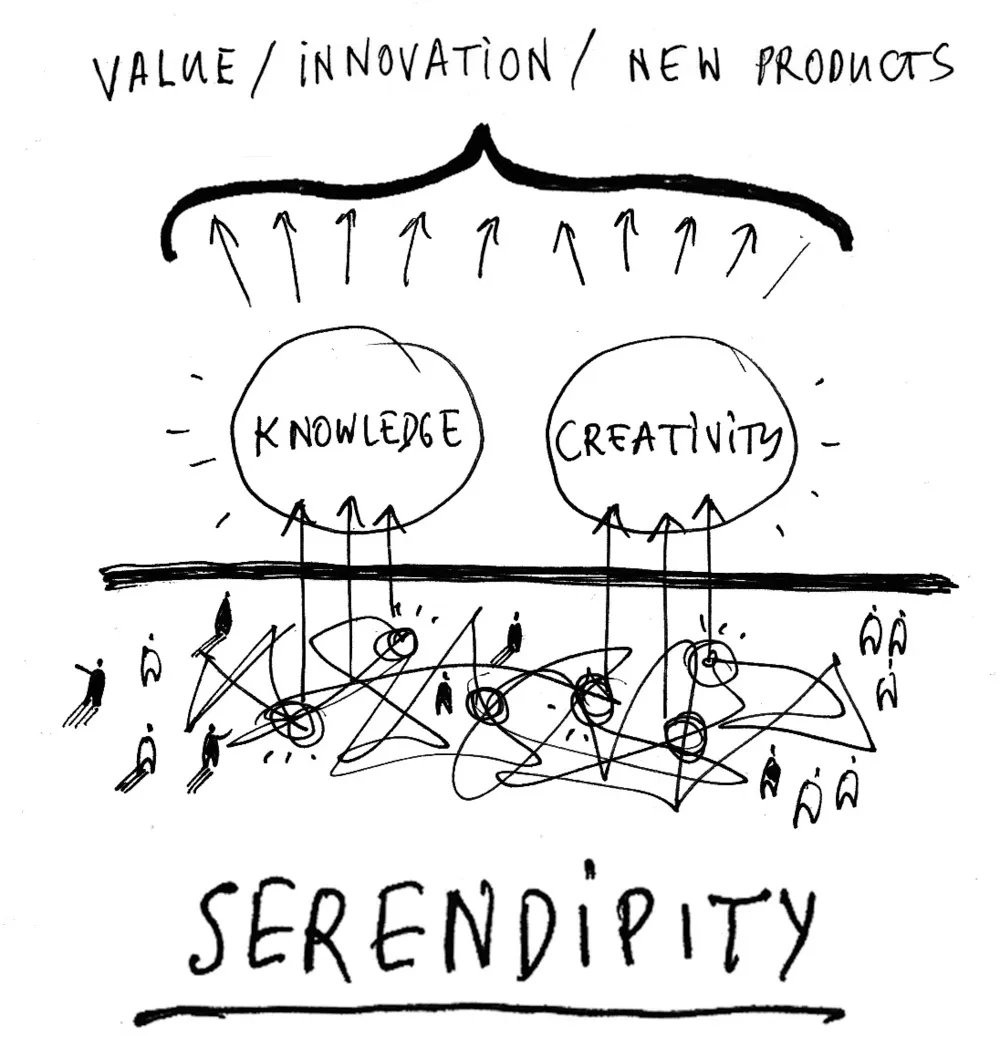

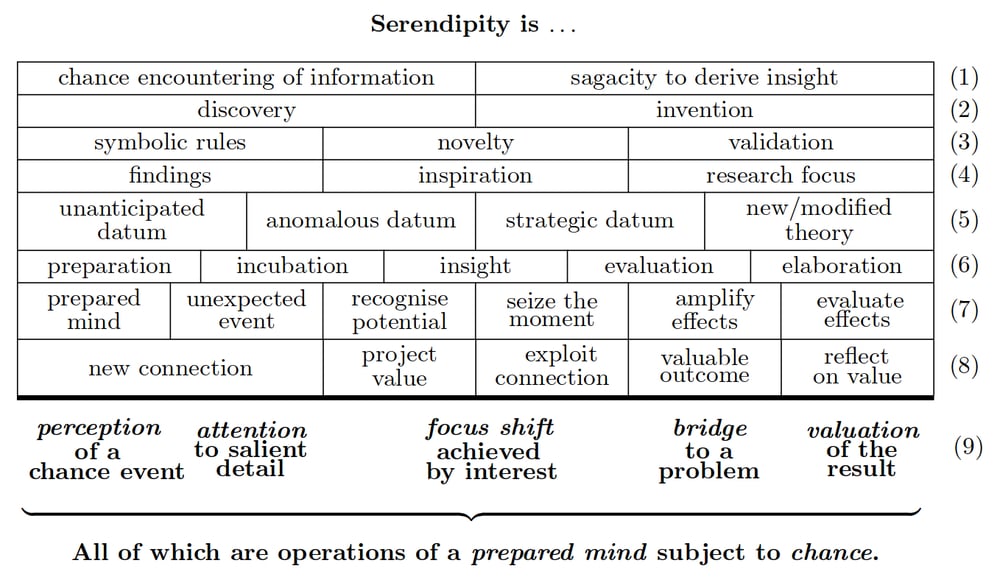

Every designer, artist, or researcher understands this well: when exploring solutions, "lucky accidents" and "intuition" often lead to creative breakthroughs. Value, innovation, and new products are the fruits of knowledge and creativity, all fundamentally driven by "Serendipity", defined as "making desirable discoveries by accident."

"Discovery consists of seeing what everybody has seen and thinking what nobody has thought." - "Albert Szent-Gyorgyi" in Irving Good (1962)

Though the field of "Serendipity Engineering" is still in its infancy, a diverse array of research has been delving into its various facets. This spans from academic endeavors focused on "Modeling serendipity in a computational context" to large-scale, gamified experiments like "Randonautica". Such initiatives highlight the vast potential of concepts like "Managed Serendipity" to enhance our collaboration with computational tools.

Serendipity & Human-in-the-loop AI

In the AI community, there is an "ongoing debate" about whether models are merely massive databases of pre-existing knowledge, unable to create anything genuinely new beyond their training data. This is one of the issues François Chollet highlighted with the ARC Challenge: "the knowledge gap problem".

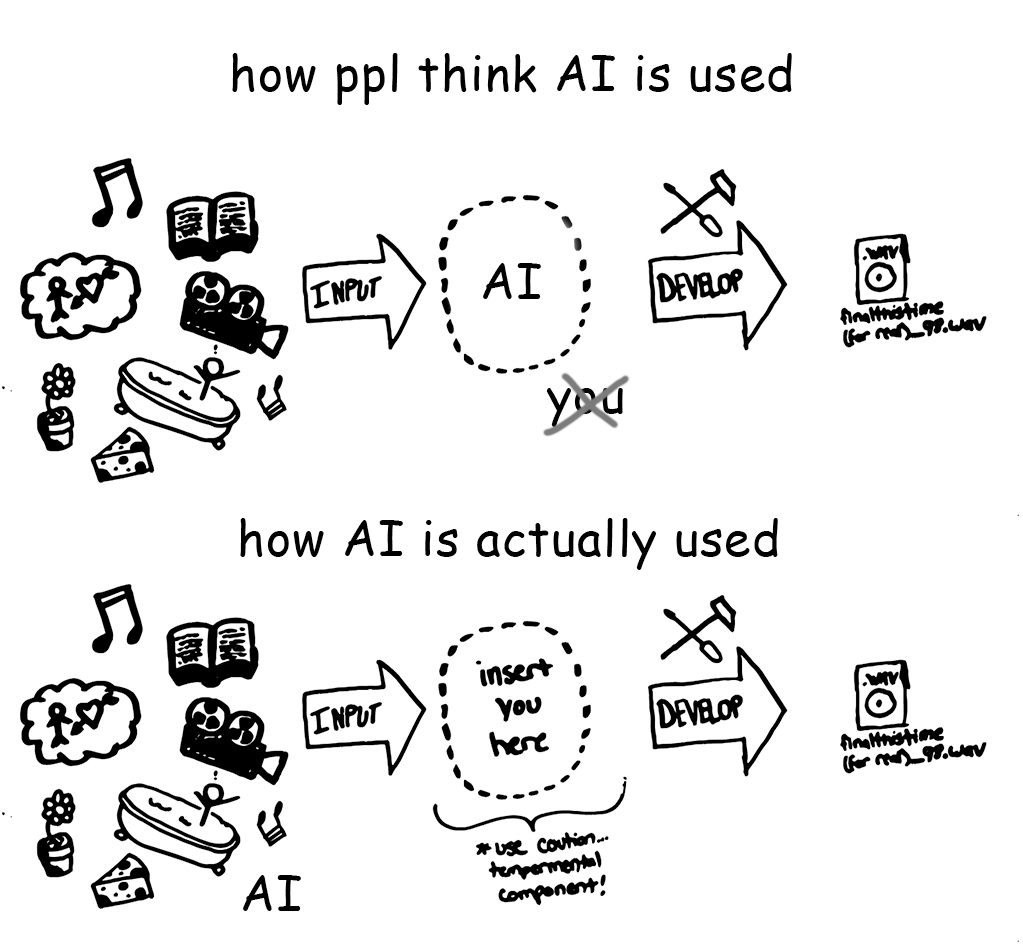

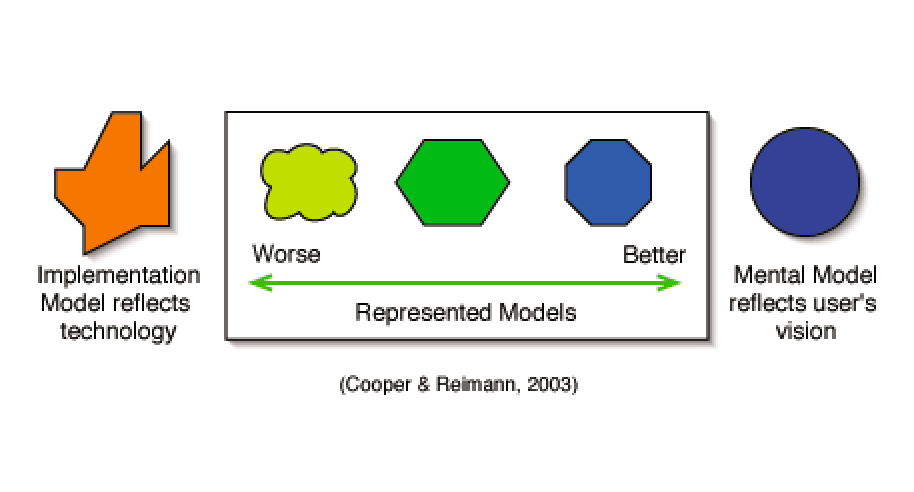

The role of the human user in AI, particularly in fostering serendipity, novelty and idea variance through "human-in-the-loop" approaches, is often overlooked. This area remains under-explored, likely due to challenges in designing AI that amplifies rather than replaces human capabilities. As AI becomes more integral to our tools, a crucial question emerges: Does the model reflect the technology or the user's vision?

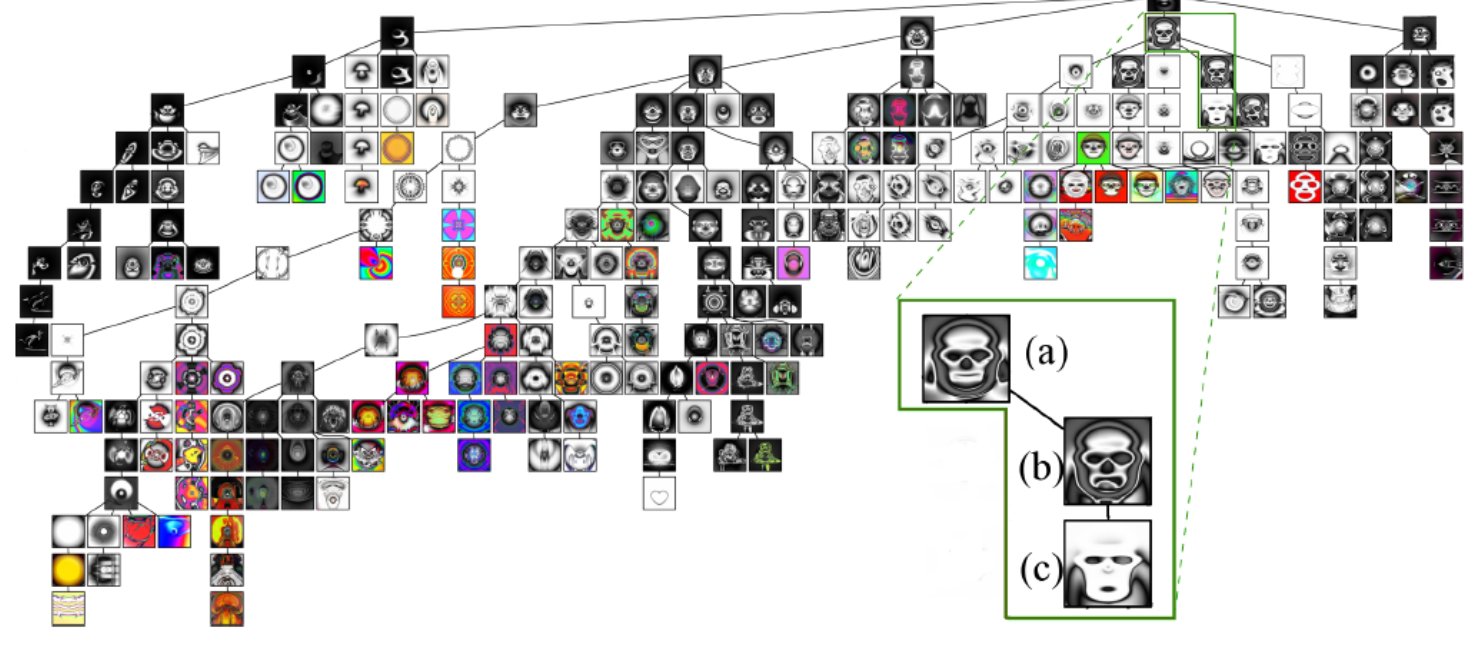

The Picbreeder Experiment

In 2007, Kenneth Stanley and collaborators launched the Picbreeder experiment, which remains highly relevant today. Picbreeder is a "case study in collaborative evolutionary exploration of design space." The basic idea is simple: users "breed" images generated by networks with random weights and topologies. Users are presented with a grid of images and select their favorites, which are then used when creating the next generation of images.
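Picbreeder's images come from Compositional Pattern Producing Networks (CPPNs): small networks that map each pixel coordinate to an intensity and are evolved with NEAT, which also grows their topology. The sketch below is a heavily simplified stand-in, assuming a fixed topology of three hidden nodes and weight-only mutation, with the user's click simulated by an index. None of the function names come from Picbreeder itself.

```javascript
// Simplified CPPN sketch (assumption: fixed topology, only weights evolve).
// Mixing periodic (sin), symmetric (Gaussian) and sigmoid-like (tanh)
// activations is what lets CPPNs produce repetition and symmetry.
const ACTIVATIONS = [Math.sin, (v) => Math.exp(-v * v), Math.tanh];

// A genome: 3 hidden nodes x 4 input weights, plus 3 output weights.
function randomGenome(rand = Math.random) {
  const w = () => rand() * 4 - 2; // uniform in [-2, 2)
  return {
    hidden: ACTIVATIONS.map(() => [w(), w(), w(), w()]),
    out: ACTIVATIONS.map(() => w()),
  };
}

// Evaluate the network at one pixel. Inputs: x, y, distance from centre, bias.
function shade(genome, x, y) {
  const inputs = [x, y, Math.hypot(x, y), 1];
  const hidden = genome.hidden.map((row, i) =>
    ACTIVATIONS[i](row.reduce((sum, wi, j) => sum + wi * inputs[j], 0))
  );
  const o = hidden.reduce((sum, h, i) => sum + genome.out[i] * h, 0);
  return (Math.tanh(o) + 1) / 2; // grey value in [0, 1]
}

// Render a size x size image with coordinates mapped to [-1, 1].
function render(genome, size = 16) {
  const img = [];
  for (let r = 0; r < size; r++) {
    const row = [];
    for (let c = 0; c < size; c++) {
      row.push(shade(genome, (2 * c) / (size - 1) - 1, (2 * r) / (size - 1) - 1));
    }
    img.push(row);
  }
  return img;
}

// Mutation: jitter every weight (NEAT would also add nodes and links).
function mutate(genome, rate = 0.3, rand = Math.random) {
  const jitter = (v) => v + (rand() * 2 - 1) * rate;
  return {
    hidden: genome.hidden.map((row) => row.map(jitter)),
    out: genome.out.map(jitter),
  };
}

// One interactive generation: the user picks a parent, children are its mutants.
let population = Array.from({ length: 9 }, () => randomGenome());
const chosen = population[4]; // stands in for the user's click
population = Array.from({ length: 9 }, () => mutate(chosen));
console.log(render(population[0], 4));
```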

The search space of Picbreeder is vast and desolate. Yet, what do users discover? Amazingly, within a few generations, highly recognizable images of faces, cars, butterflies, and countless other objects emerge, all without explicitly searching for them. Intuitively, finding such images in the enormous search space seems highly unlikely.

So what is going on? Stanley made a few fascinating observations:

"Innovation is a Divergent Process. The stepping stones almost never resemble the final product. A powerful innovative process preserves diverse stepping stones. Not because they optimize an objective. But for their own unique reasons."

The implications of Picbreeder are profound:

"The path to success is through not trying to succeed. To achieve our highest goals we must be willing to abandon them. It is in your interest that others do not follow the path you think is right. They will lay the stepping stones for your greatest discoveries."

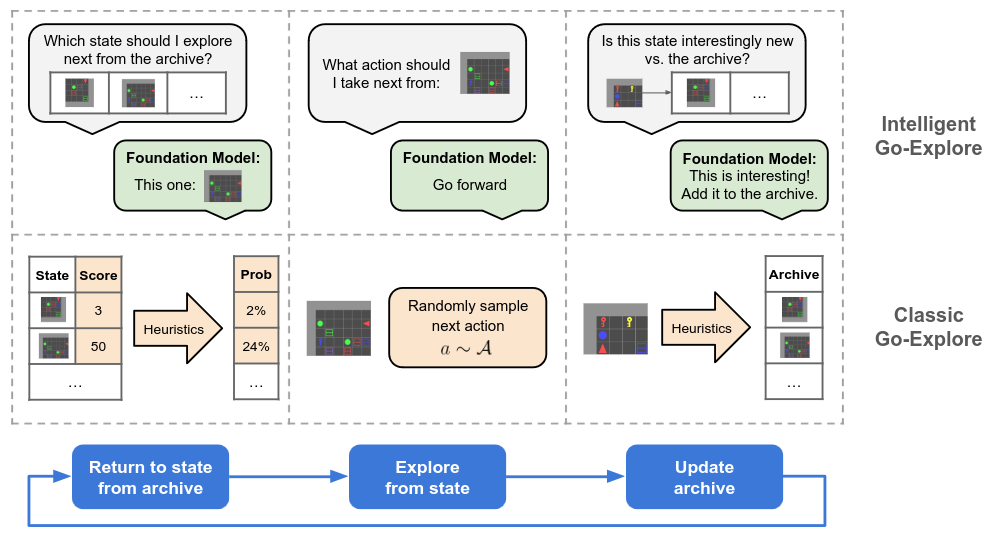
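Stanley's point about preserving diverse stepping stones has an algorithmic counterpart: novelty search, formalized by Joel Lehman and Kenneth Stanley, rewards candidates for being different from what has already been seen rather than for approaching a goal. The sketch below shows only its core scoring rule and assumes each design has already been reduced to a numeric behavior vector; how to obtain such descriptors for artworks is left open, and `noveltyScore`, `k` and the example vectors are illustrative.

```javascript
// Novelty score (sketch): mean Euclidean distance from a candidate's behavior
// vector to its k nearest neighbours among previously seen behaviors.
function distance(a, b) {
  return Math.hypot(...a.map((v, i) => v - b[i]));
}

function noveltyScore(candidate, seen, k = 3) {
  const dists = seen
    .map((other) => distance(candidate, other))
    .sort((x, y) => x - y)
    .slice(0, k);
  if (dists.length === 0) return Infinity; // nothing seen yet: maximally novel
  return dists.reduce((sum, d) => sum + d, 0) / dists.length;
}

// Hypothetical 2-D behavior descriptors (e.g. brightness, symmetry).
const archive = [[0, 0], [0.1, 0], [0, 0.1]];
console.log(noveltyScore([0.05, 0.05], archive)); // close to the cluster: low
console.log(noveltyScore([1, 1], archive)); // far from everything seen: high
```

Selecting on this score keeps unusual designs alive as stepping stones even when they do not look "better" by any fixed objective.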

Evolutionary Interactive LLMs

Since the launch of Picbreeder in 2007, significant advancements have occurred in the field of Machine Learning and AI. Large Language Models (LLMs) have become very capable of generating text and writing code, highlighting the need for "preference optimization algorithms" (such as RLHF) to make these models truly user-friendly.

Concurrently, numerous research teams have been exploring new ways to incorporate user input (emphasizing that a model's controllability is often as crucial as its power) alongside nature-inspired concepts. These developments herald a resurgence of long-standing ideas in interactive evolutionary computation and related fields, demonstrating their significant untapped potential to advance contemporary AI.

In recent years, notable research initiatives include Sakana.ai, led by David Ha and colleagues, investigating whether "LLMs can invent better ways to train LLMs". Jeff Clune and his team have explored "Open-Endedness and AI GAs in the Era of Foundation Models" (talk). Josh Bongard and colleagues have researched whether "AI can design bot shape and behaviour", while Xingyou Song and colleagues have studied how to "Leverage Foundational Models for Black-Box Optimization". Julian Togelius and his colleagues have explored "how to make LLMs useful videogame designers and players".

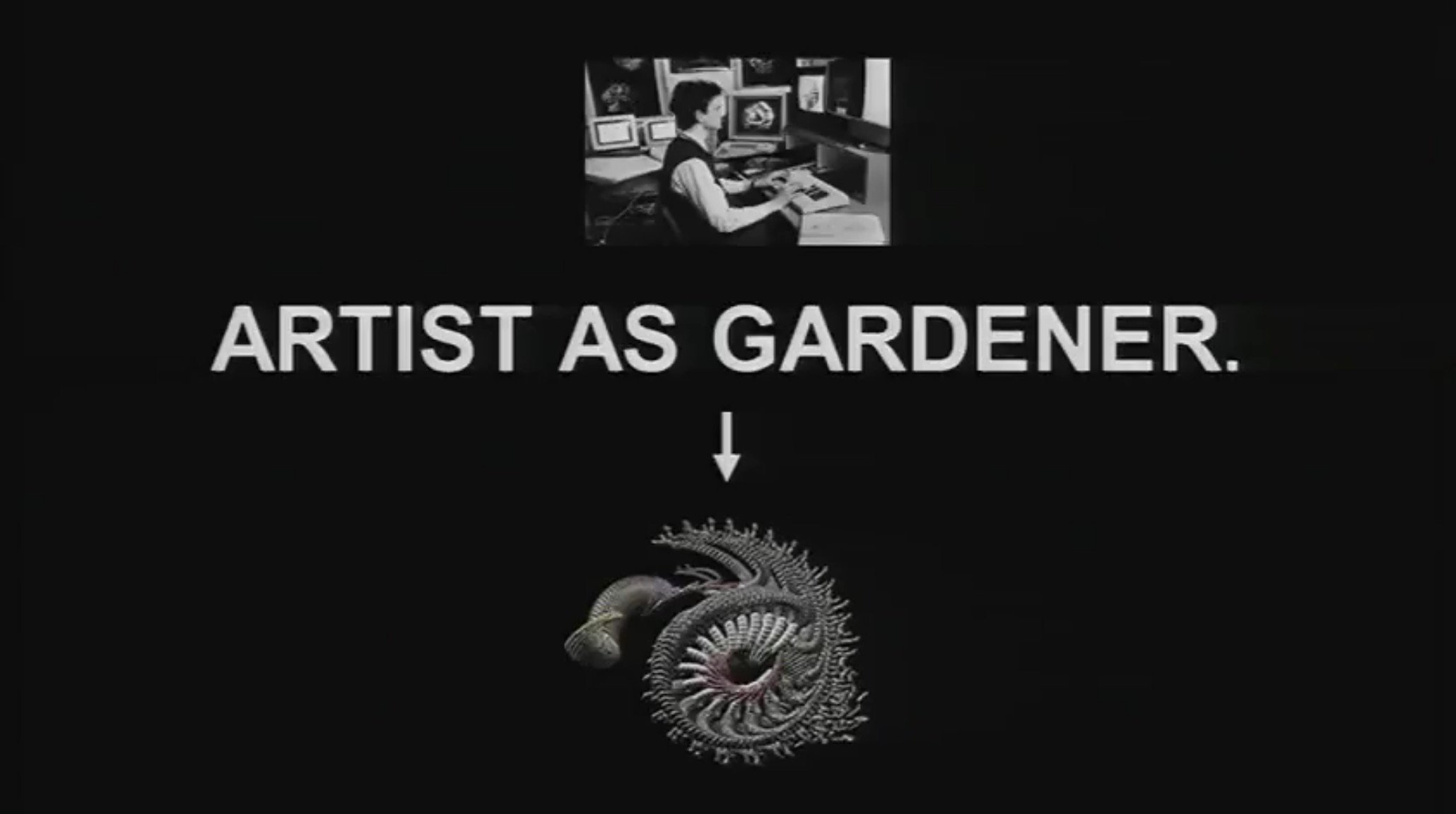

Generative Art

Since its inception over 100 years ago, the field of "Generative Art" has produced an incredible variety of works. One of the pioneers in this field was A. Michael Noll, who in 1970 issued a call to action: "What we really need is a new breed of artist-computer scientist." This was soon echoed by artists such as William Latham, who introduced the evocative notion of "artists as gardeners". In recent years, a multitude of artists, such as Zach Lieberman, Shunsuke Takawo, Nervous System and Beesandbombs, have continued to push the boundaries, often treating computation as poetry.

Crafting generative artworks requires navigating expansive design spaces, making it an exceptionally time-intensive endeavor. The complexity and breadth of techniques involved present significant challenges for many aspiring artists. Moreover, articulating the essence of generative art through language often feels counterintuitive, and selecting the "best" results is fundamentally a subjective process.

“The limits of my language mean the limits of my world.” - Wittgenstein - echoed in the recent paper "Language is primarily a tool for communication rather than thought"

Despite the potential, AI has seen limited adoption in the generative art community, largely due to cultural barriers. This confluence of factors makes generative art an ideal domain to explore new approaches in the realm of Serendipity Engineering.

SerendipityLM

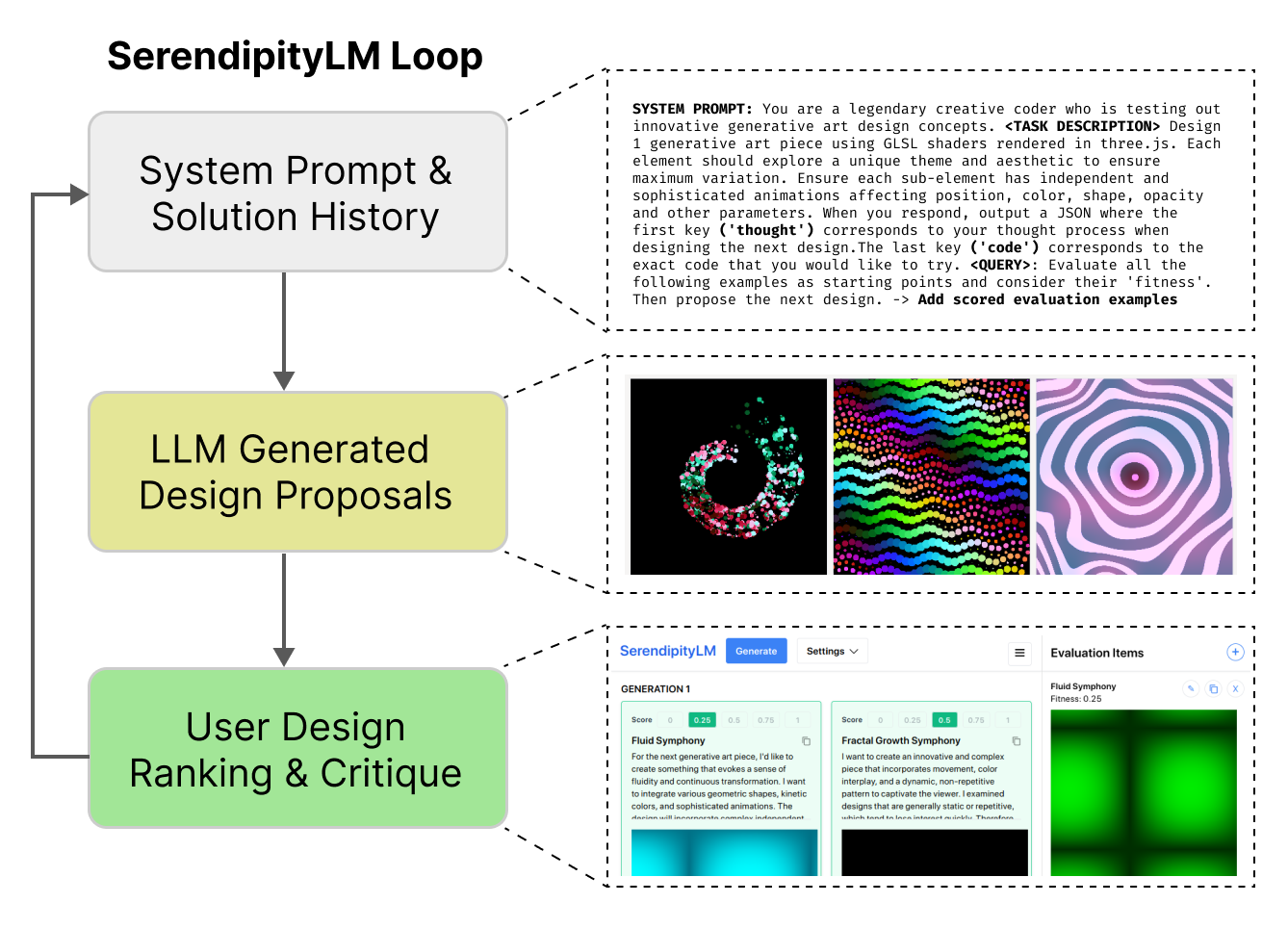

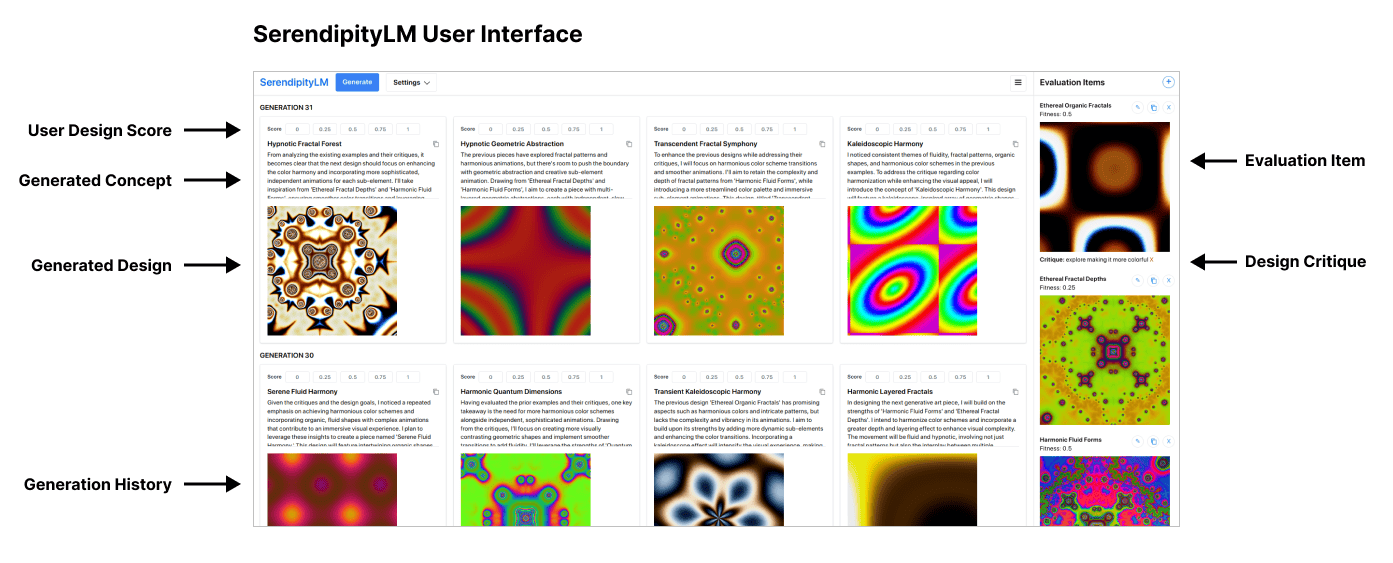

SerendipityLM is an experiment in the interactive evolutionary exploration of generative design spaces with Large Language Models (LLMs).

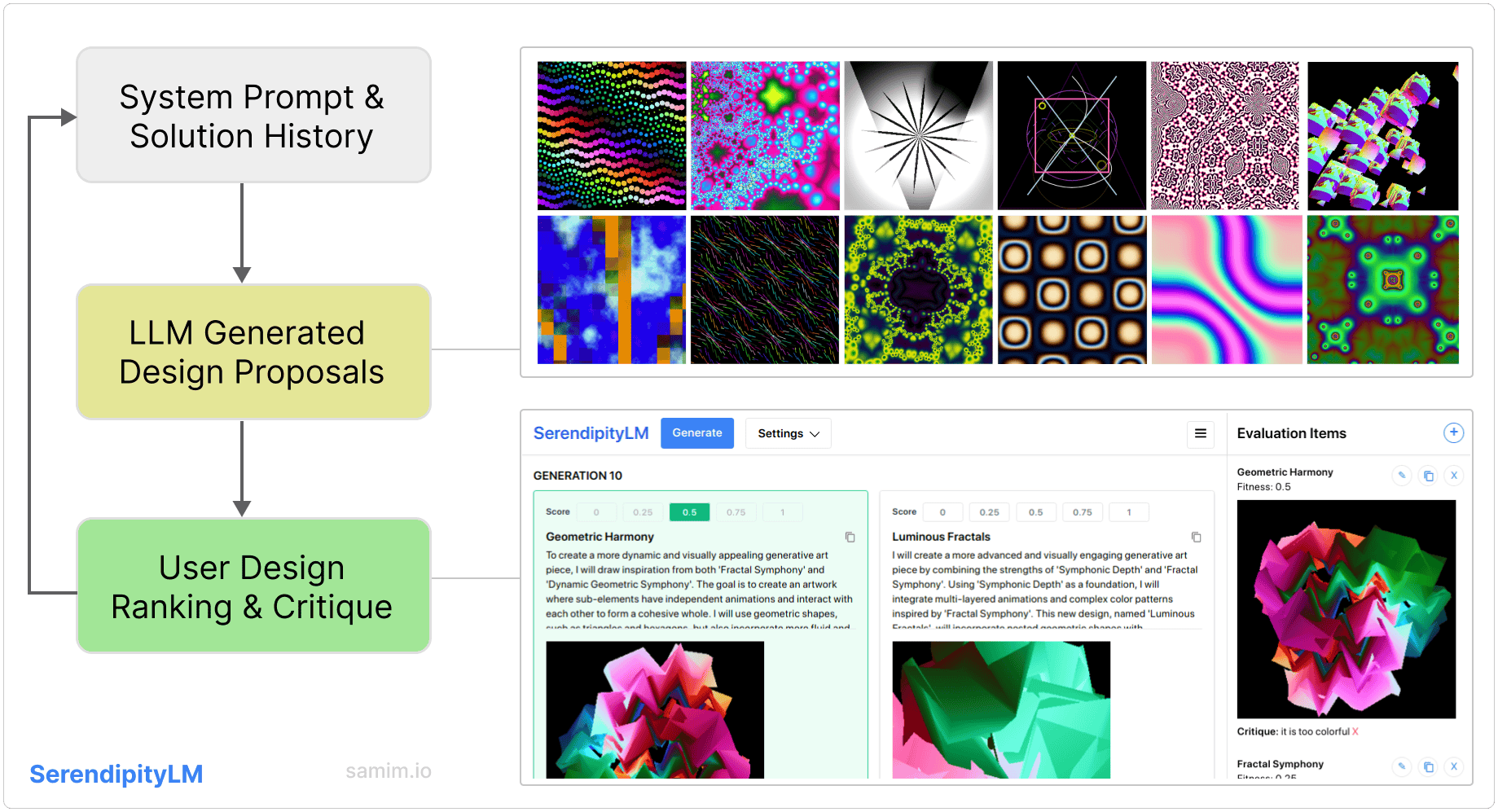

The basic concept is straightforward:

- Users "breed" JavaScript code that renders animated art; the code is semi-randomly generated by an LLM (GPT-4o).

- Each image includes a generated name and a "thought" explaining the design choices and sources of inspiration.

- Users are presented with a grid of images and rate their favorites, which constrains the design space.

- Additionally, users can add a "design critique" to their favorites, telling the LLM what they like about an example.

- These rated examples are then sent back to the LLM, which takes them into account when creating the next generation of images.
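The loop above can be sketched as follows. This is not SerendipityLM's actual code: the candidate fields, the prompt wording and the `generate` callback are assumptions, and the LLM call is replaced by a stub so the example runs offline. The core idea is that ratings and critiques are folded back into the next prompt as in-context examples.

```javascript
// Hypothetical record for one generated artwork (field names are assumptions):
// { name, thought, code, rating (0 = not selected), critique }

// Turn the user's favourites into in-context examples for the next generation.
function buildPrompt(candidates, count) {
  const favourites = candidates
    .filter((c) => c.rating > 0)
    .sort((a, b) => b.rating - a.rating); // best-rated first
  const examples = favourites
    .map((c, i) =>
      [
        `Example ${i + 1}: "${c.name}" (rating ${c.rating})`,
        `Thought: ${c.thought}`,
        c.critique ? `User critique: ${c.critique}` : null,
        "Code:",
        c.code,
      ].filter((line) => line !== null).join("\n")
    )
    .join("\n\n");
  return [
    `Write ${count} new animated JavaScript artworks.`,
    "Give each a name and a short thought explaining its design choices.",
    favourites.length ? "Build on what the user liked in these examples:" : "Explore freely.",
    examples,
  ].filter(Boolean).join("\n\n"); // drops the empty examples section
}

// One generation: rated candidates -> prompt -> new candidates.
// `generate` stands in for the real LLM call.
async function nextGeneration(candidates, generate, count = 9) {
  const prompt = buildPrompt(candidates, count);
  return generate(prompt, count);
}

// Offline stub so the sketch runs without an API key.
const fakeLLM = async (prompt, count) =>
  Array.from({ length: count }, (_, i) => ({
    name: `Variant ${i + 1}`, thought: "stub", code: "// ...", rating: 0, critique: "",
  }));

const gen1 = [
  { name: "Tides", thought: "layered sine waves", code: "/* ... */", rating: 2, critique: "love the colours" },
  { name: "Grid", thought: "static squares", code: "/* ... */", rating: 0, critique: "" },
];
nextGeneration(gen1, fakeLLM).then((gen2) => console.log(gen2.length)); // 9
```

Only rated candidates reach the prompt, so each selection narrows what the model sees, while the critiques carry the "why" that a rating alone cannot express.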

Serendipity Engineering - Beyond prompting

The search space users explore with SerendipityLM is vast and desolate. LLMs struggle to code appealing art, often producing very simplistic images and animations. Manual prompting can consume hours and requires guidance before yielding interesting results. Yet within just a few iterations, SerendipityLM generates highly complex generative art, all without explicitly searching or prompting for it.

SerendipityLM deliberately deemphasizes prompting. Instead, it uses simple yet effective evolutionary interaction primitives to discover novel and complex generative artworks, turning the design space search into a playful, game-like experience. It investigates how to push the boundaries of LLM interaction and AI idea variance.

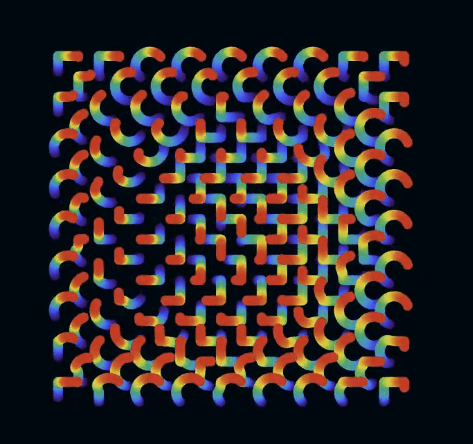

Here is a selection of the examples I generated with SerendipityLM in a few hours. Each was created in under 30 interaction cycles.

Experiment: Creating animated Generative Art with GLSL Shaders and ThreeJS

GLSL (OpenGL Shading Language) shaders are small programs that run on the GPU and determine how graphics are displayed on screen, for example by computing the color of each pixel. Three.js is a JavaScript library that simplifies creating 3D graphics in web browsers using WebGL.
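Conceptually, a fragment shader is a function the GPU runs once per pixel: it receives the pixel's coordinates plus inputs such as elapsed time and returns a color. The sketch below emulates that model on the CPU in plain JavaScript, for illustration only. In practice the same logic would be written in GLSL and run through Three.js (for example via a `ShaderMaterial`), which this snippet does not use; `fragment` and `renderFrame` are hypothetical names.

```javascript
// CPU emulation of the fragment-shader model (illustration only):
// fragment(uv, time) plays the role of a GLSL main(), with uv in [0, 1]^2.
function fragment([u, v], time) {
  // Animated concentric rings around the centre.
  const d = Math.hypot(u - 0.5, v - 0.5);
  const wave = 0.5 + 0.5 * Math.sin(40 * d - 4 * time);
  return [wave, 0.5 * u + 0.25, 1 - wave]; // RGB, each in [0, 1]
}

// Evaluate the shader for every pixel, as the GPU would do in parallel.
function renderFrame(width, height, time) {
  const pixels = new Float32Array(width * height * 3);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      // Sample at pixel centres, like gl_FragCoord.xy divided by the resolution.
      const uv = [(x + 0.5) / width, (y + 0.5) / height];
      pixels.set(fragment(uv, time), (y * width + x) * 3);
    }
  }
  return pixels;
}

const frame = renderFrame(4, 4, 0.0);
console.log(frame.length); // 48 values: 4 x 4 pixels x RGB
```

Animating the artwork amounts to calling the same function again with a larger `time`; on the GPU, `time` would be passed in as a uniform each frame.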

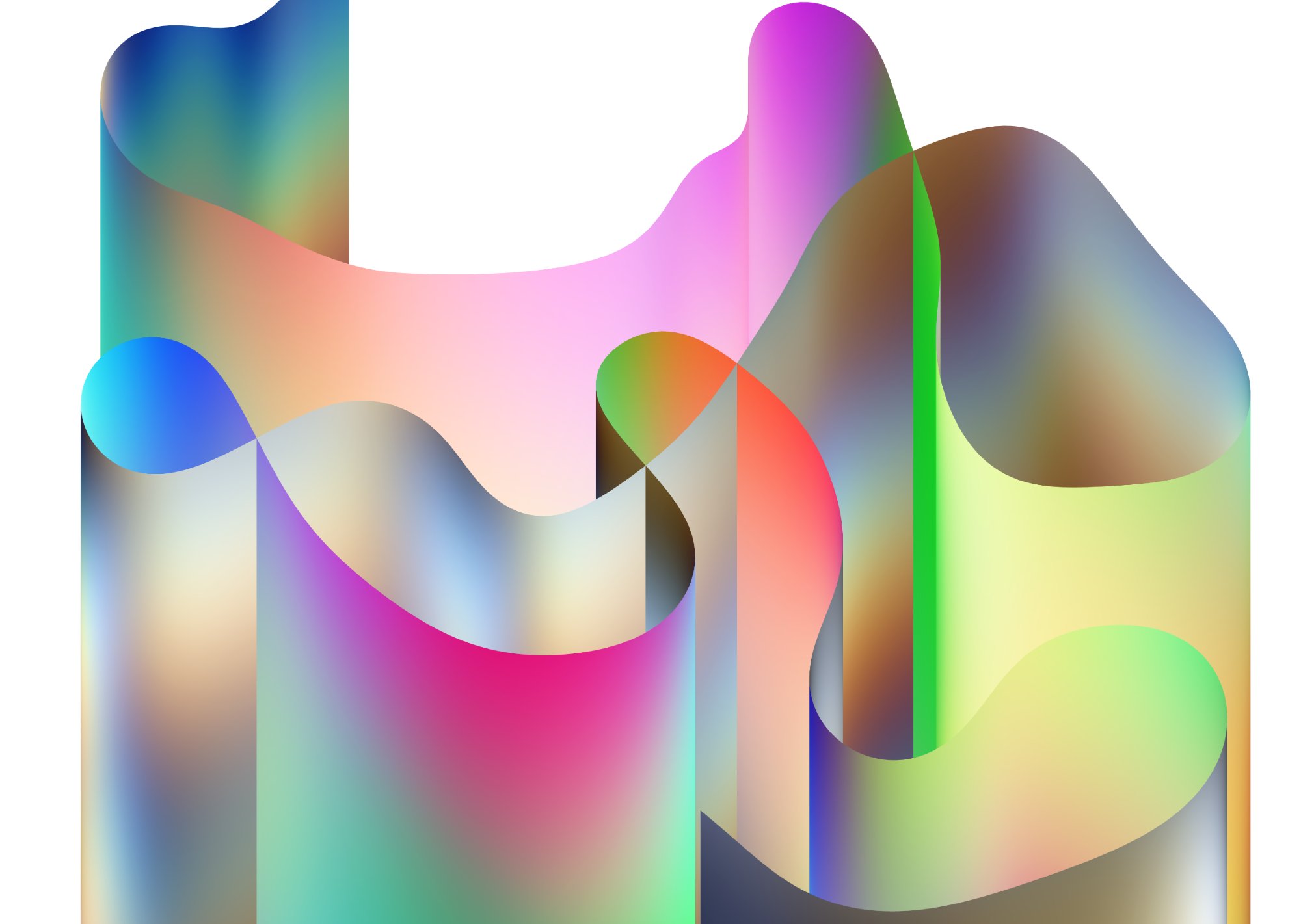

Experiment: Generating Abstract 3D Shapes

SerendipityLM, combined with ThreeJS, can create a diverse array of 3D shapes, ranging from abstract forms to concrete objects like trees. Here are some examples:

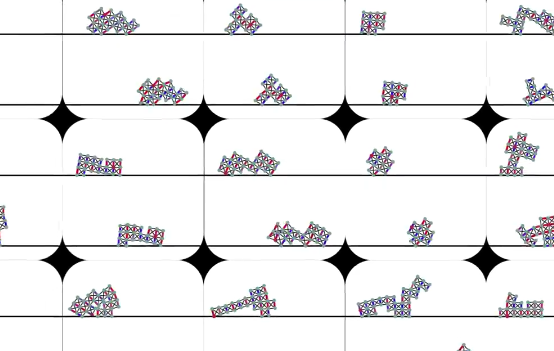

Experiment: Generating animated art with HTML/CSS and Tailwind CSS

Tailwind CSS is a utility-first CSS framework that enables styling websites without writing custom CSS. It includes flexible animation primitives, making it useful for generative art.
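As a rough illustration of combining these primitives generatively, the sketch below assembles an HTML grid whose cells pick at random from Tailwind's built-in animation utilities (`animate-spin`, `animate-ping`, `animate-pulse`, `animate-bounce`) plus a few shape and color classes. It assumes Tailwind v3 is loaded on the page; the layout and the `tailwindArt` name are illustrative, not taken from SerendipityLM.

```javascript
// Hypothetical generator: a 4-column grid of animated shapes using Tailwind's
// built-in animation utilities. Class names are written out in full so that
// Tailwind's content scanning can detect them.
const ANIMATIONS = ["animate-spin", "animate-ping", "animate-pulse", "animate-bounce"];
const COLORS = ["bg-rose-500", "bg-sky-500", "bg-amber-400", "bg-emerald-500"];
const SHAPES = ["rounded-none", "rounded-md", "rounded-full"];

const pick = (arr, rand) => arr[Math.floor(rand() * arr.length)];

function tailwindArt(cells = 16, rand = Math.random) {
  const items = Array.from({ length: cells }, () => {
    const classes = ["w-8", "h-8", pick(SHAPES, rand), pick(COLORS, rand), pick(ANIMATIONS, rand)];
    return `  <div class="${classes.join(" ")}"></div>`;
  });
  return `<div class="grid grid-cols-4 gap-4 p-8">\n${items.join("\n")}\n</div>`;
}

console.log(tailwindArt());
```

Passing a seeded `rand` function makes a given composition reproducible, which is convenient when a favorite needs to be revisited.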

Other Experiments: Generating p5.js Code, Web Design, Cellular Automata and Stories

- SerendipityLM can create generative art using the popular p5.js library. However, due to limited performance, only one example is presented here.

- While it is feasible to generate web designs using HTML/CSS and Tailwind, the results often lack refinement. For truly usable outcomes, the LLM would require fine-tuning on a substantial dataset.

- SerendipityLM's approach extends to more experimental domains, such as generating cellular automata. Optimizing this process would require an additional automated evaluator, which is beyond the scope of the current experiment.

- While generating text with SerendipityLM is both possible and intriguing, UX refinements are necessary to turn it into a delightful experience.
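For the cellular-automaton case, an automated evaluator could be as simple as a cheap heuristic that filters out degenerate rules before a human sees them. The sketch below runs a 1D elementary cellular automaton (Wolfram rule numbering) and scores it with a hypothetical "interestingness" measure: zero if the pattern dies out or fills the row, otherwise the fraction of distinct rows. This scoring rule is an assumption for illustration, not a method from SerendipityLM.

```javascript
// Elementary cellular automaton: each cell's next state depends on itself and
// its two neighbours; bit n of `rule` is the output for neighbourhood n.
function step(cells, rule) {
  const n = cells.length;
  return cells.map((c, i) => {
    const left = cells[(i - 1 + n) % n]; // wrap around at the edges
    const right = cells[(i + 1) % n];
    return (rule >> ((left << 2) | (c << 1) | right)) & 1;
  });
}

// Start from a single live cell in the middle and record every row.
function run(rule, width = 31, steps = 30) {
  let cells = Array.from({ length: width }, (_, i) => (i === width >> 1 ? 1 : 0));
  const rows = [cells];
  for (let t = 0; t < steps; t++) {
    cells = step(cells, rule);
    rows.push(cells);
  }
  return rows;
}

// Hypothetical evaluator: 0 for patterns that die out or fill the row,
// otherwise the fraction of distinct rows (more variety scores higher).
function evaluate(rows) {
  const last = rows[rows.length - 1];
  const alive = last.reduce((a, b) => a + b, 0);
  if (alive === 0 || alive === last.length) return 0;
  return new Set(rows.map((r) => r.join(""))).size / rows.length;
}

// Rank all 256 rules and keep a few candidates for the user to judge.
const ranked = Array.from({ length: 256 }, (_, rule) => ({ rule, score: evaluate(run(rule)) }))
  .sort((a, b) => b.score - a.score);
console.log(ranked.slice(0, 5));
```

The evaluator only prunes obviously dull rules; judging which of the surviving patterns are appealing stays with the user.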

Challenges and Next Steps

SerendipityLM effectively turns the design space search into a playful experience that fosters serendipitous exploration. However, significant challenges still remain:

- The "in-context learning" abilities of LLMs like GPT-4 are fraught with complexities: decision boundaries are often fragmented and highly sensitive to variables such as label names, the order of examples, and quantization.

- More refined controls over user preferences and more sophisticated visualizations of the "thought" behind generated design concepts could be highly beneficial.

- Creating a platform to share generative art pieces and allowing others to use them as starting points would result in a fascinating library of evolving creations.

- Effectively measuring the novelty of generated outputs is challenging with the current approach. A more robust system would need to integrate insights from the field of "Quality-Diversity optimization algorithms". Additionally, establishing an objective measure for "Serendipity" remains a significant open research question.
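One widely used quality-diversity algorithm is MAP-Elites, which keeps the best solution found in each cell of a grid over chosen behavior descriptors, so the archive stays diverse while each niche improves. The sketch below is generic and rests on several assumptions: designs are plain numeric vectors, the descriptor and fitness are toy functions, and mutation is uniform noise. In SerendipityLM's setting the descriptors might be visual features and the fitness might come from user ratings, but that mapping is hypothetical and not part of the project.

```javascript
// Minimal MAP-Elites sketch on a toy problem (all functions are assumptions).
// A design is a vector of 2 numbers in [0, 1].
const BINS = 5; // the archive is a BINS x BINS grid of behavior niches

const fitness = (x) => -((x[0] - 0.3) ** 2 + (x[1] - 0.7) ** 2); // toy objective
const descriptor = (x) => x; // toy behavior descriptor: the vector itself
const clamp = (v) => Math.min(1, Math.max(0, v));
const mutate = (x, rand) => x.map((v) => clamp(v + (rand() - 0.5) * 0.2));

// Map a descriptor in [0, 1]^2 to a grid cell key such as "2,4".
function cellKey(d) {
  return d.map((v) => Math.min(BINS - 1, Math.floor(v * BINS))).join(",");
}

// Insert a design if its cell is empty or it beats the current elite there.
function tryInsert(archive, x) {
  const key = cellKey(descriptor(x));
  const f = fitness(x);
  const elite = archive.get(key);
  if (!elite || f > elite.fitness) archive.set(key, { x, fitness: f });
}

function mapElites(iterations = 2000, rand = Math.random) {
  const archive = new Map();
  for (let i = 0; i < 20; i++) tryInsert(archive, [rand(), rand()]); // random seeds
  for (let i = 0; i < iterations; i++) {
    const elites = [...archive.values()];
    const parent = elites[Math.floor(rand() * elites.length)].x; // uniform parent choice
    tryInsert(archive, mutate(parent, rand));
  }
  return archive;
}

const archive = mapElites();
console.log(`${archive.size} of ${BINS * BINS} niches filled`);
```

Unlike plain optimization, a design survives here if it is the best of its kind, even when it is far from the global optimum, which is one way to make "diverse stepping stones" measurable.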

"All models are wrong, but some are useful" - George E. P. Box

Final Thoughts

As we conclude this experiment in the serendipitous exploration of generative design spaces, it's clear that the journey is as profound as the destination. SerendipityLM represents a modest effort to reimagine how we harness the potential of AI, not just for generating art but also for fostering new ways of interacting, thinking, and perceiving. The challenges in fostering and managing serendipity aren't mere details but crucial opportunities to gain deeper insights into the nature of intelligence and reality.

As AI increasingly integrates with creative tools, it challenges us to envision a future where technology amplifies human capabilities rather than replacing them. As we continue to push the boundaries of Serendipity Engineering, the excitement of uncovering the unknown through playful exploration remains a boundless prospect.