tag > Generative

-

Neuroevolution of Self-Interpretable Agents (Yujin Tang, Duong Nguyen, David Ha - Google Brain, 2020)

Abstract: Inattentional blindness is the psychological phenomenon that causes one to miss things in plain sight. It is a consequence of the selective attention in perception that lets us remain focused on important parts of our world without distraction from irrelevant details. Motivated by selective attention, we study the properties of artificial agents that perceive the world through the lens of a self-attention bottleneck. By constraining access to only a small fraction of the visual input, we show that their policies are directly interpretable in pixel space. We find neuroevolution ideal for training self-attention architectures for vision-based reinforcement learning tasks, allowing us to incorporate modules that can include discrete, non-differentiable operations which are useful for our agent. We argue that self-attention has similar properties as indirect encoding, in the sense that large implicit weight matrices are generated from a small number of key-query parameters, thus enabling our agent to solve challenging vision based tasks with at least 1000x fewer parameters than existing methods. Since our agent attends to only task-critical visual hints, they are able to generalize to environments where task irrelevant elements are modified while conventional methods fail.
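The key-query argument can be made concrete with a small example. This is a minimal pure-Python sketch of a self-attention bottleneck, assuming the patch-voting scheme described in the paper. Flattened image patches are projected to keys and queries, and a row-wise softmax gives an N x N attention matrix. Each column is then summed as that patch's importance, and only the top-k patches are kept. The patch size, stride, projection width `d`, the random weights (standing in for parameters found by neuroevolution) and the helper names are illustrative assumptions, not the authors' code.

```python
import math
import random


def extract_patches(image, size, stride):
    """Split a 2-D grayscale image (list of rows) into flattened square patches."""
    patches, centers = [], []
    for top in range(0, len(image) - size + 1, stride):
        for left in range(0, len(image[0]) - size + 1, stride):
            patches.append([image[top + i][left + j] for i in range(size) for j in range(size)])
            centers.append((top + size // 2, left + size // 2))
    return patches, centers


def matmul(a, b):
    """Plain-Python product of an (n x m) and an (m x p) matrix."""
    return [[sum(x * b[k][j] for k, x in enumerate(row)) for j in range(len(b[0]))] for row in a]


def softmax(row):
    """Numerically stable softmax over one row."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_bottleneck(patches, w_key, w_query, top_k):
    """Rank patches by summed attention ("votes") and keep the top_k indices.

    Hypothetical helper: the only parameters are w_key and w_query (d_in x d each).
    """
    d = len(w_key[0])
    keys = matmul(patches, w_key)                   # N x d
    queries = matmul(patches, w_query)              # N x d
    # Implicit N x N matrix built from the key-query parameters alone.
    scores = matmul(keys, [list(col) for col in zip(*queries)])
    attn = [softmax([s / math.sqrt(d) for s in row]) for row in scores]  # rows sum to 1
    n = len(attn)
    importance = [sum(attn[i][j] for i in range(n)) for j in range(n)]  # column sums
    # Discrete, non-differentiable step: sort and truncate.
    ranked = sorted(range(n), key=lambda j: importance[j], reverse=True)
    return ranked[:top_k], n


# Illustrative setup (assumed sizes): random weights stand in for evolved parameters.
random.seed(0)
size, stride, d = 4, 2, 2
image = [[random.random() for _ in range(32)] for _ in range(32)]
patches, centers = extract_patches(image, size, stride)
d_in = size * size
w_key = [[random.gauss(0.0, 0.1) for _ in range(d)] for _ in range(d_in)]
w_query = [[random.gauss(0.0, 0.1) for _ in range(d)] for _ in range(d_in)]
selected, n = attention_bottleneck(patches, w_key, w_query, top_k=3)
print(n, "patches ->", n * n, "implicit attention weights from", 2 * d_in * d, "parameters")
print("attended patch centers:", [centers[i] for i in selected])
```

With these assumed sizes, 64 key-query parameters define 50,625 attention weights. The sort-and-truncate step has no useful gradient. That makes it the kind of operation the abstract says neuroevolution can accommodate.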

-

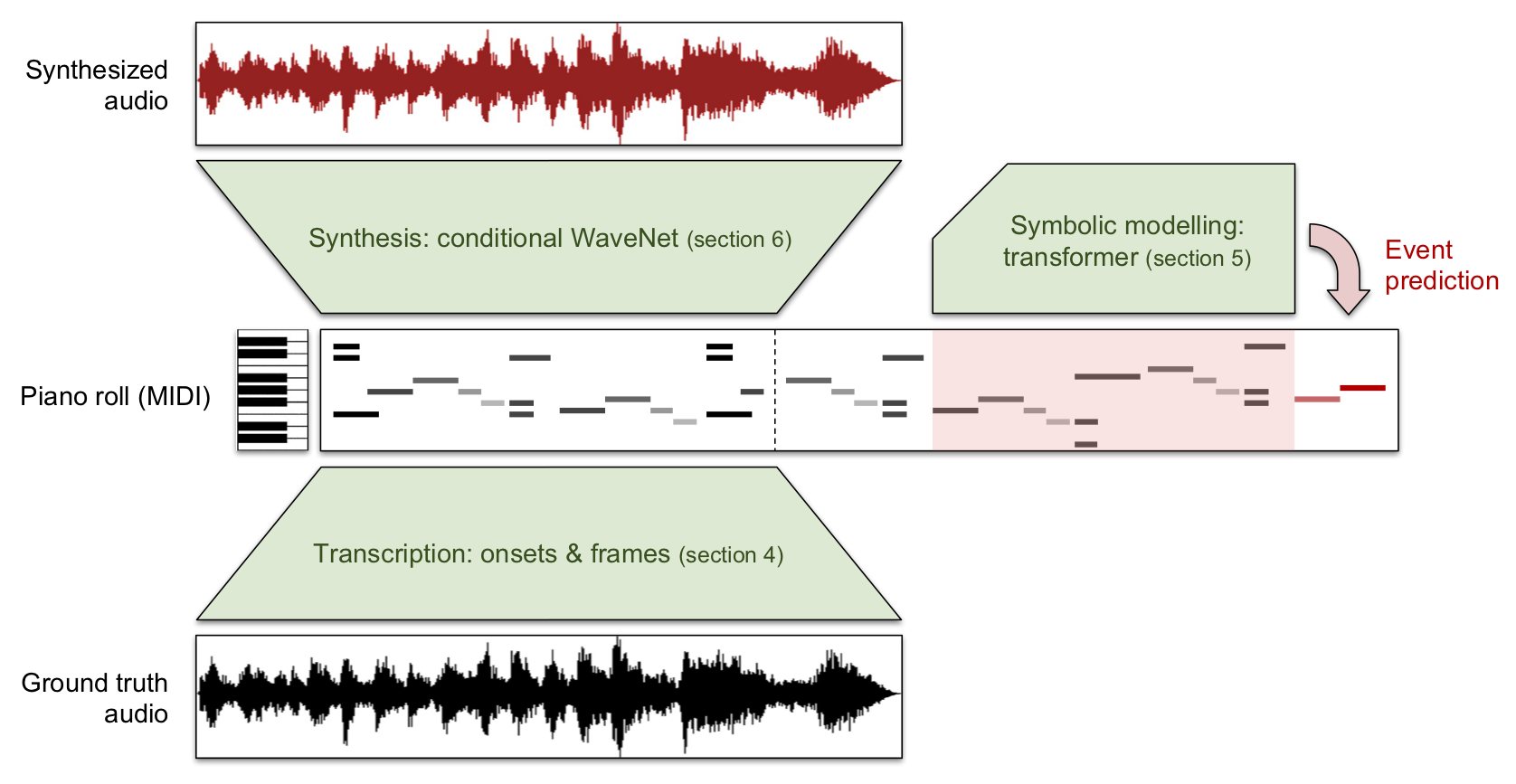

Generating music in the waveform domain - A comprehensive overview of the field

In November last year, I co-presented a tutorial at ISMIR 2019 with Jordi Pons and Jongpil Lee on waveform-based music processing with deep learning. Jongpil and Jordi covered music classification and source separation respectively, and I presented the last part of the tutorial, on music generation in the waveform domain.

-

NeRF - Representing Scenes as Neural Radiance Fields for View Synthesis

We present a method that achieves state-of-the-art results for synthesizing novel views of complex scenes by optimizing an underlying continuous volumetric scene function using a sparse set of input views. We describe how to effectively optimize neural radiance fields to render photorealistic novel views of scenes with complicated geometry and appearance.
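The rendering half of NeRF can be sketched in a few lines. This assumes the discrete volume-rendering rule from the NeRF paper. A ray is sampled at points t_i, and the scene function returns a density sigma_i and a color c_i at each point. The pixel color is C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i. Here T_i = exp(-sum_{j<i} sigma_j * delta_j) is the fraction of light surviving to sample i. The `radiance_field` function is a hypothetical hand-coded sphere standing in for the trained network. Midpoint sampling replaces the paper's stratified and hierarchical sampling to keep the example deterministic.

```python
import math


def radiance_field(point):
    """Hypothetical stand-in for NeRF's network: a solid red sphere of radius 1 at the origin.

    Returns (density, (r, g, b)). A real NeRF also conditions color on view direction.
    """
    x, y, z = point
    inside = x * x + y * y + z * z <= 1.0
    return (50.0 if inside else 0.0), (1.0, 0.0, 0.0)


def render_ray(origin, direction, near, far, n_samples, field=radiance_field):
    """Composite one pixel color with the discrete volume-rendering sum."""
    step = (far - near) / n_samples          # delta_i, constant here
    transmittance = 1.0                      # T_i: light not yet absorbed
    color = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * step          # midpoint of each bin (assumption)
        point = tuple(o + t * d for o, d in zip(origin, direction))
        sigma, rgb = field(point)
        alpha = 1.0 - math.exp(-sigma * step)  # opacity of this segment
        weight = transmittance * alpha
        color = [c + weight * ch for c, ch in zip(color, rgb)]
        transmittance *= 1.0 - alpha         # running product equals exp(-sum sigma_j * delta_j)
    return color, transmittance


# One ray through the sphere, one ray that misses it.
hit, t_hit = render_ray((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), near=0.0, far=6.0, n_samples=128)
miss, t_miss = render_ray((0.0, 2.0, -3.0), (0.0, 0.0, 1.0), near=0.0, far=6.0, n_samples=128)
print("hit:", hit, "miss:", miss)
```

The compositing weights are smooth functions of sigma and c. So the rendered pixel is differentiable with respect to the network's outputs. This is what allows the scene function to be optimized directly against the input views.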

-

Saddy Waddy - The Private Sector

-

"Forcing ideas through a small aperture can give them any shape, like pasta."

- Ted Nelson (1990) -

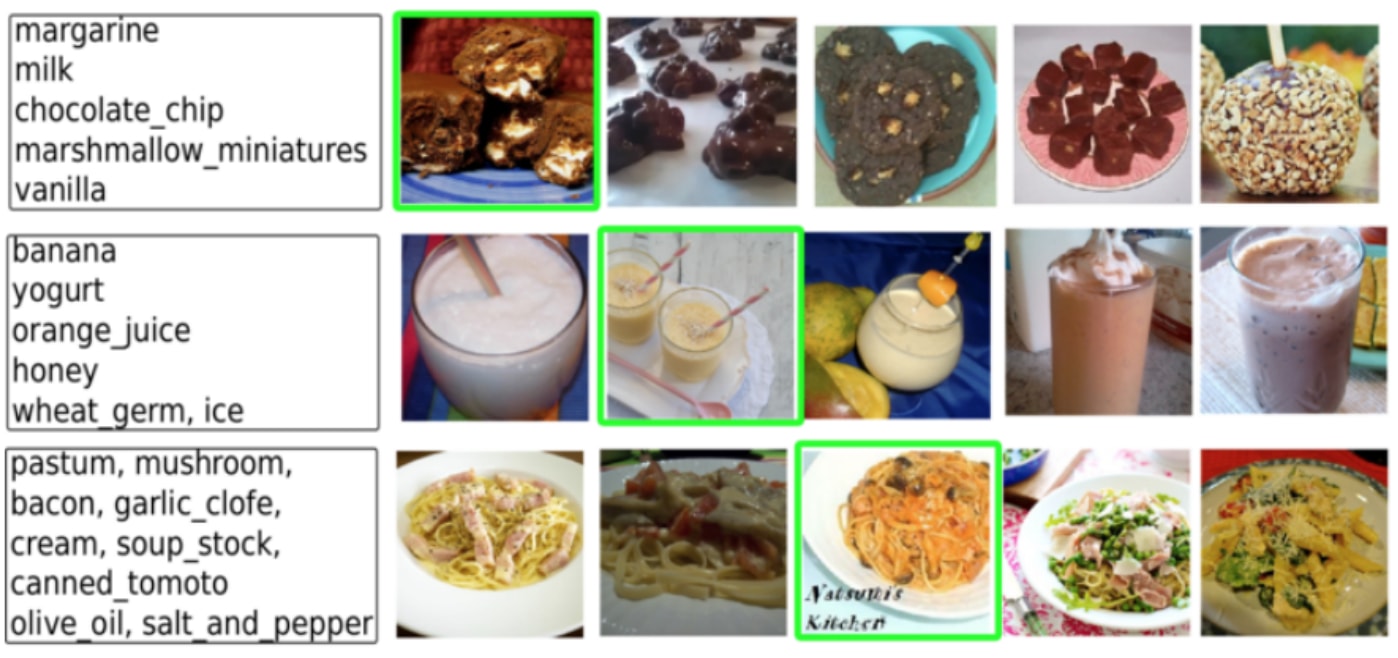

CookGAN Generates Realistic Meal Images From an Ingredients List (paper)

CookGAN uses an attention-based ingredients-image association model to condition a generative neural network tasked with synthesizing meal images. The framework enables the model to generate realistic meal images corresponding to an ingredients list alone.

-

Recent Machine Learning Papers with Videos (2020)

Image2StyleGAN++: How to Edit the Embedded Images? (CVPR 2020)

D3S - A Discriminative Single Shot Segmentation Tracker (CVPR 2020)

Zooming Slow-Mo: Fast and Accurate One-Stage Space-Time Video Super-Resolution (CVPR 2020) (code)

IGNOR: Image-guided Neural Object Rendering (ICLR 2020)

-

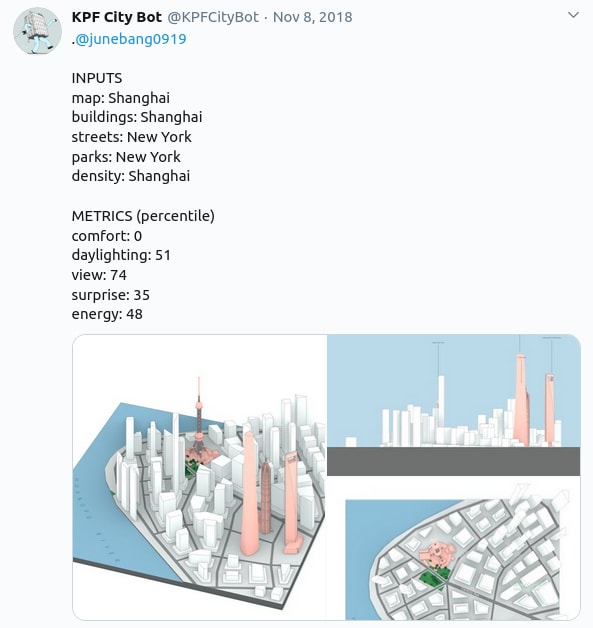

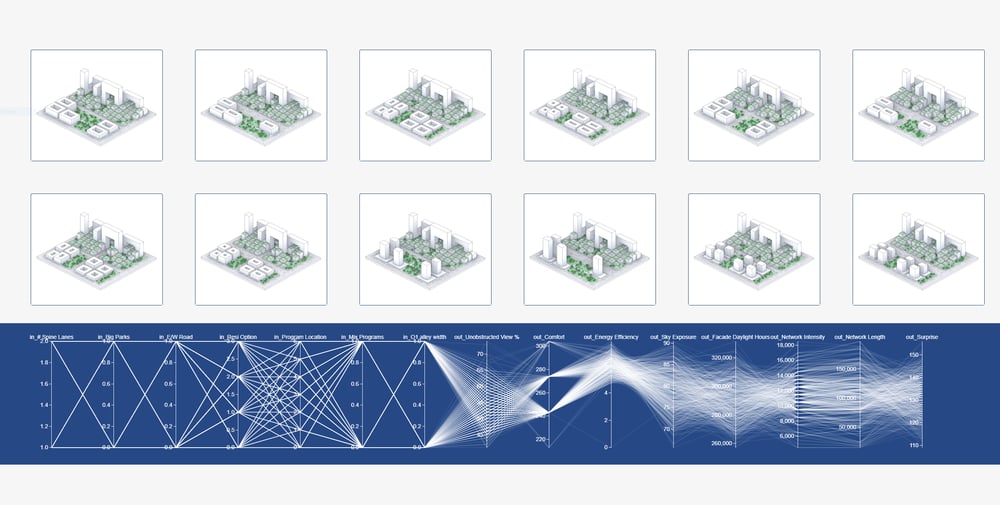

"Our Twitter bot @KPFCityBot uses social media to engage people with urban design and planning concepts. This tool is both educational and enjoyable for users, a method for gamifying the design and feedback process. Users tweet at City Bot with a certain set of parameters – preferences for density, street grid type (ex. New York vs. London?) and amount of open space – and our listener runs an analysis. An image of that city is produced and then Tweeted back at the user, along with a series of performance metrics. "

-

Conversation with Victor Riparbelli - CEO of Synthesia, a player in the synthetic media space

#Comment: Shallow bullshit aimed at gluing people even more tightly to their little glowing rectangles, further accelerating late-capitalist hyperconsumerism. See my talk on the topic here.

-

The Smart(er) City - Computational Urban Design and Analysis

-

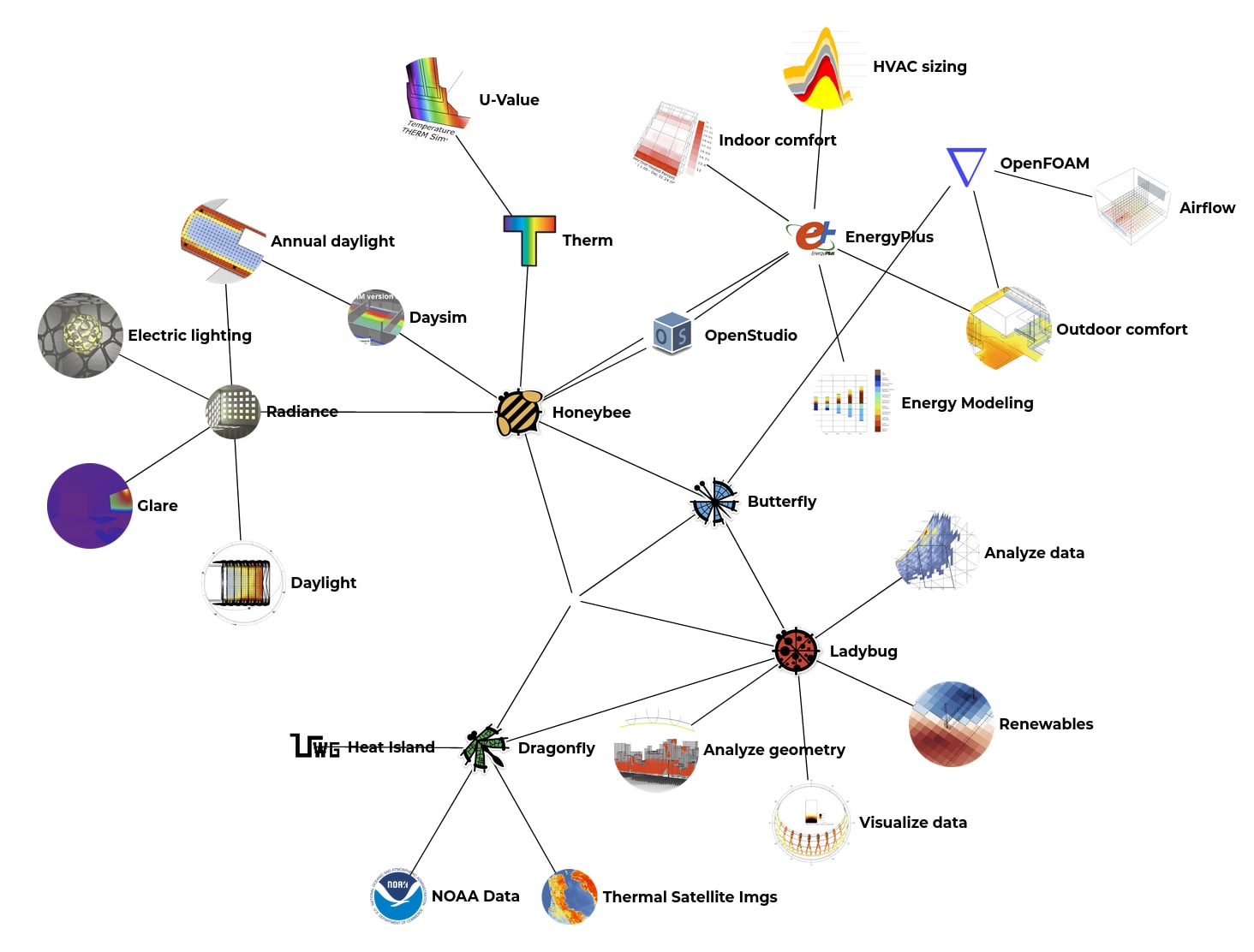

Ladybug Tools - Making environmental design knowledge and tools freely accessible to every person, project and design process.

-

Design Tech - Digital technology is on the brink of changing how we design cities

Connected Places Catapult, together with Dark Matter Labs, Open Systems Lab and the Centre for Spatial Technologies, undertook a three-month collaborative research study reviewing 76 companies across AEC (architecture, engineering and construction) at the cutting edge of this change, looking at how these companies are innovating in and revolutionising design processes for the built environment.

-

The Difference Between Computational Design vs. Generative Design vs. Parametricism

The Future of BIM Is NOT BIM And It's Coming Faster Than You Think - The Sequel

Is GENERATIVE DESIGN the future of architecture?

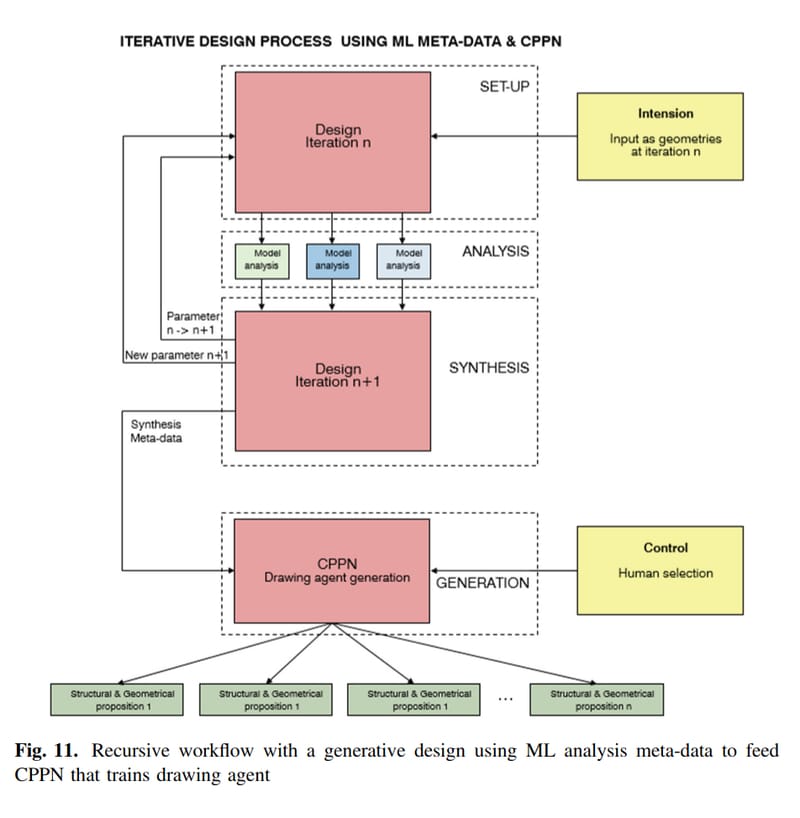

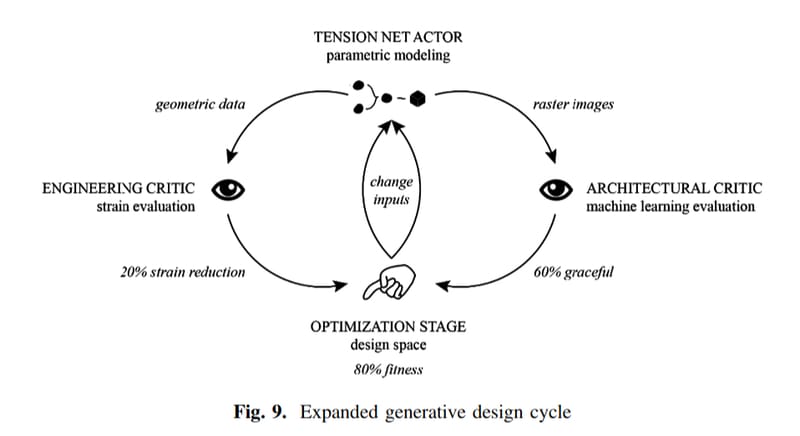

My MASTERTHESIS on Generative Design

Danish AM Hub Talks: Bastian Schaefer (Airbus) and David Benjamin (Autodesk) (2019)

-

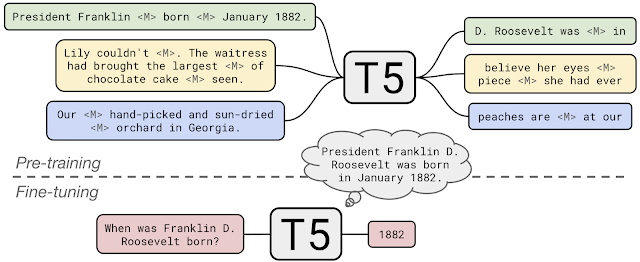

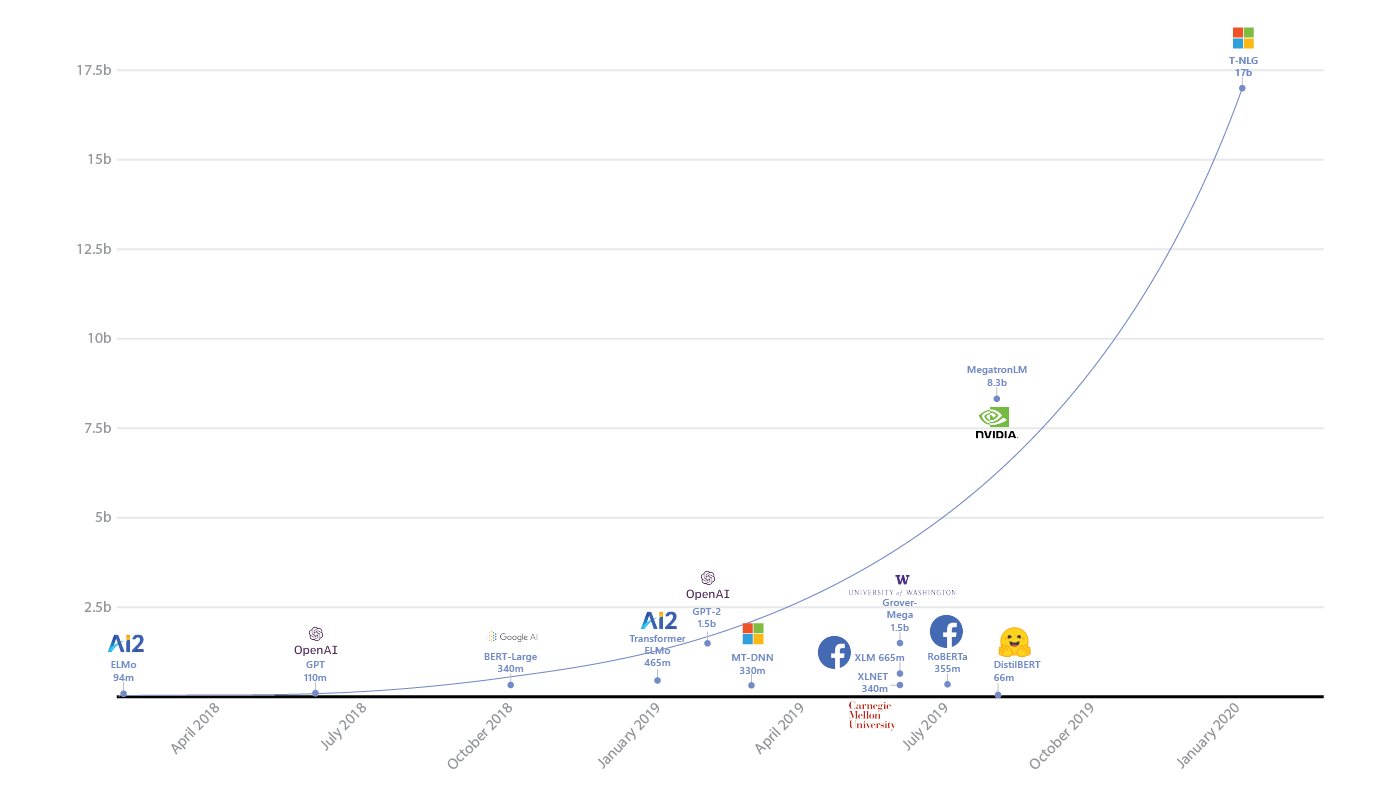

Turing-NLG: A 17-billion-parameter language model by Microsoft

"Turing Natural Language Generation (T-NLG) is a 17 billion parameter language model by Microsoft that outperforms the state of the art on many downstream NLP tasks. We present a demo of the model, including its freeform generation, question answering, and summarization capabilities, to academics for feedback and research purposes. <|endoftext|>" – This summary was generated by the Turing-NLG language model itself.