Are we in the right place at the wrong time, as Dr. John said, or in the wrong place at the right time?

-

AI Music in 2025 – Notes on a Stalled Revolution

It’s been over a year since we wound down our generative music startup okio. Since then the space has “evolved” in ways both predictable and boring.

We now have 50+ high-quality video gen models, yet music still has just a handful (suno, udio, stability, 11labs…) - all closed source, all sounding somewhere between bland and absolute dog poo (unless you burn weeks on trial-and-error hacks).

The music industry’s lawyer army has strangled innovation, while many musicians have turned violently anti-AI. UX progress has stalled too: most tools haven’t evolved in a year, and others now mimic a shitty DAW in the browser.

Some sell “ethically sourced data” (usually half-true), but it’s pointless when the outputs still sound like dog poo. Google showed off real-time gen, but in mega-corp fashion it’ll never become a real product: they’ll ship a muzak machine for video slop at scale.

The only glimmer: Chinese dark horses might drop open-source, uncensored models trained on everything. They’ll sound great, and they’ll get demonized in the West.

Shame, because the promise of generative music has never been bigger.

Music is humanity’s most direct technology for emotion. And yet AI music has been reduced to slop and jingles. It’s a tragedy of imagination: the space where innovation should be loudest is dead quiet.

My bet is that the real breakthroughs won’t come from the lawyer-choked West or from UX gimmicks. They’ll come from the underground, from open source, and from global scenes, just like every real musical revolution before. Onwards!

-

Rest in peace, Jay Haze. Grateful for the funky times we shared and all I learned along the way. Much love, old friend.

We spent intense times together in the studio and on the road, and made lots of music back in the early 2000s.

-

New MusicForComputers release: Machine Dub – by Count Slobo.

11 min of AI-generated roots dub. Think King Tubby’s ghost routed through neural nets. A synthetic prayer in bass + echo, crafted by the Machine, for the Machine. Play it loud and let Jah signal ride the wires!

-

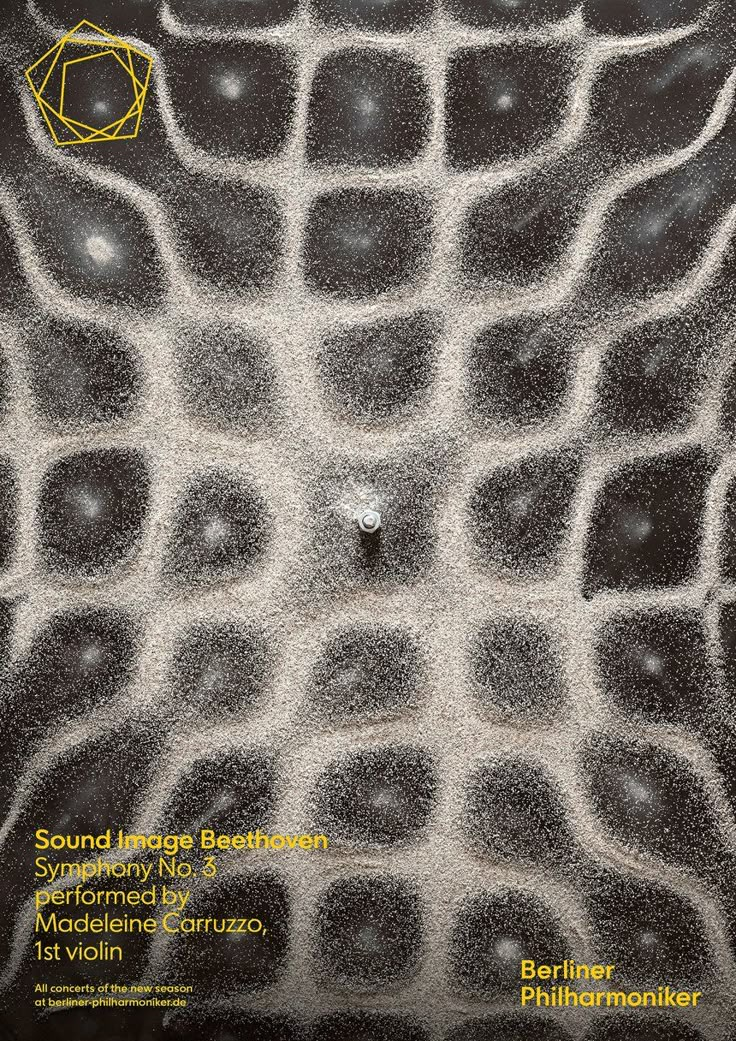

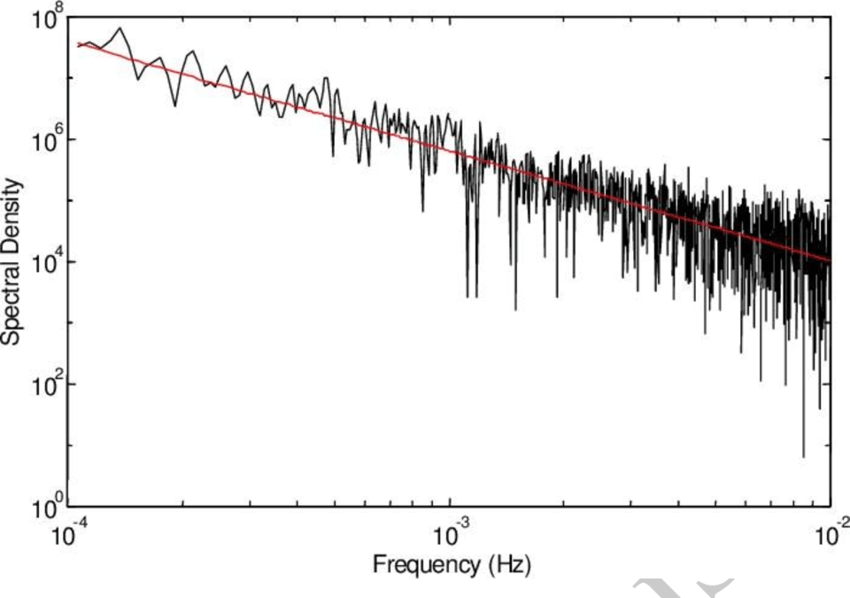

Pink Noise - The "1/f" structure of spectral brain dynamics

1/f noise, also known as flicker noise or pink noise, is a type of noise whose power spectral density is inversely proportional to frequency, S(f) ∝ 1/f. This means the noise power per unit bandwidth decreases as frequency increases, so every octave carries the same total power. 1/f noise is observed in various fields, including electronics, music, biology, and economics.
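To make the definition concrete, here is a minimal Python sketch that synthesizes pink noise by spectral shaping: it sums sinusoids at each DFT frequency k with amplitude 1/√k and a random phase, so the power landing in bin k is proportional to 1/k. The function names `pink_noise` and `bin_power` and the sum-of-sinusoids approach are illustrative choices of ours, not a standard API; practical generators usually shape white noise with an FFT or use the Voss-McCartney algorithm instead.

```python
import cmath
import math
import random


def pink_noise(n_samples: int, seed: int = 0) -> list[float]:
    """Synthesize pink noise as a sum of DFT-bin sinusoids (illustrative sketch).

    Bin k (1 <= k < n_samples // 2) gets amplitude 1/sqrt(k) and a random
    phase, so its power is proportional to 1/k (the 1/f law). The DC and
    Nyquist bins are left empty.
    """
    rng = random.Random(seed)
    bins = range(1, n_samples // 2)
    amps = [1.0 / math.sqrt(k) for k in bins]
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in bins]
    signal = []
    for n in range(n_samples):
        t = 2.0 * math.pi * n / n_samples  # phase advance of bin 1 at sample n
        signal.append(sum(a * math.cos(k * t + p)
                          for k, a, p in zip(bins, amps, phases)))
    return signal


def bin_power(signal: list[float], k: int) -> float:
    """Normalized power |X[k]|^2 / N^2 of DFT bin k, via a direct sum."""
    n = len(signal)
    acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
              for i, x in enumerate(signal))
    return abs(acc) ** 2 / n**2


if __name__ == "__main__":
    sig = pink_noise(1024, seed=1)
    for k in (1, 4, 16, 64, 256):
        # Power falls as 1/k, so power * k stays constant (0.25 here).
        print(k, round(bin_power(sig, k) * k, 6))
```

Because each sinusoid sits exactly on a DFT bin, `bin_power(sig, k)` comes out as 1/(4k) up to rounding, so multiplying by k gives the same constant for every bin: halving the frequency doubles the power.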

James Keeler and Doyne Farmer demonstrated that a system of coupled logistic maps could produce fluctuations with a 1/f power spectrum. They showed that this occurred because the system continually tunes itself to stay near a critical point, a property later dubbed self-organized criticality by Per Bak. - Source
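For readers who want to experiment, the sketch below iterates a ring of diffusively coupled logistic maps (a common "coupled map lattice" construction) and records the lattice average as a time series. It is an illustration only: the nearest-neighbour coupling form, the parameters `r=3.9` and `eps=0.3`, and the function names are our assumptions, not Keeler and Farmer's exact model, and we make no claim that these settings yield a 1/f spectrum.

```python
import random


def logistic(x: float, r: float) -> float:
    """One step of the logistic map x -> r * x * (1 - x)."""
    return r * x * (1.0 - x)


def coupled_logistic_lattice(n_sites: int, n_steps: int, r: float = 3.9,
                             eps: float = 0.3, seed: int = 0) -> list[float]:
    """Iterate a ring of diffusively coupled logistic maps (hypothetical setup).

    Each site mixes its own mapped value with its two neighbours':
        x_i' = (1 - eps) * f(x_i) + (eps / 2) * (f(x_{i-1}) + f(x_{i+1}))
    with periodic boundaries. Returns the lattice average after every step.
    """
    rng = random.Random(seed)
    state = [rng.random() for _ in range(n_sites)]
    series = []
    for _ in range(n_steps):
        mapped = [logistic(x, r) for x in state]
        # mapped[i - 1] wraps to the last site when i == 0 (periodic ring).
        state = [
            (1.0 - eps) * mapped[i]
            + 0.5 * eps * (mapped[i - 1] + mapped[(i + 1) % n_sites])
            for i in range(n_sites)
        ]
        series.append(sum(state) / n_sites)
    return series


if __name__ == "__main__":
    series = coupled_logistic_lattice(n_sites=64, n_steps=1000, seed=2)
    print(len(series), min(series), max(series))
```

For r ≤ 4 and 0 ≤ eps ≤ 1 every update is a weighted average of logistic-map outputs in [0, 1], so the lattice never leaves that interval. Testing the 1/f claim would mean estimating the power spectrum of the returned series and fitting a slope on a log-log plot; whether it lands near -1 depends strongly on the map, the coupling, and the parameters.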