-

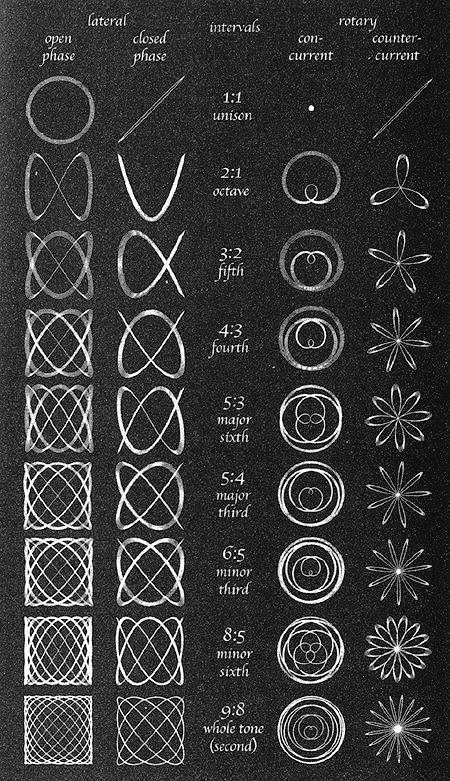

Visualizing musical intervals as geometric patterns highlights the deep link between mathematics, geometry, and music theory.
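One way to make the link concrete is to treat an interval as a frequency ratio p:q. The two tones then trace a closed Lissajous figure x = sin(p·t), y = sin(q·t), and simpler ratios give simpler shapes. The sketch below assumes just-intonation ratios and 12-tone equal temperament (2^(n/12)). The interval table and the helpers `cents` and `lissajous` are illustrative choices, not taken from the original post. Because equal-tempered ratios are irrational, their figures never close exactly.

```python
import math
from fractions import Fraction

# Illustrative just-intonation ratios and their size in equal-tempered semitones
JUST_INTERVALS = {
    "octave": (Fraction(2, 1), 12),
    "perfect fifth": (Fraction(3, 2), 7),
    "perfect fourth": (Fraction(4, 3), 5),
    "major third": (Fraction(5, 4), 4),
}


def cents(ratio: float) -> float:
    """Size of a frequency ratio in cents (1200 cents per octave)."""
    return 1200 * math.log2(ratio)


def lissajous(p: int, q: int, n: int = 400):
    """Sample the closed curve x = sin(p t), y = sin(q t) over one period."""
    return [
        (math.sin(p * t), math.sin(q * t))
        for t in (2 * math.pi * k / n for k in range(n))
    ]


for name, (ratio, semitones) in JUST_INTERVALS.items():
    tempered = 2 ** (semitones / 12)
    # How far the equal-tempered interval drifts from the just ratio
    deviation = cents(tempered) - cents(float(ratio))
    points = lissajous(ratio.numerator, ratio.denominator)
    print(f"{name:15s} {ratio}  ET deviation {deviation:+.2f} cents, {len(points)} curve points")
```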

-

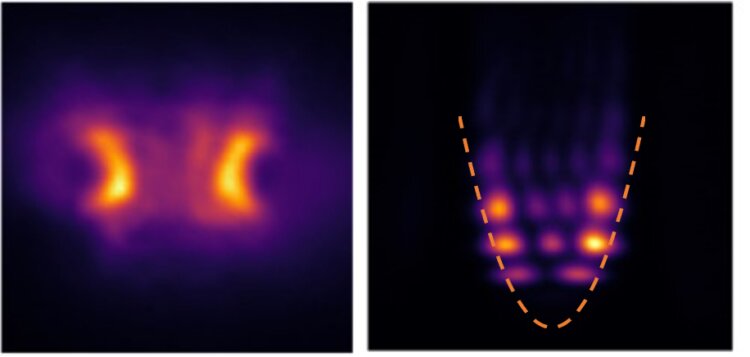

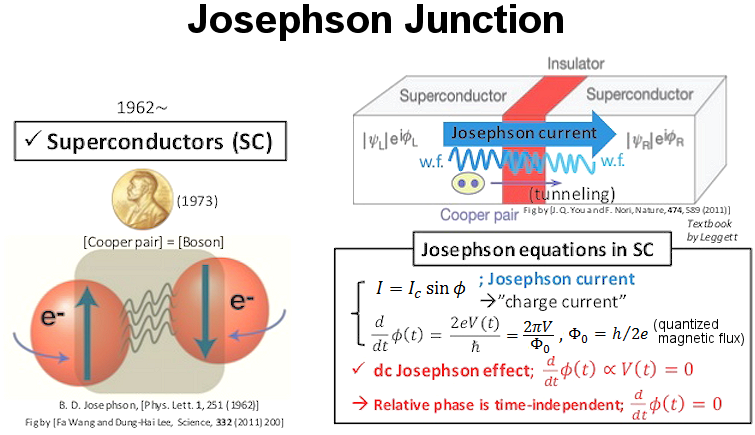

In its DC form, the Josephson effect is the flow of a supercurrent between two superconductors separated by a thin insulating barrier (a Josephson junction) with no voltage applied. It is an example of a macroscopic quantum phenomenon.
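The effect can be quantified with the two standard Josephson relations. The DC relation, I = I_c sin(φ), gives a supercurrent set by the phase difference φ across the junction with zero voltage. The AC relation, dφ/dt = 2eV/ħ, applies once a DC voltage V is applied: the current then oscillates at f = 2eV/h. A minimal sketch follows. The critical current I_c is an arbitrary illustrative value, and e and h are the exact SI defining constants.

```python
import math

# Exact SI defining constants (2019 redefinition)
ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs
PLANCK = 6.62607015e-34  # joule-seconds


def dc_supercurrent(phase: float, critical_current: float) -> float:
    """DC Josephson relation: I = I_c * sin(phi), with no voltage across the junction."""
    return critical_current * math.sin(phase)


def josephson_frequency(voltage: float) -> float:
    """AC Josephson relation: a DC voltage V makes the current oscillate at f = 2eV/h."""
    return 2 * ELEMENTARY_CHARGE * voltage / PLANCK


# The zero-voltage supercurrent is largest at phi = pi/2 (I_c chosen arbitrarily)
print(dc_supercurrent(math.pi / 2, critical_current=1e-6))
# A 1 microvolt bias gives an oscillation of roughly 483.6 MHz
print(f"{josephson_frequency(1e-6) / 1e6:.1f} MHz")
```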

-

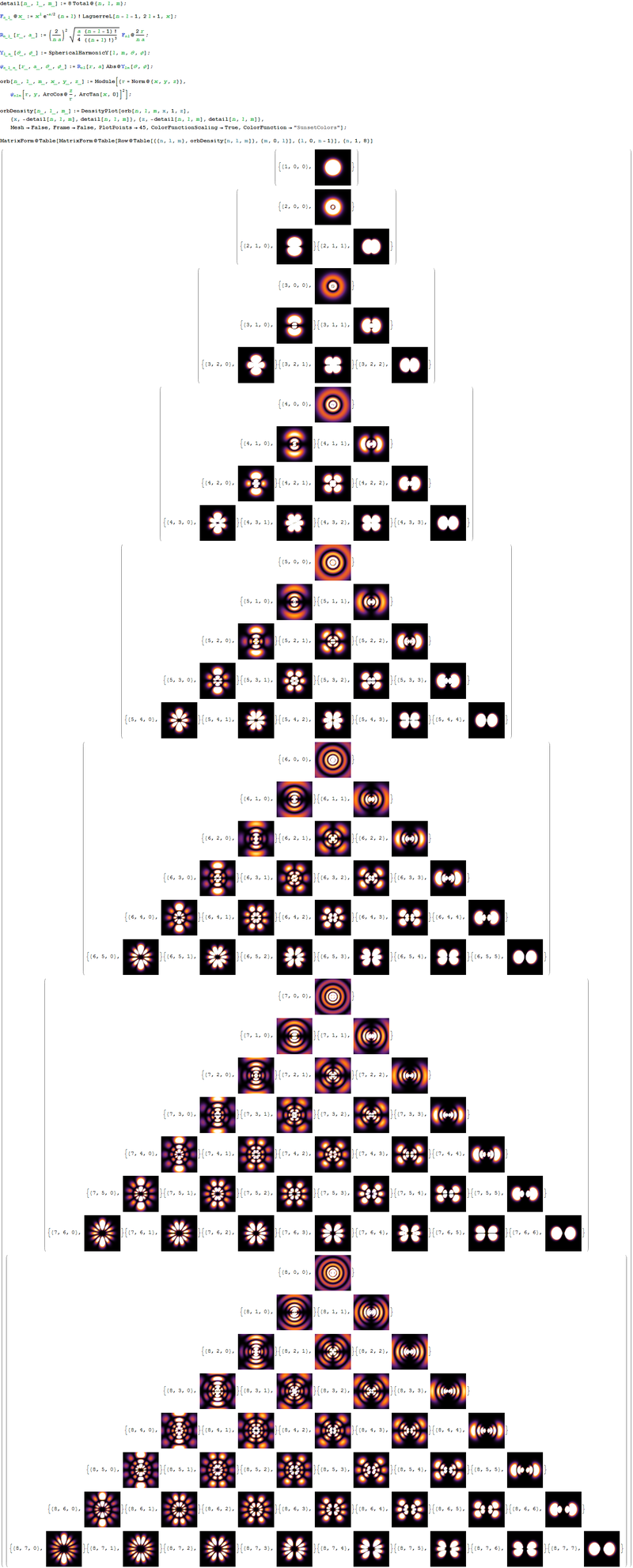

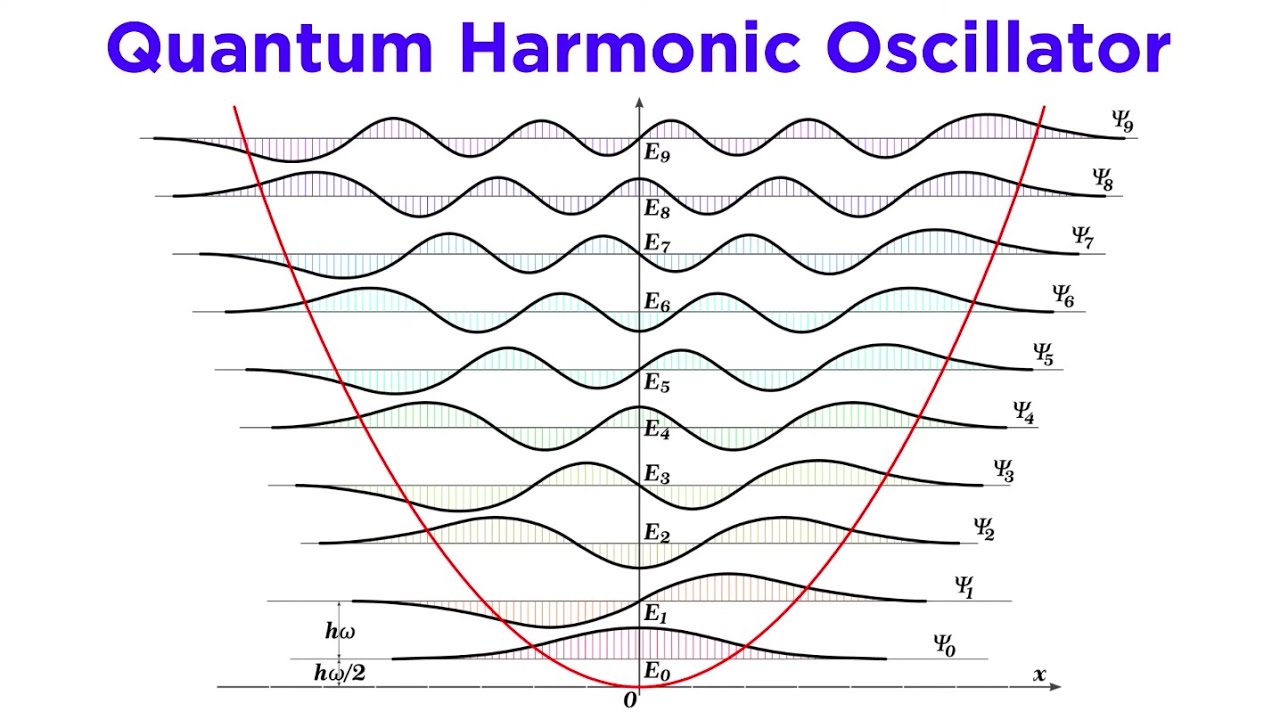

In their interactions with photons, atoms behave like quantum oscillators with resonant absorption spectra and frequency responses. They couple only weakly to off-resonant light but absorb strongly near their characteristic transition energies.
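A common model for the response near a single transition is a Lorentzian line shape. Absorption peaks at the resonance frequency ω₀ and falls off with detuning on a scale set by the linewidth γ (full width at half maximum). The sketch below assumes one homogeneously broadened transition, and the numbers are arbitrary units rather than data for a real atom.

```python
def lorentzian(omega: float, omega0: float, gamma: float) -> float:
    """Normalized absorption (peak = 1) of a transition at omega0 with FWHM gamma."""
    half_width = gamma / 2
    return half_width**2 / ((omega - omega0) ** 2 + half_width**2)


omega0 = 1.0   # transition frequency (arbitrary units, assumed)
gamma = 0.01   # linewidth, FWHM (same units, assumed)

# On resonance, at half width, and progressively farther off resonance
for detuning in (0.0, gamma / 2, 5 * gamma, 50 * gamma):
    strength = lorentzian(omega0 + detuning, omega0, gamma)
    print(f"detuning {detuning:6.3f}: relative absorption {strength:.5f}")
```

Absorption drops to one half at a detuning of γ/2. Beyond that it falls roughly as the inverse square of the detuning, which is the weak off-resonance coupling described above.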

-

The psycho-spiritual rot of letting the military-financial-tech-mindcontrol complex infiltrate every facet of Western society is hard to measure—but it’s vast. The architects don’t see it yet, but this entire system is a train wreck of epic proportions. Godspeed!

-

"The best possible knowledge of a whole does not necessarily include the best possible knowledge of all its parts, even though they may be entirely separate and therefore virtually capable of being ‘best possibly known’, i.e., of possessing, each of them, a representative of its own. The lack of knowledge is by no means due to the interaction being insufficiently known – at least not in the way that it could possibly be known more completely – it is due to the interaction itself." - Erwin Schrödinger.
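In modern terms this describes entanglement. A pure joint state represents maximal knowledge of the whole, yet each part on its own can be in a mixed state of incomplete knowledge. Below is a minimal pure-Python sketch using the Bell state (|00⟩ + |11⟩)/√2. The helper names are illustrative, and the 2x2 entropy formula applies only to single-qubit density matrices.

```python
import math

# Bell state (|00> + |11>)/sqrt(2) in the basis |00>, |01>, |10>, |11>
psi = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]


def reduced_density_matrix_a(state):
    """Partial trace over qubit B: rho_A[i][j] = sum_k psi[i,k] * conj(psi[j,k])."""
    return [
        [sum(state[2 * i + k] * state[2 * j + k].conjugate() for k in range(2))
         for j in range(2)]
        for i in range(2)
    ]


def entropy_bits_2x2(rho):
    """Von Neumann entropy (in bits) of a 2x2 density matrix, from its eigenvalues."""
    trace = (rho[0][0] + rho[1][1]).real
    det = (rho[0][0] * rho[1][1] - rho[0][1] * rho[1][0]).real
    disc = math.sqrt(max(trace**2 - 4 * det, 0.0))
    eigenvalues = [(trace + disc) / 2, (trace - disc) / 2]
    return -sum(p * math.log2(p) for p in eigenvalues if p > 1e-12)


norm = sum(abs(a) ** 2 for a in psi)  # the whole is a single normalized pure state
rho_a = reduced_density_matrix_a(psi)
purity_a = sum(rho_a[i][j] * rho_a[j][i] for i in range(2) for j in range(2))
print("norm of joint state:", round(norm, 12))
print("purity of part A:", round(purity_a, 12))  # 0.5, i.e. maximally mixed
print("entropy of part A (bits):", round(entropy_bits_2x2(rho_a), 12))
```

The joint state leaves nothing undetermined, yet qubit A alone carries a full bit of uncertainty. For a product state such as |00⟩, the same calculation gives zero entropy for each part.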

-

Alchemical times: ALICE detects the conversion of lead into gold at the LHC

"Near-miss collisions between high-energy lead nuclei at the LHC generate intense electromagnetic fields that can knock out protons and transform lead into fleeting quantities of gold nuclei"
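The nuclear bookkeeping is simple. Lead has atomic number 82 and gold has 79, so a lead nucleus becomes gold once three protons are knocked out; losing neutrons changes only the isotope. The toy lookup below lists the elements reached as protons are removed. The table and function name are illustrative.

```python
# Atomic numbers of lead and the elements reached by removing protons
ELEMENTS = {82: "lead (Pb)", 81: "thallium (Tl)", 80: "mercury (Hg)", 79: "gold (Au)"}
LEAD_Z = 82


def element_after_proton_loss(protons_removed: int) -> str:
    """Element left after a lead nucleus loses the given number of protons."""
    return ELEMENTS[LEAD_Z - protons_removed]


for n in range(4):
    print(n, "protons removed ->", element_after_proton_loss(n))
```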

-

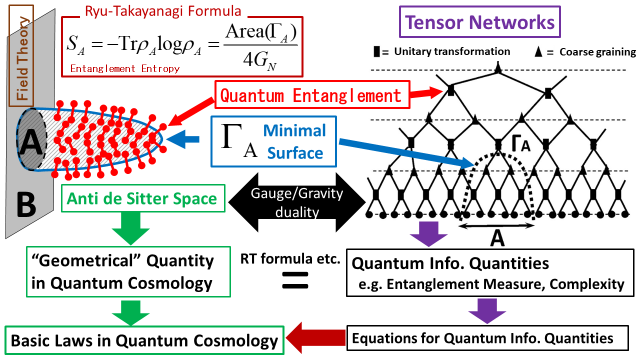

Self-Referential Recurrent Inductive Bias

Self-referential recurrent inductive bias is the computational echo of Gödelian self-reference in learning systems: it creates a paradox where the learner must assume how to learn before it can learn what to assume—yet this very loop is the engine of open-ended intelligence.

The only way out is through: the system’s ‘truth’ is its ability to sustainably refine itself without collapsing into triviality or divergence.

Grounding:

Gödel/Turing Resonance: Like Gödel’s incompleteness theorems or Turing’s halting problem, self-referential learning systems cannot "prove" their own optimality from within their own frame. The bias must exist a priori to bootstrap, yet its validity can only be judged a posteriori.

Fixed-Point Dynamics: The bias update rule $B_{t+1} = f(B_t, D)$ is a recurrence relation seeking a "fixed point" where the bias optimally explains its own generation process (analogous to hypergradient descent in meta-learning).

Dangerous Universality: A self-referential learner with unlimited compute could, in theory, converge to Solomonoff induction (the ultimate bias for prediction), but in practice, it risks catastrophic self-deception (e.g., hallucinated meta-priors).
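The fixed-point view of the bias update $B_{t+1} = f(B_t, D)$ can be illustrated with a toy recurrence. The sketch below uses a hypothetical contraction, `toy_update`, that pulls B halfway toward a data-dependent target. Repeated application therefore converges to a unique fixed point, as guaranteed by the Banach fixed-point theorem. Real bias updates carry no such guarantee, which is where divergence and cycling come in. The names `toy_update` and `iterate_to_fixed_point` are illustrative only.

```python
from typing import Callable, Tuple


def iterate_to_fixed_point(
    f: Callable[[float, float], float],
    b0: float,
    data: float,
    tol: float = 1e-10,
    max_iter: int = 10_000,
) -> Tuple[float, int]:
    """Apply B_{t+1} = f(B_t, D) until successive values agree within tol."""
    b = b0
    for t in range(1, max_iter + 1):
        b_next = f(b, data)
        if abs(b_next - b) < tol:
            return b_next, t
        b = b_next
    raise RuntimeError("no fixed point reached (the update may diverge or cycle)")


def toy_update(b: float, data: float) -> float:
    """Hypothetical contraction: move halfway from b toward a target set by the data."""
    target = data / 2
    return b + 0.5 * (target - b)


b_star, steps = iterate_to_fixed_point(toy_update, b0=10.0, data=3.0)
print(f"fixed point B* = {b_star:.6f} after {steps} steps")
```

Replacing `toy_update` with an expanding map such as `2 * b + 1` makes the iteration run away and hit the `RuntimeError`. This is the "divergence" failure mode in miniature.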

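Recursive Bias Adaptation (Python sketch)

```python
def self_referential_learner(initial_bias_B, data_generator, train_model,
                             evaluate, T, learning_rate=0.01, eps=1e-4):
    """Recursively adapt a scalar bias B using the loss it produces.

    The caller supplies data_generator(B) -> D, train_model(B, D) -> model
    and evaluate(model, D) -> float.
    """
    def loss_at(B, D):
        # Train a model under bias B on data D, then score it
        model = train_model(B, D)
        return evaluate(model, D)

    B = initial_bias_B
    for _ in range(T):
        # Generate data under the current bias (e.g., B influences sampling)
        D = data_generator(B)
        # Self-referential step: gradient of the loss w.r.t. B.
        # An exact hypergradient requires unrolling (differentiating through)
        # the training process; a central finite difference approximates it here.
        grad_B = (loss_at(B + eps, D) - loss_at(B - eps, D)) / (2 * eps)
        # Update the bias recursively
        B = B - learning_rate * grad_B
    return B
```

Reference: Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks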

-

Prediction: Analog computing is coming back with a vengeance.

-

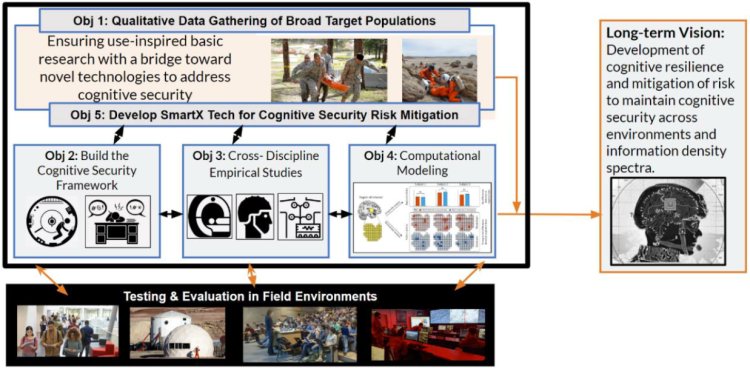

Robert Prevost, aka Pope Leo XIV, went viral immediately after his election for his rant on the state of the information environment and the population's cognitive resilience.

"We really are living in a cognitive wild west. Most people have near-zero memetic defenses or cognitive security suited for the online age." He adds: "Any semblance of it is easily brute-forced by the onslaught of information & the situation is even worse when it comes to AI agent-orchestrated psyops."

-

Samim's Law of Predictions

Hofstadter's Law: It always takes longer than you expect, even when you take into account Hofstadter's Law.

Samim's Law: Events always unfold differently than predicted, even when you take into account Samim's Law, because expectation and outcome are locked in a recursive loop.