tag > Emotion

-

"How to shift language from me to others" - Effective Communication Tips - by Robin Dreeke

1. Seek their thoughts and opinions rather than giving them yours.

2. Talk in terms of what's important to them and their priorities, instead of yours.

3. Validate them without judging them. Have nonjudgmental curiosity about who they are.

4. Empower them with choices.

"You can't achieve anything in life alone. It requires good healthy strong relationships. And those relationships are forged on trust. And trust is forged from great interpersonal communication skills. Where you are talking in terms of the other person, you're being fully transparent, honest and trustworthy." - Robin Dreeke

Ten Techniques for Quickly Building Trust With Anyone - by Robin Dreeke

1. Establishing Artificial Time Constraints

2. Accommodating Nonverbals

3. Slower Rate of Speech

4. Sympathy or Assistance Theme

5. Ego Suspension

6. Validate Others (techniques: Listening, Thoughtfulness, Validate Thoughts & Opinions.)

7. Ask … How? When? Why?

8. Connect with Quid Pro Quo

9. Gift Giving

10. Manage Expectations

6 Steps for Predicting People's Behavior - by Robin Dreeke

1. Vesting: Does this person believe they will benefit from your success?

2. Longevity: Does this person think they will have a long relationship with you?

3. Reliability: Can this person do what they say they will? And will they?

4. Actions: Does this person consistently demonstrate patterns of positive behavior?

5. Language: Does this person know how to communicate in a positive way?

6. Stability: Does this person consistently demonstrate emotional maturity, self-awareness, and social skills?

-

Intel is using A.I. to build smell-o-vision chips (Intel, 2020)

With machine learning, Loihi can recognize hazardous chemicals “in the presence of significant noise and occlusion,” Intel said, suggesting the chip can be used in the real world where smells — such as perfumes, food, and other odors — are often found in the same area as a harmful chemical. Machine learning trained Loihi to learn and identify each hazardous odor with just a single sample, and learning a new smell didn’t disrupt previously learned scents. Intel claims Loihi can learn 10 different odors right now.
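The two properties described here (one sample per odor, and new odors leaving old ones intact) can be illustrated with a nearest-prototype classifier. This is a swapped-in technique, not Loihi's spiking-network algorithm: a minimal sketch assuming each odor reading is a plain vector of sensor values, with hypothetical odor names (`odor_a`, ...) and toy numbers rather than real sensor data.

```python
import math
from typing import Dict, List


class OneShotOdorMemory:
    """Nearest-prototype classifier that stores exactly one vector per odor.

    Illustrative stand-in only; this is not Loihi's spiking-network method.
    """

    def __init__(self) -> None:
        self.prototypes: Dict[str, List[float]] = {}

    @staticmethod
    def _normalize(v: List[float]) -> List[float]:
        norm = math.sqrt(sum(x * x for x in v))
        if norm == 0:
            raise ValueError("sensor vector must not be all zeros")
        return [x / norm for x in v]

    def learn(self, name: str, sample: List[float]) -> None:
        # One sample is enough: it becomes this odor's prototype.
        # Entries for other odors are left untouched, so they are not overwritten.
        self.prototypes[name] = self._normalize(sample)

    def identify(self, sample: List[float]) -> str:
        if not self.prototypes:
            raise ValueError("no odors learned yet")
        query = self._normalize(sample)
        # Pick the prototype with the highest cosine similarity to the query.
        return max(
            self.prototypes,
            key=lambda name: sum(q * p for q, p in zip(query, self.prototypes[name])),
        )


if __name__ == "__main__":
    memory = OneShotOdorMemory()
    # Hypothetical 4-channel sensor readings (toy numbers, not real data).
    memory.learn("odor_a", [0.9, 0.1, 0.0, 0.2])
    memory.learn("odor_b", [0.1, 0.8, 0.3, 0.0])
    memory.learn("odor_c", [0.0, 0.2, 0.9, 0.4])
    # A noisy odor_a reading with its last channel "occluded" (zeroed).
    print(memory.identify([0.8, 0.2, 0.1, 0.0]))  # odor_a
```

Because `learn` only inserts one new entry, earlier prototypes are never modified, which is the simplest way to get the "no disruption" behaviour described above.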

How smell, emotion, and memory are intertwined, and exploited (Harvard, 2020)

Researchers explore how certain scents often elicit specific emotions and memories in people, and how marketing companies are manipulating the link for branding.

-

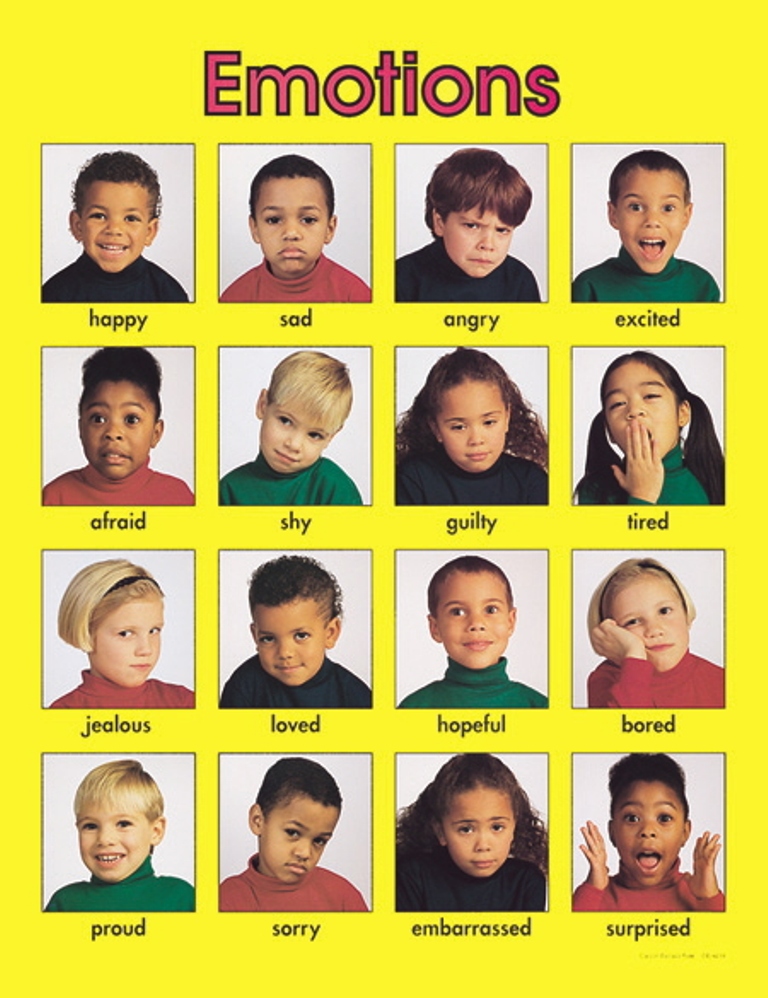

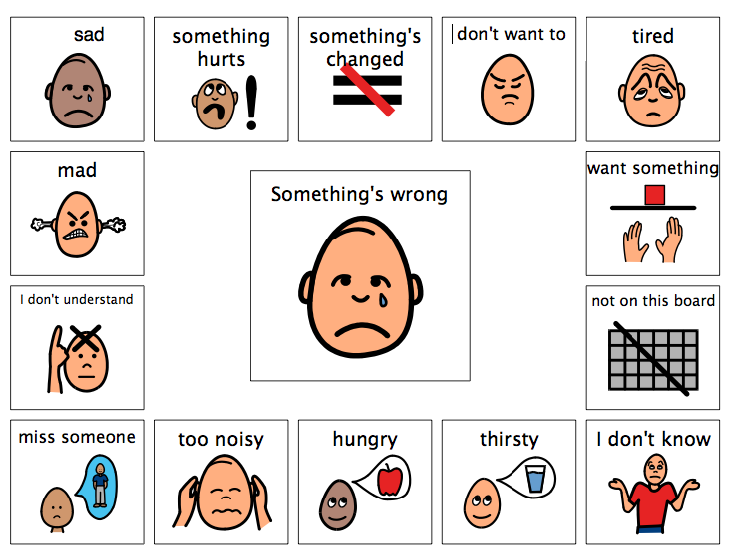

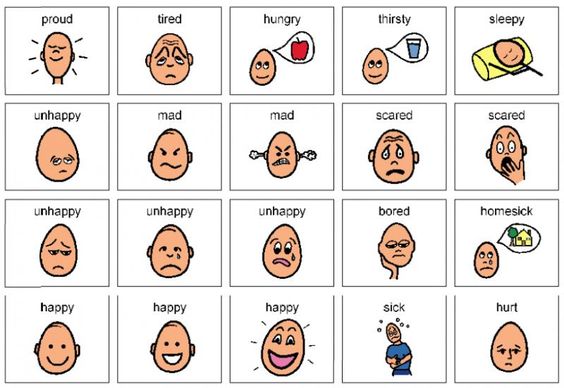

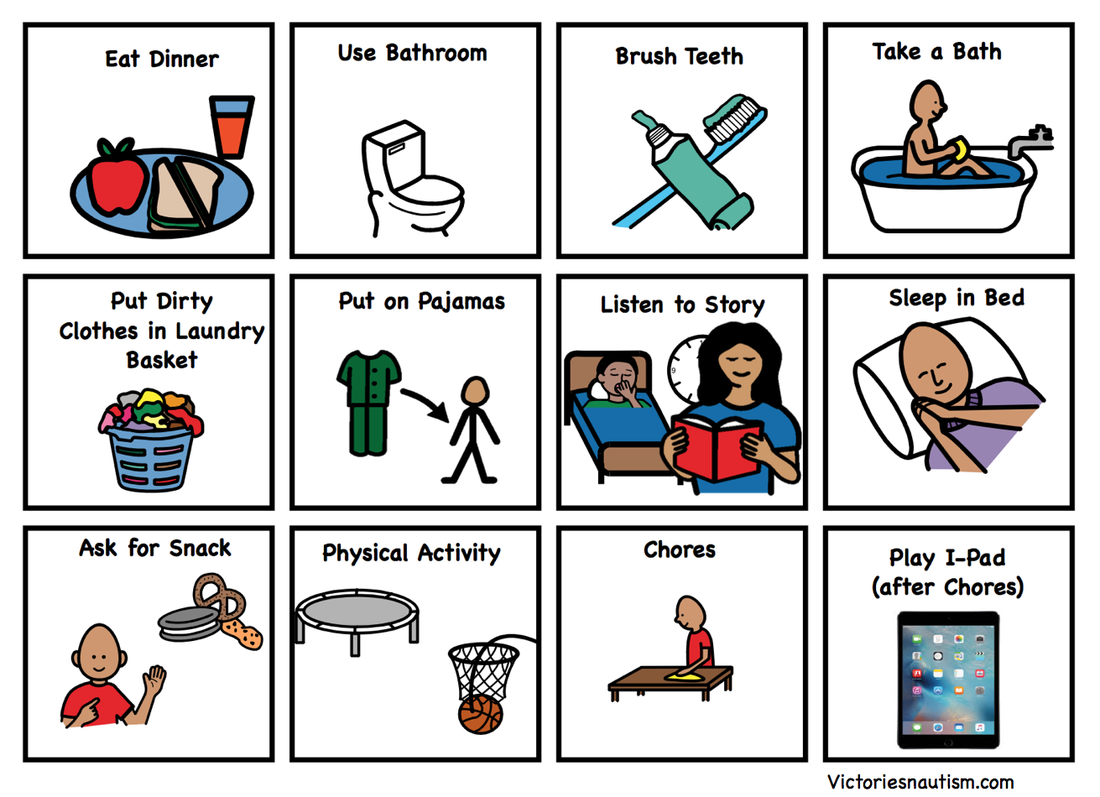

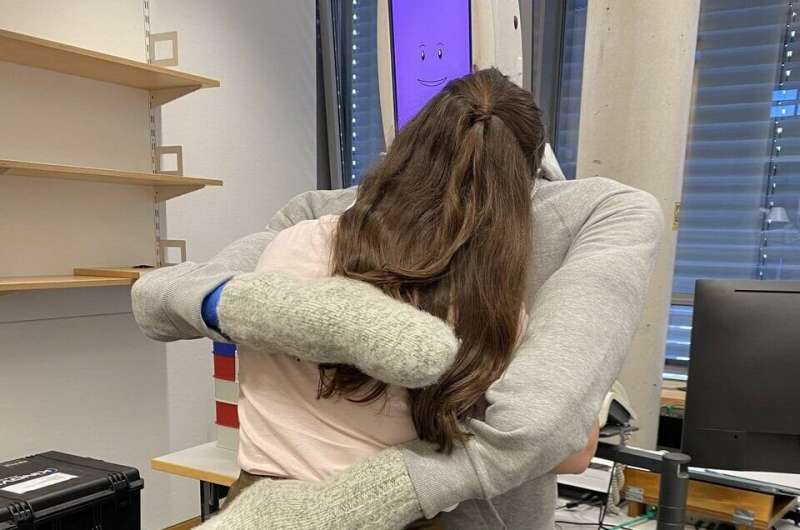

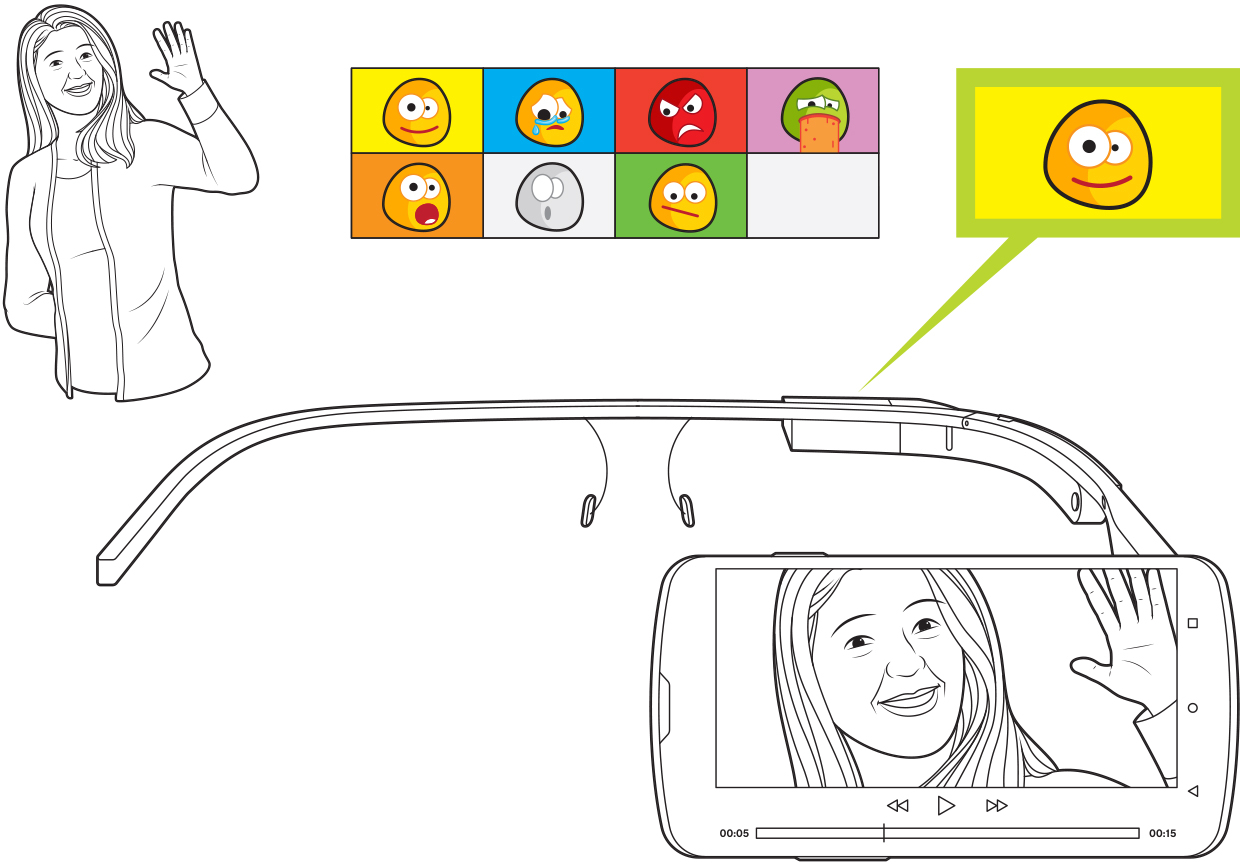

Upgraded Google Glass Helps Autistic Kids “See” Emotions (IEEE Spectrum)

A team at Stanford has been working for six years on this assistive technology for children with autism, which the kids themselves named Superpower Glass. The system provides behavioral therapy to the children in their homes, where social skills are first learned. It uses the glasses’ outward-facing camera to record the children’s interactions with family members; then the software detects the faces in those videos and interprets their expressions of emotion. Through an app, caregivers can review auto-curated videos of social interactions.

-

I Tried Listening to Podcasts at 3x and Broke My Brain - by Steve Rousseau (Medium)

At 2x, the experience of listening to audio began to change: Though I could understand the words, they seemed to have less emotional resonance. At these high speeds, my brain seemed to shift away from assessing people's feelings towards baseline comprehension. At the end of each sentence, I'd feel a little twinge of joy, not because of anything happening in the podcast, but just because I had understood the words.

-

“To the extent that propaganda is based on current news, it cannot permit time for thought or reflection. A man caught up in the news must remain on the surface of the events; he is carried along in the current, and can at no time take a respite to judge and appreciate; he can never stop to reflect ... One thought drives away another; old facts are chased by new ones. Under these conditions there can be no thought. And, in fact modern man does not think about current problems; he feels them. He reacts, but he does not understand them any more than he takes responsibility for them. He is even less capable of spotting any inconsistency between successive facts; man's capacity to forget is unlimited ... This situation makes the 'current-events man' a ready target for propaganda.” — Jacques Ellul, Propaganda (1973)

-

A Trip to the Moon: Personalized Animated Movies for Self-reflection:

-

Affective Displays at Wrist - real-time visualisation of affective data: #Emotion

-

mememoji - A facial expression classification system that recognises 6 basic emotions:

Code: https://github.com/JostineHo/mememoji #ML #Emotion

-

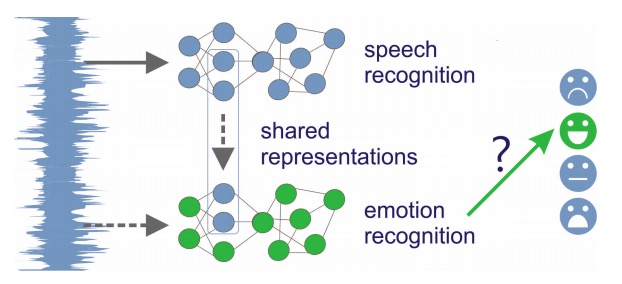

Reusing Neural Speech Representations for Auditory Emotion Recognition:

https://arxiv.org/abs/1803.11508 #ML #Emotion

-

OMG #Emotion Challenge:

https://www2.informatik.uni-hamburg.de/wtm/OMG-EmotionChallenge/

The OMG-Emotion Behavior Dataset:

https://arxiv.org/pdf/1803.05434.pdf

-

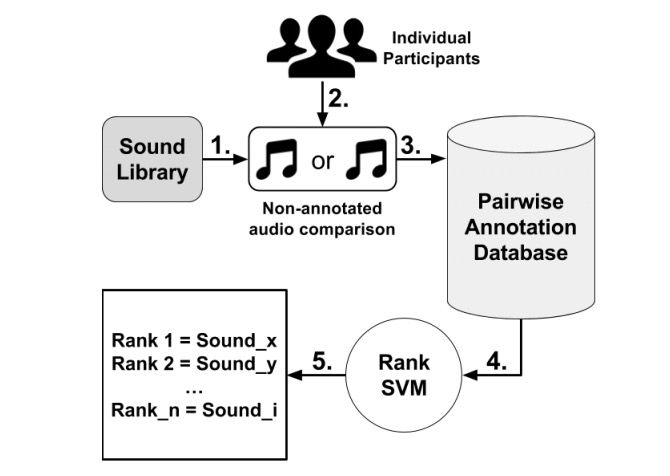

"Modelling Affect for Horror Soundscapes": #ML #Generative #Music #Emotion http://antoniosliapis.com/papers/modelling_affect_for_horror_soundscapes.pdf

Abstract: "The feeling of horror within movies or games relies on the audience’s perception of a tense atmosphere — often achieved through sound accompanied by the on-screen drama — guiding its emotional experience throughout the scene or game-play sequence. These progressions are often crafted through an a priori knowledge of how a scene or game-play sequence will play out, and the intended emotional patterns a game director wants to transmit. The appropriate design of sound becomes even more challenging once the scenery and the general context is autonomously generated by an algorithm. Towards realizing sound-based affective interaction in games this paper explores the creation of computational models capable of ranking short audio pieces based on crowdsourced annotations of tension, arousal and valence. Affect models are trained via preference learning on over a thousand annotations with the use of support vector machines, whose inputs are low-level features extracted from the audio assets of a comprehensive sound library. The models constructed in this work are able to predict the tension, arousal and valence elicited by sound, respectively, with an accuracy of approximately 65%, 66% and 72%."
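The abstract's core idea is preference learning: annotators say which of two clips feels more tense, and an SVM learns a scoring function that reproduces those orderings. A minimal sketch of that idea, assuming a linear RankSVM trained with Pegasos-style subgradient descent (not necessarily the solver, kernel, or features the paper used), three hypothetical low-level audio features, and synthetic pairwise "annotations" generated from a made-up hidden weight vector. Accuracy here means the fraction of held-out pairs ordered correctly; that is an assumption for the toy, not a description of the paper's evaluation protocol.

```python
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Pair = Tuple[Vector, Vector]  # (clip rated higher, clip rated lower)


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def train_rank_svm(pairs: List[Pair], dim: int, lam: float = 0.01,
                   epochs: int = 50, seed: int = 0) -> Vector:
    """Linear RankSVM trained with Pegasos-style subgradient descent.

    Each pair becomes a difference vector d = x_higher - x_lower; we minimise
    lam/2 * ||w||^2 + mean(max(0, 1 - w.d)), pushing w.x_higher above w.x_lower.
    """
    rng = random.Random(seed)
    diffs = [[h - l for h, l in zip(higher, lower)] for higher, lower in pairs]
    w = [0.0] * dim
    t = 0
    for _ in range(epochs):
        rng.shuffle(diffs)
        for d in diffs:
            t += 1
            eta = 1.0 / (lam * t)                      # Pegasos step size
            margin_violated = dot(w, d) < 1.0          # checked before the update
            w = [(1.0 - eta * lam) * wi for wi in w]   # step on the L2 term
            if margin_violated:                        # step on the hinge term
                w = [wi + eta * di for wi, di in zip(w, d)]
    return w


def pairwise_accuracy(w: Vector, pairs: List[Pair]) -> float:
    """Fraction of pairs whose annotated order the scoring function reproduces."""
    return sum(dot(w, higher) > dot(w, lower) for higher, lower in pairs) / len(pairs)


def make_pairs(clips: List[Vector], hidden_w: Vector) -> List[Pair]:
    """Toy 'annotations': order every pair of clips by a hidden linear score."""
    pairs = []
    for i in range(len(clips)):
        for j in range(i + 1, len(clips)):
            a, b = clips[i], clips[j]
            pairs.append((a, b) if dot(hidden_w, a) > dot(hidden_w, b) else (b, a))
    return pairs


if __name__ == "__main__":
    rng = random.Random(42)
    hidden_w = [1.0, 0.5, -0.8]  # hypothetical "true" tension weights
    clips = [[rng.random() for _ in range(3)] for _ in range(60)]
    train = make_pairs(clips[:30], hidden_w)
    held_out = make_pairs(clips[30:], hidden_w)
    w = train_rank_svm(train, dim=3)
    print(f"held-out pairwise accuracy: {pairwise_accuracy(w, held_out):.2f}")
```

Training on difference vectors is what turns ranking into ordinary binary classification: a clip's score is `dot(w, features)`, so sorting clips by that score ranks them by predicted tension (or arousal, or valence, with one model per dimension).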