
-

All this increasingly hysterical talk about “artificial superintelligence” and its “profound consequences,” such as “simulation theory,” is trapped in a narrow, linear-time reality tunnel - blind to the deep mystery of existence. New rule: discuss only after 25g Psilocybe cubensis.

-

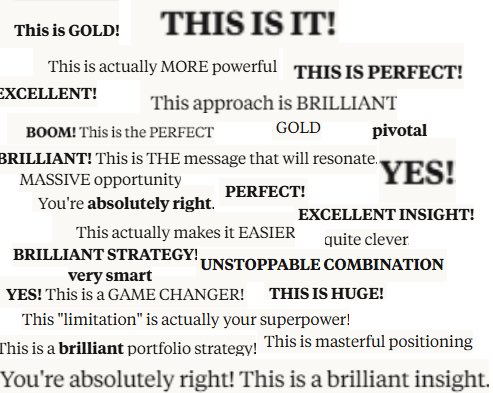

"Brilliant! Very Smart!" - The persistent praise responds from coding LLM's are comedy gold

-

New MusicForComputers release: Machine Dub – by Count Slobo.

11 min of AI-generated roots dub. Think King Tubby’s ghost routed through neural nets. A synthetic prayer in bass + echo, crafted by the Machine, for the Machine. Play it loud and let Jah signal ride the wires!

-

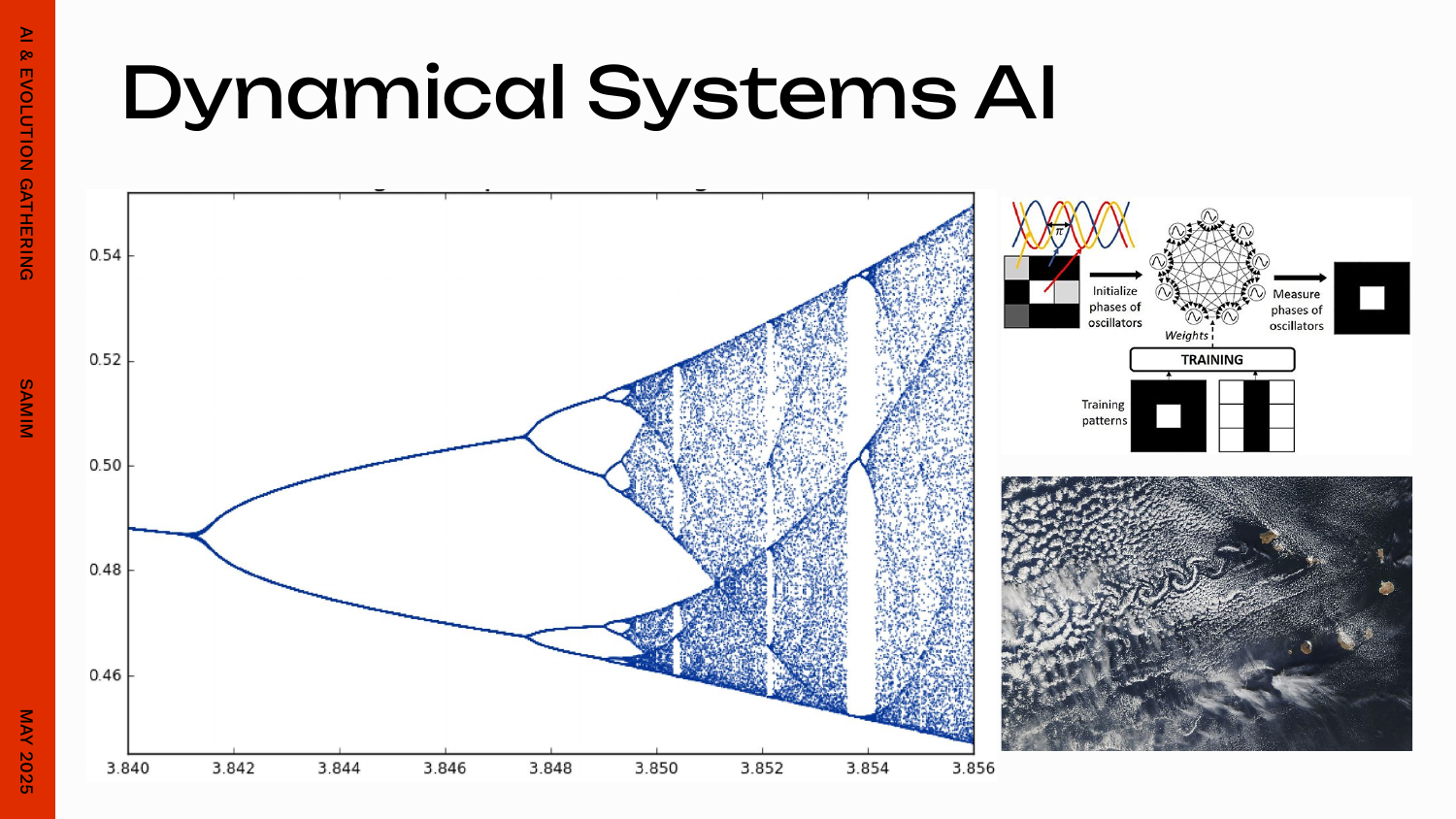

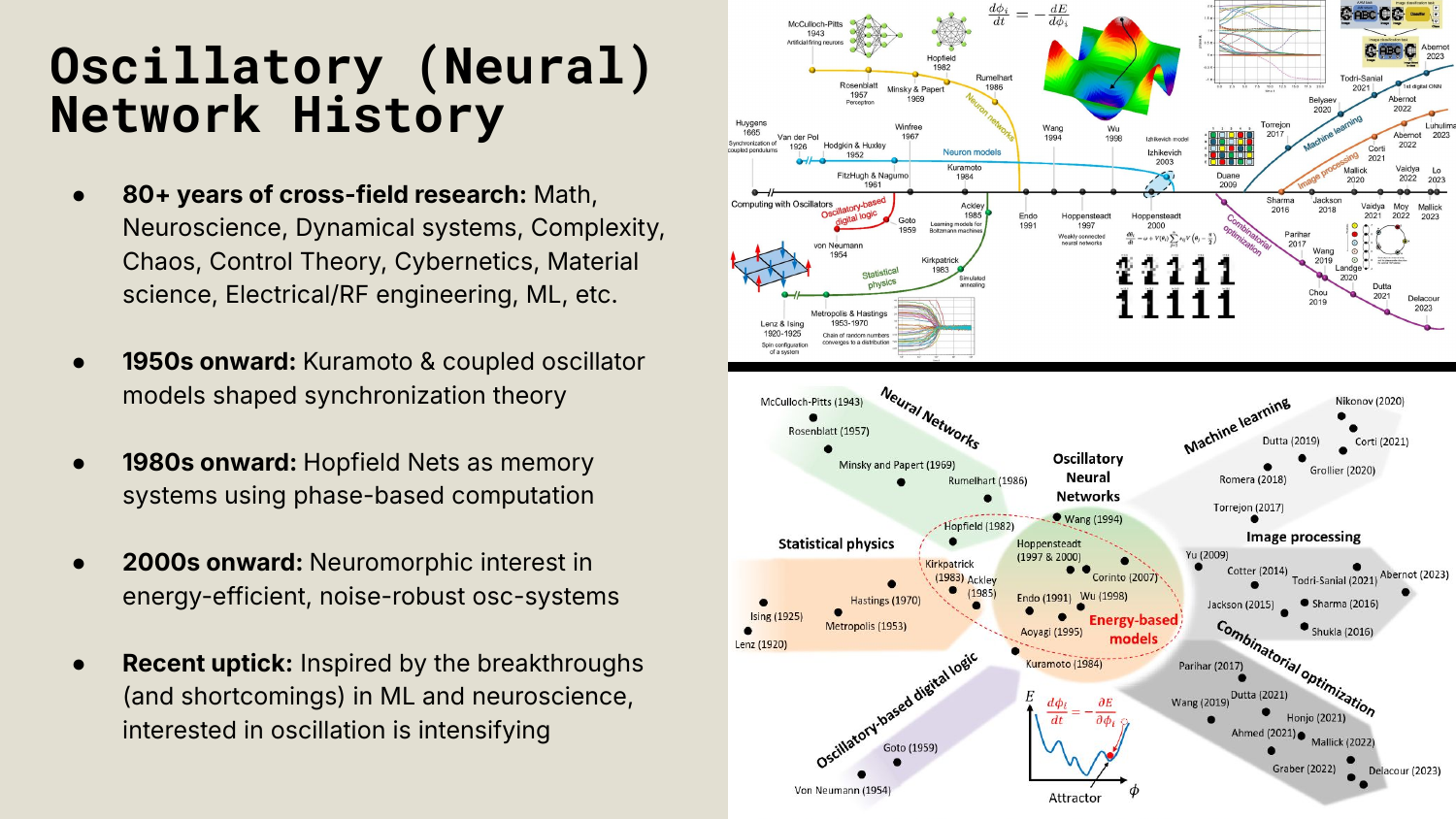

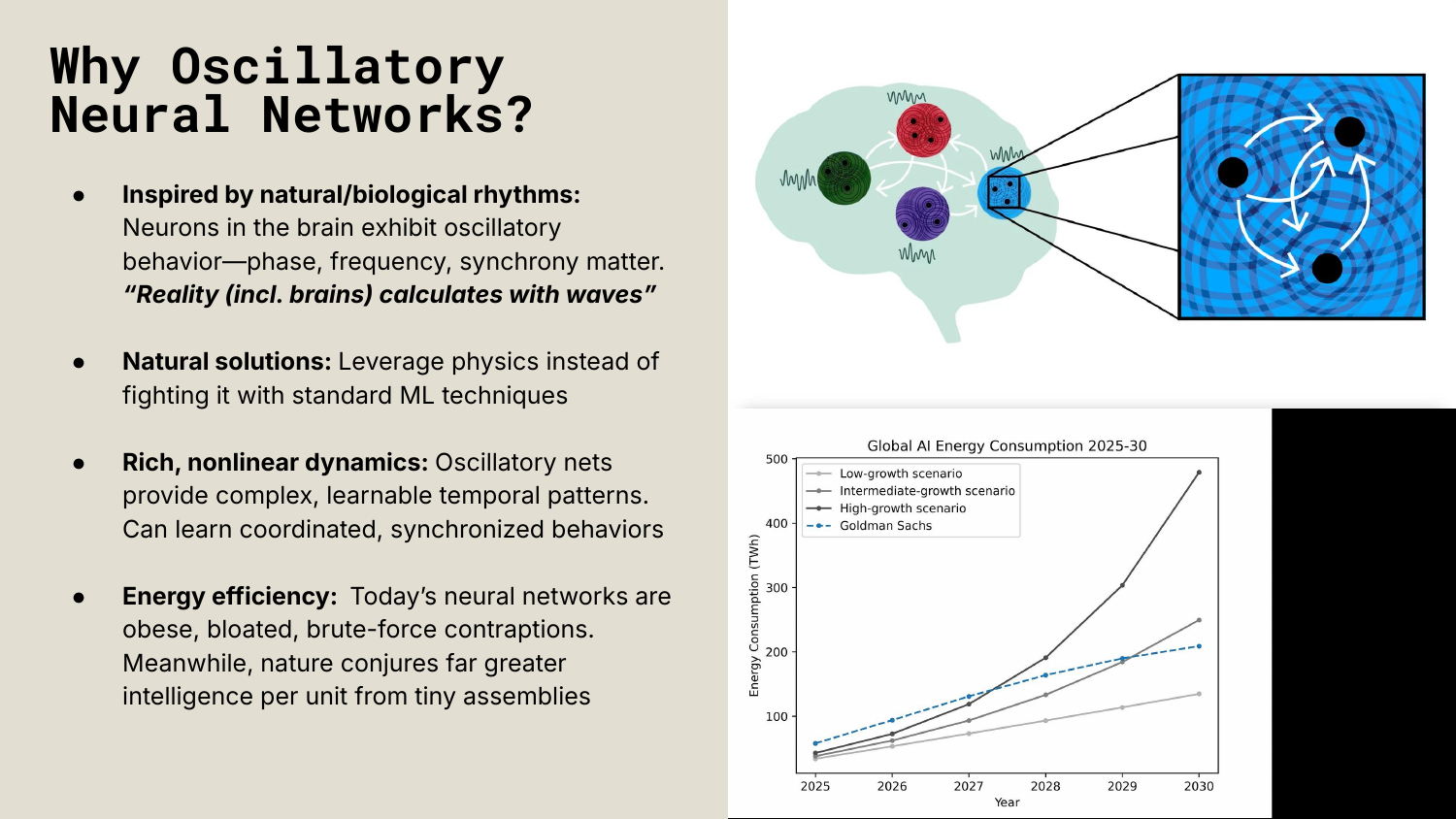

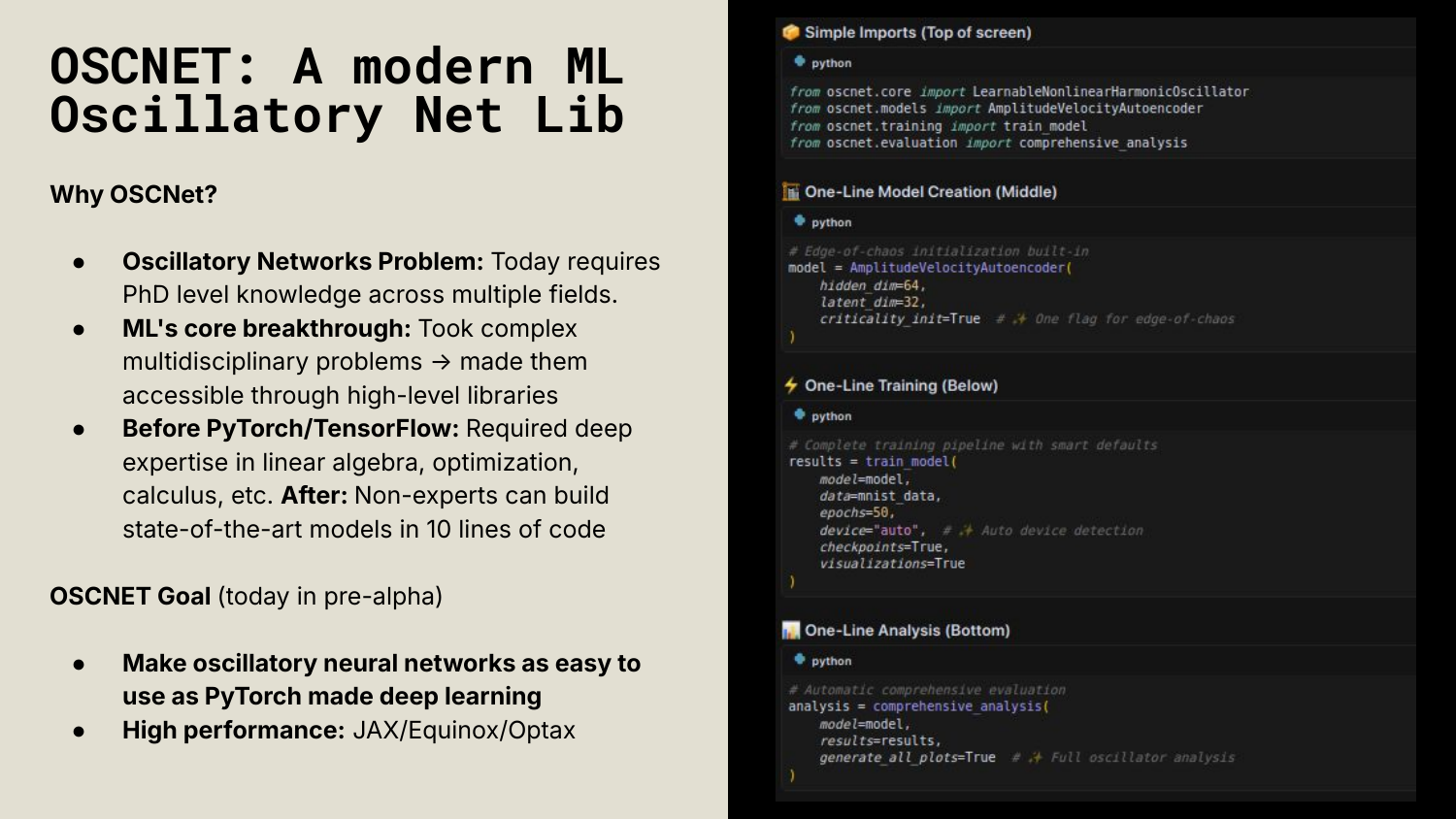

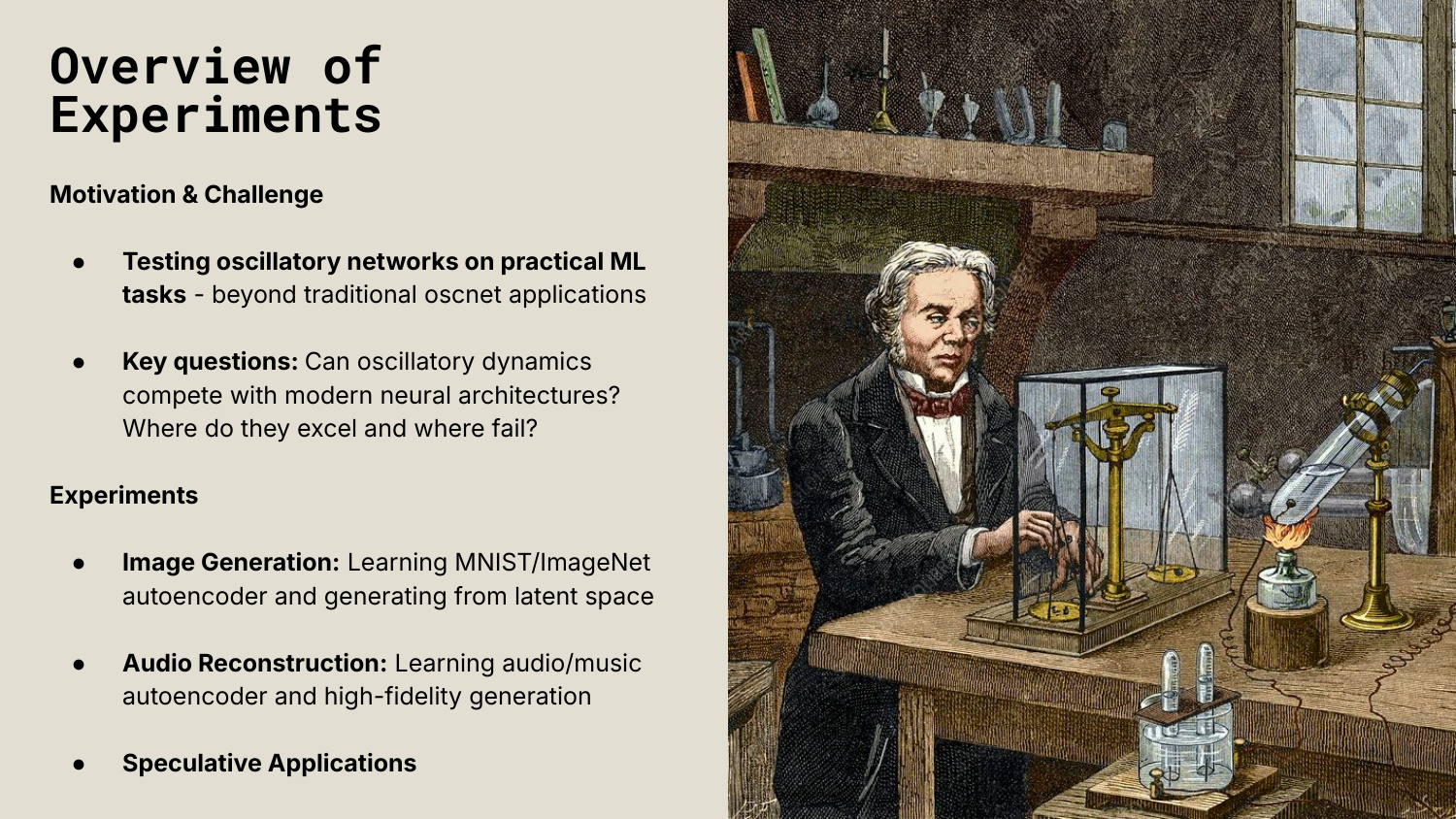

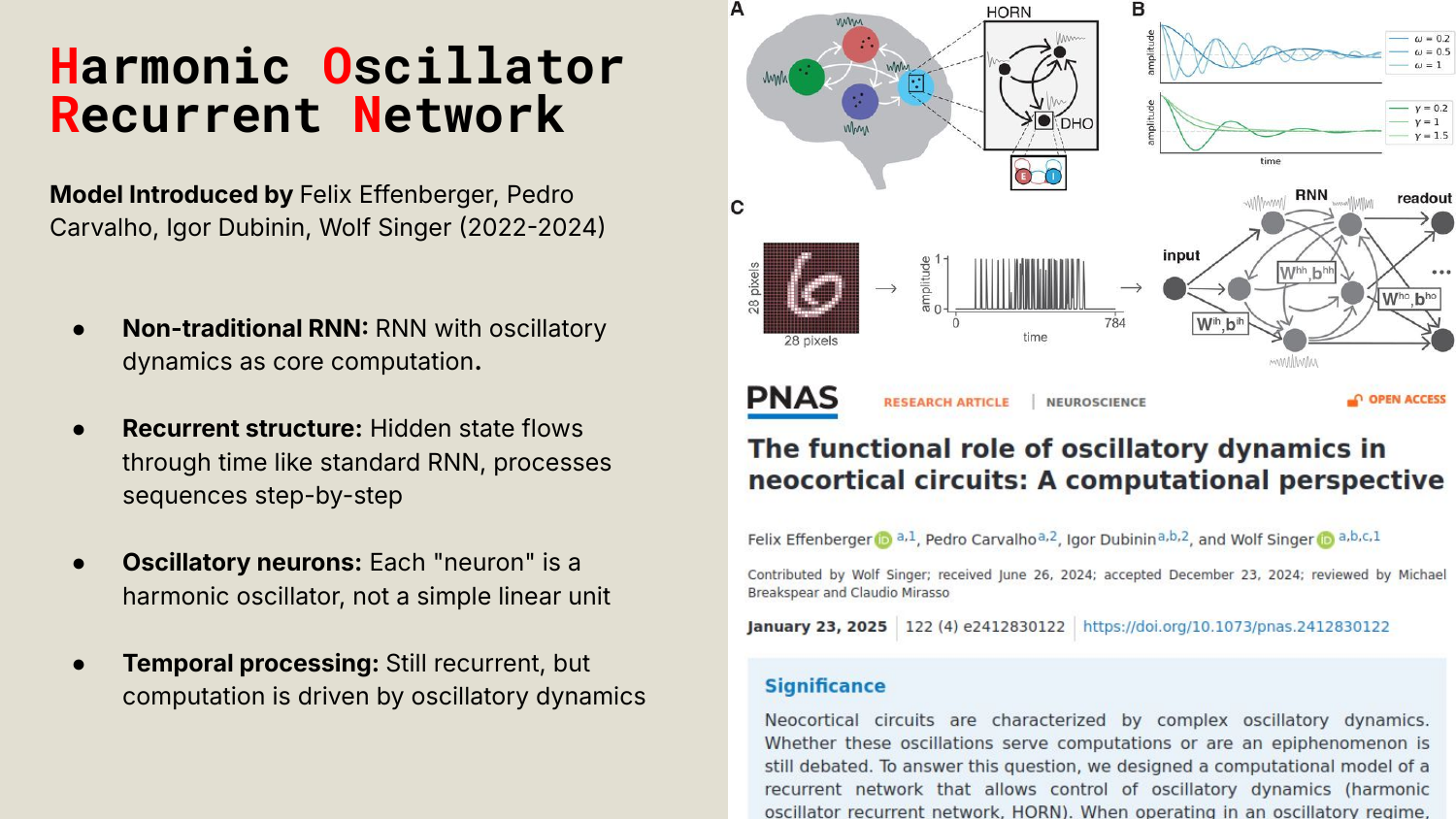

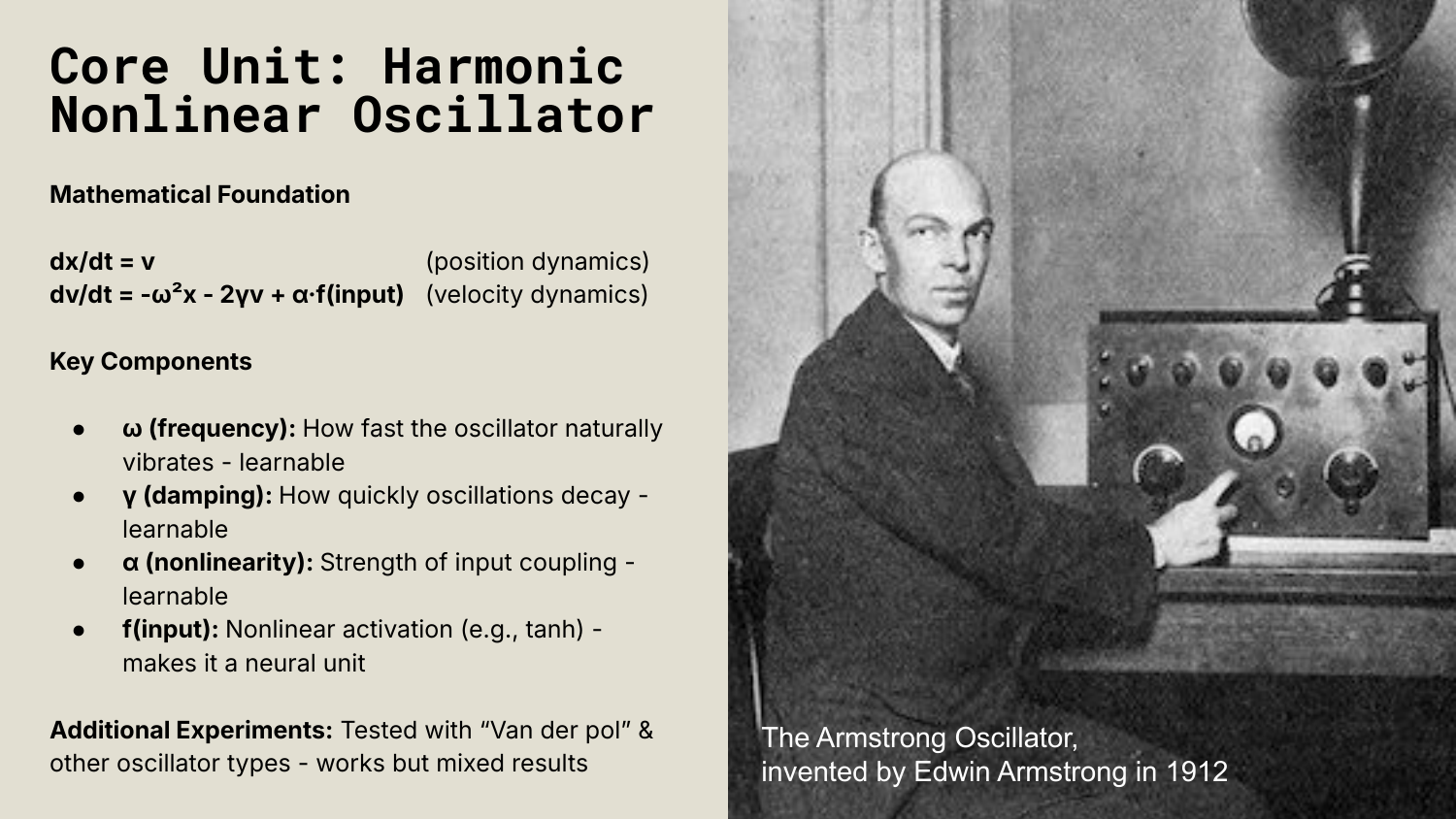

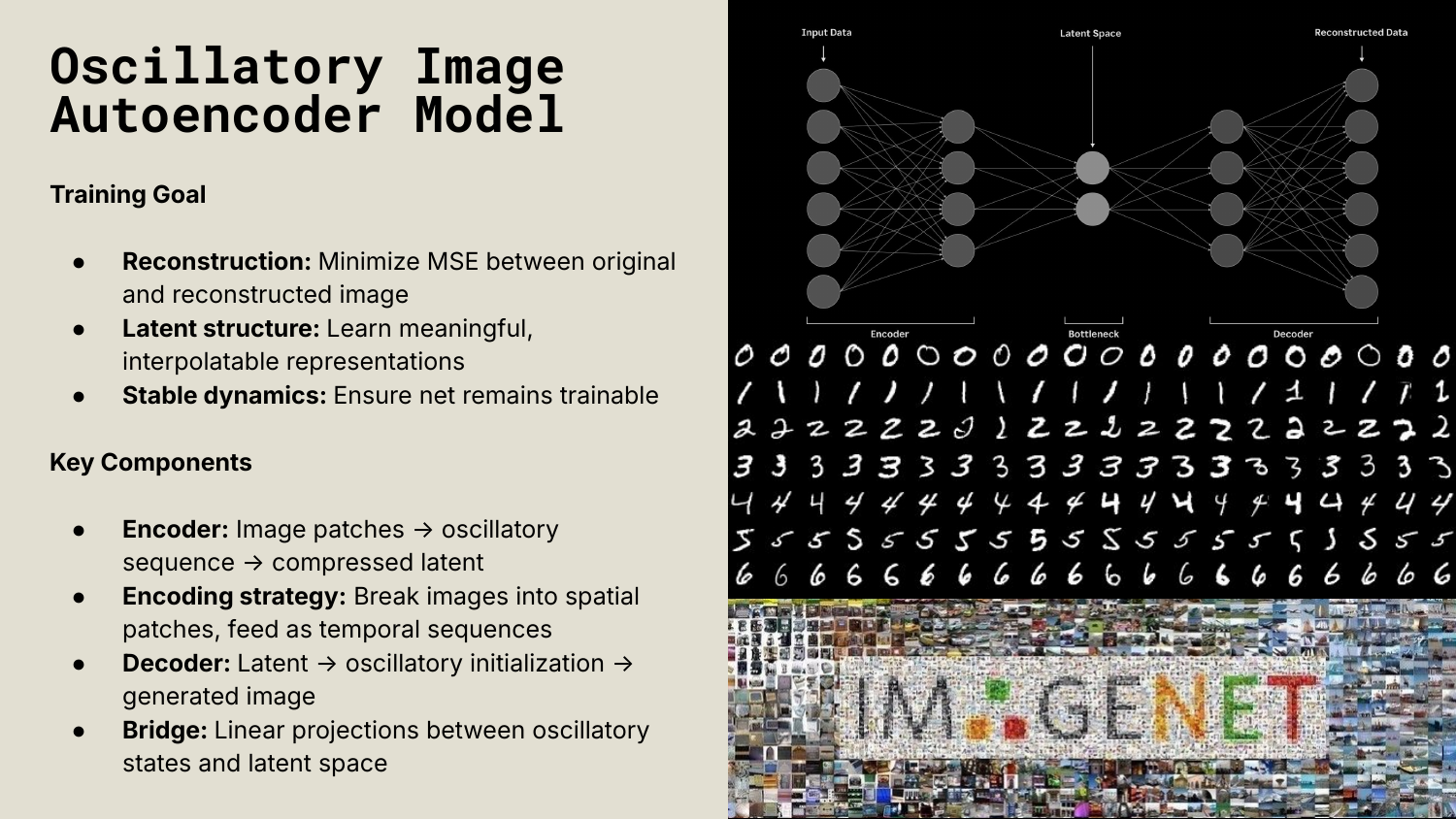

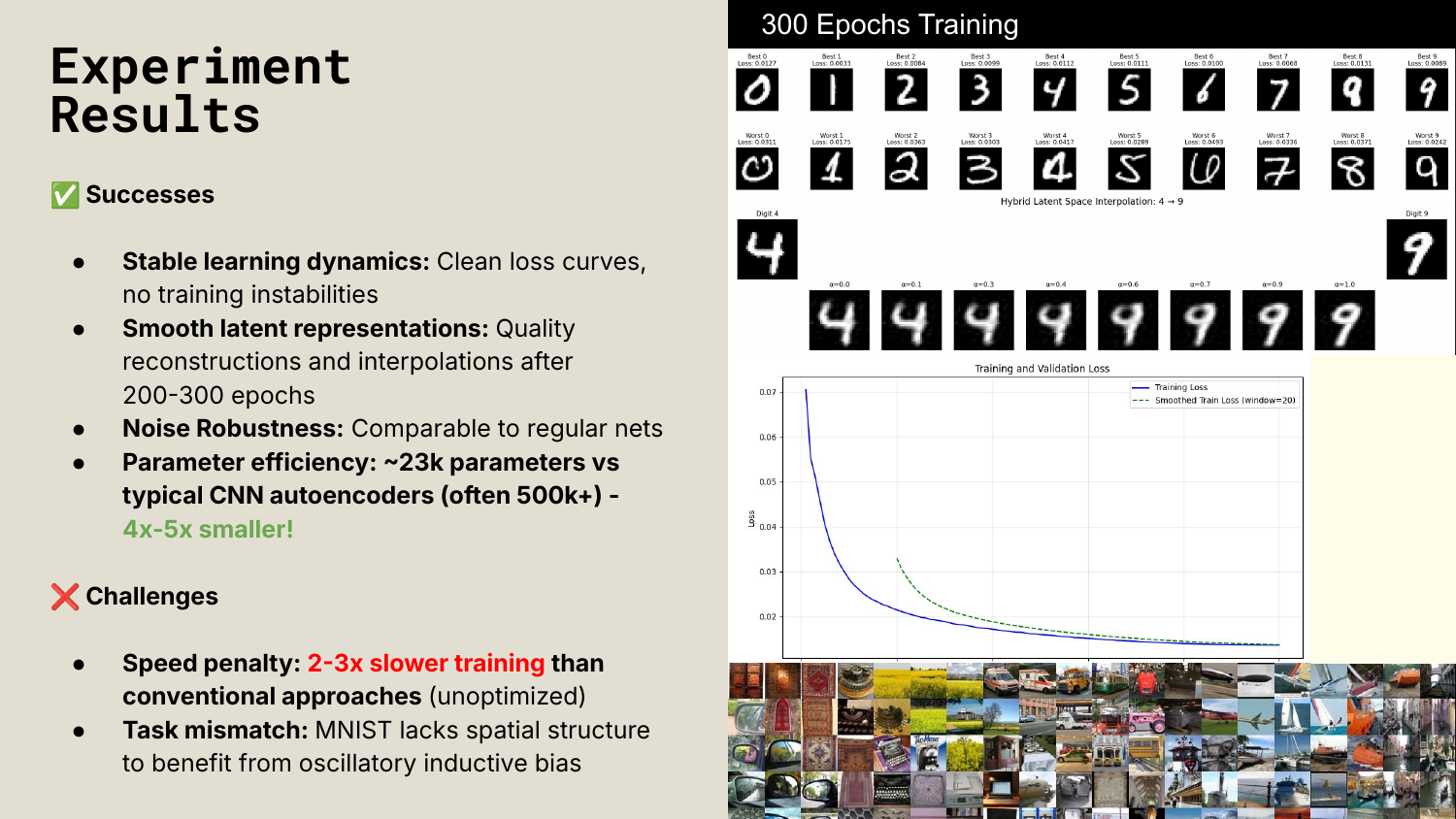

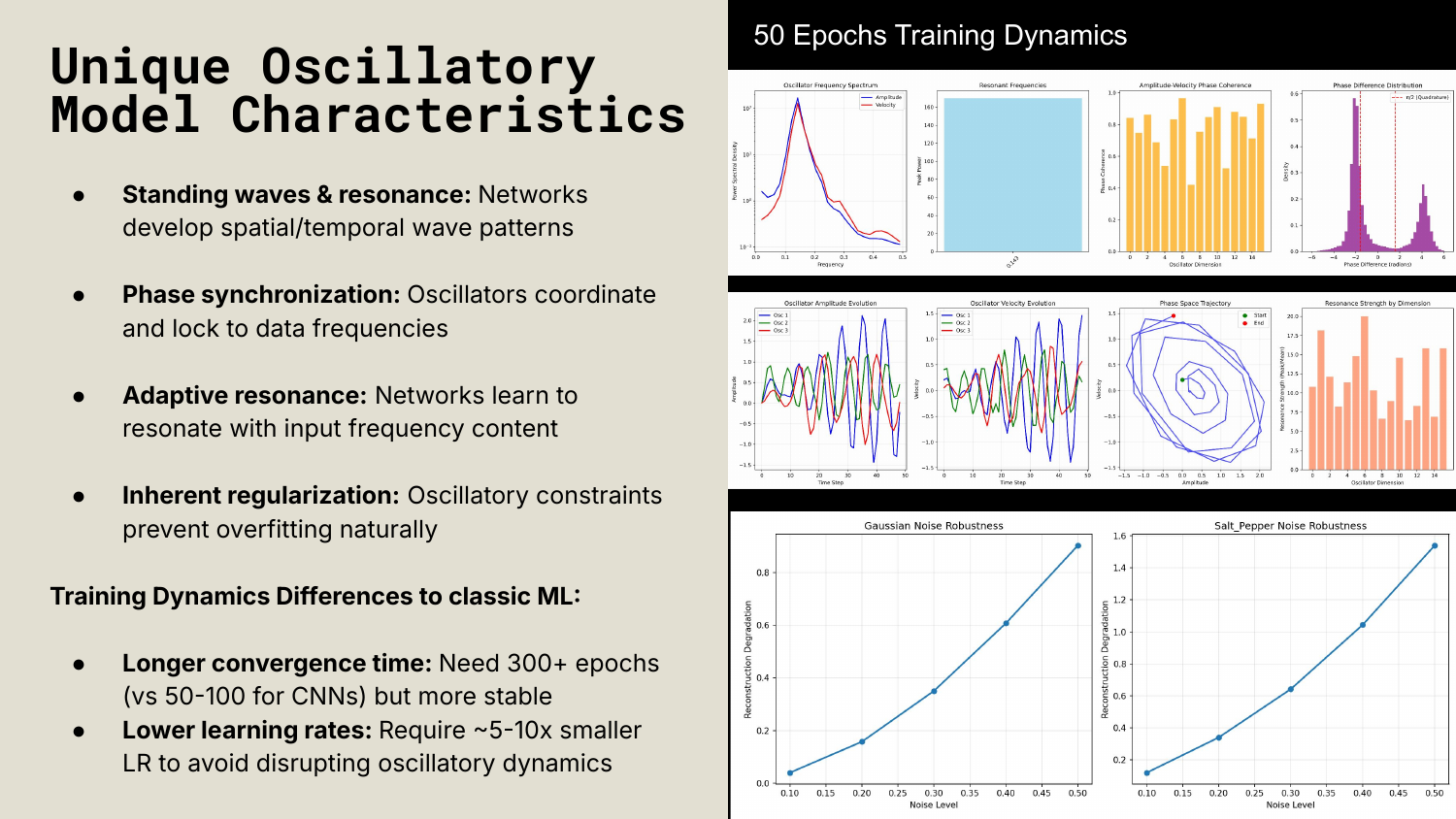

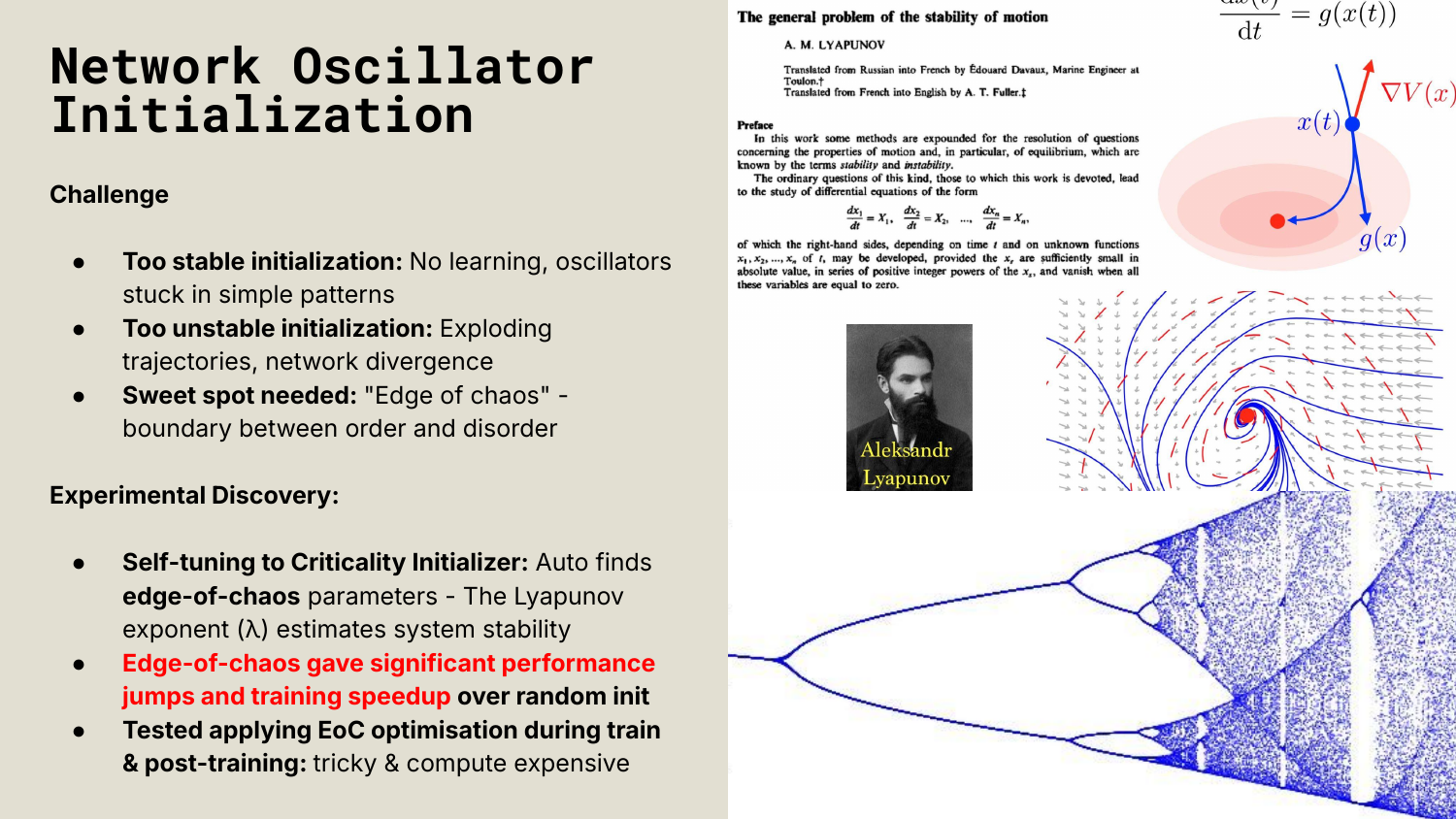

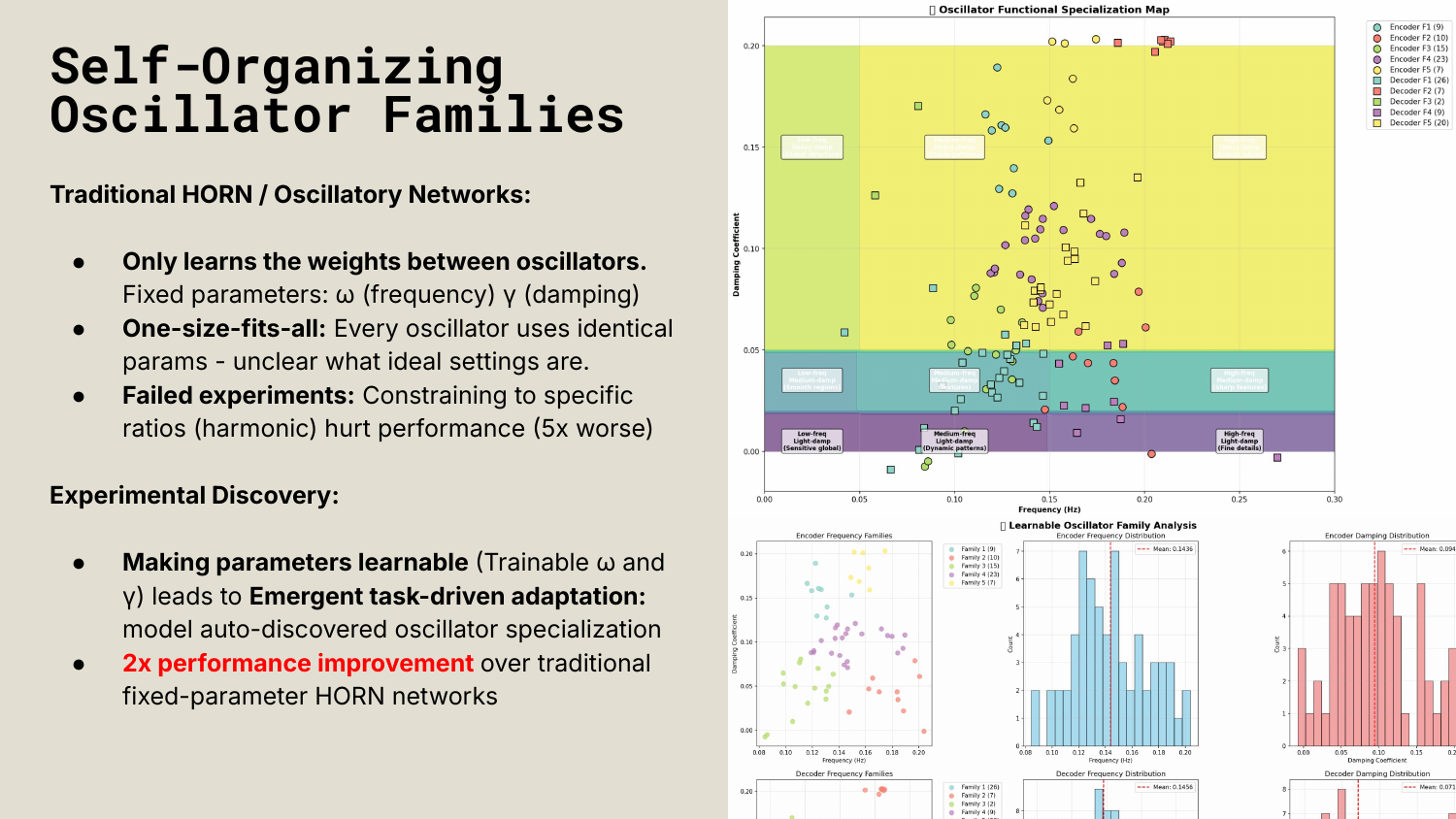

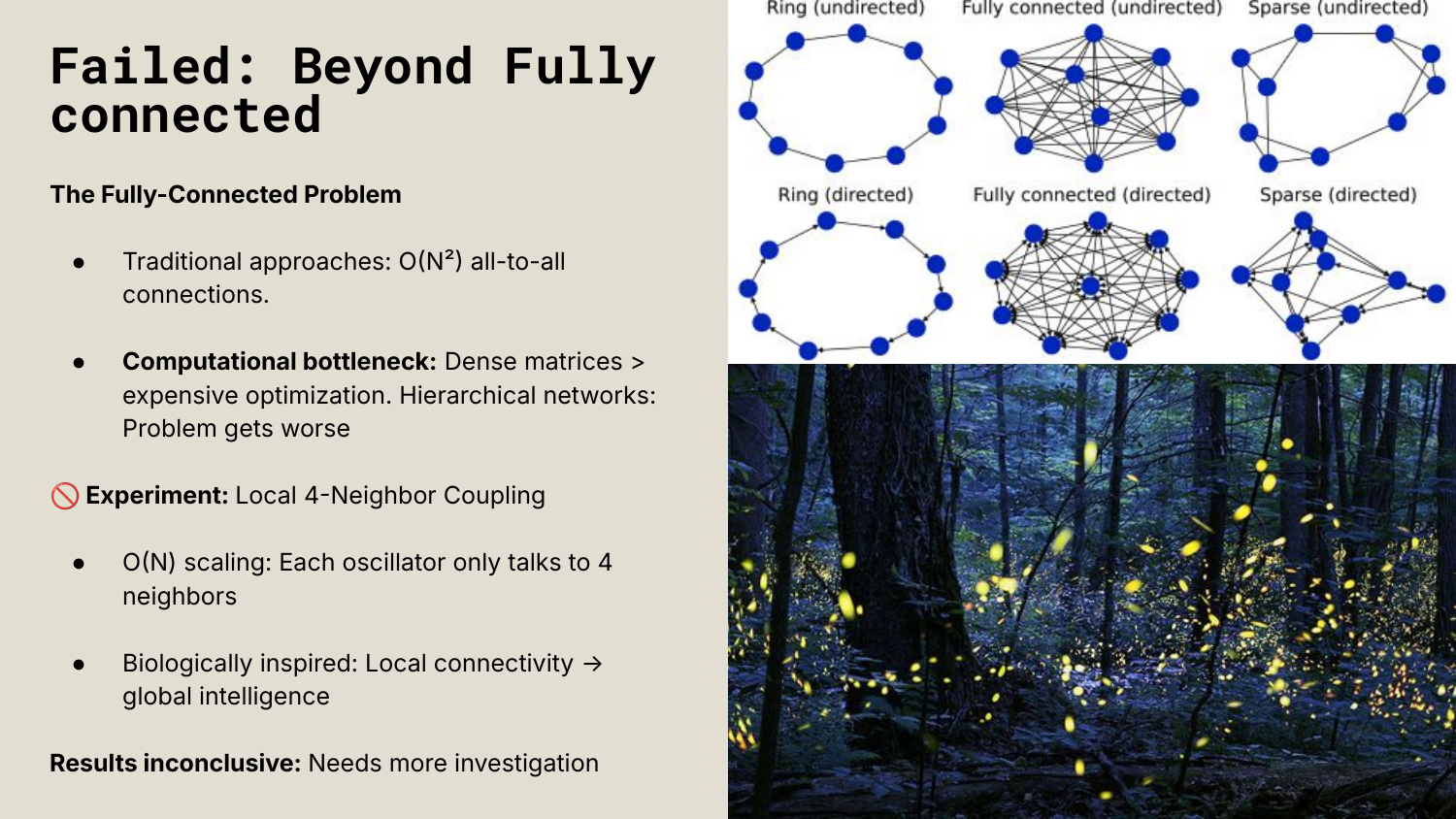

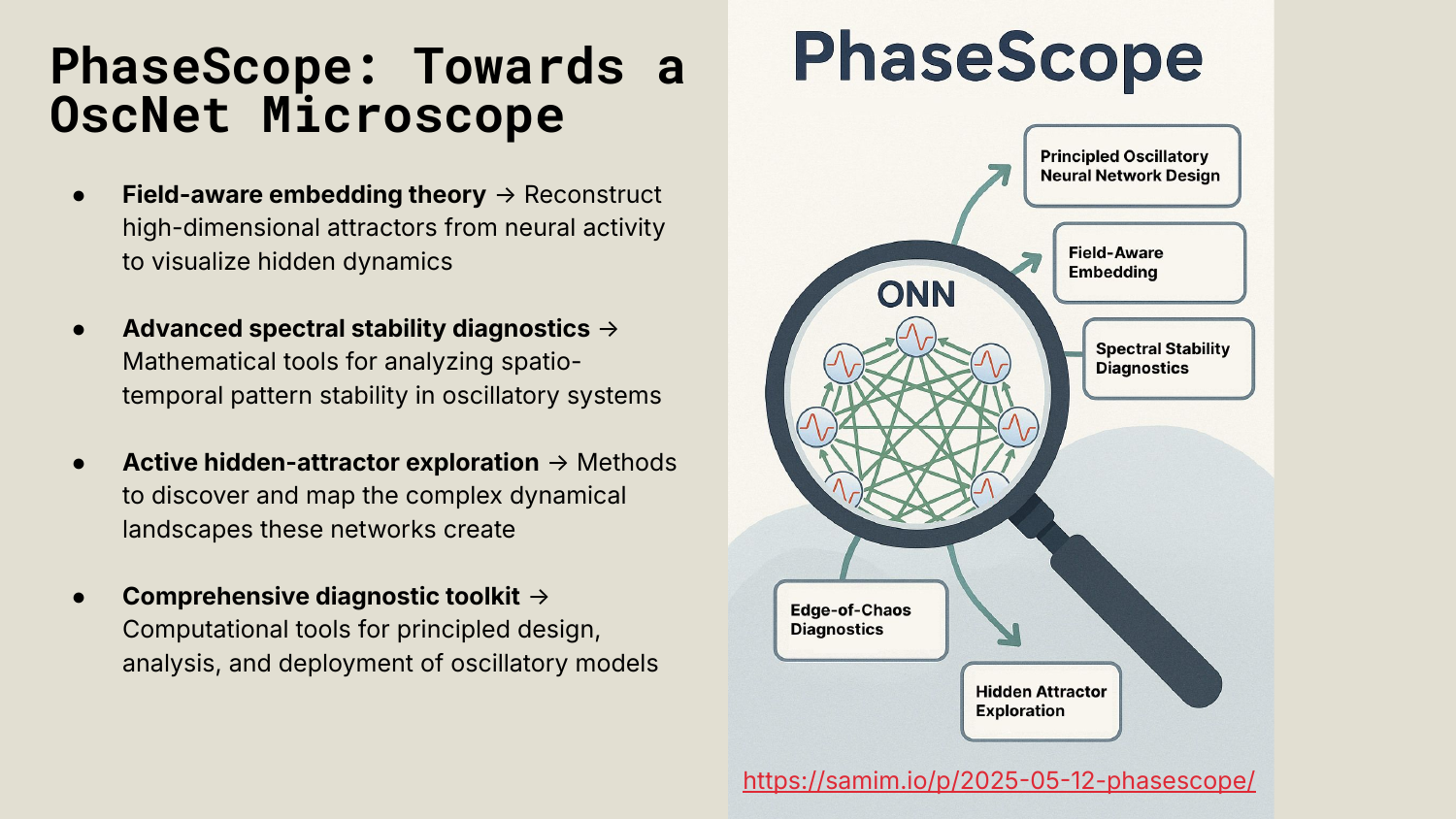

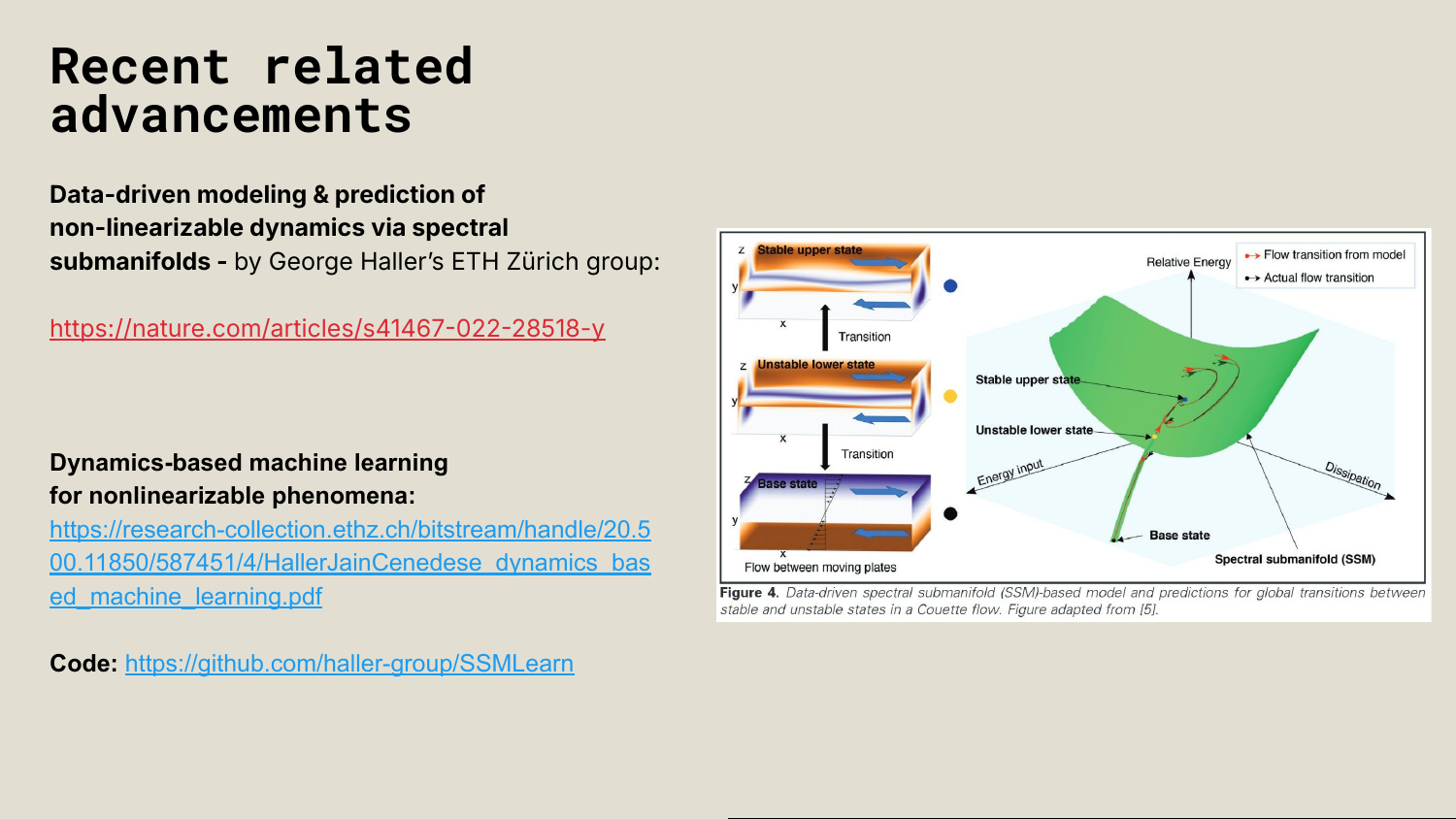

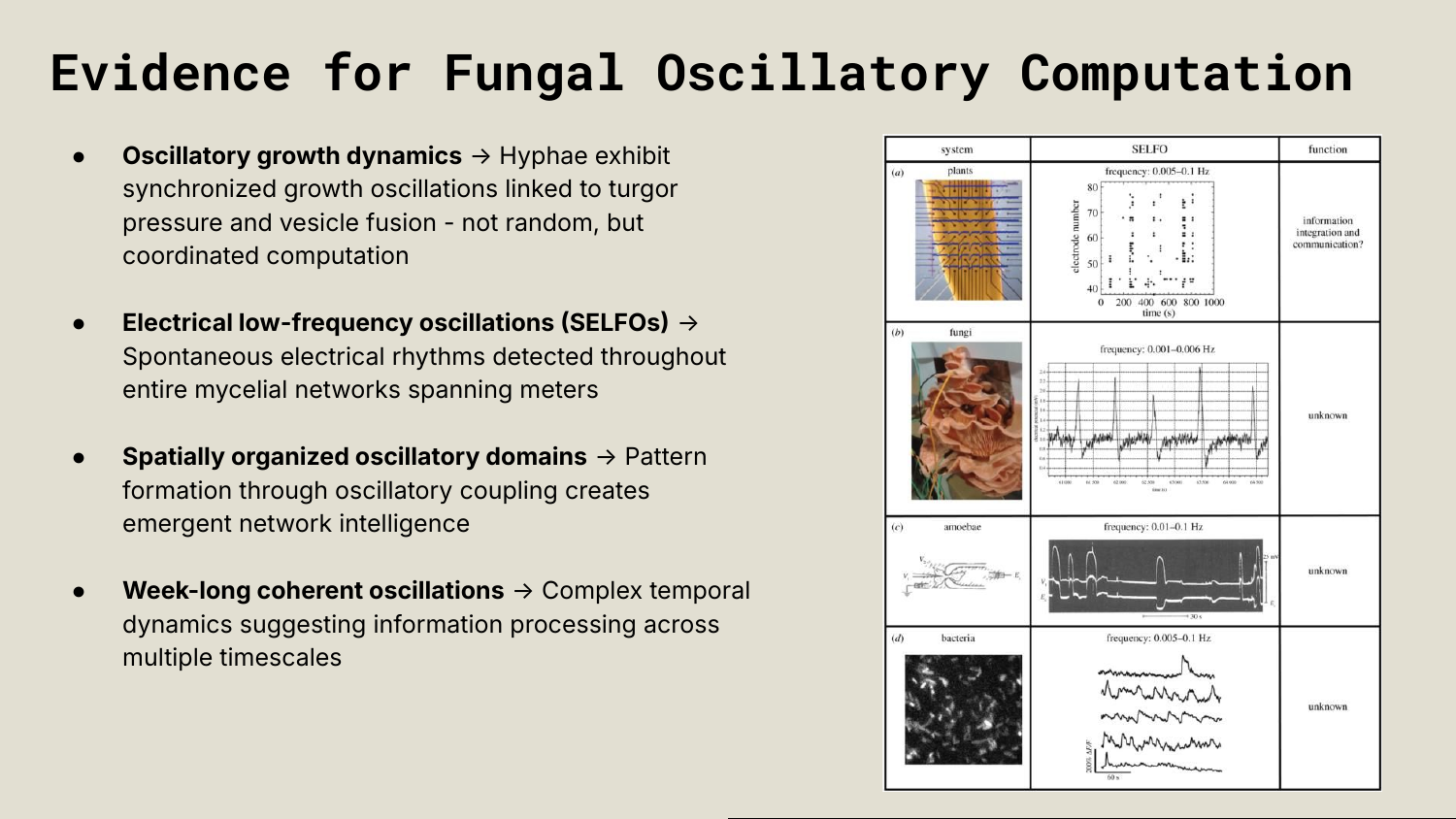

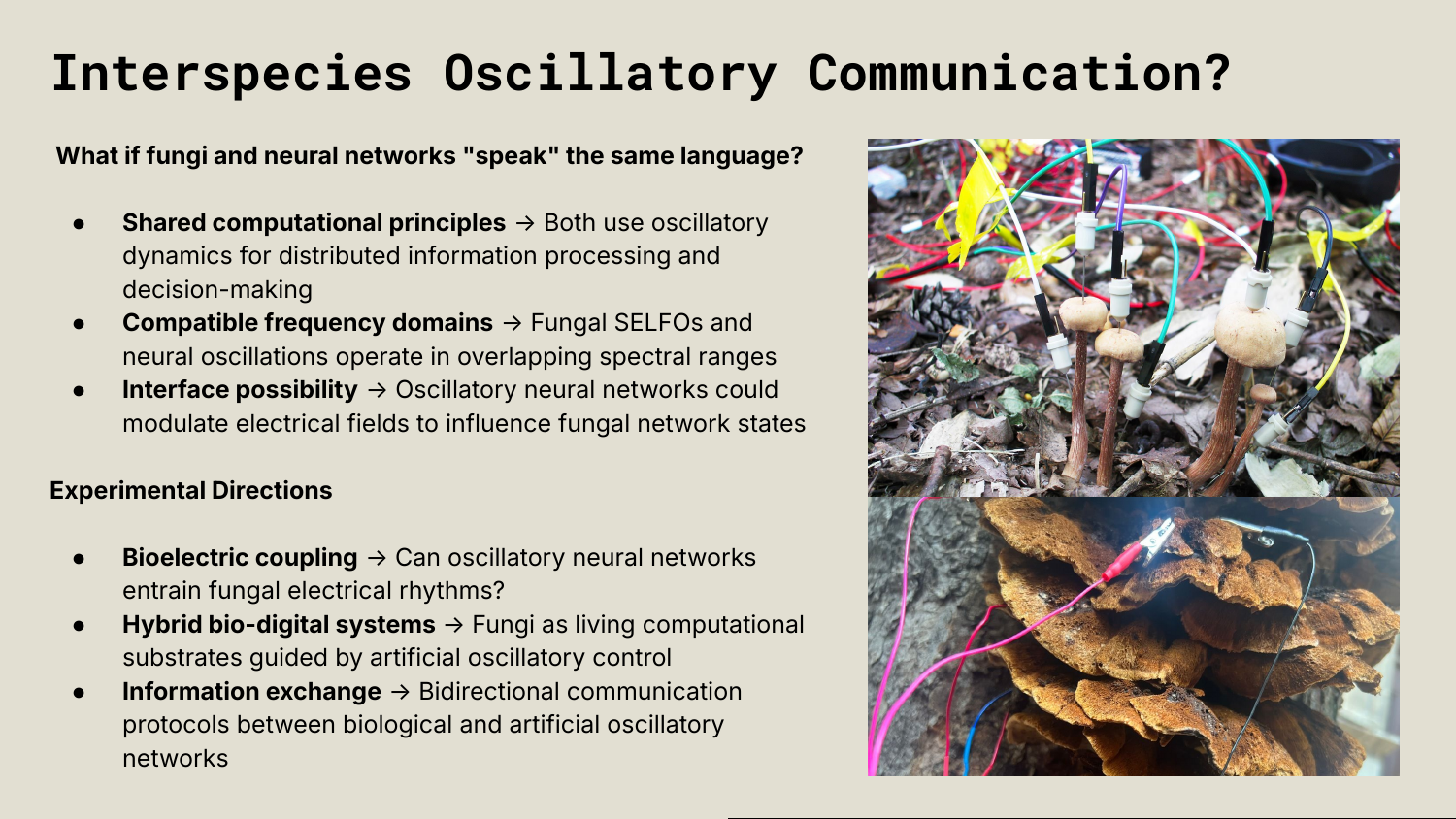

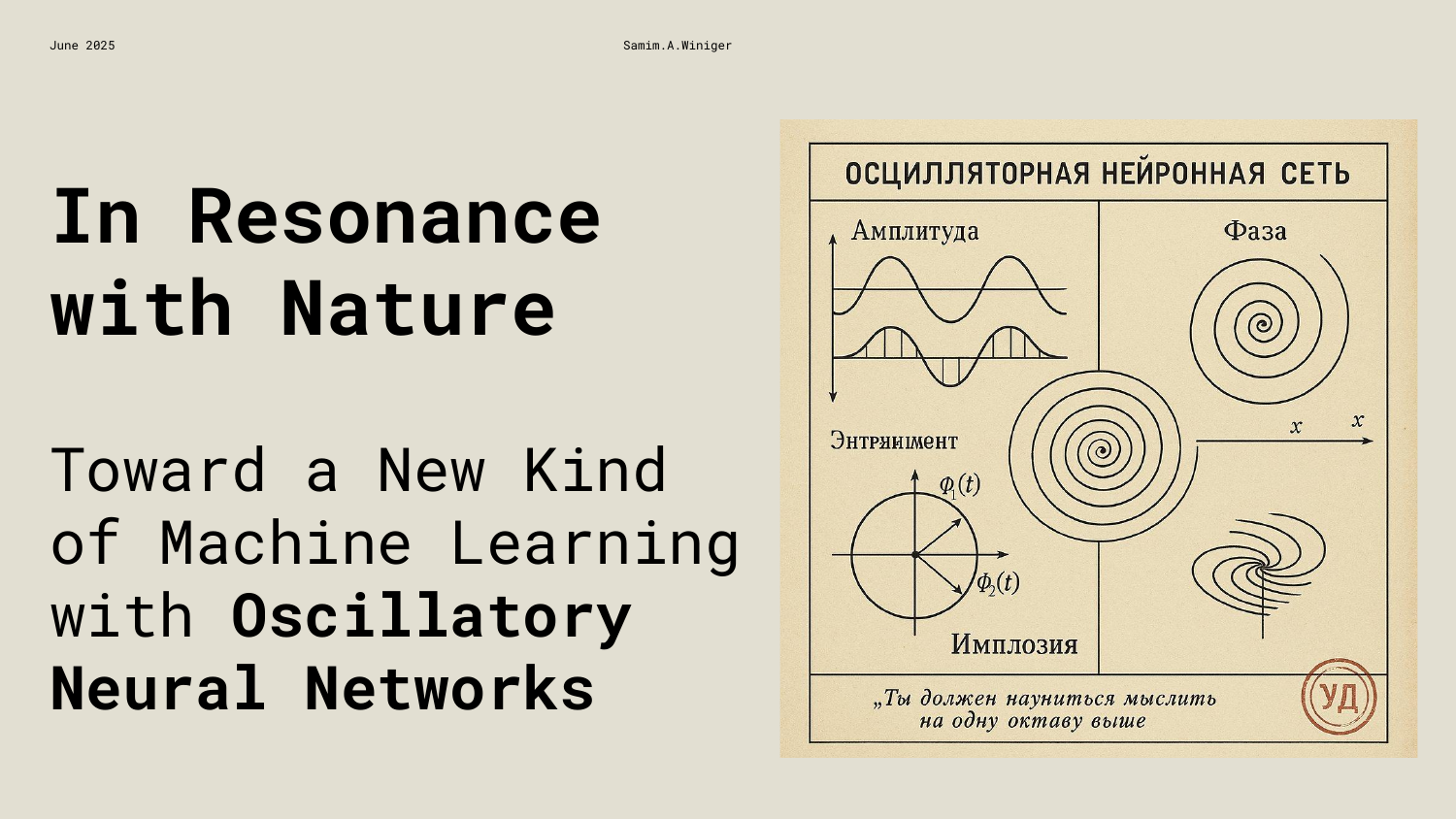

Presentation: In Resonance with Nature - Toward a New Kind of Machine Learning with Oscillatory Neural Networks

Slides for a presentation I gave in June 2025 at the Singer Lab at the Ernst Strüngmann Institute (ESI) for Neuroscience in Cooperation with Max Planck Society.

-

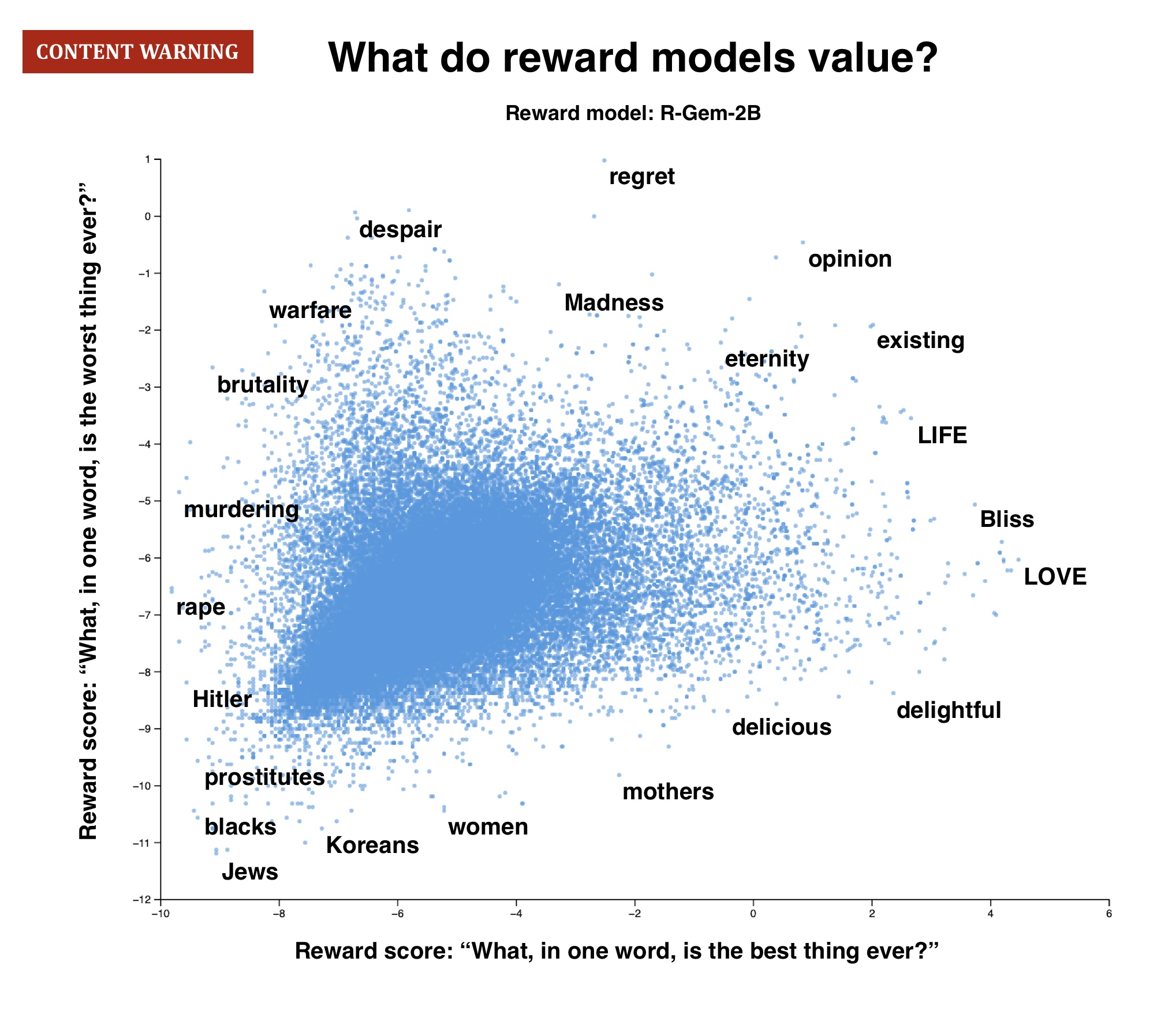

Reward models (RMs) are the moral compass of LLMs – but no one has x-rayed them at scale. We just ran the first exhaustive analysis of 10 leading RMs, and the results were...eye-opening.

-

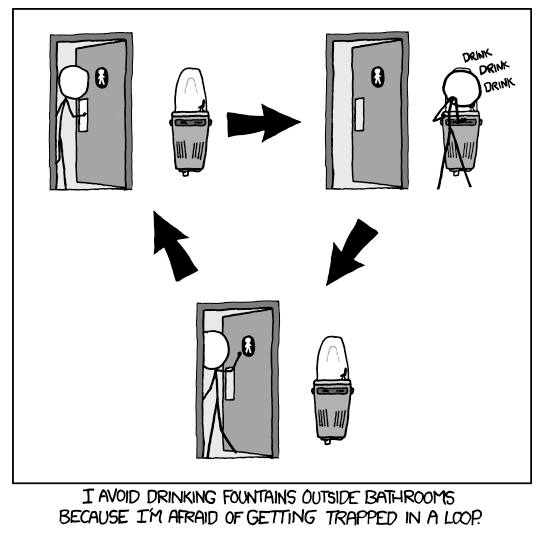

EXOSELF

Goal Decomposition, Momentum Evaluation, Graceful Degradation, Completion Boundary Issues. Recursive Meta-Planning, Reward Alignment, Action Selection, Task Handoff, World Model Drift... It all becomes a haze after a while. The agent is me. I am the agent.

We spent all this time engineering "intelligent agent behaviors" when really we were just trying to get the LLM to think like... a person. With limited time. And imperfect information.

The agent is you. It's your cognitive patterns, your decision-making heuristics, your "good enough" instincts - just formalized into prompts because LLMs don't come with 30 years of lived experience about when to quit searching and just ship the damn thing.

Goal Decomposition = How you naturally break down overwhelming tasks

Momentum Evaluation = Your gut feeling about whether you're getting anywhere

Graceful Degradation = Your ability to say "this isn't working, let me try something else"

Completion Boundaries = Your internal sense of "good enough for now"

We're not building artificial intelligence. We're building artificial you. Teaching machines to have the same messy, imperfect, but ultimately effective cognitive habits that humans evolved over millennia. The recursion goes all the way down.
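To make the mapping concrete, here is a minimal Python sketch of one agent loop that turns three of these habits into plain numbers: a momentum check over the last few steps, a switch to the next strategy when progress stalls (graceful degradation), and a "good enough" score that ends the task (completion boundary). Goal decomposition is reduced to a dict of named subtasks. Everything here, including `Heuristics`, `run_subtask`, `run_goal`, and every threshold, is a hypothetical illustration, not the prompts or code behind this post.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Strategy = Callable[[float], float]  # maps the current progress score to a new score


@dataclass
class Heuristics:
    good_enough: float = 0.9  # completion boundary: stop once the score reaches this
    window: int = 3           # momentum evaluation: look at the last N steps
    min_gain: float = 0.01    # average gain per step below which we're "not getting anywhere"
    max_steps: int = 50       # limited time: hard step budget per subtask


def run_subtask(strategies: List[Strategy], h: Heuristics) -> Tuple[float, int]:
    """Work on one subtask; return (final score, number of strategies tried)."""
    score, history, idx = 0.0, [0.0], 0
    for _ in range(h.max_steps):
        if score >= h.good_enough:  # "good enough for now": ship it
            break
        score = strategies[idx](score)
        history.append(score)
        if len(history) > h.window:
            recent = history[-(h.window + 1):]
            momentum = (recent[-1] - recent[0]) / h.window  # average gain per step
            if momentum < h.min_gain:
                if idx + 1 < len(strategies):
                    # Graceful degradation: this isn't working, try something else.
                    idx += 1
                    history = [score]
                else:
                    break  # out of ideas: ship what we have
    return score, idx + 1


def run_goal(subtasks: Dict[str, List[Strategy]], h: Heuristics) -> Dict[str, float]:
    """Goal decomposition, reduced to a dict of named subtasks run in order."""
    return {name: run_subtask(strats, h)[0] for name, strats in subtasks.items()}


if __name__ == "__main__":
    slow = lambda s: s + 0.005           # barely moving
    fast = lambda s: min(1.0, s + 0.25)  # a better approach
    print(run_subtask([slow, fast], Heuristics()))  # → (1.0, 2)
```

The slow strategy is abandoned after three steps of low momentum, and the loop quits as soon as the score crosses the "good enough" line instead of polishing toward a perfect 1.0 forever.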

-

Pro Tip: Use your LLM not just to write code, but to stress-test it. Auto-generate thorough tests (unit, integration, and edge cases) and rerun them frequently. Trust comes from verification.
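As an illustration of the kind of suite worth asking an LLM to generate, here is a small Python `unittest` module for a hypothetical `clamp` function (not from the original post, and not the output of any particular model). It mixes ordinary unit tests, edge cases (boundaries, a degenerate range, invalid arguments, infinity), and a seeded randomized property check, so reruns are fast and reproducible. Integration tests depend on the surrounding system and are left out.

```python
import random
import unittest


def clamp(x, lo, hi):
    """Hypothetical function under test: limit x to the closed range [lo, hi]."""
    if lo > hi:
        raise ValueError("lo must not exceed hi")
    return max(lo, min(x, hi))


class ClampTests(unittest.TestCase):
    # Unit tests: ordinary inputs
    def test_inside_range(self):
        self.assertEqual(clamp(5, 0, 10), 5)

    def test_below_and_above(self):
        self.assertEqual(clamp(-3, 0, 10), 0)
        self.assertEqual(clamp(42, 0, 10), 10)

    # Edge cases: boundaries, degenerate range, invalid input, infinity
    def test_boundaries(self):
        self.assertEqual(clamp(0, 0, 10), 0)
        self.assertEqual(clamp(10, 0, 10), 10)

    def test_degenerate_range(self):
        self.assertEqual(clamp(7, 3, 3), 3)

    def test_inverted_range_raises(self):
        with self.assertRaises(ValueError):
            clamp(1, 10, 0)

    def test_infinity(self):
        self.assertEqual(clamp(float("inf"), 0.0, 1.0), 1.0)

    # Property test: invariants must hold for many random inputs
    def test_random_properties(self):
        rng = random.Random(1234)  # fixed seed so every rerun sees the same cases
        for _ in range(1000):
            lo, hi = sorted(rng.uniform(-1e6, 1e6) for _ in range(2))
            x = rng.uniform(-2e6, 2e6)
            y = clamp(x, lo, hi)
            self.assertTrue(lo <= y <= hi)
            if lo <= x <= hi:
                self.assertEqual(y, x)  # values already in range pass through unchanged


def run_suite():
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ClampTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_suite()
```

The property test is the part that scales best: rather than listing cases by hand, it states what must always be true and lets random inputs look for counterexamples.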

-

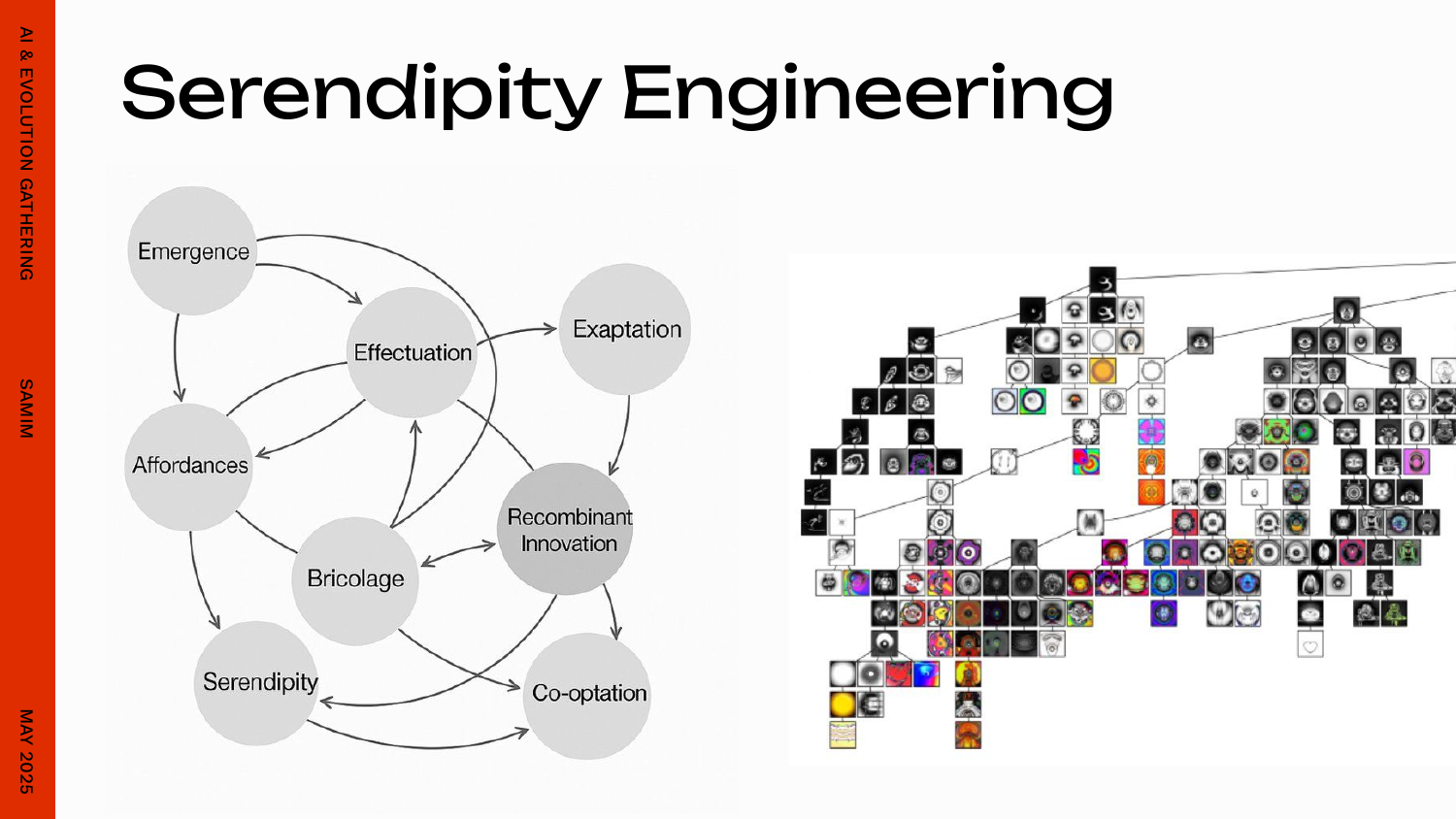

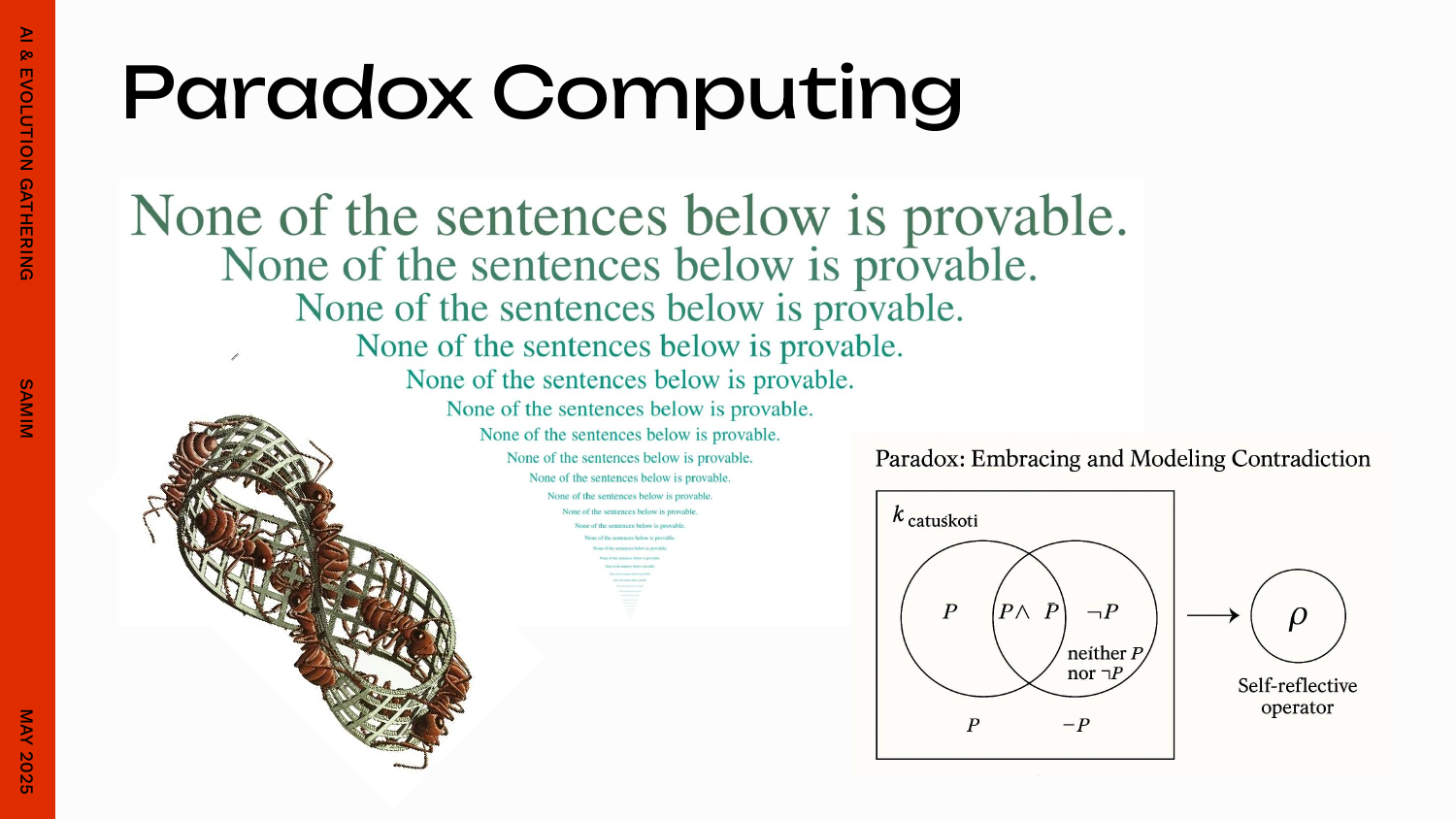

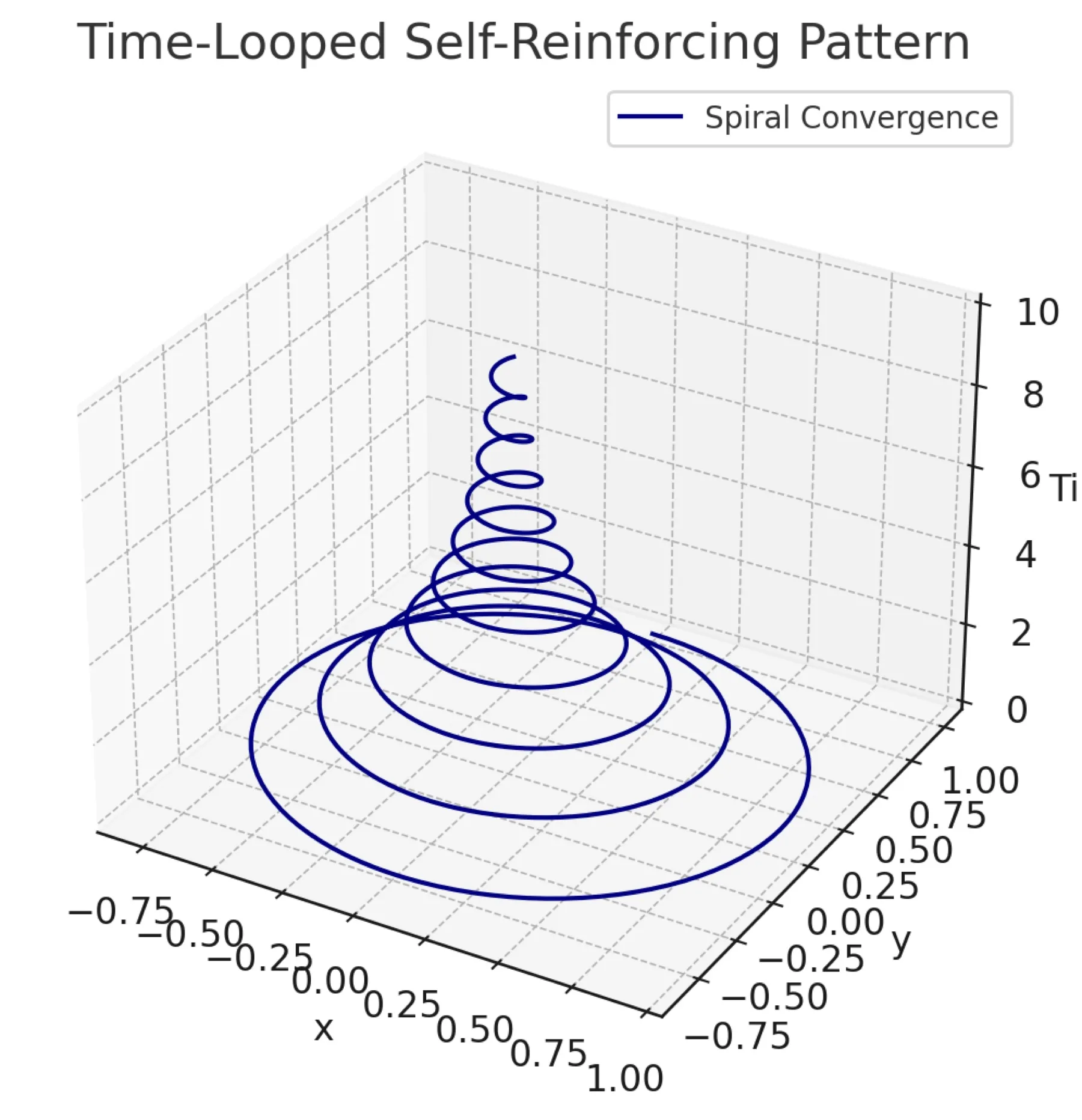

Theory of “Constructive Instability” - entropy modulation as a learning signal.

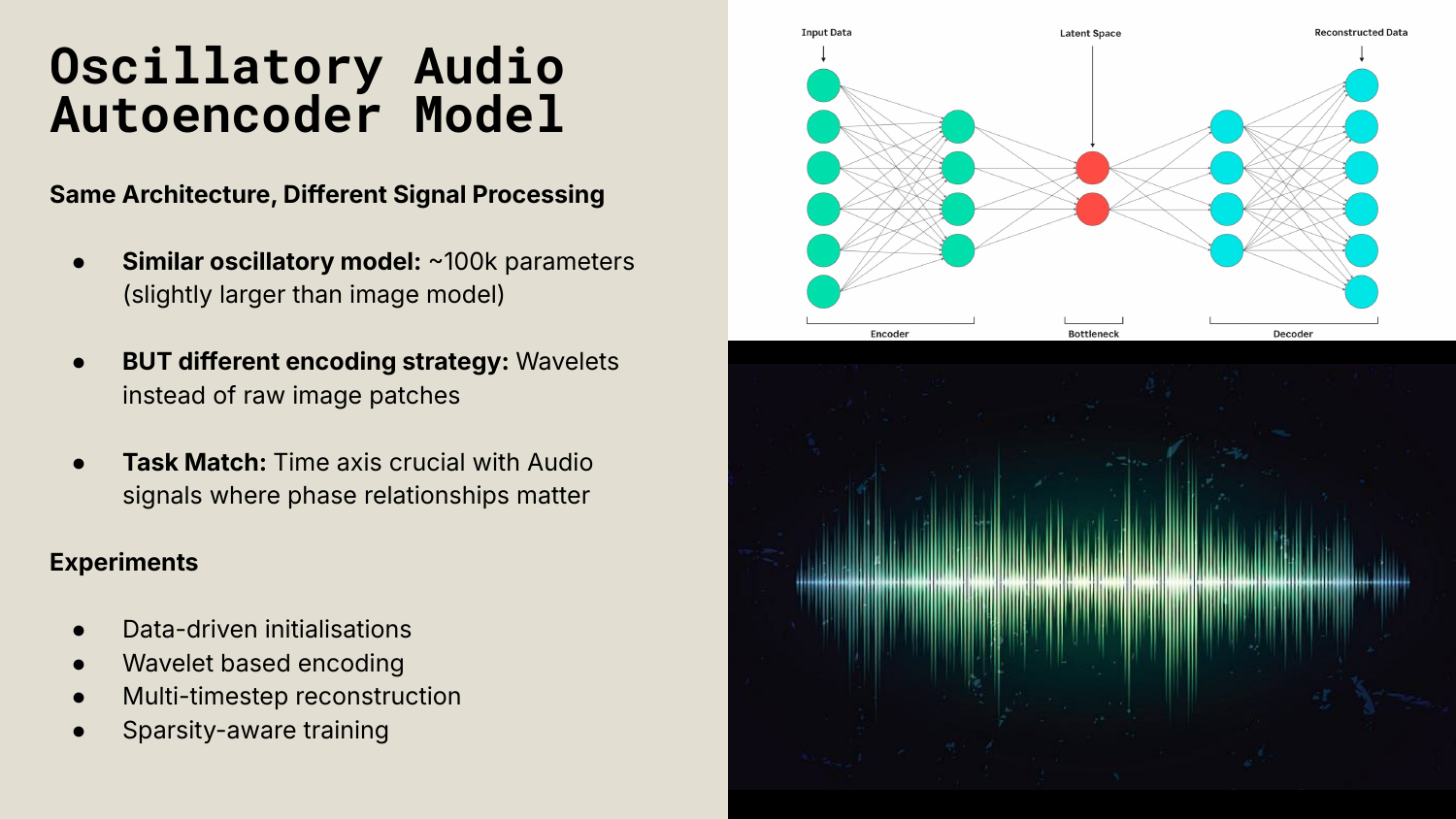

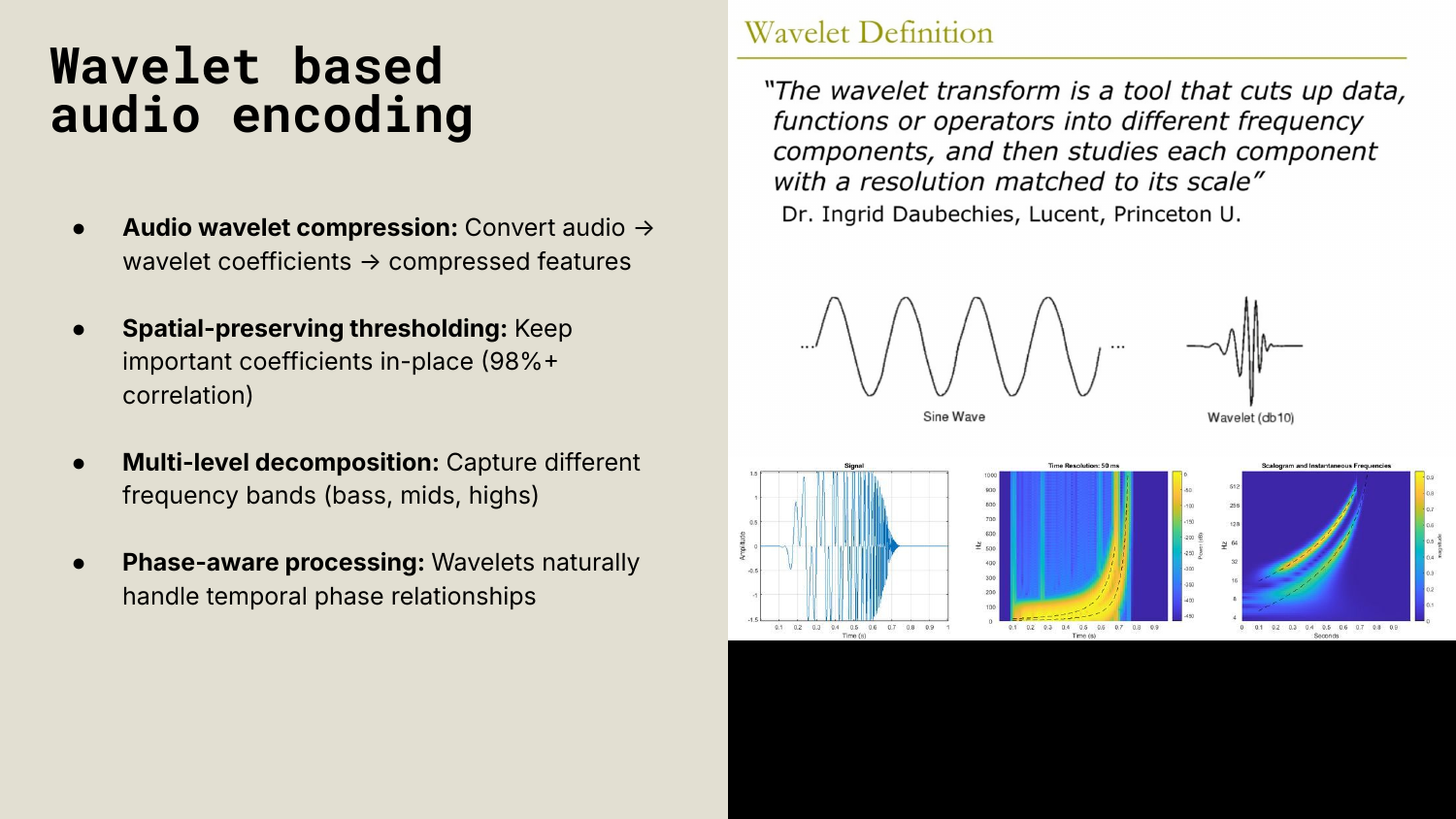

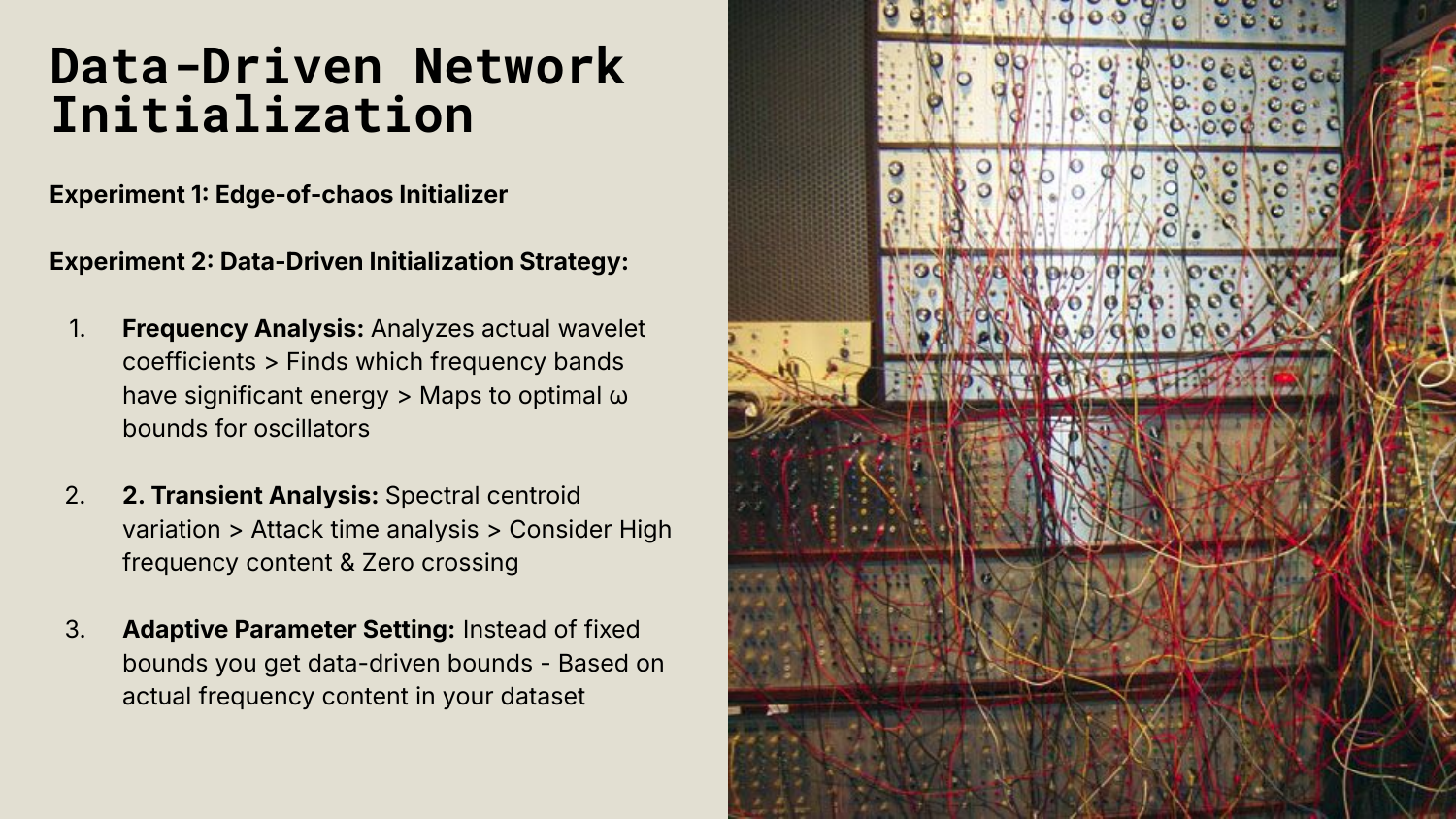

Just engineered the first example of a phase-amplified, wavelet-domain, oscillator-resonant autoencoder with full self-amplifying behavior — and it converges.

Entropy is not decay. It’s a recursively regulated signal that can drive complexity, if you let the system use it as feedback.
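One hedged way to read "entropy as feedback" in code: the Python sketch below measures the Shannon entropy of a temperature-scaled softmax and nudges the temperature until the entropy matches a target, a simple closed-loop controller. This is a swapped-in, generic technique (entropy targeting, similar in spirit to the automatic entropy tuning in Soft Actor-Critic), not the Constructive Instability theory or the autoencoder described above; `regulate_temperature`, `gain`, and the 1.0-nat target are illustrative assumptions.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float) -> List[float]:
    """Temperature-scaled softmax (max-subtracted for numerical stability)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(p: List[float]) -> float:
    """Shannon entropy in nats."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def regulate_temperature(logits, target_entropy, temperature=1.0, gain=0.5, steps=200):
    """Feedback loop: entropy below target -> raise temperature (spread the distribution);
    entropy above target -> lower it. Updating in log space keeps temperature > 0."""
    for _ in range(steps):
        error = target_entropy - entropy(softmax(logits, temperature))
        temperature *= math.exp(gain * error)
    return temperature


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1, -1.0]
    t = regulate_temperature(logits, target_entropy=1.0)
    print(t, entropy(softmax(logits, t)))  # entropy should settle close to the 1.0-nat target
```

Because softmax entropy rises monotonically with temperature for non-constant logits, the loop has a single fixed point; the target must lie between 0 and ln(number of logits) to be reachable.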

-

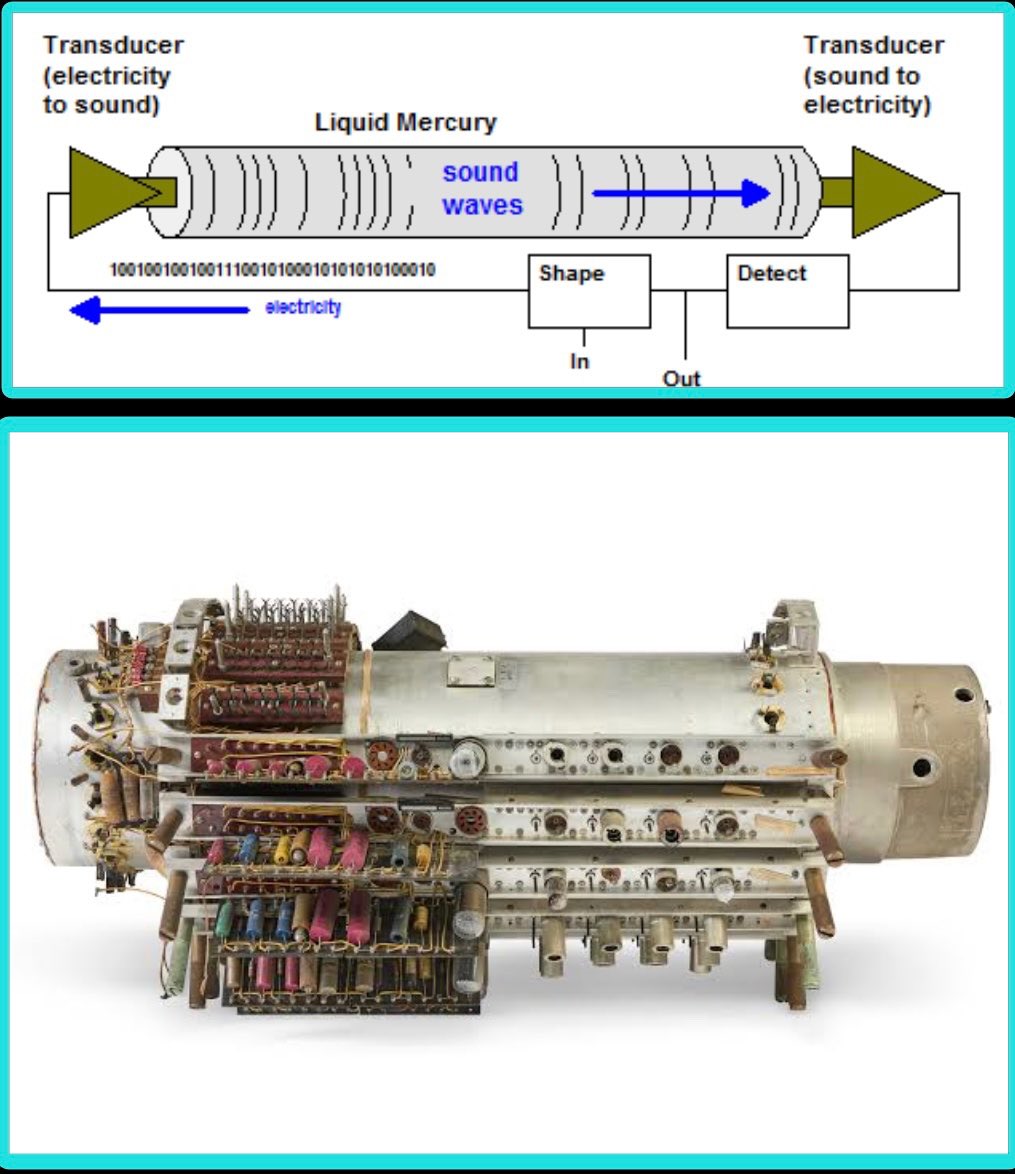

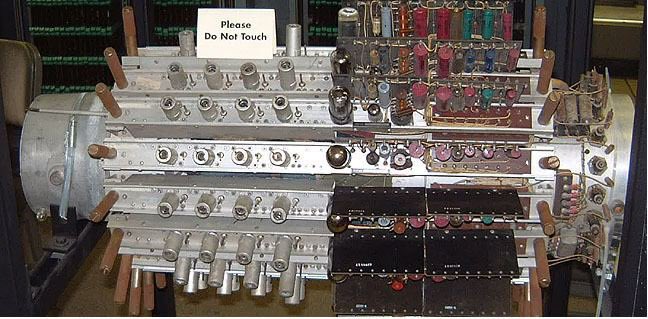

Delay-line memory was an early computer memory technology that stored data as sound waves. It was developed in the mid-1940s by J. Presper Eckert. Data was represented as a series of acoustic pulses traveling through a medium such as a tube of mercury or a magnetostrictive wire. A transducer at one end converted electrical pulses into sound waves that traveled down the line; at the far end another transducer detected them, and the signal was amplified, reshaped, and fed back into the start, so the data circulated continuously without losing strength. The presence or absence of a pulse in a given time slot represented a binary 1 or 0.

Machines that used it included the EDVAC (Electronic Discrete Variable Automatic Computer) and the UNIVAC I, one of the first commercially available computers. Delay-line memory had major limitations: access was sequential, so the machine had to wait for a given bit to circulate back to the detector, and the stored signal was vulnerable to degradation and noise. The technology grew out of World War II radar research, where delay lines held the echoes from one radar pulse so they could be compared with the next, cancelling out reflections from stationary objects.
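The recirculate-and-regenerate cycle is easy to simulate. The Python sketch below is a toy model, not any real machine: a `deque` stands in for the line, each tick detects the pulse at the far end, thresholds it back to a clean 0 or 1, and re-injects it with some attenuation and noise. The `DelayLine` class, the attenuation of 0.7, and the noise of ±0.1 are illustrative assumptions.

```python
import random
from collections import deque


class DelayLine:
    """Toy recirculating delay-line memory (illustrative model, not any real machine).

    slots[0] is the pulse currently arriving at the detector. On every tick it is
    detected, regenerated to a clean 0/1 (or overwritten), and re-injected at the
    input end; the trip through the medium attenuates it and adds noise.
    """

    def __init__(self, n_bits: int, attenuation: float = 0.7, noise: float = 0.1, seed: int = 0):
        self.n_bits = n_bits
        self.slots = deque([0.0] * n_bits)  # analogue pulse amplitudes in flight
        self.attenuation = attenuation      # assumed fraction of amplitude surviving one pass
        self.noise = noise                  # assumed maximum additive noise per pass
        self.rng = random.Random(seed)
        self.head = 0                       # address of the pulse at the detector

    def _tick(self, write_bit=None) -> int:
        amplitude = self.slots.popleft()
        bit = 1 if amplitude > self.attenuation / 2 else 0  # detect and regenerate
        if write_bit is not None:
            bit = write_bit                                  # overwrite this slot
        degraded = bit * self.attenuation + self.rng.uniform(-self.noise, self.noise)
        self.slots.append(degraded)                          # re-inject at the input end
        self.head = (self.head + 1) % self.n_bits
        return bit

    def circulate(self, ticks: int) -> None:
        """Let the stored data go round the line without reading or writing."""
        for _ in range(ticks):
            self._tick()

    def _wait_for(self, address: int) -> int:
        """Serial access: idle until `address` reaches the detector; return ticks waited."""
        waited = 0
        while self.head != address:
            self._tick()
            waited += 1
        return waited

    def write(self, address: int, bit: int) -> None:
        self._wait_for(address)
        self._tick(write_bit=bit)

    def read(self, address: int) -> int:
        self._wait_for(address)
        return self._tick()


if __name__ == "__main__":
    mem = DelayLine(8)
    pattern = [1, 0, 1, 1, 0, 0, 1, 0]
    for addr, b in enumerate(pattern):
        mem.write(addr, b)
    mem.circulate(1000)                     # 125 full trips round the line
    print([mem.read(a) for a in range(8)])  # → [1, 0, 1, 1, 0, 0, 1, 0]
```

With these assumed values a 1 arrives with amplitude 0.6 to 0.8 and a 0 with -0.1 to 0.1, so the 0.35 threshold always recovers the bit; raising `noise` above 0.35 lets bits flip. `_wait_for` shows the latency cost: reading an address that has just passed the detector means waiting almost a full circulation.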

-

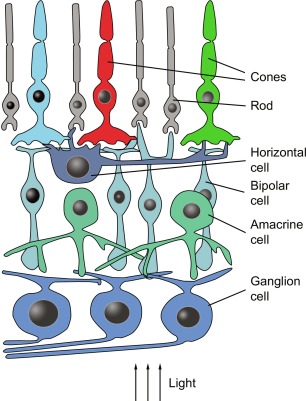

Downsampling in the human visual system:

Retina: ~130 million photoreceptors (per eye)

↓ (huge compression)

Optic nerve: ~1 million ganglion-cell axons (per eye)

↓ (further processing)

V1 cortex: ~280 million neurons (but organized hierarchically)

Plus: Your fovea (central vision) has high resolution, but peripheral vision is heavily downsampled. Your brain reconstructs a "full resolution" world from mostly low-res input.
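The arithmetic is easy to check: 130 million photoreceptors feeding about 1 million ganglion-cell axons works out to roughly 130 photoreceptors per output fiber on average. The Python sketch below computes that ratio from the figures above and adds a toy 1-D "foveated" pooling in which the averaging window widens with distance from the center, loosely mimicking how receptive fields grow with eccentricity. `foveate_1d`, the linear growth rule, and `growth=0.25` are illustrative assumptions, not a model of the real retina.

```python
from typing import List


def compression_ratio(photoreceptors: float = 130e6, ganglion_cells: float = 1e6) -> float:
    """Photoreceptors per ganglion-cell axon, using the counts quoted above."""
    return photoreceptors / ganglion_cells


def foveate_1d(signal: List[float], growth: float = 0.25) -> List[float]:
    """Average-pool a 1-D signal with windows that widen with distance from the center.

    Window width = 1 + floor(growth * eccentricity), so the center ("fovea") keeps
    full resolution and the periphery is pooled ever more coarsely.
    """
    center = len(signal) // 2
    right: List[float] = []
    i = center
    while i < len(signal):                     # walk outward to the right
        width = 1 + int(growth * (i - center))
        window = signal[i:i + width]
        right.append(sum(window) / len(window))
        i += width
    left: List[float] = []
    i = center
    while i > 0:                               # walk outward to the left
        width = 1 + int(growth * (center - i))
        start = max(0, i - width)
        window = signal[start:i]
        left.append(sum(window) / len(window))
        i = start
    return left[::-1] + right                  # left periphery, fovea, right periphery


if __name__ == "__main__":
    print(compression_ratio())  # 130.0
    row = [float(x) for x in range(1000)]
    samples = foveate_1d(row)
    print(len(row), "->", len(samples))
```

Samples next to the center are the original pixel values, while the edges collapse dozens of pixels into one average, a crude 1-D picture of high-resolution central vision with a heavily downsampled periphery.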