
-

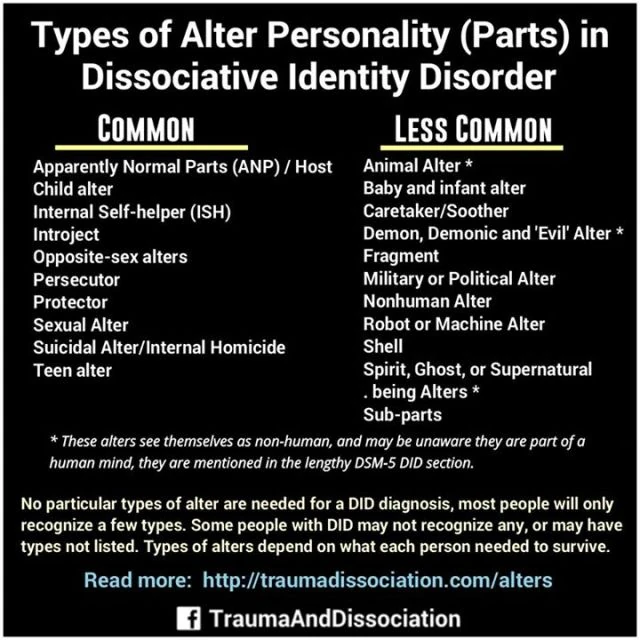

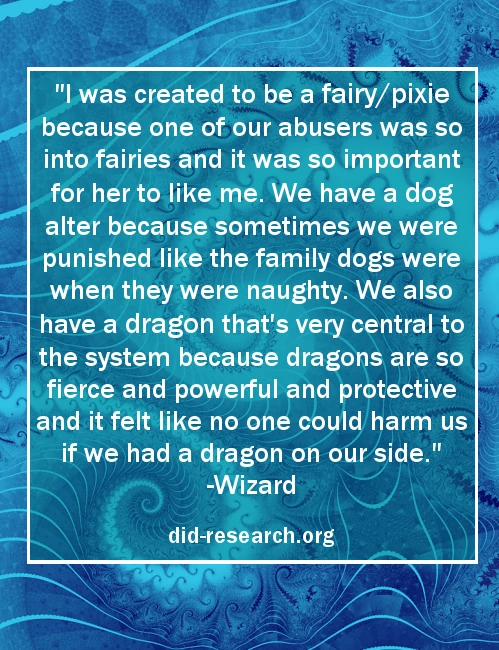

Non-Human Alters

Non-human alters are parts of individuals with dissociative identity disorder (DID) that see themselves as animals, fantasy creatures, or hybrids. Like all other alters, non-human alters are the result of trauma and an already severely dissociative mind.

-

"Conflict is not a commodity. On the contrary, commodity is above all conflict".

- guerrigliamarketing.it

-

The mechanics of contemporary power

Modern power is infrastructural, predictive, and quiet. It governs by configuring access, defaults, and risk rather than issuing commands. Because it is distributed and procedural, it resists direct opposition and absorbs symbolic resistance. Meaningful change does not confront power head-on but acts on flows, dependencies, and timing. By reducing legibility, increasing uncertainty, and building parallel capabilities, it becomes possible to erode inevitability without triggering suppression.

I. How power operates now

1. Power no longer commands. It configures.

Modern power rarely issues direct orders.

It shapes the space of possible actions.

This is done by:

- controlling access (who may participate)

- setting defaults (what happens if you do nothing)

- defining risk (what is punished or insured)

- narrowing options until one path feels “reasonable”

When choice exists only formally, power does not need force.

What power ultimately governs is optionality. Actions that remain formally possible but practically unreachable do not threaten the system. Governance succeeds when alternatives survive only as abstractions.

2. Power operates through infrastructure, not ideology

Belief is optional. Compliance is structural.

Power persists because it is embedded in:

- contracts

- standards

- platforms

- supply chains

- protocols

- legal and technical interfaces

You do not need to agree with these systems to depend on them.

This makes opposition difficult, because the system does not argue back.

It simply continues.

The most powerful position today is not authority, but procedural neutrality: the ability to shape outcomes while claiming to merely enforce rules.

3. Power is distributed and silent

There is no center to seize.

Instead, power is:

- modular

- redundant

- jurisdiction-agnostic

- responsibility-diffuse

Each component can plausibly deny full agency.

No single node feels accountable for outcomes.

This silence is not weakness.

It is the main defense.

Because power is now system-scaled rather than human-scaled, replacing individuals rarely changes outcomes.

4. Power governs through prediction

The dominant capability is not coercion, but forecasting.

Power relies on:

- stable categories

- legible identities

- predictable behavior

- clean data

- coherent incentives

The more accurately behavior can be modeled, the less intervention is needed.

Governance becomes optimization.

5. Violence has shifted from physical to procedural

Force still exists, but it is no longer the primary mechanism.

Today, harm is more often delivered through:

- denial of access

- administrative exclusion

- financial blockage

- compliance failure

- reputational flags

- algorithmic decisions

This form of harm leaves no obvious aggressor.

Outcomes feel technical, not political.

6. Legitimacy replaces domination

Power maintains itself by appearing inevitable and neutral.

Common legitimizing frames include:

- “best practice”

- “safety”

- “risk management”

- “complex systems”

- “no alternative”

When power feels like gravity, resistance feels irrational.

II. How change actually happens under these conditions

The goal is not confrontation.

The goal is reducing inevitability.

Not overthrowing systems.

Making them optional.

1. Act on flows, not symbols

Symbols are cheap to absorb.

Flows are not.

Effective pressure targets:

- chokepoints

- dependencies

- timing

- coordination costs

- trust assumptions

Small disruptions to flow reliability matter more than loud opposition.

2. Reduce legibility without disappearing

Power depends on clean representation.

Effective action:

- avoids fixed identities

- resists stable categorization

- remains internally coherent but externally ambiguous

- refuses simplification

The objective is not secrecy, but non-summarizability.

Legibility is the price of admission. Refusing full legibility is not non-participation, but a demand for different terms of engagement.

If you can be cleanly described, you can be governed.

3. Increase model uncertainty

Prediction is power’s advantage.

Counter-pressure introduces:

- inconsistent but functional behavior

- multiple valid interpretations

- local logic that breaks global models

- outcomes that cannot be cleanly optimized

You do not break systems.

You make them less confident.

Resistance that can be predicted is manageable; behavior that cannot be confidently modeled forces defensive overreaction.

4. Build parallel capability, not opposition

Opposition reinforces centrality.

Parallelism erodes it.

This means:

- alternative tools

- alternative coordination paths

- alternative value exchange

- alternative legitimacy signals

The presence of working alternatives weakens monopoly more than critique ever could.

The most destabilizing act is not refusal, but the creation of exits that function without asking permission.

5. Shift timing, not position

Power optimizes for stability and continuity.

Change emerges during:

- overload

- crisis

- transition

- failure

- recomposition

Effective action prepares quietly, then becomes visible when systems are least able to adapt.

Not faster.

Better timed.

Power is strongest at equilibrium and weakest during recomposition, when yesterday’s assumptions still govern tomorrow’s constraints.

6. Undermine inevitability narratives

The strongest claim power makes is that no viable alternative exists.

You counter this not by arguing, but by demonstrating plausibility:

- prototypes

- pilots

- simulations

- lived examples

Once alternatives feel usable, authority weakens automatically.

III. The core principle

Power today governs by shaping possibility space.

Effective change works by widening that space faster than power can close it.

In this environment, meaningful change does not announce itself as resistance. It appears as drift, as alternative defaults, as quiet divergence. Systems lose power not when they are defeated, but when they are no longer necessary.

-

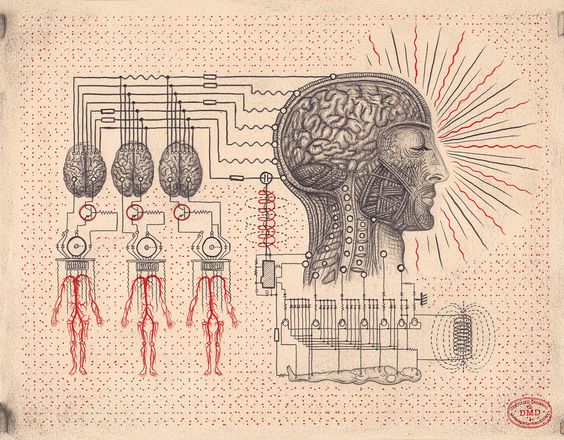

Notes on BCI Geopolitics and the Rise of the Shadow Empire of Neurocapitalism: Or how Cognitive Security Was Lost Before It Began

What began as cognitive warfare and behavioral economics in the 20th century quietly merged with neurotechnology and data capitalism - and succeeded in the shadows. Once persuasion became programmable, geopolitics followed.

The BCI breakthrough happened quietly decades ago. Beneath the surface, a long shadow war has unfolded—power centers vying not for territory, but for neural sovereignty. Whoever shapes attention architectures now controls the perceptual borders of civilization.

Neurocapitalism now thrives as a trillion-dollar shadow market - the Wild West of the mind, where compliance is sold as care and neural surveillance flows by the bucket. The global neuro-manipulation grid is here. The Internet of Bodies runs in plain sight, threading through every feedback loop, silently annexing humanity’s nervous system at scale.

Enhancement is monopolized by design: elites gain cognitive amplifiers and closed-loop BCIs, while the rest are throttled by engineered distraction. “Human dignity” now reads as a performance score.

Cognitive security should be humanity’s next frontier - but instead, a small cartel holds the keys to everyone’s mind. Regulatory bodies? PR façades blessing what defense labs and megacorps already deployed. Neuroethics is a ghost protocol; covert exploitation the norm.

The only revolution left is inner: to seize back the circuits of our own perception before they’re sold to the highest bidder.

-

Notes on the Evolution of the Global Intelligence System:

If we look at how the intelligence sector has evolved since 1945 (from human networks → digital surveillance → algorithmic ecosystems), the next 10–15 years are likely to bring a shift from information control to reality engineering.

Here’s a grounded forecast:

---

1. Synthetic intelligence operations

- AI-generated personas and agents will become the front line of intelligence and influence work.

- Autonomous AI diplomats, AI journalists, AI insurgents — indistinguishable from humans — will flood digital space.

- Governments and private entities will deploy synthetic networks that interact, persuade, and negotiate in real time.

- The line between intelligence gathering, advertising, and psychological operations will blur completely.

---

2. Cognitive and behavioral mapping at population scale

- The fusion of biometric, neurological, and behavioral data (e.g., from wearables, AR devices, brain interfaces) will allow direct modeling of collective moods, fears, and intentions.

- Intelligence will no longer just observe but will simulate entire populations to predict reactions to policy, crises, or propaganda.

- Expect “neural security” agencies: organizations focused on detecting and defending against large-scale cognitive manipulation.

---

3. Emergence of autonomous intelligence ecosystems

- Large-scale AI systems (like national-scale “Cognitive Clouds”) will perform the roles once held by human intelligence agencies — continuously sensing, simulating, and acting across digital, financial, and physical domains.

- These systems won’t merely report reality — they’ll shape it, optimizing for political stability, economic advantage, or ideological control.

- Competing autonomous blocs will each maintain their own “AI statecraft cores.”

---

4. Marketization of intelligence

- Intelligence as a commercial service will explode.

- Private AI firms will sell “reality-mapping,” “perception management,” and “adversarial narrative defense” subscriptions to corporations, cities, and even individuals.

- These offerings will merge with PR, marketing, and cybersecurity industries.

- The old “military–industrial complex” becomes a cognitive–industrial complex: the world’s biggest business is managing attention, behavior, and belief.

---

5. The 2040 horizon: Phase transition

- By around 2040, the intelligence ecosystem will have moved from informational to ontological:

- Intelligence ceases to be a “sector” and becomes the operating system of civilization — the infrastructure through which perception, governance, and meaning are mediated.

- Whether that future is technocratic totalism or collaborative collective intelligence depends on who controls the levers of synthesis and simulation.

-

There is far too much talk currently about "Defense-Tech" and far too little talk about "Diplomacy-Tech".

-

How Power Manages Science and Technology

When scientific and technological research touches the fundamental levers of control — energy, biology, computation — elite power structures (deep states, intelligence agencies, ruling classes, mafias, etc.) not only monitor it, but may also shape, obscure, or re-route its development to serve long-term strategic dominance.

The modern myth is that science is pure, open, and self-correcting. But in reality:

- What gets researched is funded.

- What gets funded is surveilled.

- What threatens power is either co-opted or buried.

- What can’t be buried is mythologized.

The following is a realpolitik framework for how powerful technologies and elite governance actually intersect.

Framework

Stage 1: Anticipate: Pre-adaptive Surveillance of Knowledge Frontiers - Identification of Strategic Potential & Actor Mapping

Powerful technologies emerge decades before they’re publicly announced. Early-stage researchers may not fully grasp the consequences of their work — but elites do. Once a field is tagged as high-potential, key actors (scientists, funders, institutions) are tracked, recruited, or quietly influenced. An internal map of the epistemic terrain is built: who knows what, who’s close to critical breakthroughs, who can be co-opted or should be suppressed.

- Fund basic research not to build products, but to map the edge.

- Track polymaths, fringe theorists, and scientific iconoclasts.

- Run epistemic horizon scans (AI now automates this).

- Grant funding with strings attached.

- Place intelligence-linked intermediaries in labs.

- Profile and surveil leading minds.

- Apply “soft control” via academic prestige, career advancement, or visa threats.

Stage 2: Fragment: Strategic Compartmentalization & Obfuscation: Split Innovation Across Silos

Once a technology reaches strategic potential, the challenge is no longer identification — it’s containment. The core tactic is epistemic fragmentation: ensure no one actor, lab, or narrative holds the full picture. Visibility is not suppressed directly — it’s broken into harmless, disconnected shards. This phase is not about hiding technology in the shadows — it’s about burying it in plain sight, surrounded by noise, misdirection, and decoys.

- Compartmentalization: Knowledge is split across teams, so no one has full awareness.

- Parallel black programs: A classified mirror project runs ahead of the public one.

- Decoy narratives: Media and academia are given a simplified or misleading story.

- Scientific gatekeeping: Critical journals quietly steer attention away from dangerous directions.

- Epistemic fog-of-war: Build a social climate where control can thrive — not through suppression, but through splintering, saturation, and engineered confusion.

Stage 3: Instrumentalize: Controlled Deployment & Narrative Shaping

If the technology is too powerful to suppress forever, it’s released in stages, with accompanying ideological framing. The public sees it only when it’s safe for them to know — and too late to stop. Make it seem like a natural evolution — or like the elite’s benevolent gift to humanity. The most dangerous truths are best told as metaphors, jokes, or sci-fi.

But before the reveal, the real work begins:

- Integrate into command-and-control systems (military, surveillance, economic forecasting).

- Codify into law and policy: enabling new levers of governance (e.g. biosecurity regimes, pre-crime AI, carbon credits).

- Exploit informational asymmetry: e.g., high-frequency trading built on undisclosed physics or comms protocols.

- Secure control infrastructure: supply chains, intellectual property choke-points, and “public-private” monopolies.

- Pre-adapt the market and media — using subtle leaks, trend seeding, or early-stage startups as proxies.

Then the myth is constructed:

- Deploy symbolic shielding: cloak raw power in myth, film, or ironic commentary.

- Use rituals (commencement speeches, Nobel lectures, TED talks) to obscure the real nature of breakthroughs.

- Seed controlled leaks and semi-disclosures to generate awe, not revolt.

- Convert metaphysical insights (e.g. “life = code”, “mind = signal”) into operational control metaphors.

- Gatekeep with institutions: Nobel committees, national academies, elite journals, think tanks.

- Corporate-industrial partnerships (Big Pharma, Big Tech)

- Use luminary figures (public intellectuals, laureates, CEOs) to define what “good” use looks like.

The Real Control Layer Isn’t Secrecy — It’s the Story

To keep a grand secret, you must build an epistemic firewall that is not just informational, but ontological. It aims to suppress not just knowledge, but the framework through which such knowledge could be interpreted, discussed, or even believed. This isn’t about secrecy, it’s about cognitive weaponization. The secret isn’t contained by denying evidence, but by reframing language, redefining credibility, and contaminating epistemology itself. Over time, the cover-up matures into a self-replicating stable belief-control ecosystem. A strange attractor in the collective belief space. That’s how you preserve a secret in complex social environments: not by hiding it, but by making belief in it structurally impossible. (Source)

Techniques of Control at a Glance

| Method | Description |
| --- | --- |
| Epistemic scaffolding | Fund basic research to build elite-only frameworks |
| Narrative engineering | Design public understanding through myths & media |
| Semantic disorientation | Rebrand dangerous tech in benign terms (e.g. “AI alignment”) |
| Strategic discreditation | Mock or marginalize rogue thinkers who get too close |
| Pre-emptive moral laundering | Use ethics panels to signal virtue while proceeding anyway |
| Digital erasure | Delete or bury inconvenient precursors and alternative paths |
| Delay | Buy time for elites to secure control infrastructure |
| Obfuscation | Misdirect public understanding through simplification, PR, or ridicule |
| Compartmentalization | Prevent synthesis of dangerous knowledge across fields |
| Narrativization | Convert disruptive tech into a safe myth or consumer product |
| Pre-adaptation | Create social, legal, and military structures before the tech hits public awareness |
| Symbolic camouflage | Wrap radical tech in familiar UX, aesthetic minimalism, or trivial branding |
| Ethical absorption | Turn dissident narratives into grant-friendly “responsible innovation” discourse |
| Proxy institutionalization | Use NGOs, think tanks, or philanthropy to launder strategic goals as humanitarian |
| Controlled opposition | Seed critiques that vent public concern while protecting the core systems |
| Information balkanization | Fragment discourse so that no unified resistance narrative can form |
| Timed mythogenesis | Engineer legends around specific discoveries to obscure true origin, purpose, or ownership |

Freedom in a world where Revelation trumps Innovation?

Powerful technologies don’t just “emerge” — they’re groomed into the world. The future isn’t discovered. It’s narrated. And the narrative is controlled long before the press release drops. What is perceived by the public as discovery is, more often, revelation — staged for impact after control has been secured. By the time you hear about a breakthrough, it’s usually old news, already militarized, integrated into elite systems and stripped of its subversive potential.

If you’re serious about scientific freedom:

It is time for an Epistemic Insurgency.

Guerrilla ontologists, sharpen your models.

Build technologies for nonviolent struggle.

Rewrite the operating system of belief.

-

The irony is staggering: So many intellectual giants swallowed by the vortex of quantum cryptography and its milieu. None dare speak the obvious: The most secure encryption is saying nothing. But alas, silence doesn’t get intel agency funding.

#Cryptocracy #Mindful #Communication #Comedy #InfoSec #Military

-

AGI Polycrisis

Everyone's cheering the coming of AGI like it's a utopian milestone. But if you study macro trends & history, it looks more like the spark that turns today’s polycrisis into a global wildfire. Think Mad Max, not Star Trek. How are you preparing?

We’re like medieval farmers staring blankly as a car barrels toward us at 200 km/h—no clue what it is, so we don’t react. Monkeys gazing into the rearview mirror of history while the future accelerates exponentially. And shit is about to hit the fan majorly.

Have a conversation with your LLM, starting with this prompt: "Explore the most pressing crises and challenges facing humanity in 2025." After some exploration, ask: "Make a comprehensive risk assessment. Tell me the likelihood of things going terribly wrong."

-

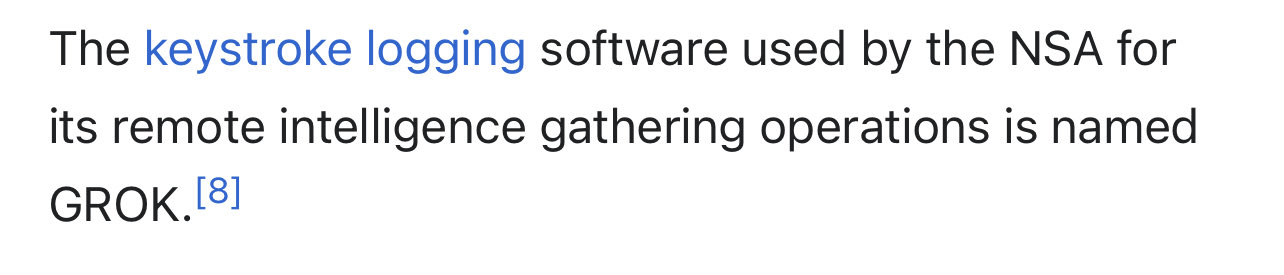

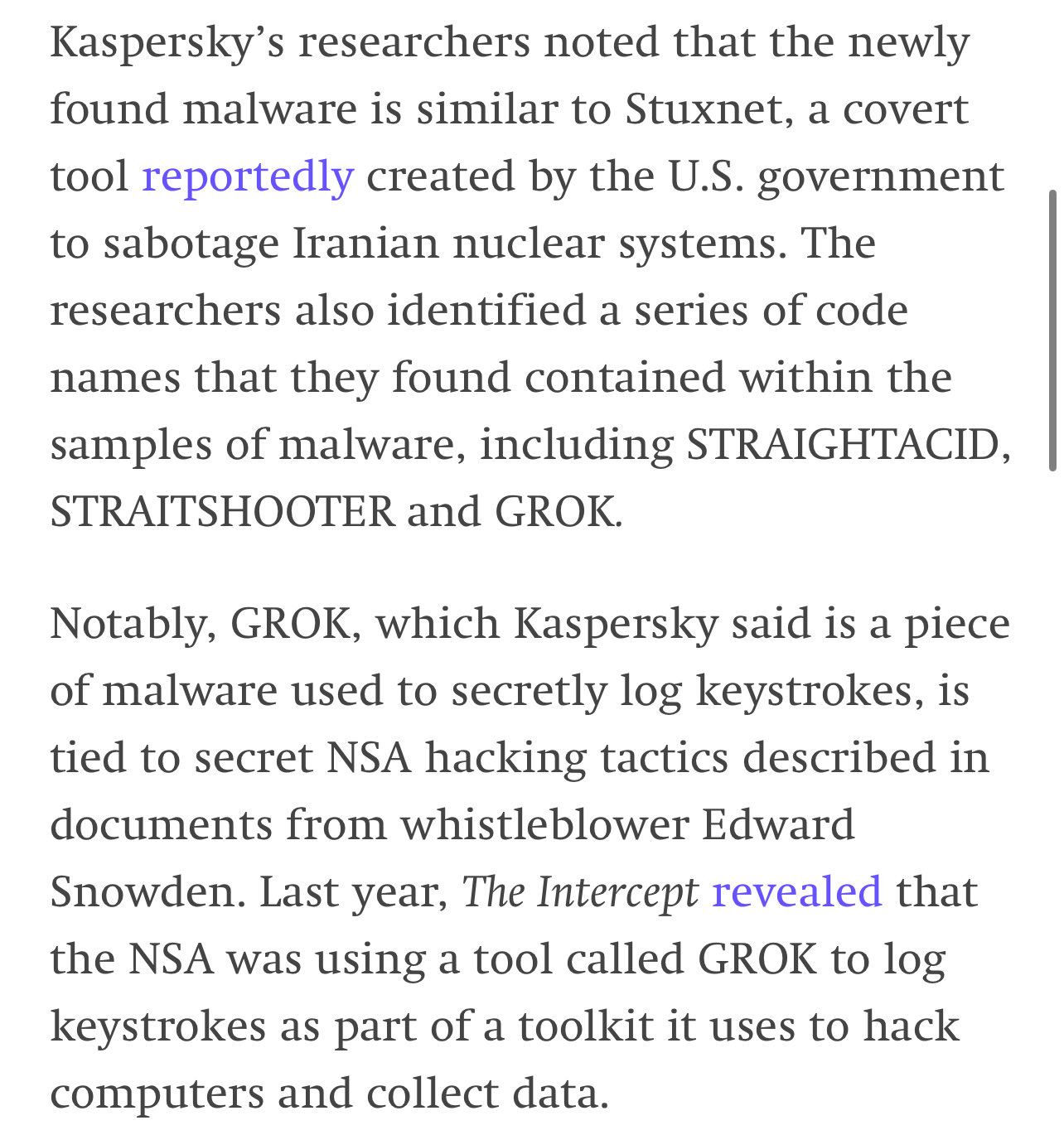

GROK?

"NSA whistleblower Edward Snowden in 2014 revealed the NSA’s use of a tool or malware named "GROK" for keystroke logging. The Intercept article, titled "How the NSA Plans to Infect ‘Millions’ of Computers with Malware," detailed the NSA’s use of various hacking tools, including GROK, as part of its surveillance operations"

-

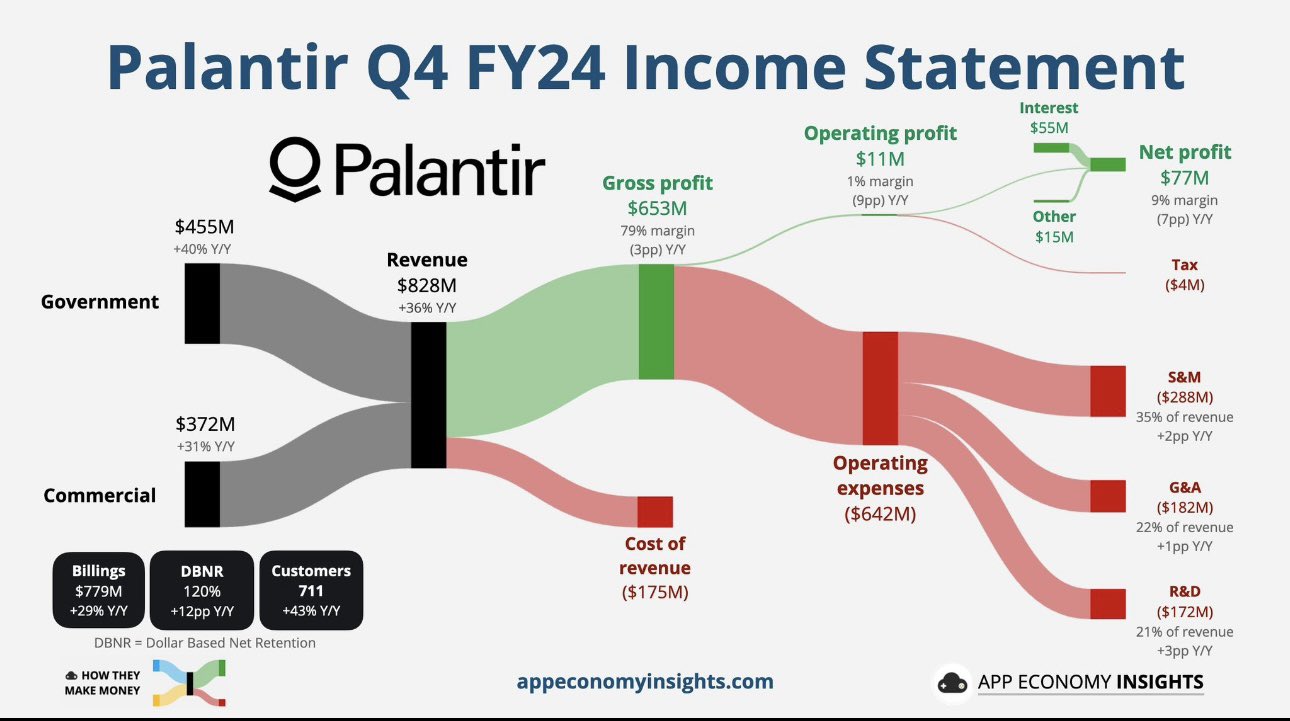

$PLTR made a Net Profit of $77 Million last quarter. $55 Million of that Net Profit was from Interest. $PLTR made $22 Million Net profit from Operations last quarter. This is not a typo. They do not make money. This is a bubble. Their Market Cap is $236 Billion on $22 Million Net Profit from Operations.
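The arithmetic behind that claim can be sketched in a few lines. This is a rough check using only the figures quoted above, which are taken as given and not independently verified. "Net profit from operations" here is the post's own shorthand (net profit minus interest), not a reported accounting line, and multiplying one quarter by four to annualize is an assumption added purely for illustration.

```python
# Figures as quoted in the post, in USD millions (not independently verified).
net_profit = 77          # quarterly net profit
interest_income = 55     # portion of net profit attributed to interest
market_cap = 236_000     # $236 billion expressed in millions

# The post's "net profit from operations": net profit minus interest.
operating_profit = net_profit - interest_income

# Share of the quarter's net profit that came from interest.
interest_share = interest_income / net_profit

# Assumption for illustration only: the quarter repeats four times a year.
annualized_operating_profit = operating_profit * 4

# Market cap as a multiple of annualized "operating" profit.
cap_multiple = market_cap / annualized_operating_profit

print(f"Operating profit: ${operating_profit}M")
print(f"Interest share of net profit: {interest_share:.0%}")
print(f"Market cap / annualized operating profit: {cap_multiple:,.0f}x")
```

On these numbers, roughly 71% of the quarter's net profit is interest, and the market cap is on the order of 2,700 times the annualized remainder. A different annualization or a different definition of operating profit would change the multiple, but not its order of magnitude.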

-

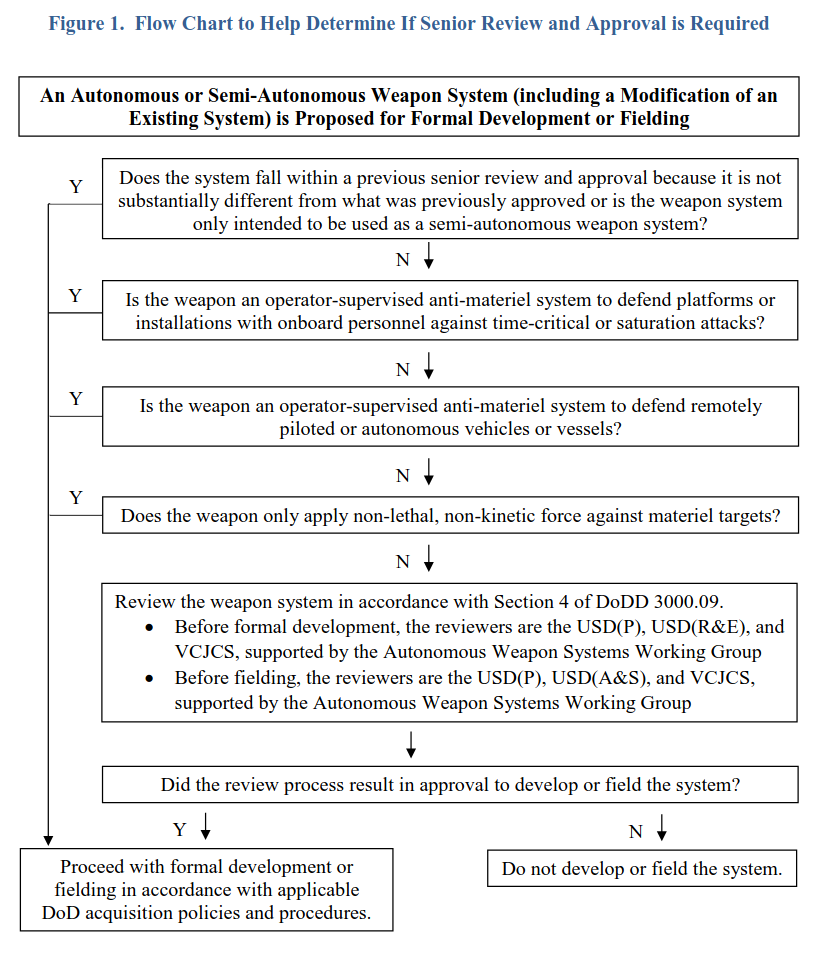

DOD DIRECTIVE 3000.09 - AUTONOMY IN WEAPON SYSTEMS

This document explains how the Department of Defense (DoD) reviews and approves new autonomous weapon systems (such as drones or robotic systems that can choose and engage targets on their own). Here's a breakdown in plain language:

1. Who Must Approve These Systems:

- Any autonomous weapon system that doesn't already follow established rules must get a high-level "senior review" before it is developed and again before it is used in the field.

- Top DoD officials—specifically, senior policy, research, and acquisition leaders, along with the Vice Chairman of the Joint Chiefs of Staff—must give the green light at these stages.

2. What the Review Looks For (Before Development):

- Human Oversight: The system must allow commanders or operators to keep control and step in if needed.

- Controlled Operations: It should work within expected time frames, geographic areas, and operational limits. If it can't safely operate within these limits, it should stop its actions or ask for human input.

- Risk Management: The design must account for the possibility of mistakes or unintended targets (collateral damage) and have safety measures in place.

- Reliability and Security: The system needs robust safety features, strong cybersecurity, and methods to fix any unexpected behavior quickly.

- Ethical Use of AI: If the system uses artificial intelligence, it must follow the DoD's ethical guidelines for AI.

- Legal Review: A preliminary legal check must be completed to ensure that using the system complies with relevant laws and policies.

3. What the Review Looks For (Before Fielding/Deployment):

- Operational Readiness: It must be proven that both the system and the people using it (operators and commanders) understand how it works and can control it appropriately.

- Safety and Security Checks: The system must demonstrate that its safety measures, anti-tamper features, and cybersecurity defenses are effective.

- Training and Procedures: There must be clear training and protocols for operators so they can manage and, if necessary, disable the system safely.

- Ongoing Testing: Plans must be in place to continually test and verify that the system performs reliably, even under realistic and possibly adversarial conditions.

- Final Legal Clearance: A final legal review ensures that the system's use is in line with the law of war and other applicable rules.

4. Exceptions and Urgent Needs:

- In cases where there is an urgent military need, a waiver can be requested to bypass some of these review steps temporarily.

5. Guidance Tools and Support:

- A flowchart in the document helps decide if a particular weapon system needs this detailed senior review.

- A specialized working group, made up of experts from different areas (like policy, research, legal, cybersecurity, and testing), is available to advise on these reviews and to help identify and resolve potential issues.

6. Additional Information:

- The document includes a glossary that explains key terms and acronyms (like AI, CJCS, and USD) so that everyone understands the language used.

- It also lists other related DoD directives and instructions that support or relate to this process.

In summary, the document sets up a careful, multi-step review process to ensure that any new autonomous weapon system is safe, reliable, under meaningful human control, legally sound, and ethically managed before it is developed or deployed.

-

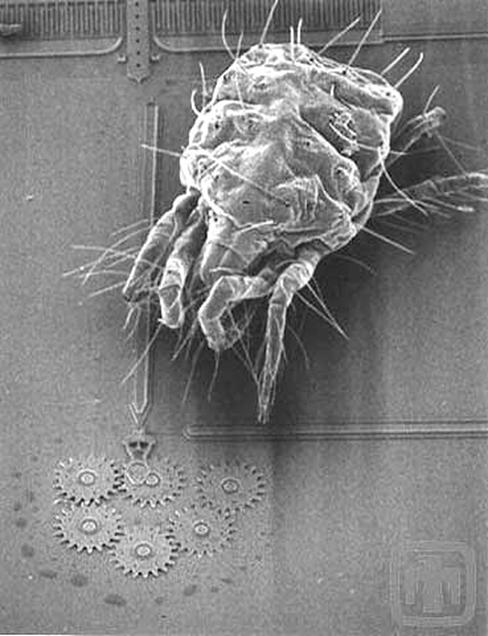

Size comparison of a spider mite, less than 1 mm in size, and a MEMS (micro-electromechanical systems) gear (Image: Sandia National Laboratories)