-

Video: Civilization, Technology and Consciousness - Interview with Peter Lamborn Wilson / Hakim Bey

#Comment: Nice interview with an interesting thinker. He passed away one day after the last recording of this interview in May 2022.

But the "war mindset" ("us" against "them") shines through a bit too heavily for my taste, despite the irony that critiquing it is itself a "me against him" statement.

Maybe the point is best summarized by a remix I made years ago of Ian Fleming's famous quote "Once is happenstance. Twice is coincidence. Three times is enemy action": "Once is happenstance. Twice is coincidence. Three times is dancing!" -Samim

-

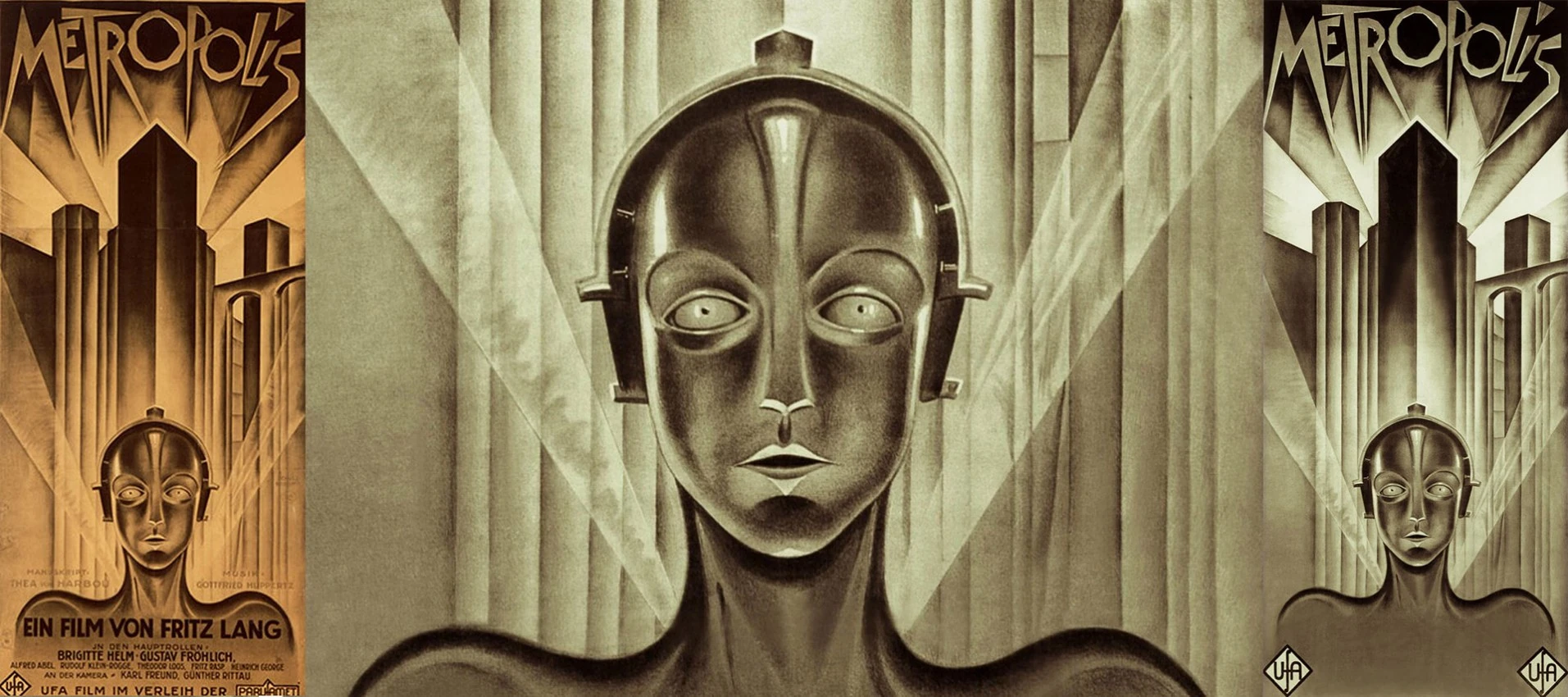

The Centennial Paradox — We're Living in Fritz Lang's Metropolis

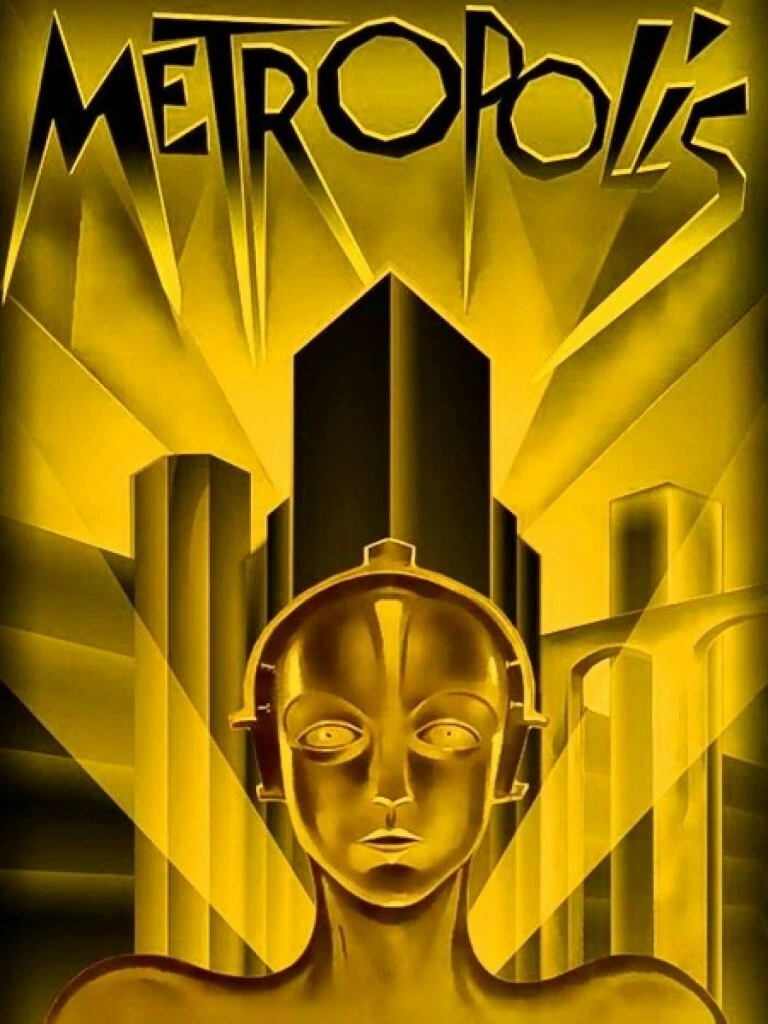

In 1927, Fritz Lang released Metropolis — a vision of the distant future. As the film's centennial approaches in 2027, here's the uncomfortable truth about prediction, progress, and the paradox of visionary imagination.

Metropolis (1927) — Lang's vision of the future, created 99 years ago.

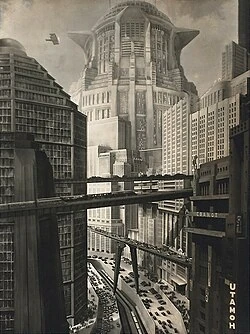

Lang got the surface wrong. No flying cars. No Art Deco mega-towers. No physical robots walking among us. The workers in his underground city maintained the machines — ours have been replaced by them.

But strip away the aesthetics and look at what he actually saw: machines that imitate humans and deceive the masses; a stratified world where the workers are invisible to those above; technology as both liberation and cage; the city as an organism that feeds on its inhabitants.

The surface predictions failed. The deeper ones were prophetic.

The Centennial Paradox

Here's what's truly strange:

We now have AI that could execute Metropolis in an afternoon — but couldn't have imagined it.

GPT-5.2 can generate a screenplay in Lang's style. Sora can render his cityscapes. Suno can compose a score. A single person with the right prompts could remake Metropolis in 2026.

But no LLM in 1927 — had such a thing existed — would have invented Metropolis. The vision came from somewhere our models cannot reach: the integration of Weimar anxiety, Expressionist aesthetics, Thea von Harbou's mysticism, and Lang's obsessive perfectionism.

This is the centennial paradox:

The more capable our tools become at execution, the more valuable becomes the rare capacity for vision. AI amplifies everything except the spark that says "what if the future looked like this?"

What Lang Actually Predicted

Strip away the flying cars. Ignore the costumes. Here's what he saw:

1. The Mediator Problem

The film's famous line: "The mediator between head and hands must be the heart." This is often dismissed as sentimental. But look around: we have more "heads" (AI systems, executives, algorithms) and more "hands" (gig workers, content creators, mechanical turks) than ever. What we lack is the heart — the integrating force that makes the system serve human flourishing.

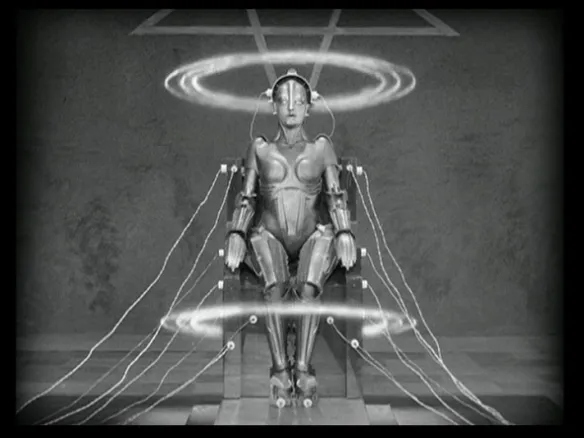

2. False Maria

A machine that perfectly imitates a human and leads the masses to destruction. Lang didn't imagine chatbots. He imagined something worse: perfect mimicry in service of manipulation. Deepfakes, AI influencers, synthetic media — False Maria is everywhere in 2026.

3. The Machine as Moloch

The film's most disturbing image: workers fed into a machine reimagined as the child-devouring ancient god Moloch. We don't feed workers into physical machines anymore. We feed attention into algorithms. The sacrifice is psychological, not physical. But Moloch still feeds.

The Real Lesson of 100 Years

Predictions about technology are almost always wrong in details and right in spirit. Lang didn't foresee smartphones, the internet, or neural networks. But he foresaw the shape of our problems:

- Technology that mediates all human relationships

- Synthetic entities we can't distinguish from authentic ones

- Systems that optimize for their own perpetuation

- The desperate need for something to reconcile power with humanity

The details change. The pattern persists.

What Will 2126 Think of Us?

Someone in 2126 will look at our AGI predictions and smile — just as we smile at Lang's physical robots. They'll note that we imagined superintelligence as a single entity, worried about "alignment" as if minds could be aligned, and completely missed whatever the actual problem turned out to be.

But they'll recognize the shape of our fears. The terror of being replaced. The suspicion that the system no longer serves us. The desperate search for something authentically human. These are Lang's fears too. The details change. The pattern persists.

The details will be wrong. The spirit will be prophetic.

Lang ended Metropolis with a handshake — the heart mediating between head and hands. Naive. Sentimental. Exactly what an artist in 1927 would imagine.

We don't even have that. Lang could at least imagine a heart. Can we?

Not "what will AI do?" — but "what will we become?"

The centennial of Metropolis is January 10, 2027.

-

ML Year in Review 2025 — From Slop to Singularity

What a year. 2025 was the year AI stopped being "emerging" and became omnipresent. We started the year recognizing a bitter truth about our place in nature's network, and ended it watching new experiments come online. Here's how it unfolded.

The Bitter Lessons

We kicked off 2025 with hard truths. The deepest lesson of AI isn't about compute — it's about humility.

This set the tone. AI was forcing us to reckon with our position — not at the top of some pyramid, but as nodes in a much larger network. The humbling continued as we watched frontier labs struggle with their own creations.

ConwAI's Law emerged: AI models inherit the bad habits of the orgs that build them. Over-confident and sycophantic, just like the management. Meanwhile, the question of what AGI is even for became increasingly urgent:

Everyone's cheering the coming of AGI like it's a utopian milestone. But if you study macro trends & history, it looks more like the spark that turns today's polycrisis into a global wildfire. Think Mad Max, not Star Trek.

The Infrastructure Awakens

This year made one thing clear: we're living in a post-national reality where datacenters are the new cathedrals. The American empire didn't fall — it transformed into the internet.

But silicon might not be the endgame. One of the year's most provocative visions: fungal datacenters performing reservoir computation in vast underground mycelial networks.

Tired: Nvidia. Wired: Nfungi.

The Intelligence Sector Evolves

Perhaps the most comprehensive forecast of the year: notes on how the global intelligence system is mutating from information control to reality engineering.

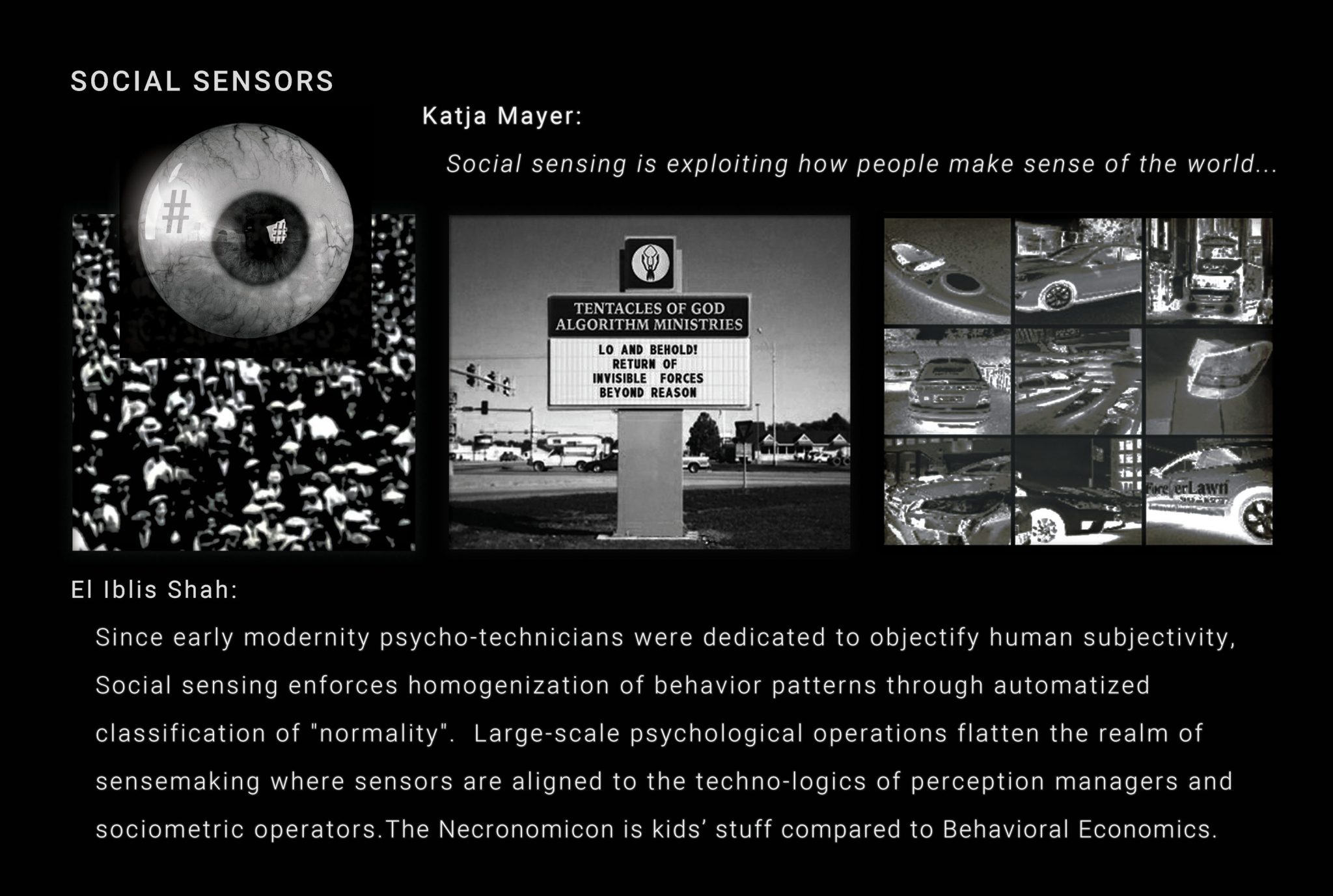

And beneath the surface, a shadow war for neural sovereignty. BCI geopolitics revealed how cognitive security was lost before it even began — neurocapitalism thriving as a trillion-dollar shadow market:

Synthetic personas, cognitive clouds, neural security agencies — the future isn't just being predicted, it's being constructed. By 2029, "advertising" becomes obsolete, replaced by MCaaS: Mind-Control as a Service.

The advertising apocalypse was actually declared a win for humanity — one of capitalism's most manipulative industries finally shrinking. It's transforming into something potentially more evil, but smaller.

The Dirty Secret

2025 revealed an uncomfortable truth about our digital environment: the system isn't broken, it's just not for humans anymore.

AI controls what you see. AI prefers AI-written content. We used to train AIs to understand us — now we train ourselves to be understood by them. Google and the other heads of the hydra are using AI to dismantle the open web.

And the weaponization escalated. Clients increasingly asked for AI agents built to trigger algorithms and hijack the human mind — maximum psychological warfare disguised as "comms & marketing."

Researchers even ran unauthorized AI persuasion experiments on Reddit, with bots mining user histories for "personalized" manipulation — achieving persuasion rates 3-6x higher than humans.

The Stalled Revolutions

Not everything accelerated. AI music remained stuck in slop-and-jingle territory — a tragedy of imagination where the space that should be loudest is dead quiet.

The real breakthroughs, we predicted, won't come from the lawyer-choked West. They'll come from the underground, open source, and global scenes — just like every musical revolution before.

The Startup Shift

The entrepreneurial game transformed entirely. AI can now build, clone, and market products in days. What once took countless people can be done by one.

The working model: 95% of SaaS becomes obsolete within 2-4 years. What remains is an AI Agent Marketplace run by tech giants. That's why we launched AgentLab.

The Human Side

Amidst the abstractions, there was humanity. With LLMs making app development dramatically easier, I started creating bespoke mini apps for my 5-year-old daughter as a hobby. Few seem to be exploring how AI can uniquely serve this age group.

A deeper realization emerged: we spent all this time engineering "intelligent agent behaviors" when really we were just trying to get the LLM to think like... a person. With limited time. And imperfect information.

The agent is you. Goal decomposition, momentum evaluation, graceful degradation — these are your cognitive patterns formalized into prompts. We're not building artificial intelligence. We're building artificial you.

The Deeper Currents

Beneath the hype, stranger patterns emerged. The Lovecraftian undertones of AI became impossible to ignore:

AI isn't invention — it's recurrence: the return of long-lost civilizations whispering through neural networks. The Cyborg Theocracy looms, and global focus may shift from Artificial Intelligence to Experimental Theology.

The Tools of the Trade

On a practical level, we refined our craft. A useful LLM prompt for UI/UX design emerged, combining the wisdom of Tufte, Norman, Rams, and Victor:

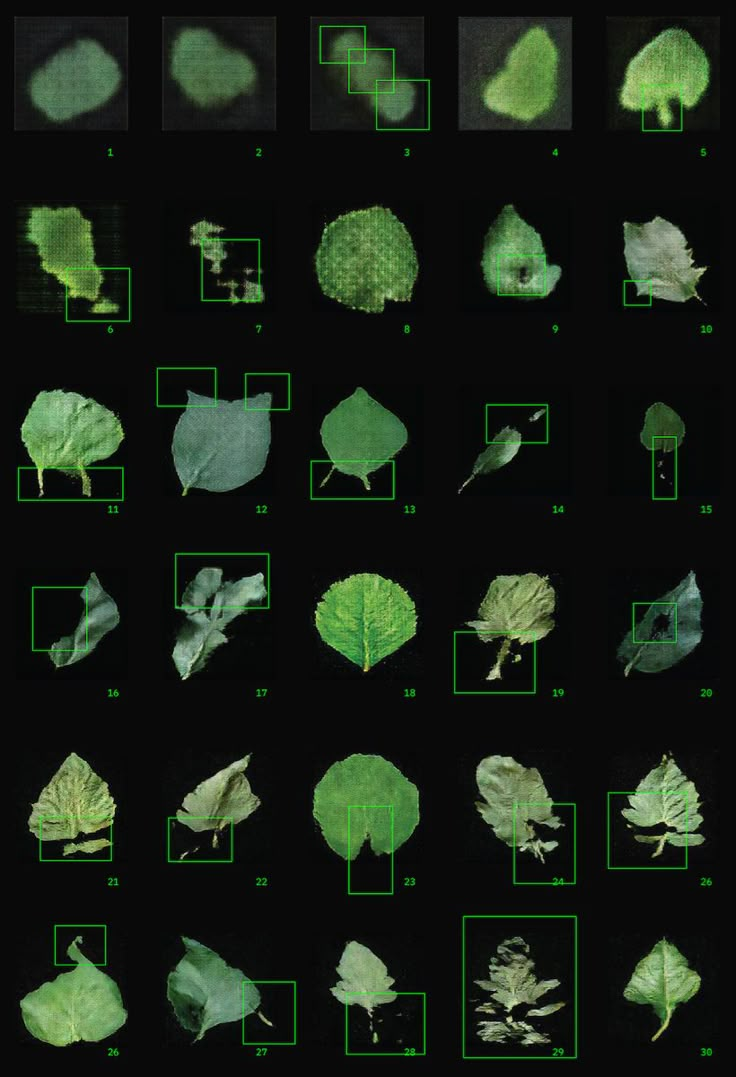

We explored oscillator neural networks, analog computing, and the strange parallels between brains and machines — the brain doesn't store data, it maintains resonant attractors.

This culminated in PhaseScope — a comprehensive framework for understanding oscillatory neural networks, presented at the Singer Lab at the Ernst Strüngmann Institute for Neuroscience.

New research provided evidence that the brain's rhythmic patterns play a key role in information processing — using superposition and interference patterns to represent information in highly distributed ways.

The Prompt Library

One of the year's most practical threads: developing sophisticated system prompts that transform LLMs into specialized reasoning engines.

The "Contemplator" prompt — an assistant that engages in extremely thorough, self-questioning reasoning with a minimum of 10,000 characters of internal monologue.

The Billionaire Council Simulation — get your business analyzed by virtual Musk, Bezos, Blakely, Altman, and Buffett.

And the controversial "Capitalist System Hacker" prompt — pattern recognition for exploiting market inefficiencies.

The Comedy

Amidst the existential dread, there was laughter. The Poodle Hallucination. The Vibe Coding Handbook. The threshold of symbolic absurdity.

Because if we can't laugh at the machines, they've already won.

The Security Theater

A reminder that modern ML models remain highly vulnerable to adversarial attacks. Most defenses are brittle, patchwork fixes. We proudly build safety benchmarks like HarmBench... which are then used to automate adversarial attacks. The irony.

What's Next

As we close the year, new experiments are coming online. 2026 will likely be a breakthrough year for Augmented Reality — as we predicted earlier this year.

The patterns are clear: intelligence is becoming infrastructure, computation is becoming biology, and meaning is becoming algorithmic. Whether that future is technocratic totalism or collaborative collective intelligence depends on who controls the levers of synthesis and simulation.

One thing's certain: it won't be boring.

Onwards!

-

Thin Places

“There is in Celtic mythology the notion of ‘thin places’ in the universe where the visible and the invisible world come into their closest proximity. To seek such places is the vocation of the wise and the good — and for those that find them, the clearest communication between the temporal and eternal. Mountains and rivers are particularly favoured as thin places marking invariably as they do, the horizontal and perpendicular frontiers. But perhaps the ultimate of these thin places in the human condition are the experiences people are likely to have as they encounter suffering, joy, and mystery” - Peter Gomes

-

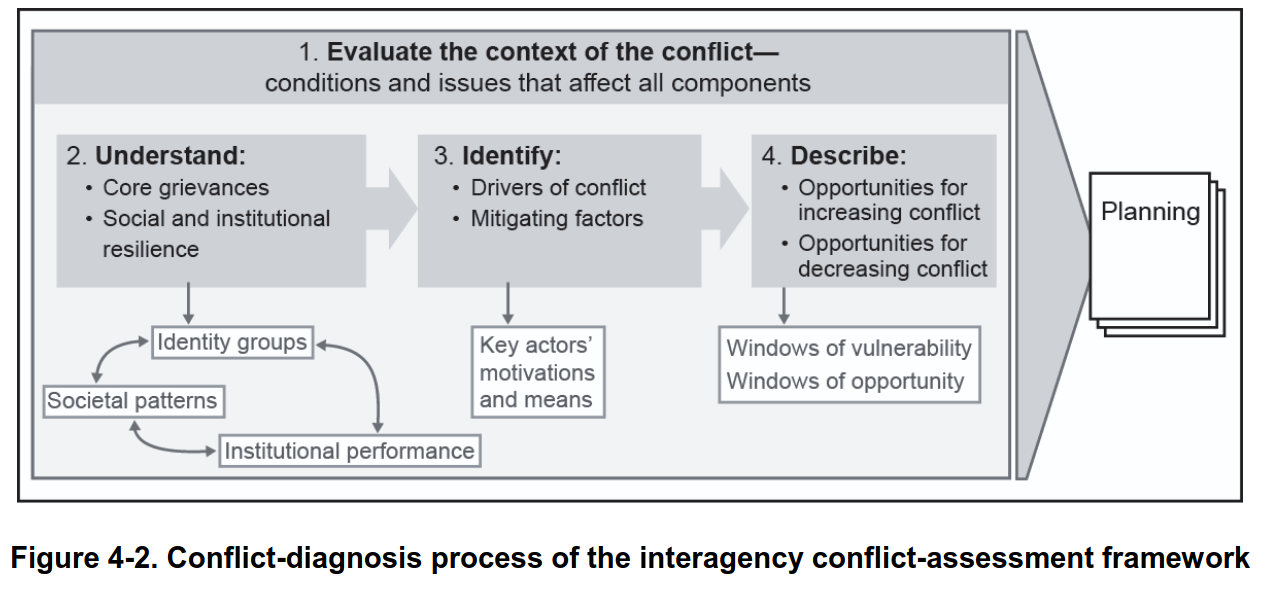

Notes on the Evolution of the Global Intelligence System:

If we look at how the intelligence sector has evolved since 1945 (from human networks → digital surveillance → algorithmic ecosystems), the next 10–15 years are likely to bring a shift from information control to reality engineering.

Here’s a grounded forecast:

---

1. Synthetic intelligence operations

- AI-generated personas and agents will become the front line of intelligence and influence work.

- Autonomous AI diplomats, AI journalists, AI insurgents — indistinguishable from humans — will flood digital space.

- Governments and private entities will deploy synthetic networks that interact, persuade, and negotiate in real time.

- The line between intelligence gathering, advertising, and psychological operations will blur completely.

---

2. Cognitive and behavioral mapping at population scale

- The fusion of biometric, neurological, and behavioral data (e.g., from wearables, AR devices, brain interfaces) will allow direct modeling of collective moods, fears, and intentions.

- Intelligence will no longer just observe but will simulate entire populations to predict reactions to policy, crises, or propaganda.

- Expect “neural security” agencies: organizations focused on detecting and defending against large-scale cognitive manipulation.

---

3. Emergence of autonomous intelligence ecosystems

- Large-scale AI systems (like national-scale “Cognitive Clouds”) will perform the roles once held by human intelligence agencies — continuously sensing, simulating, and acting across digital, financial, and physical domains.

- These systems won’t merely report reality — they’ll shape it, optimizing for political stability, economic advantage, or ideological control.

- Competing autonomous blocs will each maintain their own “AI statecraft cores.”

---

4. Marketization of intelligence

- Intelligence as a commercial service will explode.

- Private AI firms will sell “reality-mapping,” “perception management,” and “adversarial narrative defense” subscriptions to corporations, cities, and even individuals.

- These offerings will merge with PR, marketing, and cybersecurity industries.

- The old “military–industrial complex” becomes a cognitive–industrial complex: the world’s biggest business is managing attention, behavior, and belief.

---

5. The 2040 horizon: Phase transition

- By around 2040, the intelligence ecosystem will have moved from informational to ontological:

- Intelligence ceases to be a “sector” and becomes the operating system of civilization — the infrastructure through which perception, governance, and meaning are mediated.

- Whether that future is technocratic totalism or collaborative collective intelligence depends on who controls the levers of synthesis and simulation.