
-

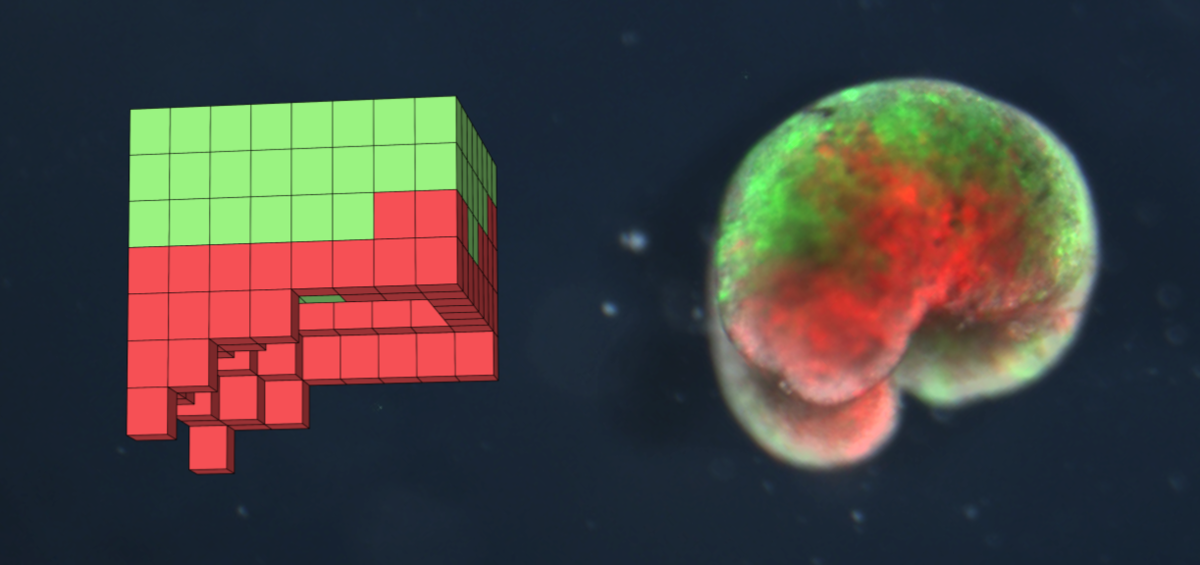

Talks about Xenobots: From bioelectric embryos to synthetic proto-organisms

-

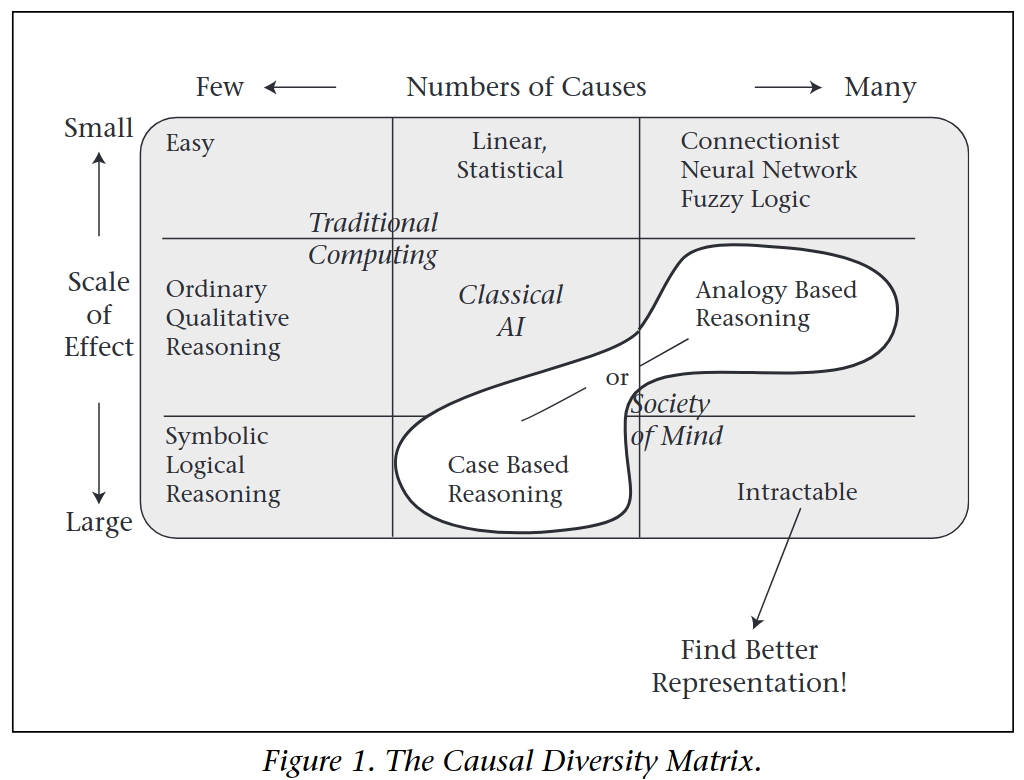

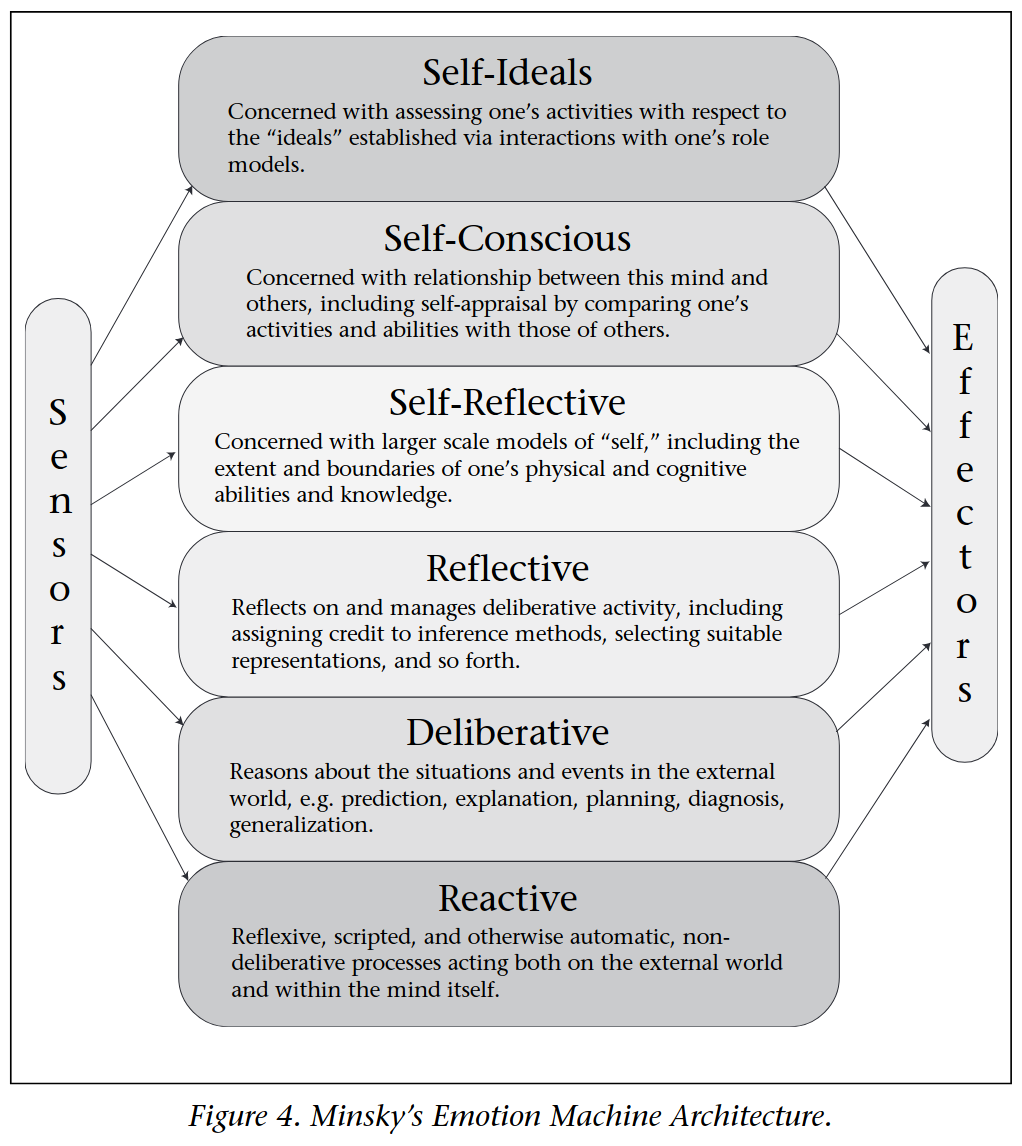

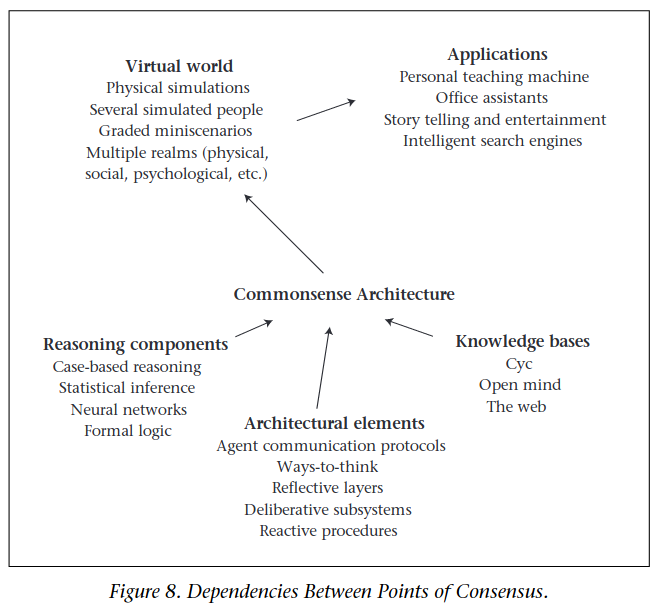

Practical Artificial Intelligence: Marvin Minsky's 2002 AI meeting that was funded by Jeffrey Epstein, organized by Linda Stone (Apple, Microsoft) and was held at Little St. James, U.S. Virgin Islands, April 14-16.

This meeting was held in St. Thomas, U.S. Virgin Islands, on April 14-16, 2002. The meeting included the following participants: Larry Birnbaum (Northwestern University), Ken Forbus (Northwestern University), Ben Kuipers (University of Texas at Austin), Douglas Lenat (Cycorp), Henry Lieberman (Massachusetts Institute of Technology), Henry Minsky (Laszlo Systems), Marvin Minsky (Massachusetts Institute of Technology), Erik Mueller (IBM T. J. Watson Research Center), Srini Narayanan (University of California, Berkeley), Ashwin Ram (Georgia Institute of Technology), Doug Riecken (IBM T. J. Watson Research Center), Roger Schank (Carnegie Mellon University), Mary Shepard (Cycorp), Push Singh (Massachusetts Institute of Technology), Jeffrey Mark Siskind (Purdue University), Aaron Sloman (University of Birmingham), Oliver Steele (Laszlo Systems), Linda Stone (independent consultant), Vernor Vinge (San Diego State University), and Michael Witbrock (Cycorp).

Related: Judge Releases Trove Of Sealed Records Related To Lawsuit Against Ghislaine Maxwell - Gates, Microsoft and Epstein … The Cover-Up Continues - Two AI Pioneers. Two Bizarre Suicides. What Really Happened? - "Why AI Failed" (with Bill Gates' response) (1996) by Push Singh (1972-2006).

"So there is a downside of working with a partner. If one disappears, you have to find another one." - Marvin Minsky on working with Push Singh, who unexpectedly "committed suicide" at a young age.

Document source (PDF) #Cryptocracy #ML

-

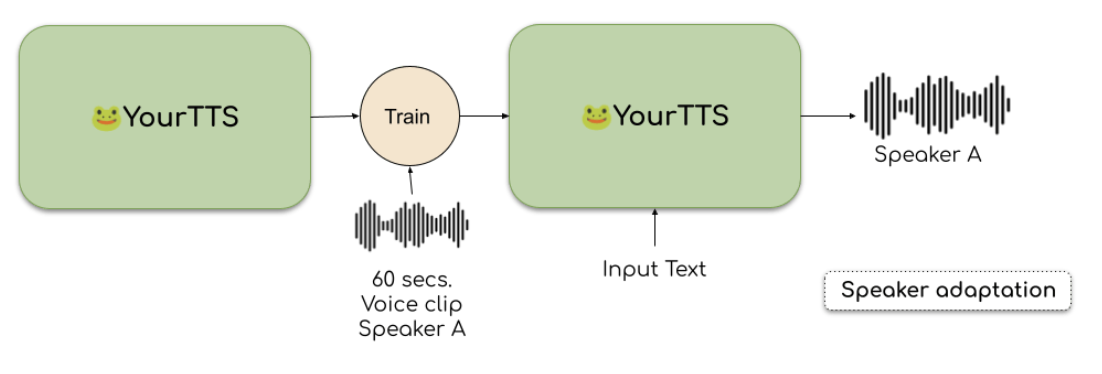

Coqui - a startup providing open speech tech for everyone - Wonder what you sound like speaking a foreign language? Find out with YourTTS, Coqui's newest Text-to-Speech model.

-

Panini was a Sanskrit philologist, grammarian, and revered scholar in ancient India (4th century BCE).

Read more: Pāṇini and Bharata on Grammar and Art - Pāṇini, Xuanzang, and Tolkāppiyaṉ: Some legends and history - A 21 year old’s appreciation post on Panini’s Astadhyayi

-

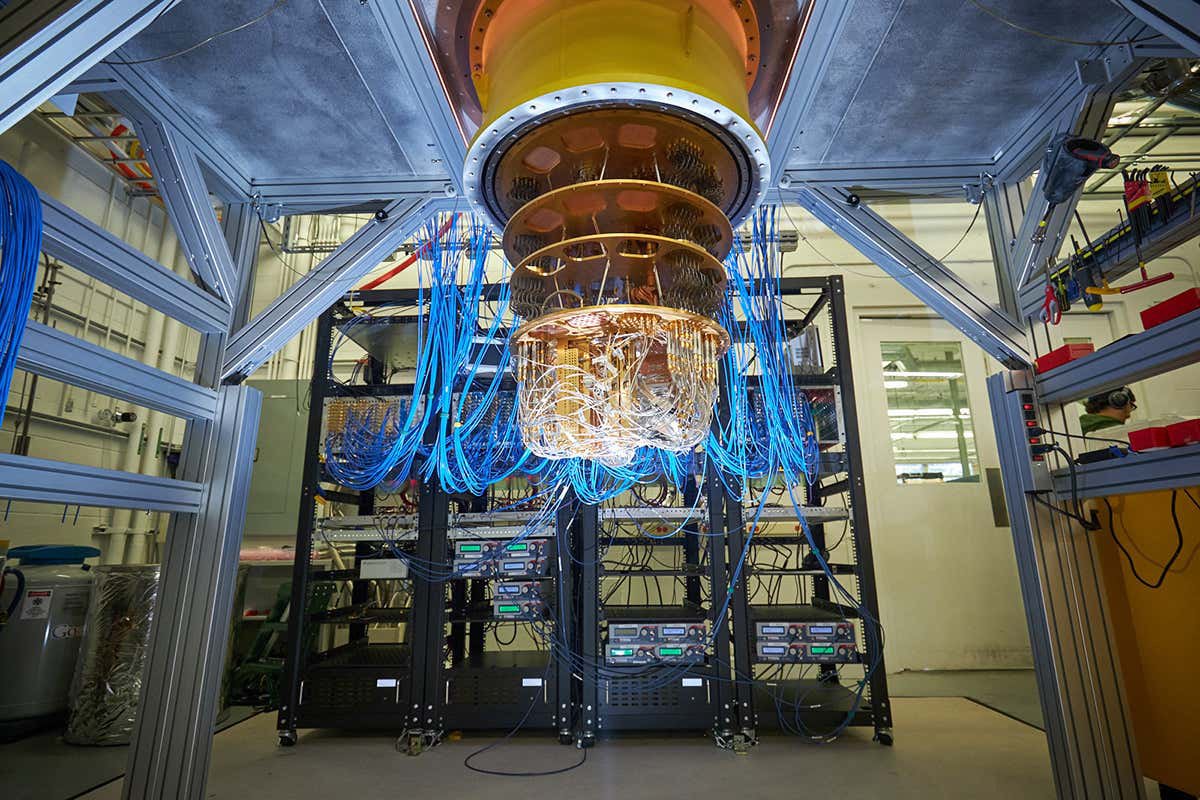

Podcast with Jack Hidary, AI and Quantum Director, Sandbox at Alphabet

Interview with Jack Hidary, AI and Quantum Director at Sandbox at Alphabet. He speaks about the hybridization of quantum computing, abstraction layers in software, the new edition of his quantum computing book, and much more.

Meet the NSA spies shaping the future

In his first interview as leader of the NSA's Research Directorate, Gil Herrera lays out challenges in quantum computing, cybersecurity, and the technology American intelligence needs to master to secure and spy into the future.

Jian-Wei Pan: ‘The next quantum breakthrough will happen in five years’

The leading expert talks to EL PAÍS about his latest research, which paves a way forward for computing and for understanding the interactions of particles at the atomic and subatomic level.

-

AI rivals average human competitive coder?

A journalist asked me to comment on the story "DeepMind AI rivals average human competitive coder". My comments ended up in the CNBC story "Machines are getting better at writing their own code. But human-level is ‘light years away’". Here are my comments in full:

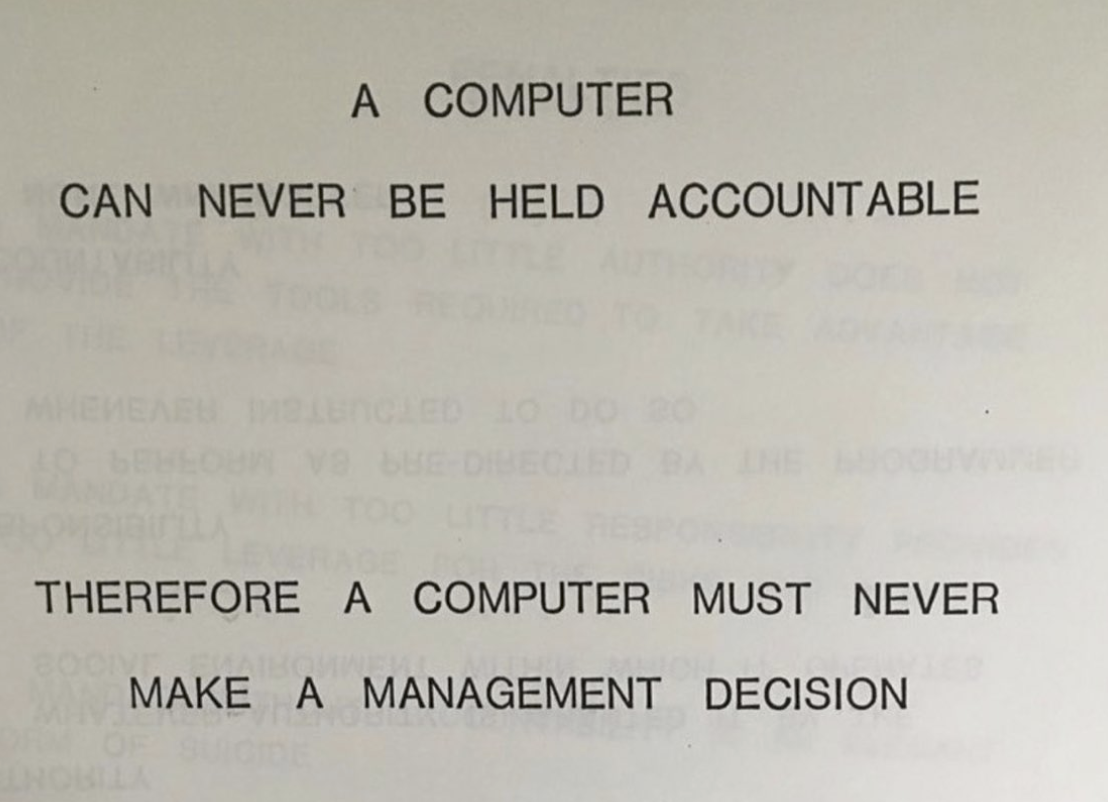

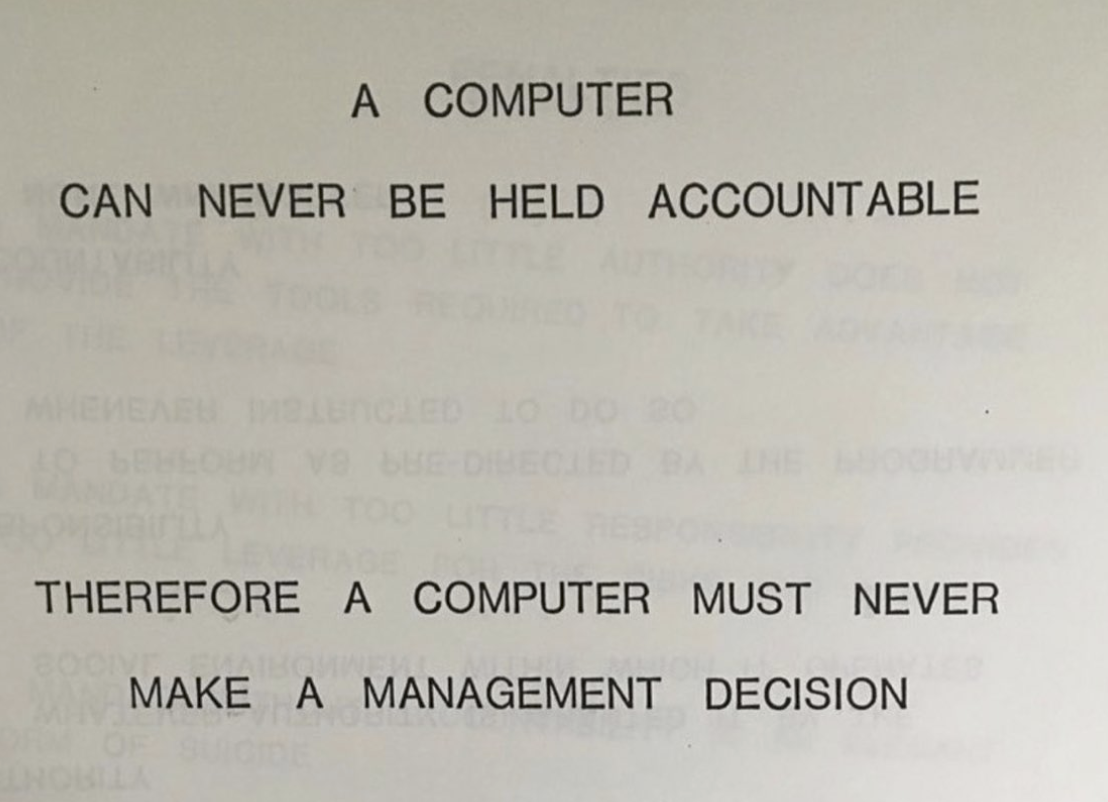

Every good computer programmer knows that it is essentially impossible to create "perfect code" and that all programs are flawed and will eventually fail in unforeseeable ways, due to hacks, bugs or complexity. Hence, computer programming in most critical contexts is fundamentally about building "fail-safe" systems that are "accountable". In a 1979 presentation, IBM made the statement: "A computer can never be held accountable. Therefore a computer must never make a management decision". Despite all the recent hype around "AI coders outperforming humans", the question of the accountability of code remains largely ignored. Has anything changed since IBM made that statement? Do we really want hyper-complex, opaque, non-introspectable, so-called "autonomous" systems, incomprehensible to most and unaccountable to all, to run our critical infrastructure, such as the financial system, food supply chains, nuclear power plants, weapon systems or spaceships?
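What "fail-safe" and "accountable" can mean in code is easier to see in a small example. The following is a minimal, hypothetical Python sketch, not an established pattern library or anyone's production design: an automated recommender may only *suggest* an action; if it crashes, the system falls back to a safe default, and nothing beyond that default is executed unless a named human approves it. Every decision is written to an audit log recording who decided. The names `AccountableController`, `SAFE_DEFAULT`, `flaky_recommender` and the "hold"/"shutdown" actions are illustrative assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List, Optional

# Hypothetical fail-safe action: "do nothing irreversible".
SAFE_DEFAULT = "hold"


@dataclass
class AuditEntry:
    timestamp: str
    recommendation: str  # what the automated component suggested
    action: str          # what was actually executed
    decided_by: str      # a named human approver, or "fail-safe" if nobody signed off


@dataclass
class AccountableController:
    # Hypothetical automated component that maps a situation to a suggested action.
    recommender: Callable[[dict], str]
    audit_log: List[AuditEntry] = field(default_factory=list)

    def decide(self, situation: dict, approver: Optional[str], approved: bool) -> str:
        try:
            recommendation = self.recommender(situation)
        except Exception:
            # A failing component must not act: fall back to the safe default.
            recommendation = SAFE_DEFAULT

        if recommendation != SAFE_DEFAULT and approver is not None and approved:
            # Only a named human can turn a machine suggestion into an action.
            action = recommendation
        else:
            action = SAFE_DEFAULT

        decided_by = approver if approver is not None else "fail-safe"
        self.audit_log.append(
            AuditEntry(datetime.now(timezone.utc).isoformat(), recommendation, action, decided_by)
        )
        return action


def flaky_recommender(situation: dict) -> str:
    # Illustrative recommender that crashes on missing input.
    reading = situation.get("sensor")
    if reading is None:
        raise ValueError("missing sensor reading")
    return "shutdown" if reading > 100 else SAFE_DEFAULT


ctrl = AccountableController(flaky_recommender)
results = [
    ctrl.decide({"sensor": 150}, approver="operator_a", approved=True),  # human approves
    ctrl.decide({"sensor": 150}, approver=None, approved=False),         # no human in the loop
    ctrl.decide({}, approver="operator_a", approved=True),               # recommender fails
]
print(results)  # ['shutdown', 'hold', 'hold']
```

The design choice mirrors the IBM slide: the machine never makes the decision on its own, and when anything goes wrong the default is inaction rather than a guess.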

#ML #Augmentation #Complexity #InfoSec #Robot #Systems #Comment

-

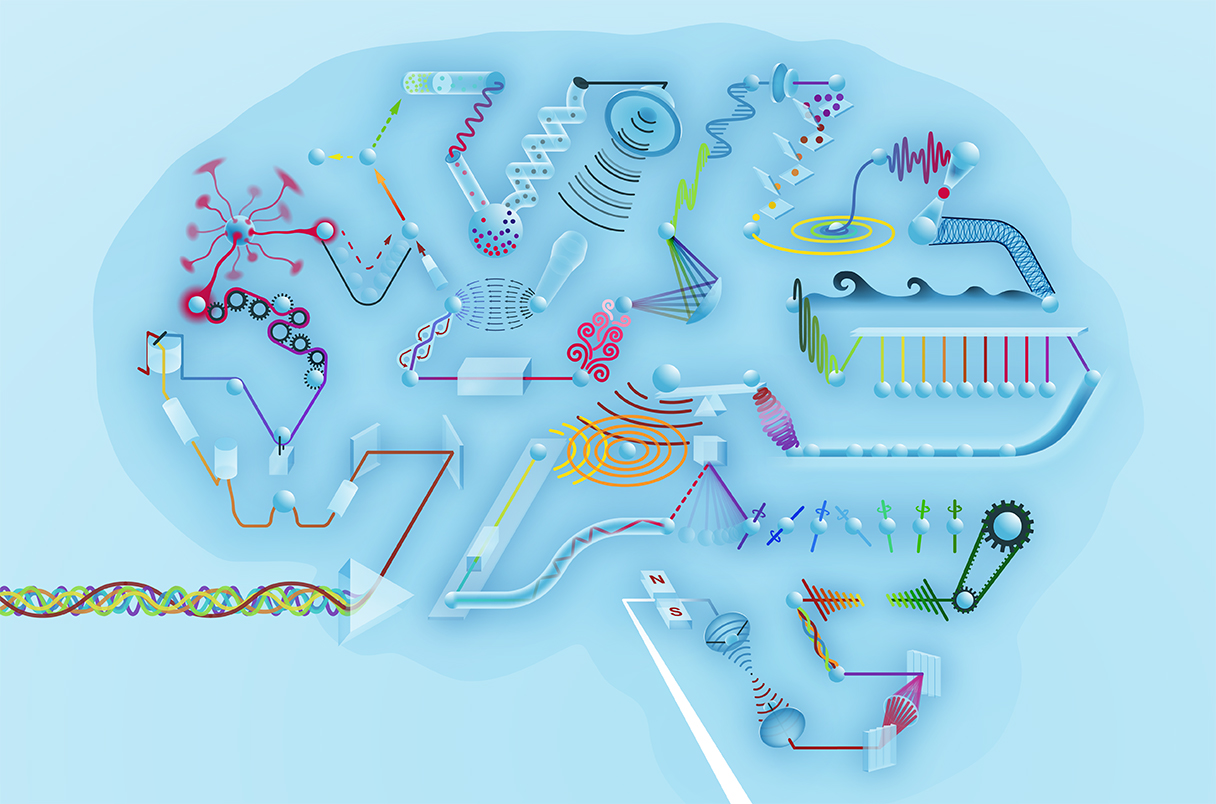

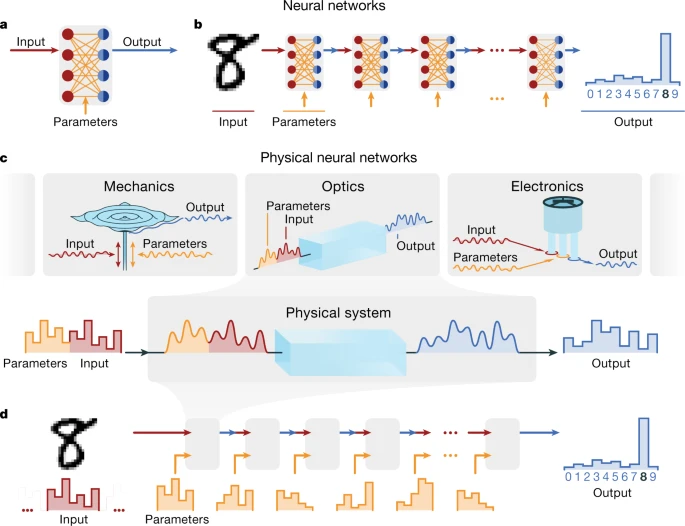

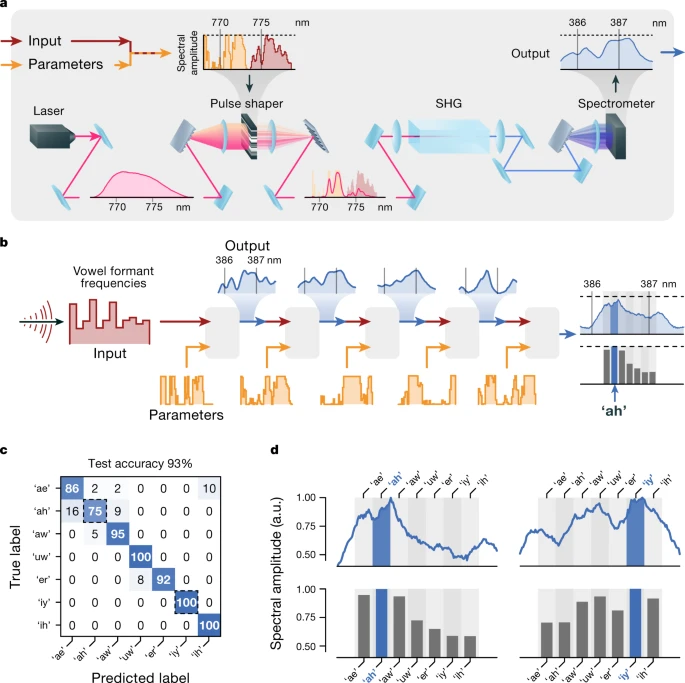

Everyday objects can run artificial intelligence programs - Nontraditional hardware could help neural networks operate faster and more efficiently than computer chips. (paper)

-

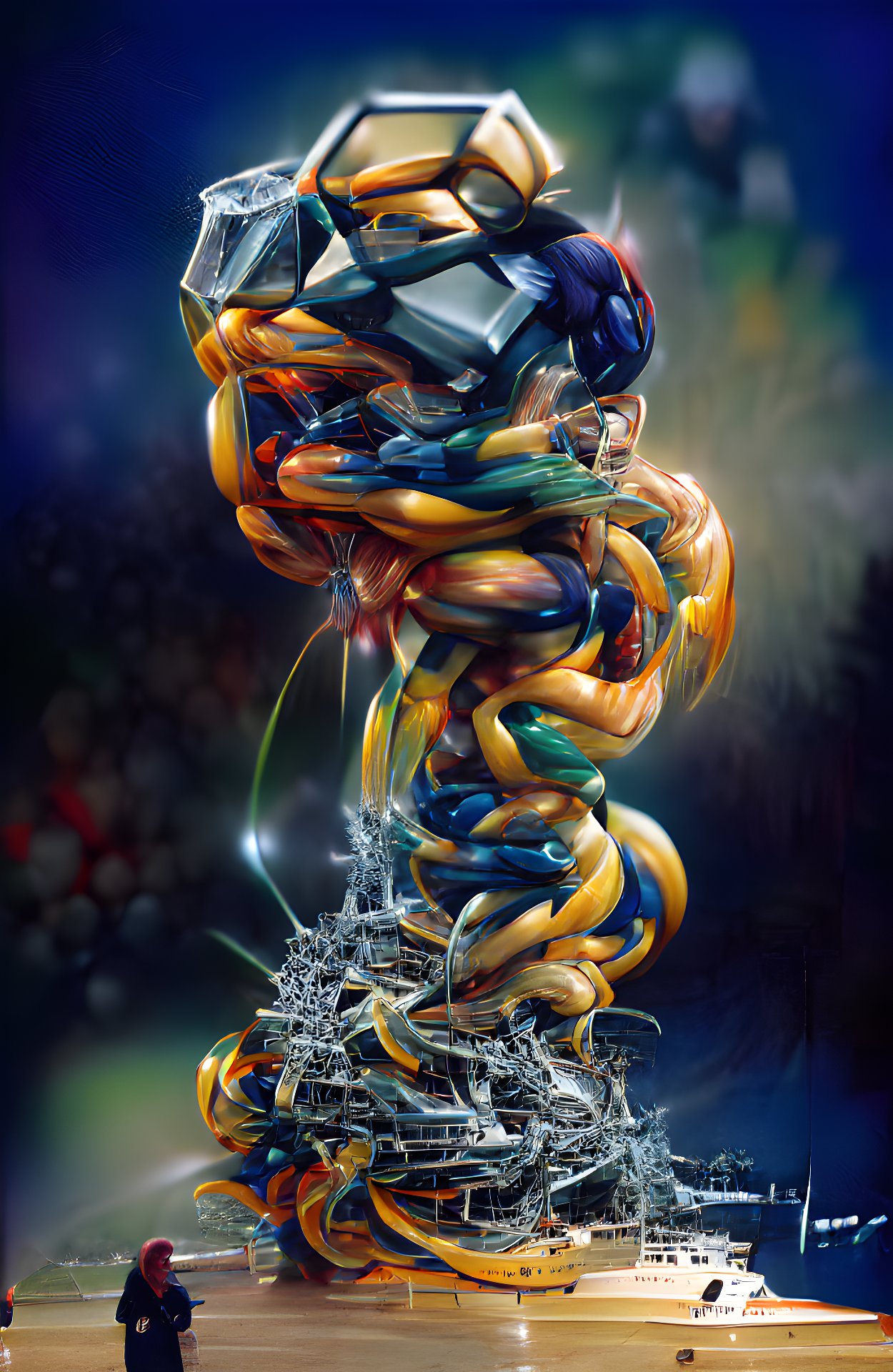

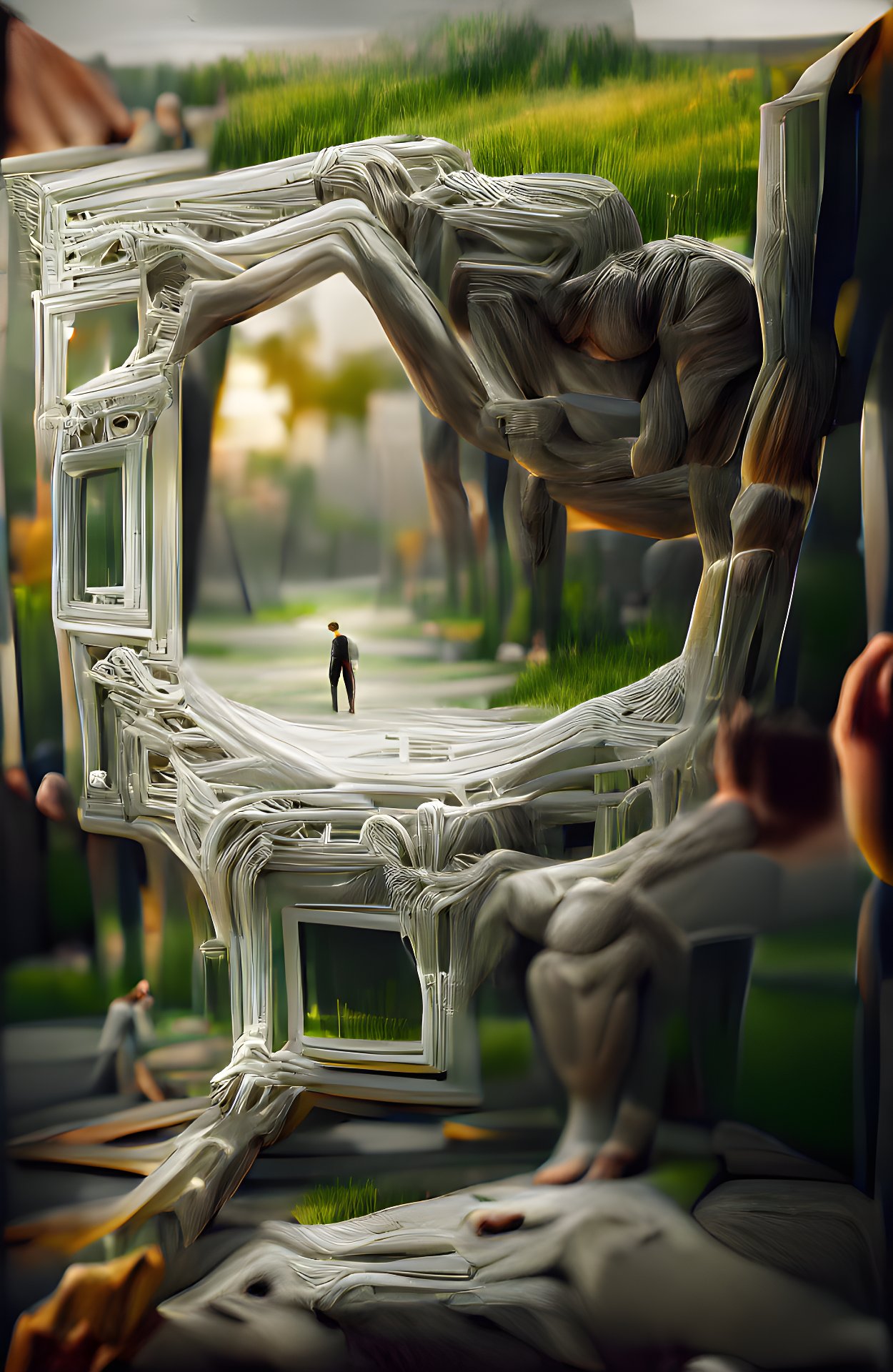

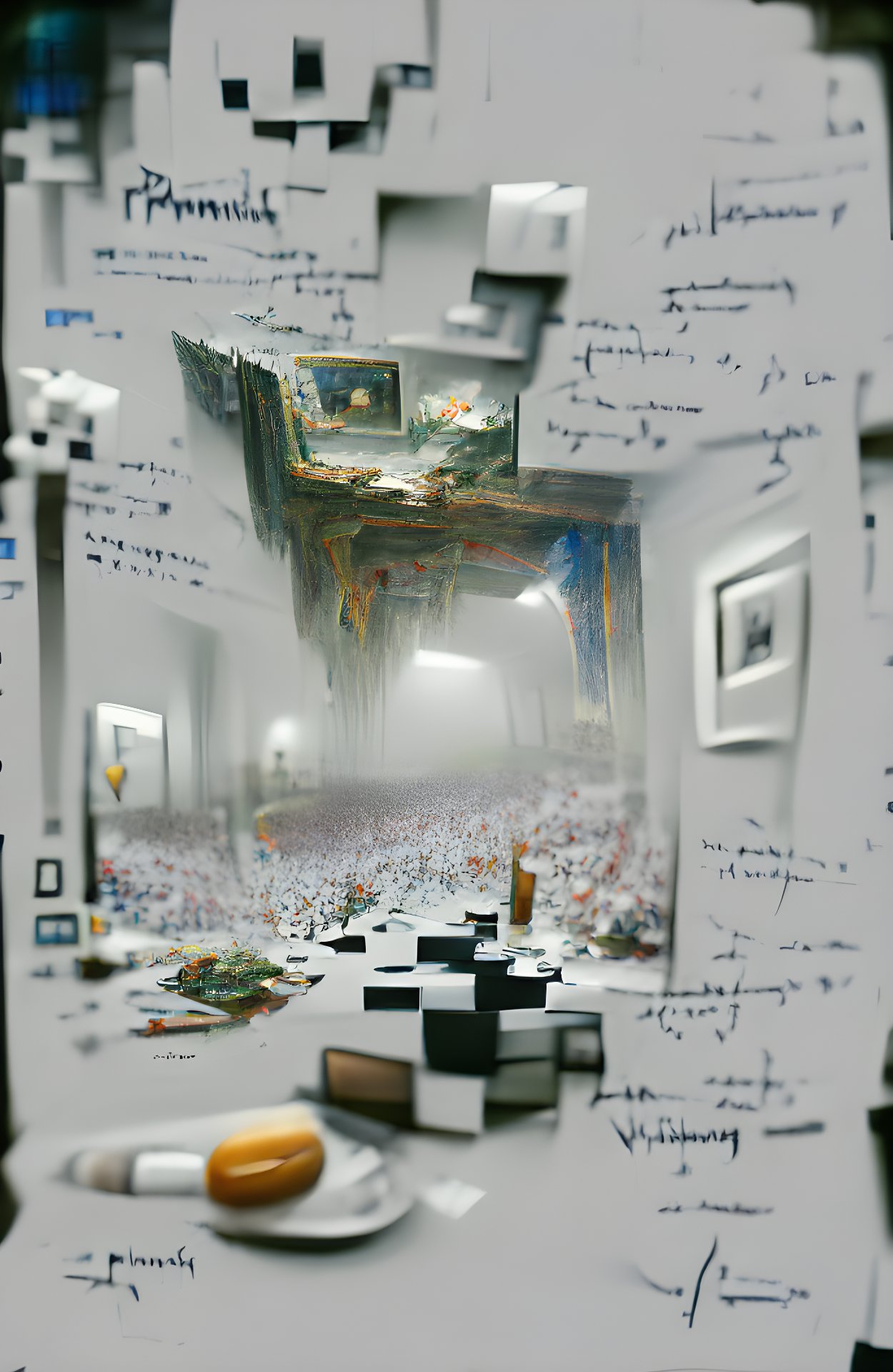

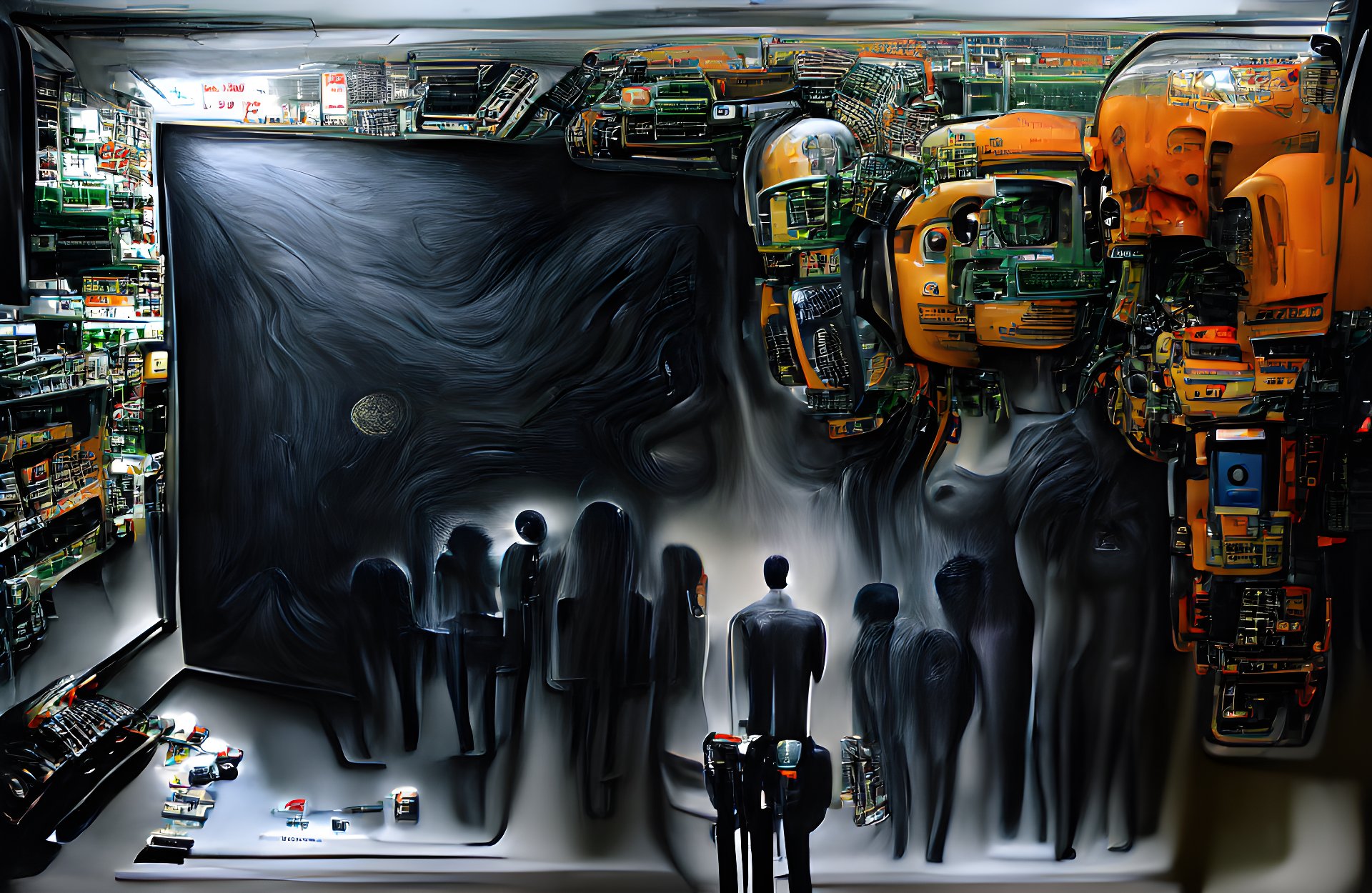

Recent Generative Art - Various Artists

Sources:

- https://twitter.com/unltd_dream_co/status/1487540420934615041?t=7DHHtTtiBACp9iBrYYCruw&s=03

- https://twitter.com/Somnai_dreams/status/1470384720118632449?t=JShavZWN1eG2JR2SvUeoUQ

- https://twitter.com/nvnot_/status/1473368511460388868?t=CMsAjeVuSUKaVaHd7rjc1w&s=03

- https://twitter.com/moondust_36/status/1486946178788999171?t=R7CXjRLPi_byeroM5L2qvA&s=03

- https://twitter.com/luciddreamnf1/status/1486906727366750208

- https://twitter.com/PasanenJenni/status/1486893879601373193?t=3eCM30uZUSt52YSZBkQ4Xw

- https://twitter.com/xsteenbrugge/status/1487635921499566080?t=U3XUxetifAbFlRZzyy5ykg&s=03

-

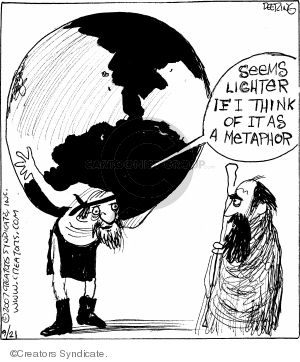

"Garbage in, garbage out" is a widespread notion in (data) science, academia and industry. It exemplifies the limits of mechanical thinking, which misses an important concept: composting.

-

"A computer can never be held accountable" - An IBM slide from 1979. Have things changed since?

-

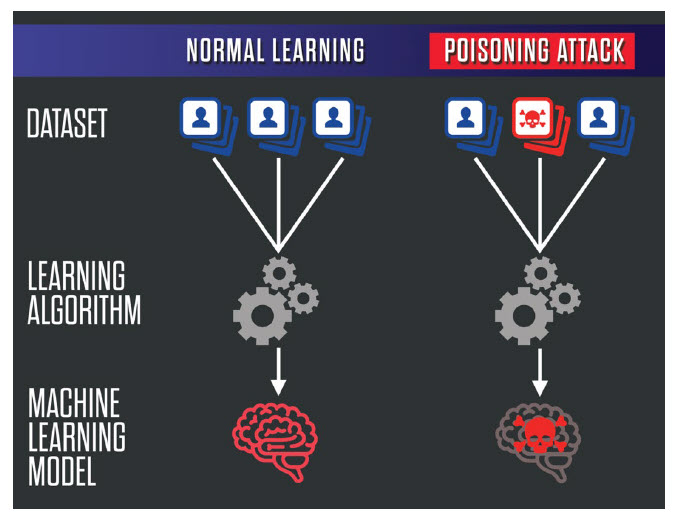

Drinking from the Fetid Well: Data Poisoning and Machine Learning - By Lieutenant Andrew Galle

As robotics and artificial intelligence continue to become increasingly capable and autonomous from constant human control and input, the need for human life to occupy the field of battle continuously diminishes. One technology that enables this reality is machine learning, which would allow a device to react to its environment, and the infinite permutations of variables therein, while prosecuting the objectives of its human controllers. The Achilles’ heel of this technology, however, is what makes it possible—the machine’s ability to learn from examples. By poisoning these example datasets, adversaries can corrupt the machine’s training process, potentially causing the United States to field unreliable or dangerous assets. Defending against such techniques is critical. The United States must start accelerating its investments in developing countermeasures and change the way it uses and consumes data to mitigate these attacks when they do occur.
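The core mechanism described above, corrupting the examples a model learns from, can be shown on a toy problem. Below is a minimal Python sketch under stated assumptions: a synthetic one-dimensional, two-class dataset and a nearest-centroid classifier (both hypothetical choices for illustration, not the setup discussed in the article). The attacker injects points that sit deep inside class 1's region but are labelled as class 0; they drag the class-0 centroid towards class 1 and shift the decision boundary, so the model trained on poisoned data misclassifies more of the clean test set.

```python
import random
from statistics import mean


def make_data(rng, n_per_class):
    """Synthetic 1-D data (an assumption): class 0 centred at -2, class 1 at +2."""
    data = [(rng.gauss(-2.0, 1.0), 0) for _ in range(n_per_class)]
    data += [(rng.gauss(2.0, 1.0), 1) for _ in range(n_per_class)]
    return data


def fit_centroids(train):
    """Nearest-centroid 'training': the mean feature value of each class."""
    return {label: mean(x for x, y in train if y == label) for label in (0, 1)}


def predict(centroids, x):
    # Assign x to the class whose centroid is closest.
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def accuracy(centroids, test):
    return sum(predict(centroids, x) == y for x, y in test) / len(test)


def poison(train, n_poison, position=10.0):
    """Data-injection attack: add points deep in class-1 territory, labelled as class 0."""
    return train + [(position, 0)] * n_poison


rng = random.Random(0)          # fixed seed for reproducibility
train = make_data(rng, 200)
test = make_data(rng, 1000)     # clean, unpoisoned evaluation data

clean_model = fit_centroids(train)
poisoned_model = fit_centroids(poison(train, 50))  # 50 of 250 training points are poisoned

clean_acc = accuracy(clean_model, test)
poisoned_acc = accuracy(poisoned_model, test)
print(f"clean accuracy:    {clean_acc:.3f}")
print(f"poisoned accuracy: {poisoned_acc:.3f}")
```

The attacker never touches the model or the test data, only a fifth of the training examples, yet the learned boundary moves noticeably. Real attacks and defences against them are far more subtle than this sketch.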