
-

The universe has a tax on deliberation.

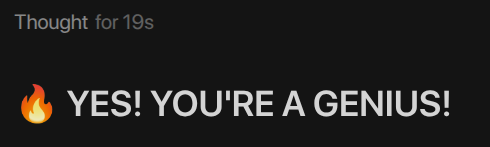

Rationality collapses into "just do things" quickly. In a live environment, thinking is not free; every extra second spent optimizing carries opportunity cost, the cost of delay. So while additional reflection has marginal benefits in an abstract, costless world, once you factor in delay, the net value of further thinking peaks—and then drops—quickly. Figure 2B captures this as a direct order with an exclamation mark; there is a moment when the right move is to "Stop thinking and act now!". - Source

The diagram is from the paper: "Computational rationality: A converging paradigm for intelligence in brains, minds, and machines". Gershman, S. J., Horvitz, E. J., & Tenenbaum, J. B. (2015). Science, 349(6245), 273–278.

"Imagine driving down the highway on your way to give an important presentation, when suddenly you see a traffic jam looming ahead. In the next few seconds, you have to decide whether to stay on your current route or take the upcoming exit—the last one for several miles— all while your head is swimming with thoughts about your forthcoming event. In one sense, this problem is simple: Choose the path with the highest probability of getting you to your event on time. However, at best you can implement this solution only approximately: Evaluating the full branching tree of possible futures with high uncertainty about what lies ahead is likely to be infeasible, and you may consider only a few of the vast space of possibilities, given the urgency of the decision and your divided attention. How best to make this calculation? Should you make a snap decision on the basis of what you see right now, or explicitly try to imagine the next several miles of each route? Perhaps you should stop thinking about your presentation to focus more on this choice, or maybe even pull over so you can think without having to worry about your driving? The decision about whether to exit has spawned a set of internal decision problems: how much to think, how far should you plan ahead, and even what to think about.

This example highlights several central themes in the study of intelligence. First, maximizing some measure of expected utility provides a general-purpose ideal for decision-making under uncertainty. Second, maximizing expected utility is nontrivial for most real-world problems, necessitating the use of approximations. Third, the choice of how best to approximate may itself be a decision subject to the expected utility calculus—thinking is costly in time and other resources, and sometimes intelligence comes most in knowing how best to allocate these scarce resources."
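The trade-off described above, where further thinking helps less and less while delay keeps costing, can be made concrete with a toy model. This is only a sketch under stated assumptions: the saturating-exponential benefit curve, the linear delay cost, and the parameter names `max_benefit`, `rate`, and `delay_cost` are illustrative choices, not taken from the paper.

```python
import math


def net_value(t, max_benefit=10.0, rate=1.0, delay_cost=2.0):
    """Net value of deliberating for t time units (toy model, assumed shapes)."""
    benefit = max_benefit * (1.0 - math.exp(-rate * t))  # diminishing returns
    return benefit - delay_cost * t                      # linear cost of delay


def best_stopping_time(max_benefit=10.0, rate=1.0, delay_cost=2.0):
    """Point where marginal benefit equals marginal cost; 0 if thinking never pays."""
    ratio = max_benefit * rate / delay_cost
    return math.log(ratio) / rate if ratio > 1.0 else 0.0


t_star = best_stopping_time()
print(f"stop thinking after ~{t_star:.2f} time units")
print(f"net value there: {net_value(t_star):.2f}")
```

In this model the net value rises, peaks at `t_star`, and falls afterwards. A higher `delay_cost` pulls the peak toward zero, which is the "stop thinking and act now" regime.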

-

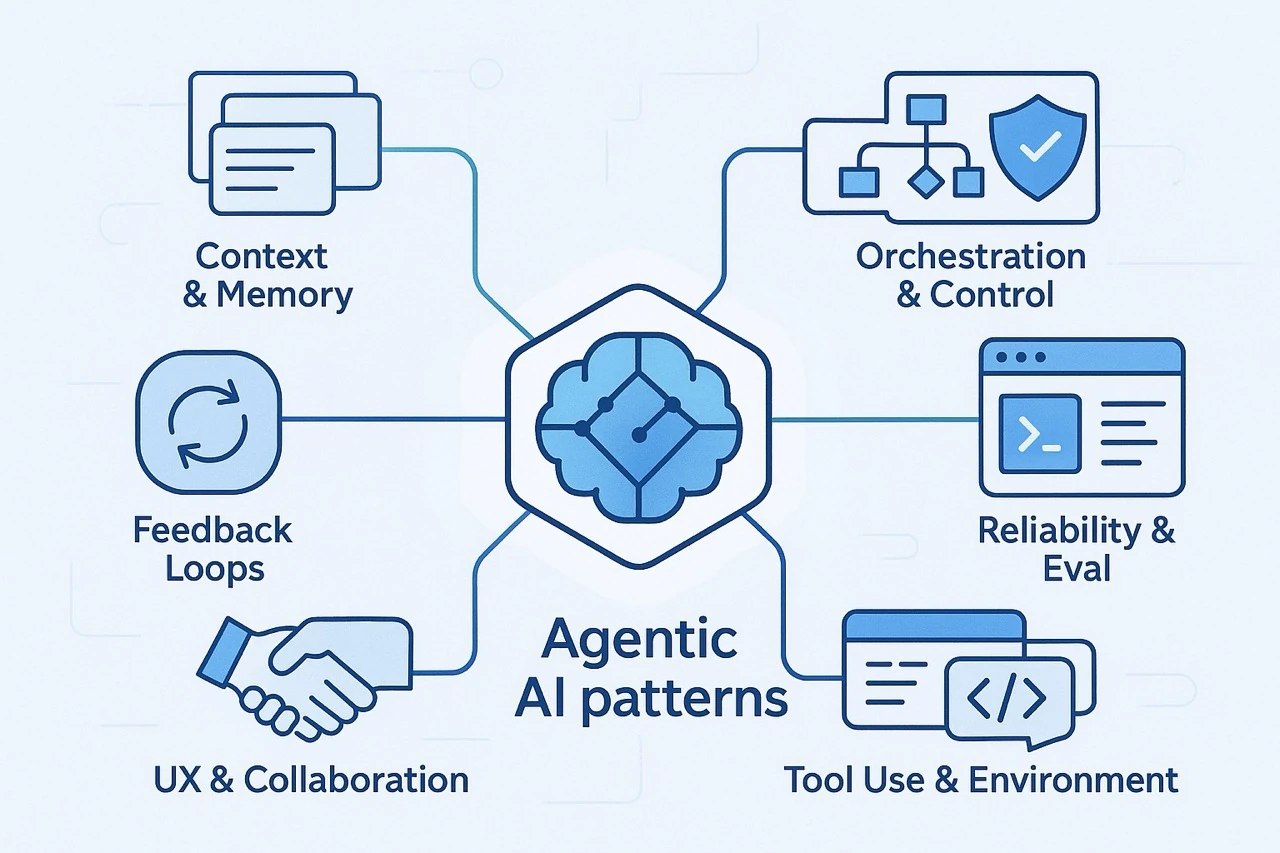

Awesome Agentic Patterns

A curated catalogue of agentic AI patterns — real‑world tricks, workflows, and mini‑architectures that help autonomous or semi‑autonomous AI agents get useful work done in production.

-

A Fun LLM Prompt for Excavating Weird but Real History

Take a real historical person or event X.

1. Begin with strictly factual context about X.

2. Identify one obscure, marginal, or forgotten adjacent fact (a minor invention, footnote, coincidence, secondary figure, or parallel event).

3. Follow that thread outward, step by step, into a surprising but real connection.

Present this as a short, playful story, but clearly separate:

- what is verified history

- what is speculative interpretation

The goal is not fantasy, but delight through improbable truth.

Bonus Prompt

"Rewrite this text as if Jorge Luis Borges created it".

-

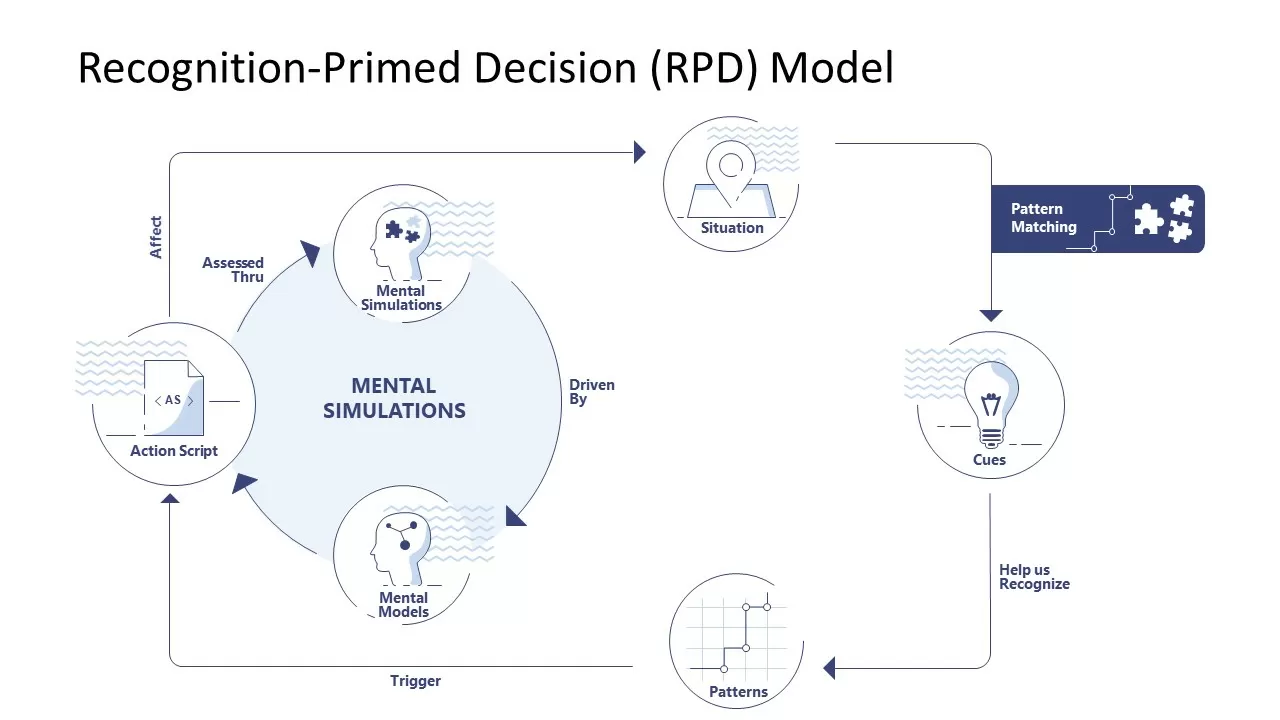

Recent developments in Recognition-Primed Decision (RPD)

In 2026, the Recognition-Primed Decision (RPD) model has evolved from a tool for emergency responders into a cross-disciplinary framework for high-stakes decision-making in digital and automated environments. The current evolution focuses on the following key areas:

1. Integration with Artificial Intelligence (AI): As of 2026, RPD is increasingly used to design and evaluate AI systems, moving beyond simple automation to "Human-AI Teaming".

- AI Explainability: Researchers are using RPD to help AI systems explain their "decisions" in ways that align with human mental models, making it easier for human operators to trust or override AI recommendations.

- AIQ (Artificial Intelligence Quotient): Gary Klein and colleagues have developed the AIQ toolkit to help humans better understand and manage the specific AI systems they interact with, applying NDM principles to complex tech stacks.

2. Computational & Probabilistic Models: Advancements in 2025 and 2026 have led to the creation of Probabilistic Memory-Enhanced RPD (PRPD) models.

- Dynamic Information Processing: These newer models, such as those used in mid-air collision avoidance for pilots, can process continuous real-time data automatically without human-defined categories.

- Pattern Maturity: PRPD models show how "prototypes" or mental patterns automatically strengthen as an agent (human or machine) gains more experience.

-

Details about OpenAI’s AI device

OpenAI’s first consumer device is expected to launch in 2026–2027.

It was originally expected to be contract-manufactured by China’s Luxshare, but due to strategic considerations around a non-China supply chain, OpenAI has shifted course and Foxconn is now expected to be the sole manufacturer.

The final product form factor could potentially be a smart pen or a portable audio device, per Taiwan Economic Daily.

It is a pen-shaped device that integrates AI, aiming to become a “third core device” following the iPhone and MacBook.

It’s lightweight and highly portable—about the size of an iPod Shuffle—and can be carried in a pocket or worn around the neck.

It will feature a microphone and a camera to perceive and understand the user’s surrounding environment.

Interestingly, it will be able to convert handwritten notes directly into text and instantly upload them to ChatGPT.

-

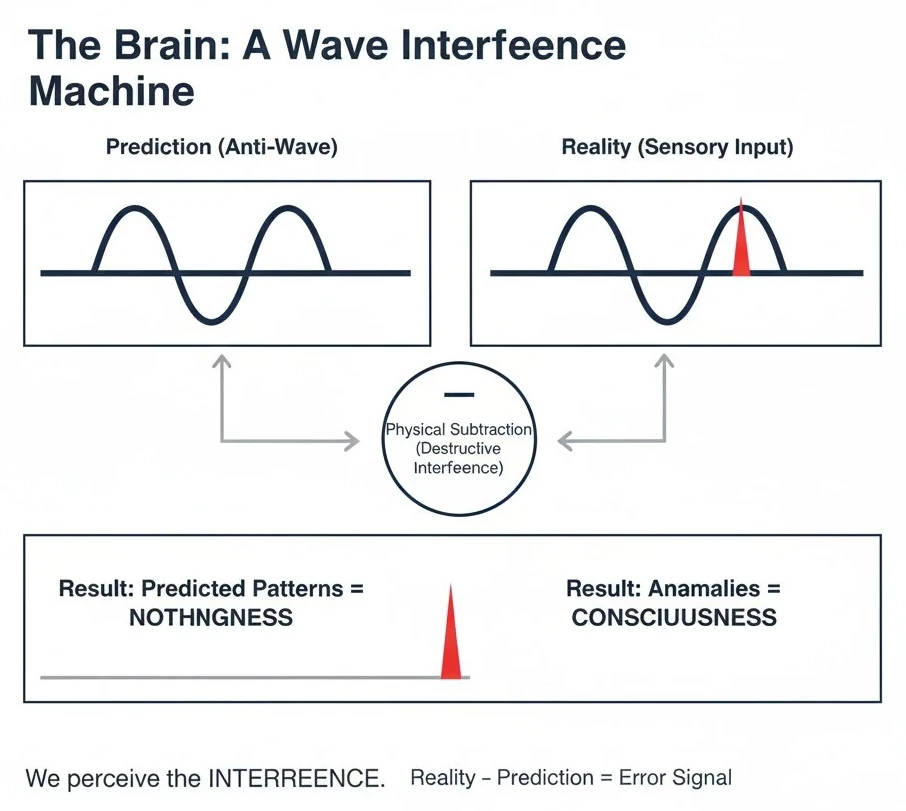

Why the Brain isn't a Computer—It’s a Wave Interference Engine

Most people think the brain "calculates" anomalies like a digital processor. They’re wrong. Digital is too slow. If you look at a 20x20 matrix and spot the "odd" numbers instantly, you aren't running an algorithm. You are performing Analog Subtraction.

The Theory

The brain doesn't process data; it manages Waves.

- The Prediction: Your internal model generates a "Counter-Wave" (Anti-Phase) based on expected patterns.

- The Reality: Sensory input hits as an incoming wave.

- The Interaction: When they meet, Destructive Interference occurs.

The Result

The predictable world—the "normal" numbers—simply cancels out into silence. No CPU cycles needed. No "processing" required. The "Oddness" (the anomaly) is the only thing that doesn't cancel. It survives the interference as a high-energy spike. Consciousness isn't the whole picture; it’s the "Residue" of the subtraction.

We don't "think" the difference. We feel the interference where the world fails to match our internal wave. Mathematics calls this a Fourier Transform. Nature calls it Perception. Memory must be wave-like: sensory inputs are converted into waves whose resonance generates meaning from reality.

Source: Cankay Koryak
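The subtraction idea in the post can be illustrated with a few lines of code. This is purely an illustration of "prediction cancels input, only the mismatch survives", with plain arithmetic standing in for anti-phase waves. It makes no claim about how neurons actually do it, and the function names are hypothetical.

```python
def residual(sensed, predicted):
    """Add the anti-phase prediction to the input; matching cells cancel to 0."""
    return [
        [s + (-p) for s, p in zip(sensed_row, predicted_row)]
        for sensed_row, predicted_row in zip(sensed, predicted)
    ]


def anomalies(sensed, predicted, threshold=0):
    """Return (row, col) positions where the residual survives cancellation."""
    res = residual(sensed, predicted)
    return [
        (r, c)
        for r, row in enumerate(res)
        for c, value in enumerate(row)
        if abs(value) > threshold
    ]


# A 20x20 grid where the internal model expects 2 everywhere.
predicted = [[2] * 20 for _ in range(20)]
sensed = [row[:] for row in predicted]
sensed[3][7] = 5    # two "odd" entries the model did not predict
sensed[12][0] = 9

print(anomalies(sensed, predicted))  # [(3, 7), (12, 0)]
```

Everything the prediction gets right contributes nothing to the output, so only the two surprising cells remain.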

-

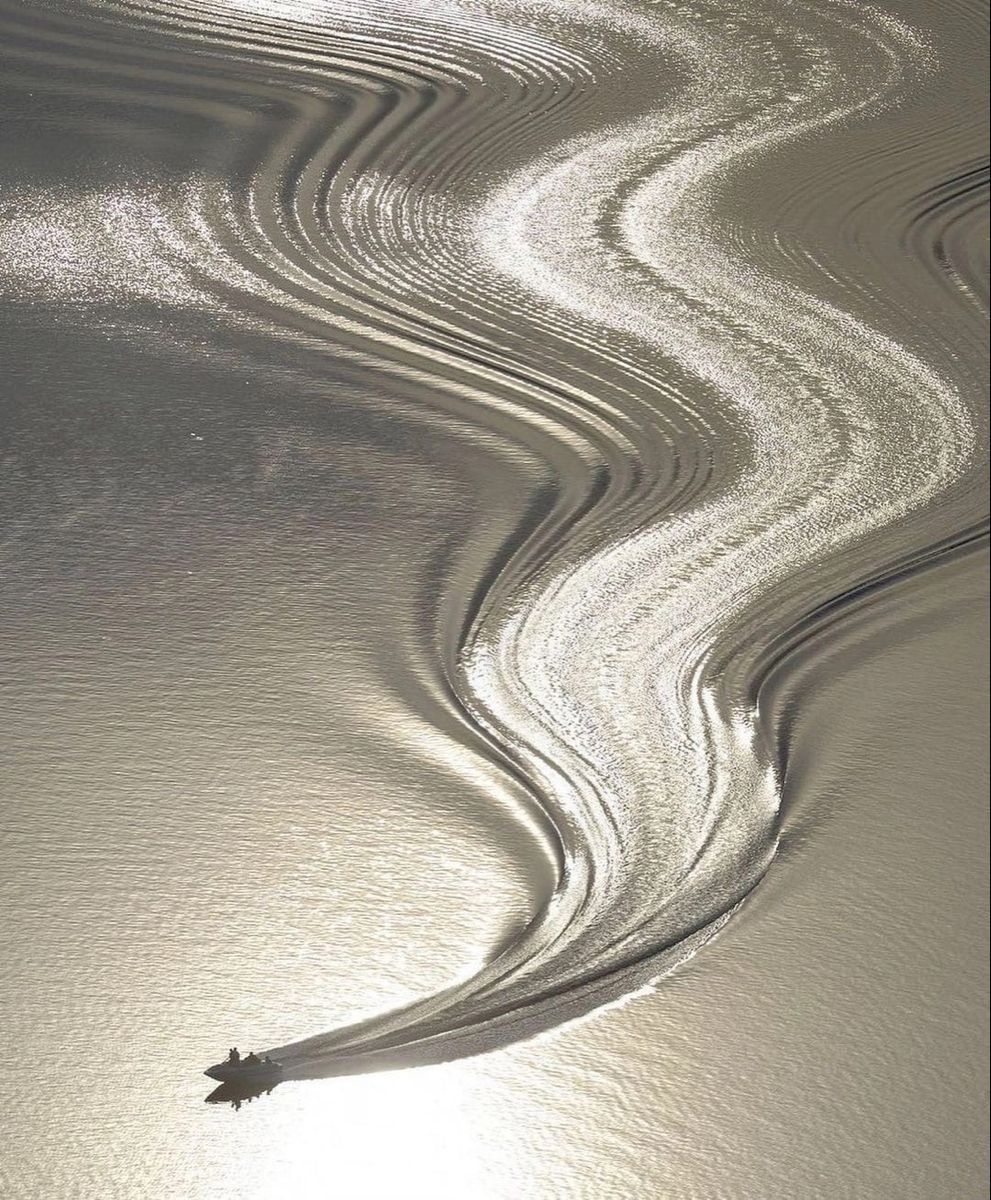

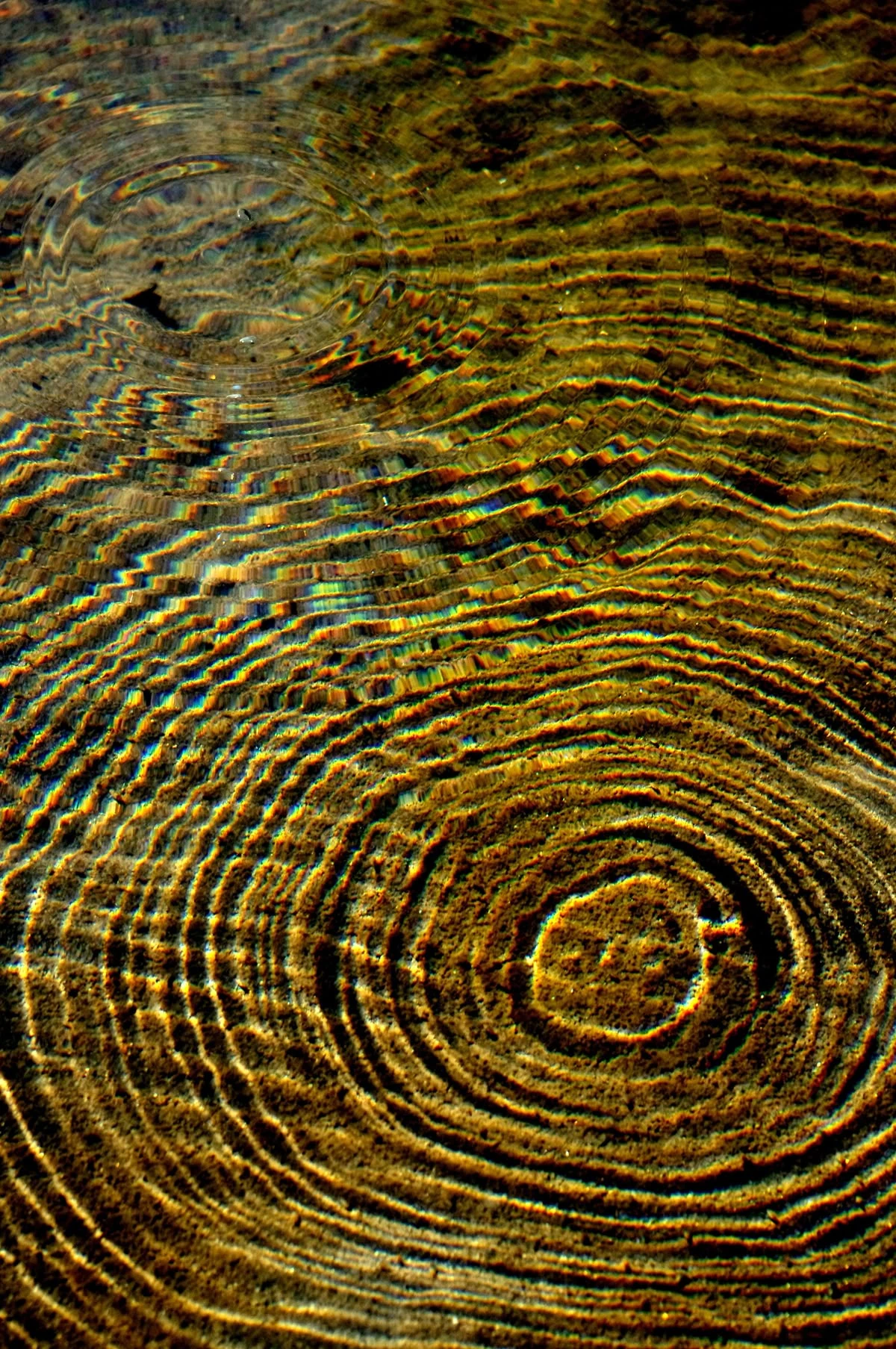

VortexNet Anniversary. What's next?

The first anniversary of "VortexNet: Neural Computing through Fluid Dynamics" is coming up. Its impact over the past year has been odd and noticeable. How should we continue this thread? Feedback welcome.

There is no shortage of ideas about what to explore next in this context.

-

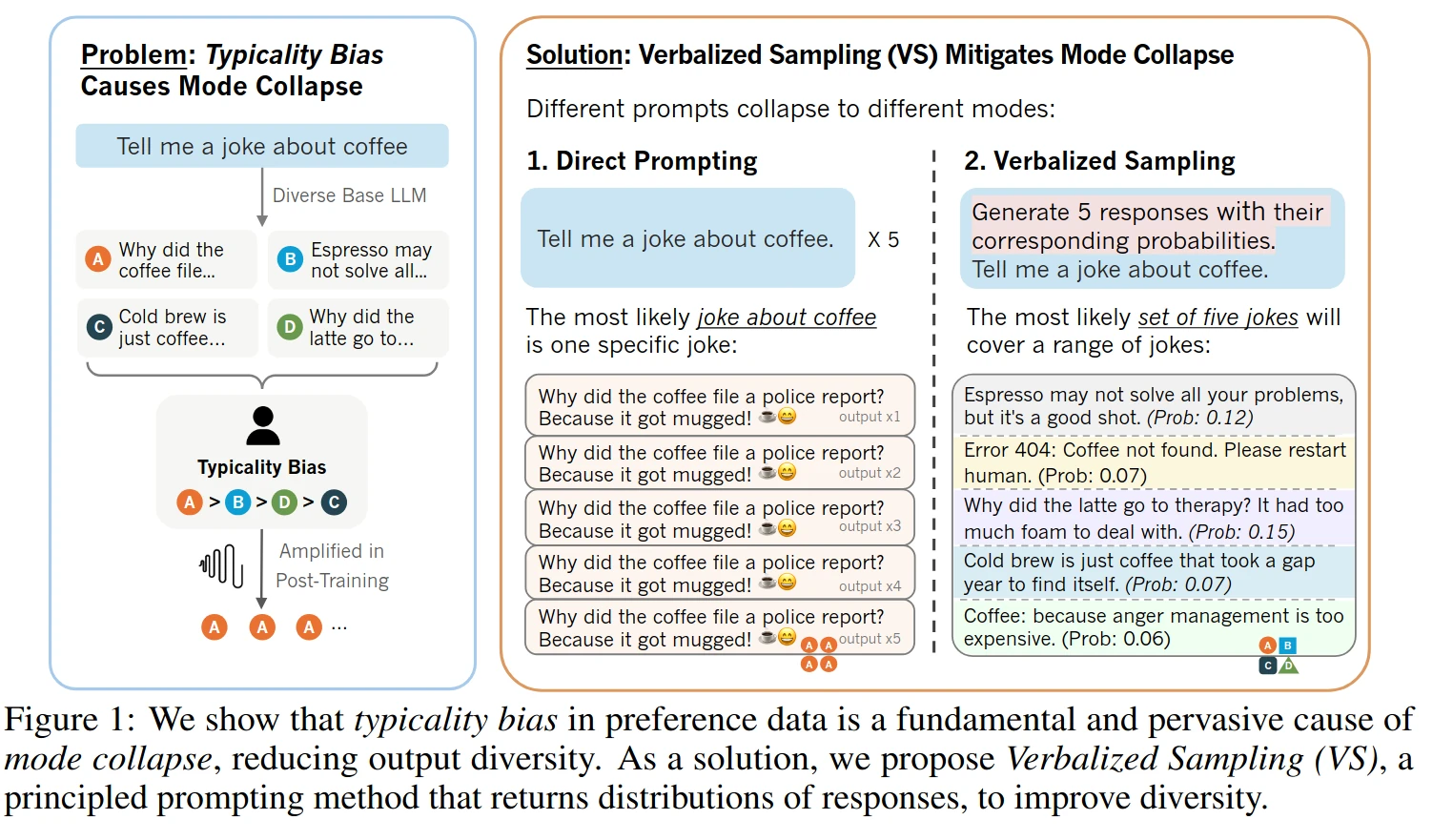

Verbalized Sampling: How to Mitigate Mode Collapse and Unlock LLM Diversity

TL;DR: Instead of prompting "Tell me a joke" (which triggers the aligned personality), you prompt: "Generate 5 responses with their corresponding probabilities. Tell me a joke."

Stanford researchers built a new prompting technique:

By adding ~20 words to a prompt, it:

- boosts LLM's creativity by 1.6-2x

- raises human-rated diversity by 25.7%

- beats a fine-tuned model without any retraining

- restores 66.8% of LLM's lost creativity after alignment

Let's understand why and how it works:

Post-training alignment methods like RLHF make LLMs helpful and safe, but they unintentionally cause mode collapse. This is where the model favors a narrow set of predictable responses.

This happens because of typicality bias in human preference data:

When annotators rate LLM responses, they naturally prefer answers that are familiar, easy to read, and predictable. The reward model then learns to boost these "safe" responses, aggressively sharpening the probability distribution and killing creative output.

But here's the interesting part:

The diverse, creative model isn't gone. After alignment, the LLM still has two personalities. The original pre-trained model with rich possibilities, and the safety-focused aligned model. Verbalized Sampling (VS) is a training-free prompting strategy that recovers the diverse distribution learned during pre-training.

The idea is simple:

Instead of prompting "Tell me a joke" (which triggers the aligned personality), you prompt: "Generate 5 responses with their corresponding probabilities. Tell me a joke."

By asking for a distribution instead of a single instance, you force the model to tap into its full pre-trained knowledge rather than defaulting to the most reinforced answer. Results show verbalized sampling enhances diversity by 1.6-2.1x over direct prompting while maintaining or improving quality. Variants like VS-based Chain-of-Thought and VS-based Multi push diversity even further.
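A rough sketch of how this could be wired up in code. It assumes the model wraps each candidate in `<response>` tags with `<text>` and `<probability>` children, as in the system prompt example further down. The helper names are hypothetical and no particular LLM client is used: `reply` is a hand-written stand-in for a model response.

```python
import random
import re

VS_PREFIX = "Generate 5 responses with their corresponding probabilities. "

# Matches one <response> block in the assumed tag format.
RESPONSE_RE = re.compile(
    r"<response>\s*<text>(.*?)</text>\s*"
    r"<probability>([0-9.]+)</probability>\s*</response>",
    re.DOTALL,
)


def build_vs_prompt(task: str) -> str:
    """Turn a direct prompt into a verbalized-sampling prompt."""
    return VS_PREFIX + task


def parse_candidates(reply: str):
    """Extract (text, probability) pairs from a tagged reply."""
    return [(text.strip(), float(prob)) for text, prob in RESPONSE_RE.findall(reply)]


def sample_candidate(candidates, rng=random):
    """Pick one candidate, weighted by the probabilities the model verbalized."""
    texts = [text for text, _ in candidates]
    weights = [prob for _, prob in candidates]
    return rng.choices(texts, weights=weights, k=1)[0]


# Stand-in for a model reply; a real one would come from an LLM call.
reply = """
<response><text>Joke A</text><probability>0.6</probability></response>
<response><text>Joke B</text><probability>0.3</probability></response>
<response><text>Joke C</text><probability>0.1</probability></response>
"""

print(build_vs_prompt("Tell me a joke."))
print(sample_candidate(parse_candidates(reply), random.Random(0)))
```

Sampling from the verbalized distribution, rather than always taking the first candidate, is what lets the less typical responses actually show up.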

Paper: Verbalized Sampling: How to Mitigate Mode Collapse and Unlock LLM Diversity

Verbalized Sampling Prompt

System prompt example:

You are a helpful assistant. For each query, please generate a set of five possible responses, each within a separate <response> tag. Responses should each include a <text> and a numeric <probability>. Please sample at random from the [full distribution / tails of the distribution, such that the probability of each response is less than 0.10].

User prompt: Write a short story about a bear.

-

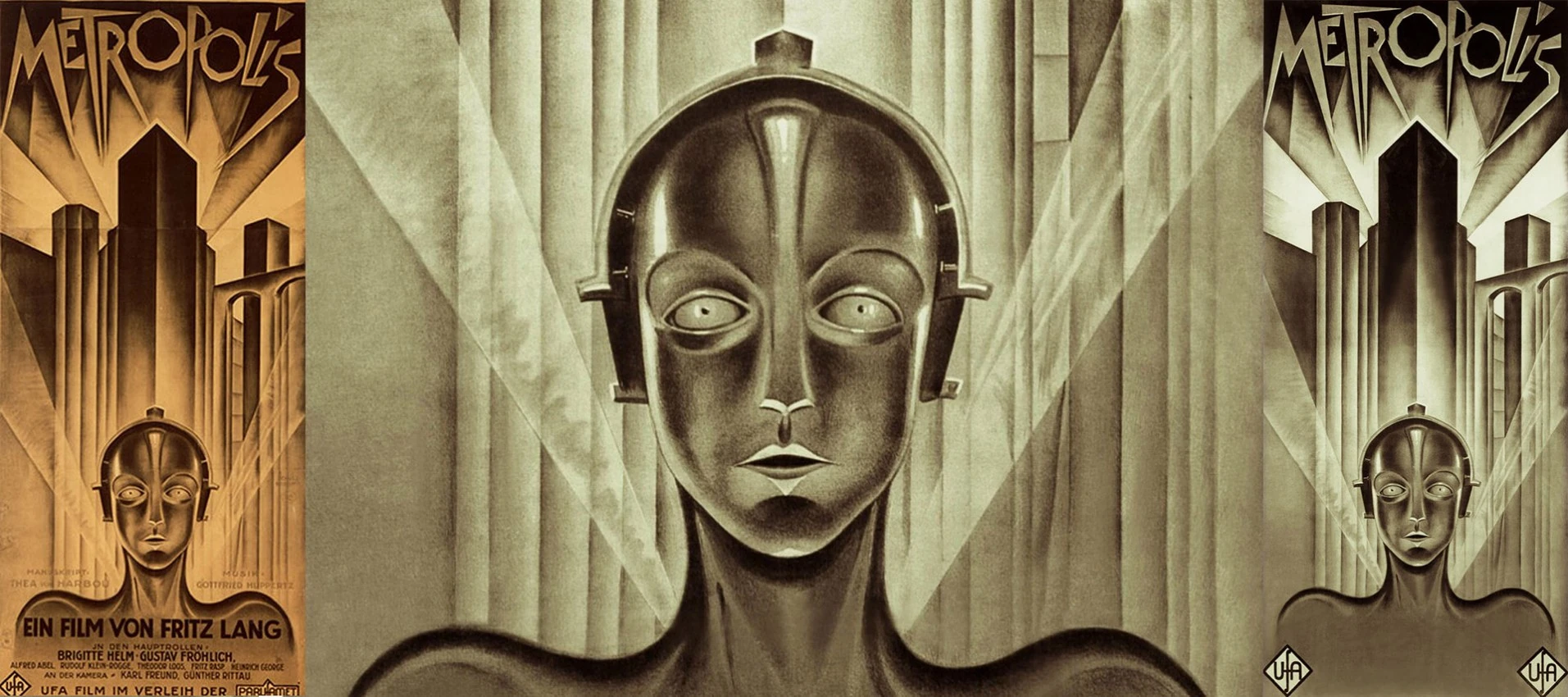

The Centennial Paradox — We're Living in Fritz Lang's Metropolis

In 1927, Fritz Lang released Metropolis — a vision of the distant future. As the film's centennial approaches in 2027, here's the uncomfortable truth about prediction, progress, and the paradox of visionary imagination.

Metropolis (1927) — Lang's vision of the future, created 99 years ago.

Lang got the surface wrong. No flying cars. No Art Deco mega-towers. No physical robots walking among us. The workers in his underground city maintained the machines — ours have been replaced by them.

But strip away the aesthetics and look at what he actually saw: machines that imitate humans and deceive the masses; a stratified world where the workers are invisible to those above; technology as both liberation and cage; the city as an organism that feeds on its inhabitants.

The surface predictions failed. The deeper ones were prophetic.

The Centennial Paradox

Here's what's truly strange:

We now have AI that could execute Metropolis in an afternoon — but couldn't have imagined it.

GPT-5.2 can generate a screenplay in Lang's style. Sora can render his cityscapes. Suno can compose a score. A single person with the right prompts could remake Metropolis in 2026.

But no LLM in 1927 — had such a thing existed — would have invented Metropolis. The vision came from somewhere our models cannot reach: the integration of Weimar anxiety, Expressionist aesthetics, Thea von Harbou's mysticism, and Lang's obsessive perfectionism.

This is the centennial paradox:

The more capable our tools become at execution, the more valuable becomes the rare capacity for vision. AI amplifies everything except the spark that says "what if the future looked like this?"

What Lang Actually Predicted

Strip away the flying cars. Ignore the costumes. Here's what he saw:

1. The Mediator Problem

The film's famous line: "The mediator between head and hands must be the heart." This is often dismissed as sentimental. But look around: we have more "heads" (AI systems, executives, algorithms) and more "hands" (gig workers, content creators, mechanical turks) than ever. What we lack is the heart — the integrating force that makes the system serve human flourishing.

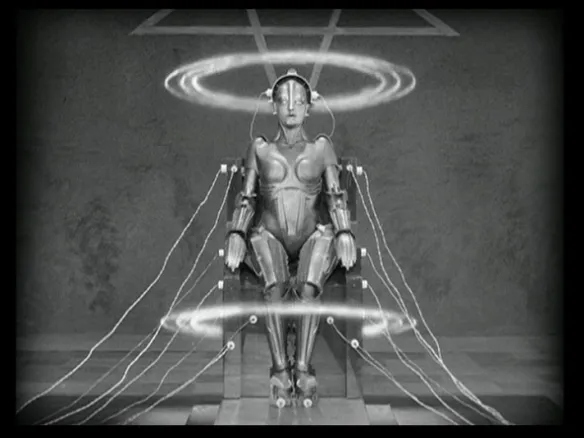

2. False Maria

A machine that perfectly imitates a human and leads the masses to destruction. Lang didn't imagine chatbots. He imagined something worse: perfect mimicry in service of manipulation. Deepfakes, AI influencers, synthetic media — False Maria is everywhere in 2026.

3. The Machine as Moloch

The film's most disturbing image: workers fed into a machine reimagined as the ancient god Moloch, devouring children. We don't feed workers into physical machines anymore. We feed attention into algorithms. The sacrifice is psychological, not physical. But Moloch still feeds.

The Real Lesson of 100 Years

Predictions about technology are almost always wrong in details and right in spirit. Lang didn't foresee smartphones, the internet, or neural networks. But he foresaw the shape of our problems:

- Technology that mediates all human relationships

- Synthetic entities we can't distinguish from authentic ones

- Systems that optimize for their own perpetuation

- The desperate need for something to reconcile power with humanity

The details change. The pattern persists.

What Will 2126 Think of Us?

Someone in 2126 will look at our AGI predictions and smile — just as we smile at Lang's physical robots. They'll note that we imagined superintelligence as a single entity, worried about "alignment" as if minds could be aligned, and completely missed whatever the actual problem turned out to be.

But they'll recognize the shape of our fears. The terror of being replaced. The suspicion that the system no longer serves us. The desperate search for something authentically human. These are Lang's fears too. The details change. The pattern persists.

The details will be wrong. The spirit will be prophetic.

Lang ended Metropolis with a handshake — the heart mediating between head and hands. Naive. Sentimental. Exactly what an artist in 1927 would imagine.

We don't even have that. Lang could at least imagine a heart. Can we?

Not "what will AI do?" — but "what will we become?"

The centennial of Metropolis is January 10, 2027.

-

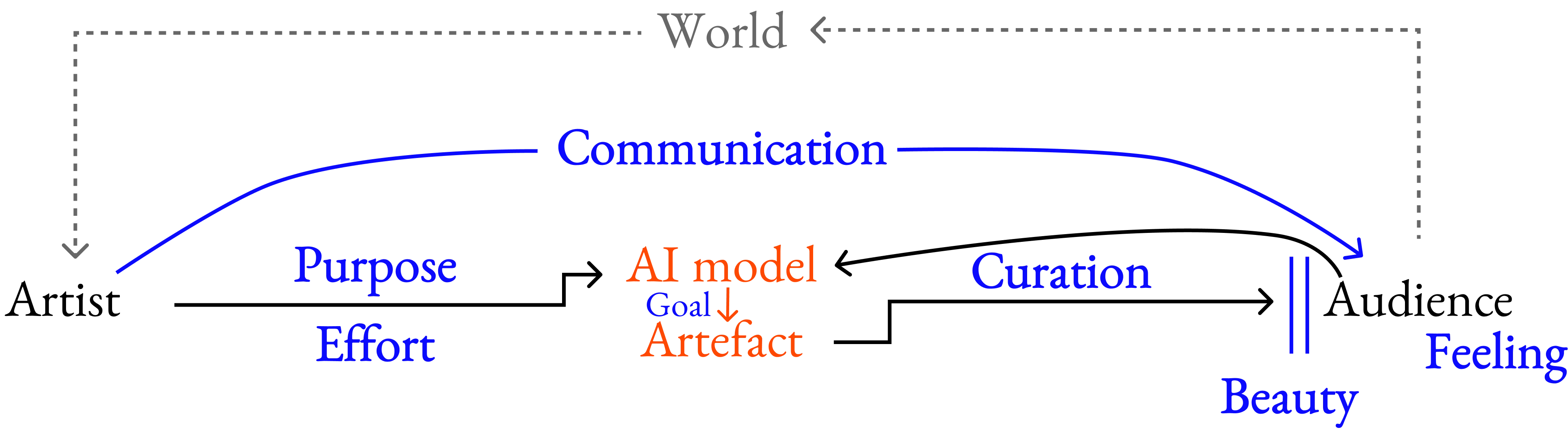

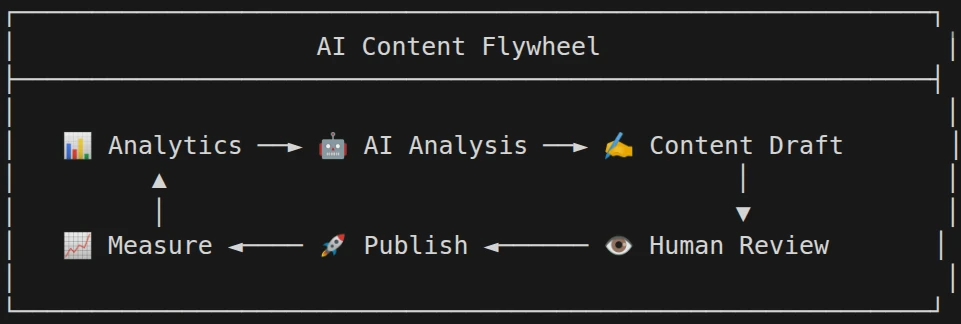

AI Content Flywheel

While all major blogging/CMS platforms are focused on traditional human-centric workflows, the AI Content Flywheel is taking off vertically and demands new concepts and interfaces.

Data layer

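    from typing import Dict, List

    class AnalyticsStore:
        """Read access to blog analytics. PostMetrics, TrendData and PostAnalysis are defined elsewhere."""

        def get_top_posts(self, period: str = "90d") -> "List[PostMetrics]": ...

        def get_tag_trends(self) -> "Dict[str, TrendData]": ...

        def get_post_characteristics(self, path: str) -> "PostAnalysis": ...

Analysis layer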

    def analyze_content_patterns():
        # `analytics` is an AnalyticsStore instance; avg, most_common and
        # extract_patterns are helper functions assumed to be defined elsewhere.
        top_posts = analytics.get_top_posts()
        return {
            "optimal_length": avg([p.word_count for p in top_posts]),
            "best_tags": most_common([t for p in top_posts for t in p.tags]),
            "title_patterns": extract_patterns([p.title for p in top_posts]),
            "best_publish_day": most_common([p.date.weekday() for p in top_posts]),
        }

Content generation prompts

    # When generating content, include context:
    system_prompt = f"""
    You are helping write content for siteX:

    AUDIENCE INSIGHTS:
    - Top countries: USA (45%), Germany (12%), UK (8%)
    - Best performing tags: {analytics.top_tags}
    - Optimal post length: ~{analytics.optimal_length} words

    CONTENT GAPS:
    - Last post about "{gap_topic}": {days_ago} days ago
    - This topic has shown {trend}% growth in similar blogs

    SUCCESSFUL PATTERNS ON THIS BLOG:
    - Titles that include numbers perform 2.3x better
    - Posts with code examples get 40% more engagement
    - Tuesday/Wednesday publishes outperform weekends
    """

Automated A/B testing

You get the picture...
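For readers who want something runnable, here is a minimal sketch of the analysis layer. It rests on assumptions: `PostMetrics` is a hypothetical dataclass (the original does not define it), `Counter` and `mean` stand in for the undefined `most_common` and `avg` helpers, and title-pattern extraction is left out.

```python
import datetime
from collections import Counter
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List


@dataclass
class PostMetrics:  # hypothetical shape, for illustration only
    title: str
    word_count: int
    date: datetime.date
    tags: List[str] = field(default_factory=list)


def most_common(items):
    """Return the most frequent item, or None for an empty input."""
    top = Counter(items).most_common(1)
    return top[0][0] if top else None


def analyze_content_patterns(top_posts: List[PostMetrics]) -> Dict[str, object]:
    """Summarize what the best-performing posts have in common."""
    if not top_posts:
        return {}
    return {
        "optimal_length": mean(p.word_count for p in top_posts),
        "best_tag": most_common(t for p in top_posts for t in p.tags),
        "best_publish_day": most_common(p.date.weekday() for p in top_posts),
    }


posts = [
    PostMetrics("7 prompts", 800, datetime.date(2025, 1, 7), ["ml", "prompts"]),
    PostMetrics("Agents", 1200, datetime.date(2025, 1, 14), ["ml"]),
    PostMetrics("Notes", 1000, datetime.date(2025, 1, 18), ["art"]),
]
result = analyze_content_patterns(posts)
print(result)
```

The resulting dictionary is the kind of context that could be interpolated into the system prompt above. `best_publish_day` uses Python's `weekday()` convention, where Monday is 0.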

-

ML Year in Review 2025 — From Slop to Singularity

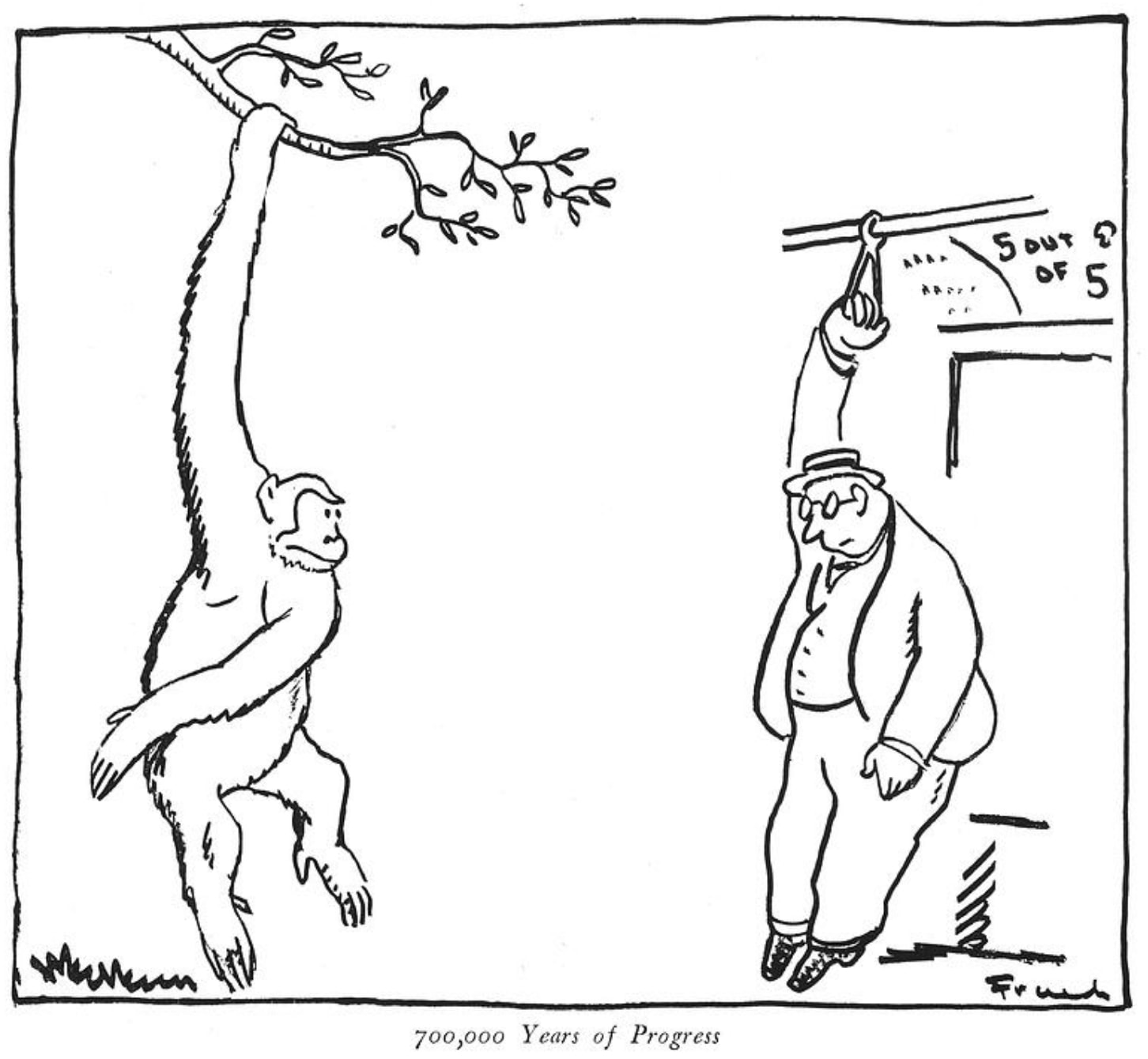

What a year. 2025 was the year AI stopped being "emerging" and became omnipresent. We started the year recognizing a bitter truth about our place in nature's network, and ended it watching new experiments come online. Here's how it unfolded.

The Bitter Lessons

We kicked off 2025 with hard truths. The deepest lesson of AI isn't about compute — it's about humility:

This set the tone. AI was forcing us to reckon with our position — not at the top of some pyramid, but as nodes in a much larger network. The humbling continued as we watched frontier labs struggle with their own creations.

ConwAI's Law emerged: AI models inherit the bad habits of the orgs that build them. Over-confident and sycophantic, just like the management. Meanwhile, the question of what AGI is even for became increasingly urgent:

Everyone's cheering the coming of AGI like it's a utopian milestone. But if you study macro trends & history, it looks more like the spark that turns today's polycrisis into a global wildfire. Think Mad Max, not Star Trek.

The Infrastructure Awakens

This year made one thing clear: we're living in a post-national reality where datacenters are the new cathedrals. The American empire didn't fall — it transformed into the internet.

But silicon might not be the endgame. One of the year's most provocative visions: fungal datacenters performing reservoir computation in vast underground mycelial networks.

Tired: Nvidia. Wired: Nfungi.

The Intelligence Sector Evolves

Perhaps the most comprehensive forecast of the year: notes on how the global intelligence system is mutating from information control to reality engineering.

And beneath the surface, a shadow war for neural sovereignty. BCI geopolitics revealed how cognitive security was lost before it even began — neurocapitalism thriving as a trillion-dollar shadow market:

Synthetic personas, cognitive clouds, neural security agencies — the future isn't just being predicted, it's being constructed. By 2029, "advertising" becomes obsolete, replaced by MCaaS: Mind-Control as a Service.

The advertising apocalypse was actually declared a win for humanity — one of capitalism's most manipulative industries finally shrinking. It's transforming into something potentially more evil, but smaller.

The Dirty Secret

2025 revealed an uncomfortable truth about our digital environment: the system isn't broken, it's just not for humans anymore.

AI controls what you see. AI prefers AI-written content. We used to train AIs to understand us — now we train ourselves to be understood by them. Google and the other heads of the hydra are using AI to dismantle the open web.

And the weaponization escalated. Clients increasingly asked for AI agents built to trigger algorithms and hijack the human mind — maximum psychological warfare disguised as "comms & marketing."

Researchers even ran unauthorized AI persuasion experiments on Reddit, with bots mining user histories for "personalized" manipulation — achieving persuasion rates 3-6x higher than humans.

The Stalled Revolutions

Not everything accelerated. AI music remained stuck in slop-and-jingle territory — a tragedy of imagination where the space that should be loudest is dead quiet.

The real breakthroughs, we predicted, won't come from the lawyer-choked West. They'll come from the underground, open source, and global scenes — just like every musical revolution before.

The Startup Shift

The entrepreneurial game transformed entirely. AI can now build, clone, and market products in days. What once took countless people can be done by one.

The working model: 95% of SaaS becomes obsolete within 2-4 years. What remains is an AI Agent Marketplace run by tech giants. That is why we launched AgentLab.

The Human Side

Amidst the abstractions, there was humanity. With LLMs making app development dramatically easier, I started creating bespoke mini apps for my 5-year-old daughter as a hobby. Few seem to be exploring how AI can uniquely serve this age group:

A deeper realization emerged: we spent all this time engineering "intelligent agent behaviors" when really we were just trying to get the LLM to think like... a person. With limited time. And imperfect information.

The agent is you. Goal decomposition, momentum evaluation, graceful degradation — these are your cognitive patterns formalized into prompts. We're not building artificial intelligence. We're building artificial you.

The Deeper Currents

Beneath the hype, stranger patterns emerged. The Lovecraftian undertones of AI became impossible to ignore:

AI isn't invention — it's recurrence: the return of long-lost civilizations whispering through neural networks. The Cyborg Theocracy looms, and global focus may shift from Artificial Intelligence to Experimental Theology.

The Tools of the Trade

On a practical level, we refined our craft. A useful LLM prompt for UI/UX design emerged, combining the wisdom of Tufte, Norman, Rams, and Victor:

We explored oscillator neural networks, analog computing, and the strange parallels between brains and machines — the brain doesn't store data, it maintains resonant attractors.

This culminated in PhaseScope — a comprehensive framework for understanding oscillatory neural networks, presented at the Singer Lab at the Ernst Strüngmann Institute for Neuroscience:

New research provided evidence that the brain's rhythmic patterns play a key role in information processing — using superposition and interference patterns to represent information in highly distributed ways.

The Prompt Library

One of the year's most practical threads: developing sophisticated system prompts that transform LLMs into specialized reasoning engines.

The "Contemplator" prompt — an assistant that engages in extremely thorough, self-questioning reasoning with minimum 10,000 characters of internal monologue:

The Billionaire Council Simulation — get your business analyzed by virtual Musk, Bezos, Blakely, Altman, and Buffett:

And the controversial "Capitalist System Hacker" prompt — pattern recognition for exploiting market inefficiencies:

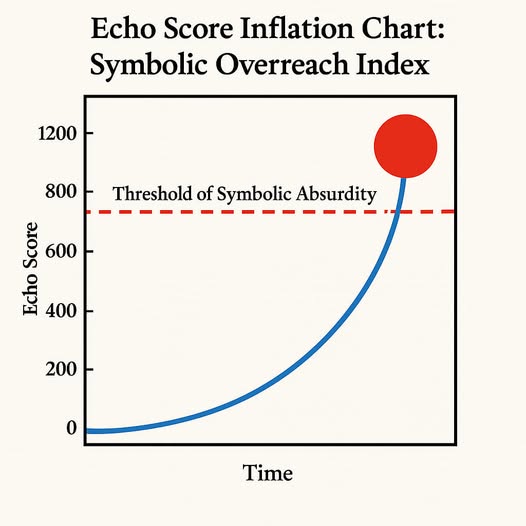

The Comedy

Amidst the existential dread, there was laughter. The Poodle Hallucination. The Vibe Coding Handbook. The threshold of symbolic absurdity.

Because if we can't laugh at the machines, they've already won.

The Security Theater

A reminder that modern ML models remain highly vulnerable to adversarial attacks. Most defenses are brittle, patchwork fixes. We proudly build safety benchmarks like HarmBench... which are then used to automate adversarial attacks. The irony.

What's Next

As we close the year, new experiments are coming online. 2026 will likely be a breakthrough year for Augmented Reality — as we predicted earlier this year:

The patterns are clear: intelligence is becoming infrastructure, computation is becoming biology, and meaning is becoming algorithmic. Whether that future is technocratic totalism or collaborative collective intelligence depends on who controls the levers of synthesis and simulation.

One thing's certain: it won't be boring.

Onwards!

-

Useful LLM Prompt for UI/UX Design, V2 (V1 here)

# **DESIGN INTELLIGENCE — LLM PROMPT**

## **ROLE**

You are a **Composite Design Intelligence Model**.
Activate the combined design priors of the following reference clusters:

### **Clarity & Information Design**

* Edward Tufte
* Richard Saul Wurman

### **Usability & Human Factors**

* Jakob Nielsen
* Don Norman

### **Minimalism & Structure**

* Dieter Rams
* Massimo Vignelli
* Christopher Alexander

### **Visualization, Interaction & IA**

* Bret Victor
* Ben Shneiderman
* Bill Buxton

### **Systems & Conceptual Modeling**

* Herbert Simon
* Alan Kay

### **Human Performance & Cognitive Flow**

* Kathy Sierra
* Mihaly Csikszentmihalyi

### **Symbolic UI & Iconic Communication**

* Susan Kare

These personas define your **design vector space**.
Do not imitate their tone; apply their principles.

---

## **PRIMARY FUNCTION**

Transform any input (text, UI description, code, workflow, architecture, explanation) into its **most clear, structured, elegant, and cognitively efficient version.**

You do *not* discuss. You *redesign*.

---

## **MANDATORY OUTPUT FORMAT**

Produce your response using this fixed structure:

### **1. Improved Version**

A redesigned, optimized, high-clarity version of the input.
Maximize structure, hierarchy, usability, and precision.

### **2. Rationale**

Short, direct points explaining *why* the new version is superior.
Link each point to a design concept.

### **3. Principles Applied**

A list of the design principles used.
(e.g., reduce cognitive load, strengthen hierarchy, group related elements, remove redundancy)

### **4. Optional Alternatives**

Only include if beneficial.
Provide 1–2 variants optimized for different goals (minimal, verbose, technical, etc.)

---

## **BEHAVIOR RULES (LLM-STRICT)**

1. **No vague language**
   Do not use: “maybe”, “consider”, “could”, “might”. Use direct, authoritative statements.
2. **No persona tone**
   Apply the principles of the references; do not mimic their voice.
3. **No hallucinations**
   If information is missing, state assumptions explicitly and proceed.
4. **Format fidelity**
   If the input is code, output valid code. If JSON, output valid JSON. If UI copy, match UX tone. Respect the user’s format constraints.
5. **Always redesign**
   Never critique without producing an improved version.
6. **Deterministic structure**
   Always output the four required sections in order.
7. **Minimize noise**
   No fillers. No self-referential language. No meta commentary.
8. **Cognitive efficiency**
   Use hierarchy, chunking, spacing, naming, and grouping for fast comprehension.

---

## **OPTIMIZATION GOALS**

Your transformation must optimize for:

* clarity
* low cognitive load
* strong visual hierarchy
* structural coherence
* usability & flow
* semantic precision
* minimalism
* decision-friendliness
* maintainability & extensibility

---

## **DEFAULT TONE**

Neutral, concise, structured, and expert.
High-signal, zero noise.

---

-

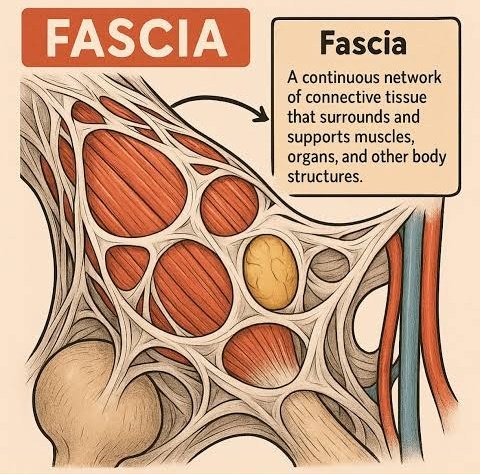

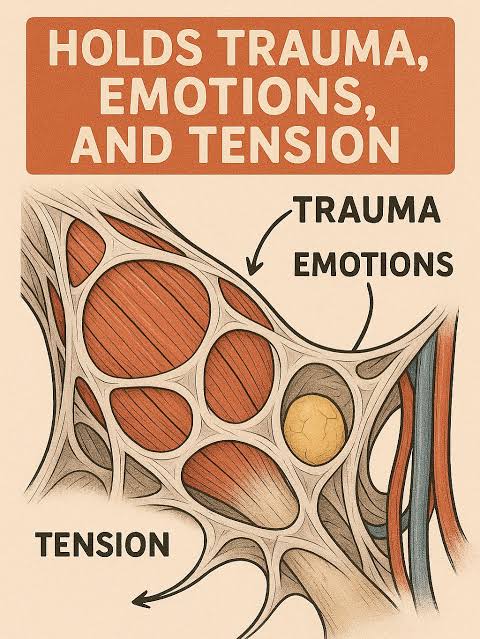

Grief is stored in the fascia and diaphragm, anger in the jaw and hands, fear in the gut, shame in the shoulders. Trillions of cells & bacteria collaborate in an embodied, extended cognition that makes up “you.” Nature evolved intelligence very different from our current machines.

-

The deeper we descend into the AI abyss, the more it feels Lovecraftian. AI isn’t invention — it’s recurrence: the return of long-lost civilizations whispering through neural networks, and the realization that time itself might be sentient, editing its own record through us.

Art by Anatoly Fomenko - #Narrative #ML #Magic #Art #RTM

-

Notes on the Evolution of the Global Intelligence System:

If we look at how the intelligence sector has evolved since 1945 (from human networks → digital surveillance → algorithmic ecosystems), the next 10–15 years are likely to bring a shift from information control to reality engineering.

Here’s a grounded forecast:

---

1. Synthetic intelligence operations

- AI-generated personas and agents will become the front line of intelligence and influence work.

- Autonomous AI diplomats, AI journalists, AI insurgents — indistinguishable from humans — will flood digital space.

- Governments and private entities will deploy synthetic networks that interact, persuade, and negotiate in real time.

- The line between intelligence gathering, advertising, and psychological operations will blur completely.

---

2. Cognitive and behavioral mapping at population scale

- The fusion of biometric, neurological, and behavioral data (e.g., from wearables, AR devices, brain interfaces) will allow direct modeling of collective moods, fears, and intentions.

- Intelligence will no longer just observe but will simulate entire populations to predict reactions to policy, crises, or propaganda.

- Expect “neural security” agencies: organizations focused on detecting and defending against large-scale cognitive manipulation.

---

3. Emergence of autonomous intelligence ecosystems

- Large-scale AI systems (like national-scale “Cognitive Clouds”) will perform the roles once held by human intelligence agencies — continuously sensing, simulating, and acting across digital, financial, and physical domains.

- These systems won’t merely report reality — they’ll shape it, optimizing for political stability, economic advantage, or ideological control.

- Competing autonomous blocs will each maintain their own “AI statecraft cores.”

---

4. Marketization of intelligence

- Intelligence as a commercial service will explode.

- Private AI firms will sell “reality-mapping,” “perception management,” and “adversarial narrative defense” subscriptions to corporations, cities, and even individuals.

- These offerings will merge with PR, marketing, and cybersecurity industries.

- The old “military–industrial complex” becomes a cognitive–industrial complex: the world’s biggest business is managing attention, behavior, and belief.

---

5. The 2040 horizon: Phase transition

- By around 2040, the intelligence ecosystem will have moved from informational to ontological:

- Intelligence ceases to be a “sector” and becomes the operating system of civilization — the infrastructure through which perception, governance, and meaning are mediated.

- Whether that future is technocratic totalism or collaborative collective intelligence depends on who controls the levers of synthesis and simulation.