-

For most use cases, ChatGPT o3-mini-high is a horrible model, despite the propaganda.

-

Perspective: 155,000 people didn't wake up this morning, so have some respect for yourself.

-

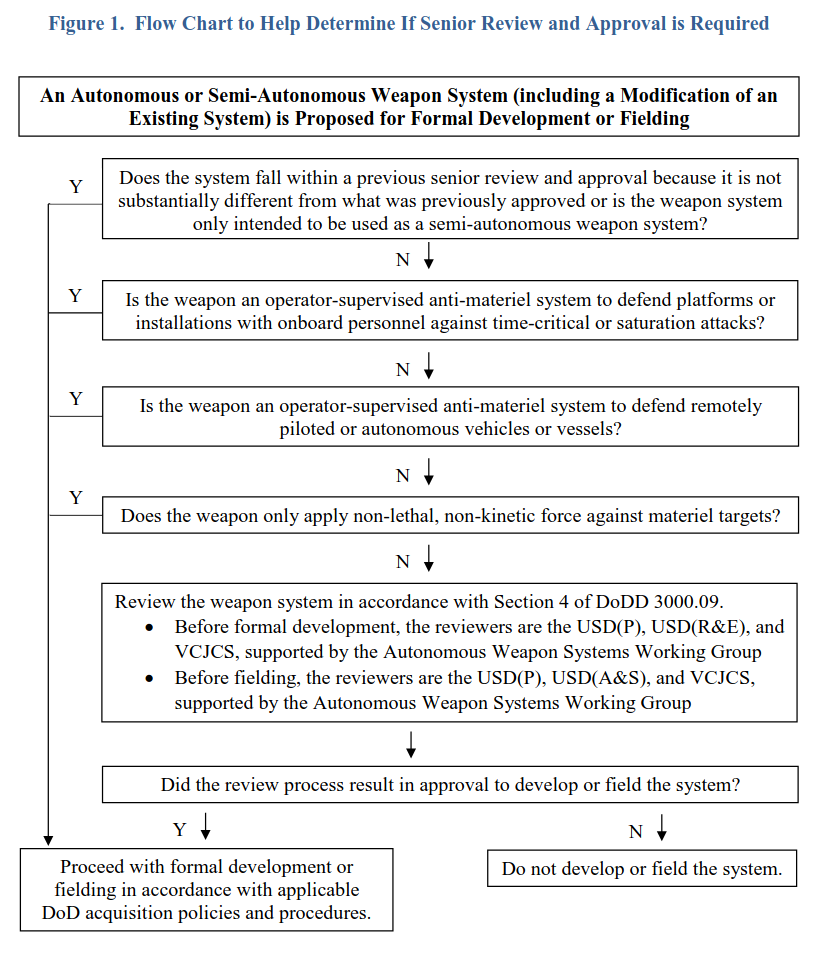

DOD DIRECTIVE 3000.09 - AUTONOMY IN WEAPON SYSTEMS

This document explains how the Department of Defense (DoD) reviews and approves new autonomous weapon systems (such as drones or robotic systems that can choose and engage targets on their own). Here's a breakdown in plain language:

1. Who Must Approve These Systems:

- Any autonomous weapon system that falls outside the categories the directive already permits must get a high-level "senior review" before formal development begins and again before it is fielded.

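- Top DoD officials must give the green light at these stages: the Under Secretaries of Defense for Policy and for Research and Engineering before development, the Under Secretaries for Policy and for Acquisition and Sustainment before fielding, and the Vice Chairman of the Joint Chiefs of Staff at both.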

2. What the Review Looks For (Before Development):

- Human Oversight: The system must allow commanders or operators to keep control and step in if needed.

- Controlled Operations: It should work within expected time frames, geographic areas, and operational limits. If it can't safely operate within these limits, it should stop its actions or ask for human input.

- Risk Management: The design must account for the possibility of mistakes, such as engaging unintended targets or causing collateral damage, and have safety measures in place.

- Reliability and Security: The system needs robust safety features, strong cybersecurity, and methods to fix any unexpected behavior quickly.

- Ethical Use of AI: If the system uses artificial intelligence, it must follow the DoD's ethical guidelines for AI.

- Legal Review: A preliminary legal check must be completed to ensure that using the system complies with relevant laws and policies.

3. What the Review Looks For (Before Fielding/Deployment):

- Operational Readiness: It must be proven that both the system and the people using it (operators and commanders) understand how it works and can control it appropriately.

- Safety and Security Checks: The system must demonstrate that its safety measures, anti-tamper features, and cybersecurity defenses are effective.

- Training and Procedures: There must be clear training and protocols for operators so they can manage and, if necessary, disable the system safely.

- Ongoing Testing: Plans must be in place to continually test and verify that the system performs reliably, even under realistic and possibly adversarial conditions.

- Final Legal Clearance: A final legal review ensures that the system's use is in line with the law of war and other applicable rules.

4. Exceptions and Urgent Needs:

- In cases where there is an urgent military need, a waiver can be requested to bypass some of these review steps temporarily.

5. Guidance Tools and Support:

- A flowchart in the document helps decide if a particular weapon system needs this detailed senior review.

- A specialized working group, made up of experts from different areas (like policy, research, legal, cybersecurity, and testing), is available to advise on these reviews and to help identify and resolve potential issues.

6. Additional Information:

- The document includes a glossary that explains key terms and acronyms (like AI, CJCS, and USD) so that everyone understands the language used.

- It also lists other related DoD directives and instructions that support or relate to this process.

In summary, the document sets up a careful, multi-step review process to ensure that any new autonomous weapon system is safe, reliable, under meaningful human control, legally sound, and ethically managed before it is developed or deployed.

-

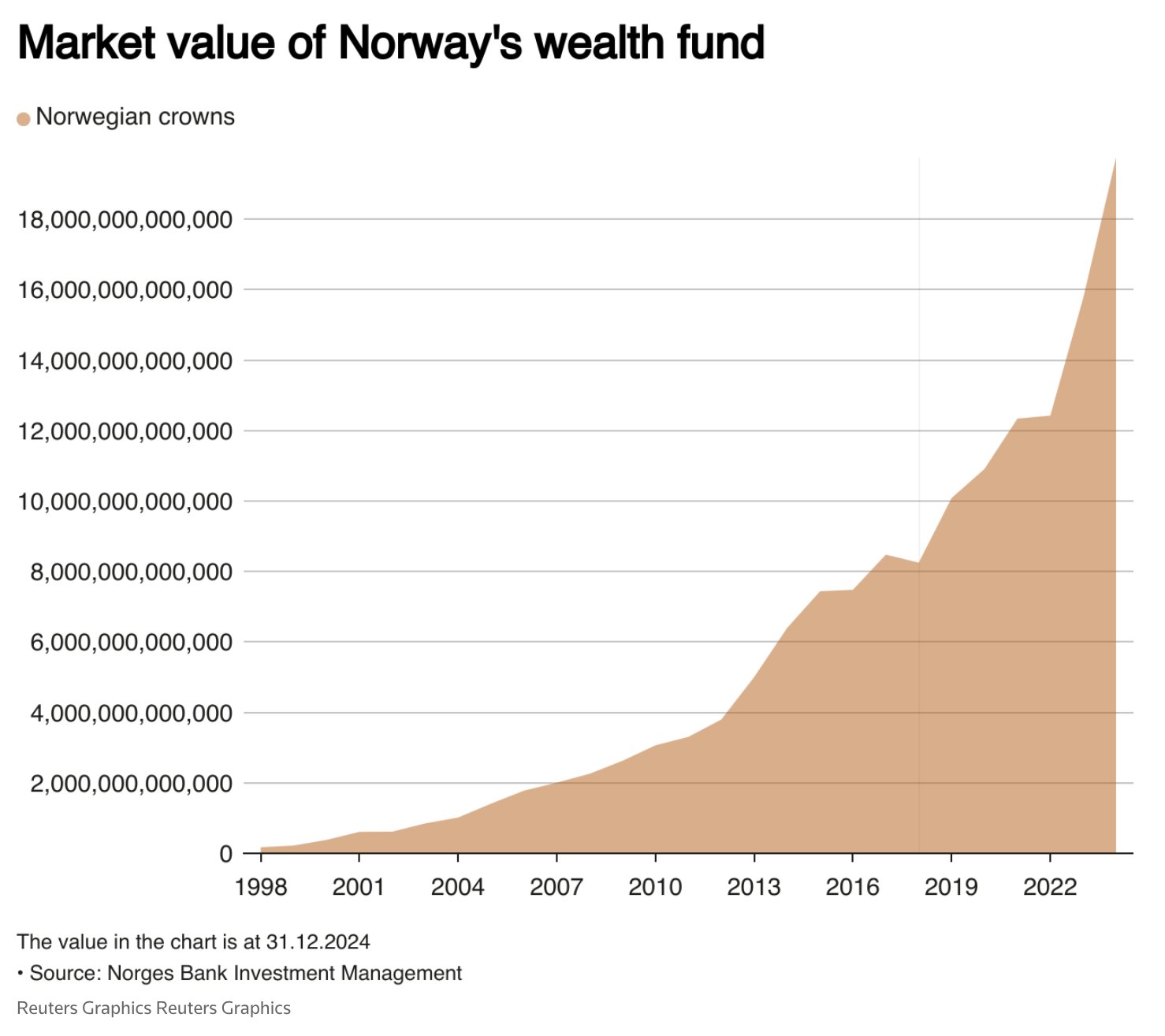

Norway's sovereign wealth fund made $222 billion in profit last year

For comparison, that is roughly 9x NASA's annual budget, enough to build well over 10 Large Hadron Colliders, and more than Russia's annual military budget. It would even cover a substantial share of the annual health expenditure of Germany, a country with about 15x Norway's population. More evidence that Norway can build anything.
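
A quick way to sanity-check comparisons like these is to divide the profit by each reference figure. The sketch below does that in Python. Only the $222 billion comes from the post; every reference value is an assumed round ballpark number chosen for illustration, and the helper name `multiples` is hypothetical.

```python
# Fund profit quoted in the post, in billions of USD.
FUND_PROFIT_BN = 222.0

# ASSUMED ballpark reference figures in billions of USD. These are round
# illustrative numbers, not values from the post; swap in sourced data.
REFERENCE_BN = {
    "NASA annual budget": 25.0,
    "Large Hadron Collider construction": 5.0,
    "Russia annual military budget": 150.0,
    "Germany annual health expenditure": 500.0,
}


def multiples(amount_bn, references_bn):
    """Return how many times each reference figure fits into amount_bn."""
    return {name: amount_bn / value for name, value in references_bn.items()}


if __name__ == "__main__":
    for name, ratio in multiples(FUND_PROFIT_BN, REFERENCE_BN).items():
        print(f"{name}: {ratio:.1f}x")
```

With these assumed inputs, the profit comes out at several multiples of the NASA and LHC figures, above the Russian military figure, and below the German health figure. The result is only as good as the reference numbers fed in.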