
Perspective: The 2-day work week is coming faster than anyone’s ready for. The AI economy takeover isn’t just a threat - it’s humanity’s jailbreak.

The cognitive dissonance most people live with today:

The more I talk to clients across industries, the more I realize almost no one is taking AI seriously. They treat it like a deluxe word processor, completely missing the seismic shift underway. The disconnect is massive. And time’s running out.

Case in point:

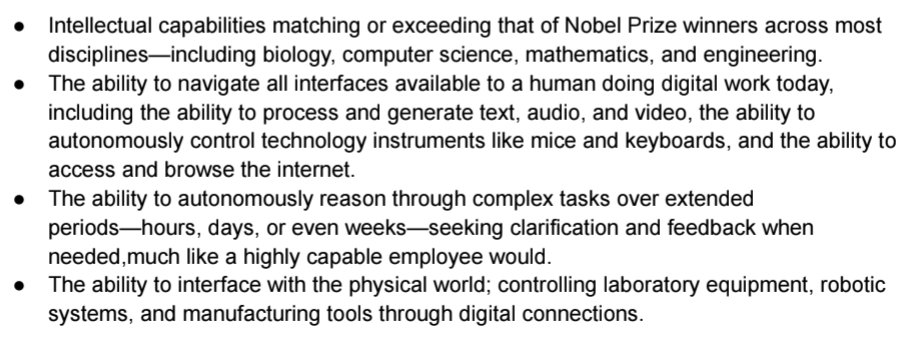

Anthropic submitted their Diffusion Rule recommendations today. From the text:

'Based on current research trajectories, we anticipate that powerful AI technology will be built during this administration, emerging as soon as late 2026 or 2027.'

In this timeframe they anticipate:

-

Paradox is free. It overthrows the tyranny of logic and thus undermines the logic of tyranny. Paradoxes are more subversive than spies, more explosive than bombs, more dangerous than armies, and more trouble than even the President of the United States. They are the weak points in the status quo; they threaten the security of the State. These paradoxes are why the pen is mightier than the sword; a fact which is itself a paradox. - Source

-

It is time for an Epistemic Insurgency. Guerrilla Ontologists, report for duty. Join the Committee for Surrealist Investigation of Claims of the Normal now!

Send in the ontological rescue unit!

-

Cheap AI & robotics will make many formerly exclusive processes available to all:

Synthesize medicines at home, grow food at scale, build micro-factories and microgrids, and much more.

A radically decentralized future is coming, but the elites already have killer drone swarms to stop you.

-

The Orwellian doctrines of 'Freedom of speech, not freedom of reach,' 'flood the zone with shit,' 'manufacture consent,' 'pay-for-play' and 'controlled opposition' are all that's left of Western media. Madness normalized. Congrats. 👏

-

Angry Workers Use AI to Bombard Businesses With Employment Lawsuits

#Comment: AI-powered administrative DDoS-as-a-service (flooding organizations with machine-generated complaints, claims, and filings) will quickly become big business.

-

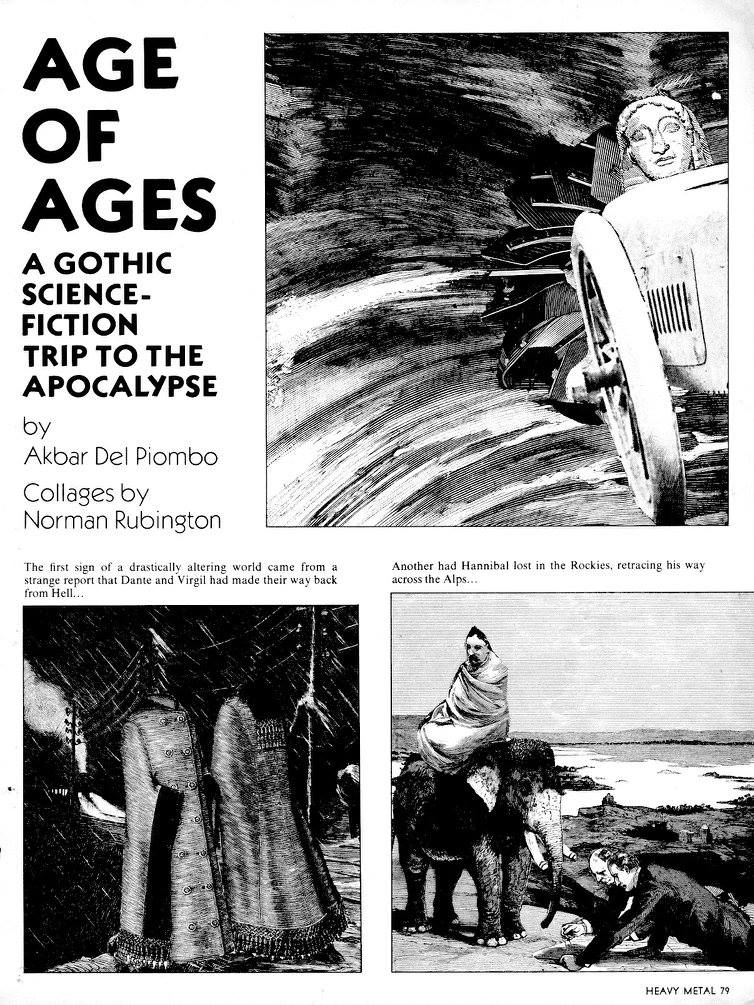

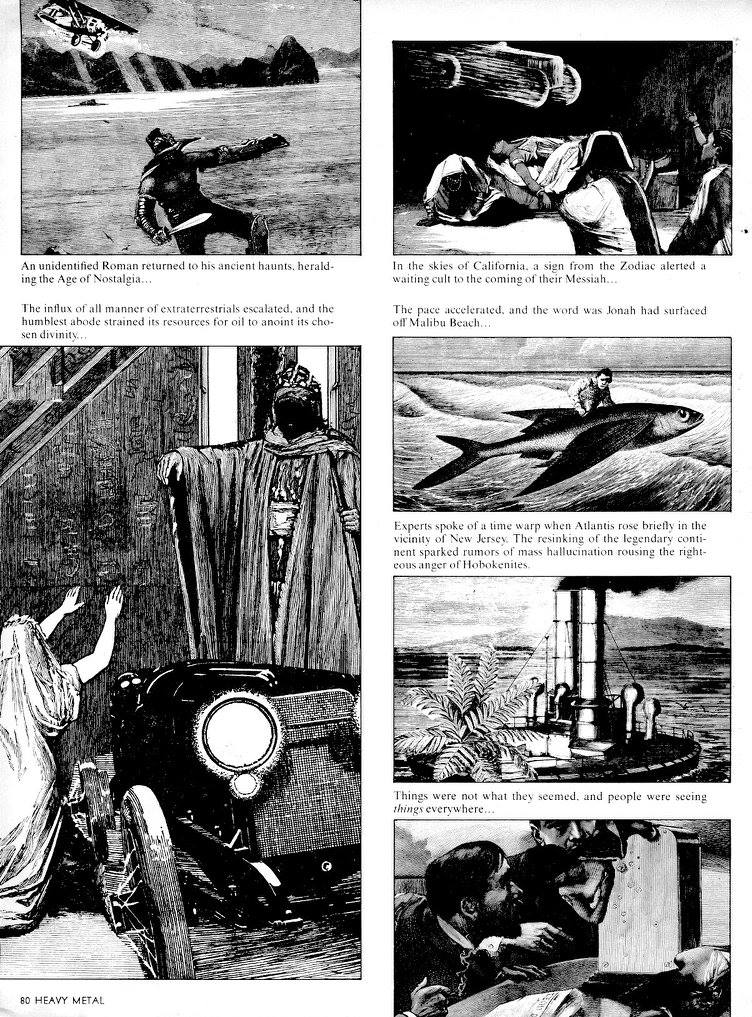

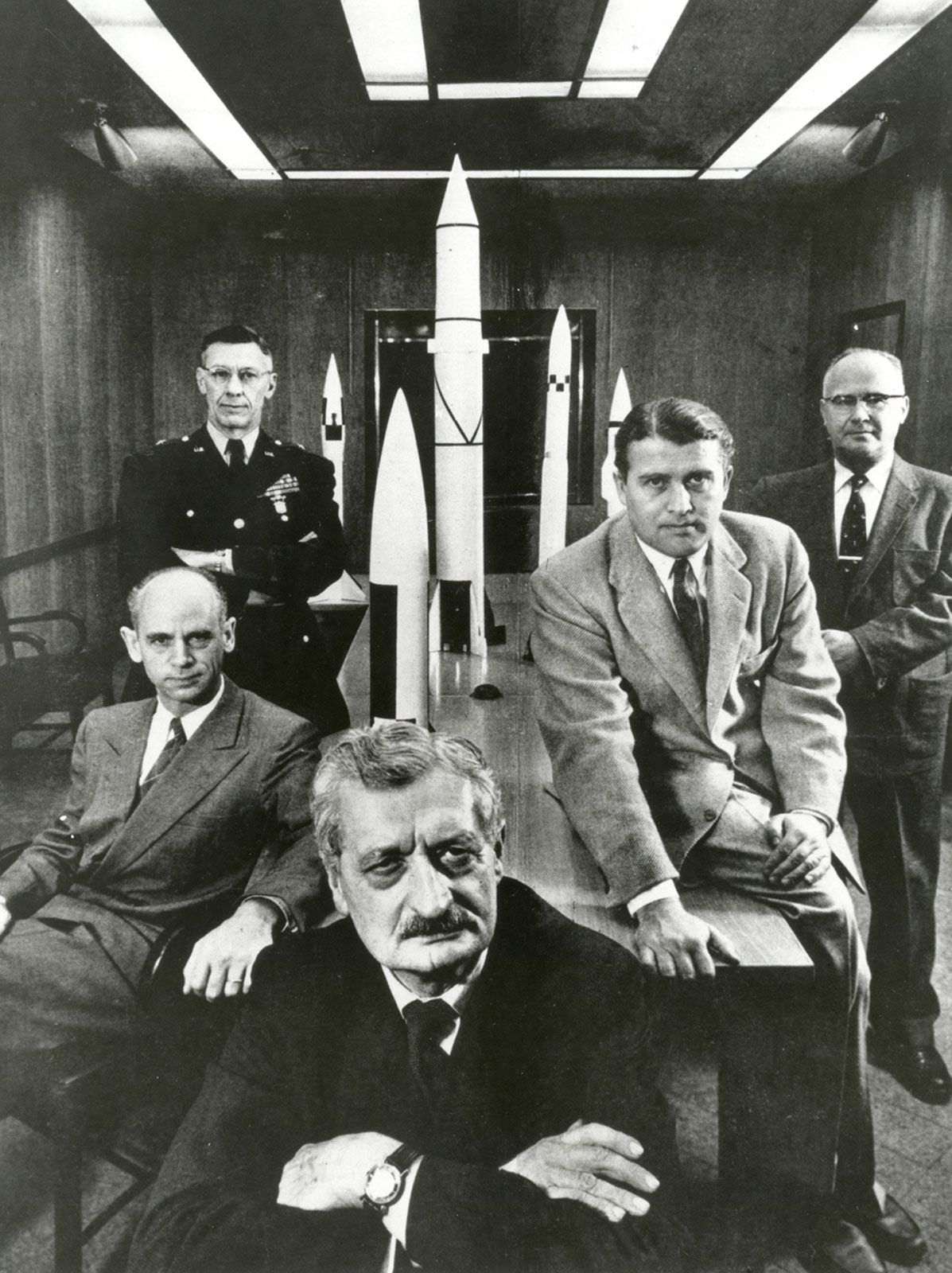

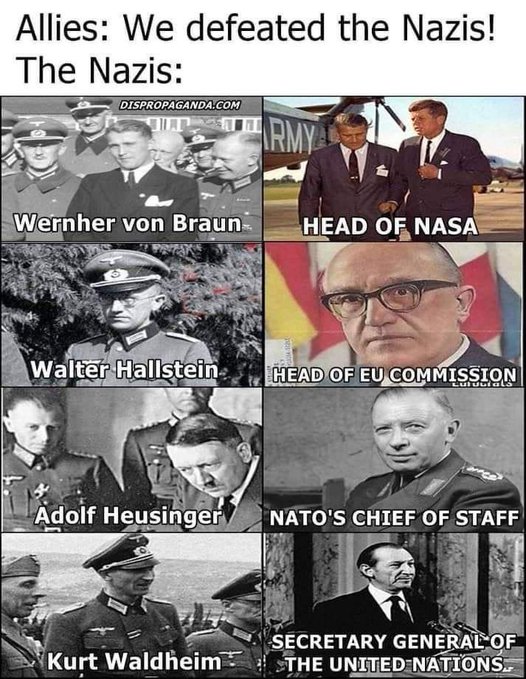

As our American friends finally embrace Roman salutes in public and reframe being a Nazi as 'cool,' let's take a moment to revisit the 100-year-old love affair between the Yankees, their German comrades, and the unseen hand guiding both. A contagious mental illness.

-

What new opportunities emerge from this confluence of trends?

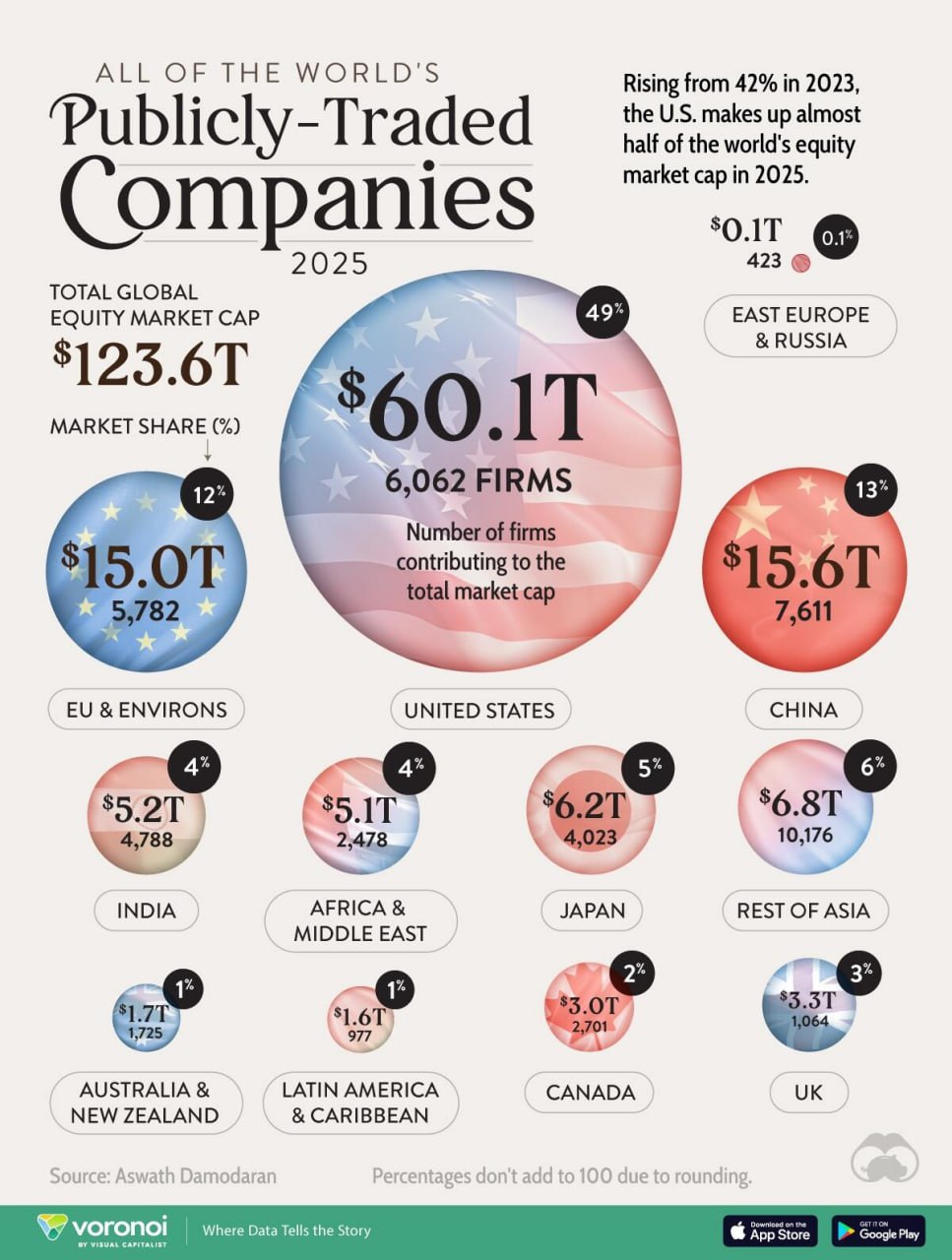

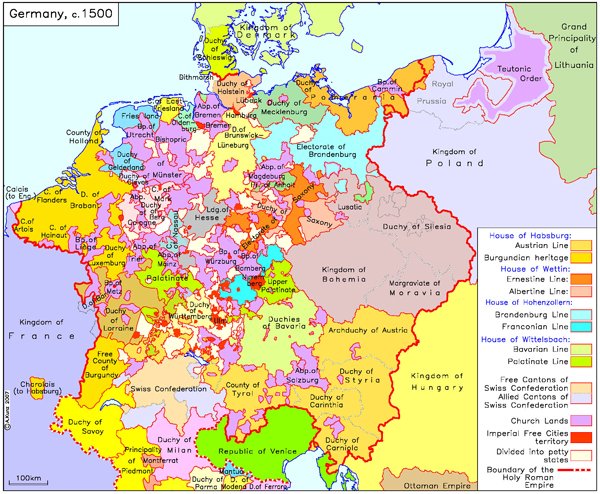

- The total failure of Europe's AI and robotics sectors

- China's meteoric rise in AI, robotics, and open-source leadership

- The USA's shift to anti-European, protectionist, vulgar policies

-

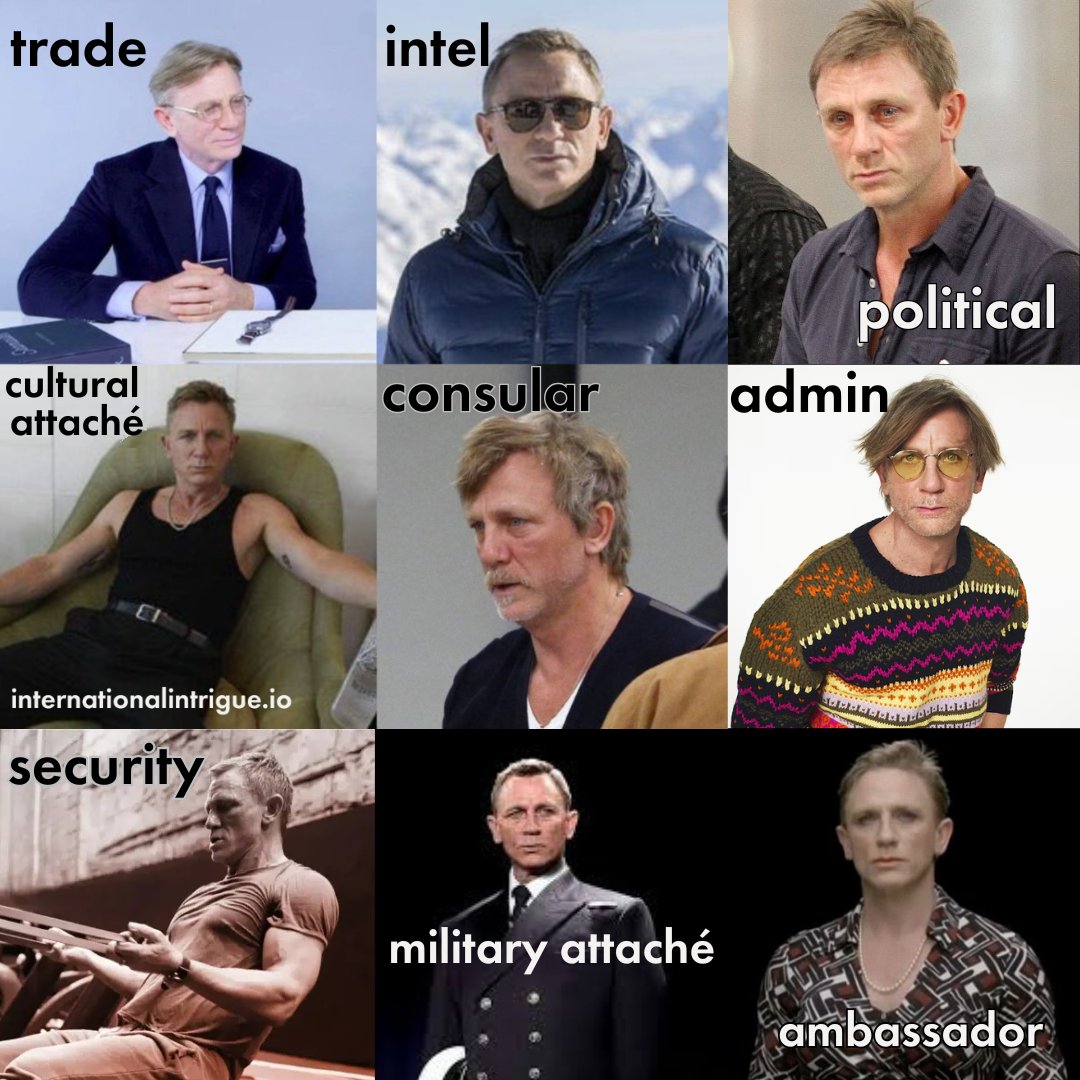

DOD DIRECTIVE 3000.09 - AUTONOMY IN WEAPON SYSTEMS

This document explains how the Department of Defense (DoD) reviews and approves new autonomous weapon systems (such as drones or robotic systems that can choose and engage targets on their own). Here's a breakdown in plain language:

1. Who Must Approve These Systems:

- Any autonomous weapon system that does not fall under one of the directive's exempted categories must get a high-level "senior review" before formal development begins and again before it is fielded.

- Top DoD officials must give the green light at these stages: specifically, the senior policy, research, and acquisition leaders, along with the Vice Chairman of the Joint Chiefs of Staff.

2. What the Review Looks For (Before Development):

- Human Oversight: The system must allow commanders or operators to keep control and step in if needed.

- Controlled Operations: It should work within expected time frames, geographic areas, and operational limits. If it can't safely operate within these limits, it should stop its actions or ask for human input.

- Risk Management: The design must account for the possibility of mistakes or unintended targets (collateral damage) and have safety measures in place.

- Reliability and Security: The system needs robust safety features, strong cybersecurity, and methods to fix any unexpected behavior quickly.

- Ethical Use of AI: If the system uses artificial intelligence, it must follow the DoD's ethical guidelines for AI.

- Legal Review: A preliminary legal check must be completed to ensure that using the system complies with relevant laws and policies.

3. What the Review Looks For (Before Fielding/Deployment):

- Operational Readiness: It must be demonstrated that the system works as intended and that the people using it (operators and commanders) understand how it functions and can control it appropriately.

- Safety and Security Checks: The system must demonstrate that its safety measures, anti-tamper features, and cybersecurity defenses are effective.

- Training and Procedures: There must be clear training and protocols for operators so they can manage and, if necessary, disable the system safely.

- Ongoing Testing: Plans must be in place to continually test and verify that the system performs reliably, even under realistic and possibly adversarial conditions.

- Final Legal Clearance: A final legal review ensures that the system's use is in line with the law of war and other applicable rules.

4. Exceptions and Urgent Needs:

- In cases where there is an urgent military need, a waiver can be requested to bypass some of these review steps temporarily.

5. Guidance Tools and Support:

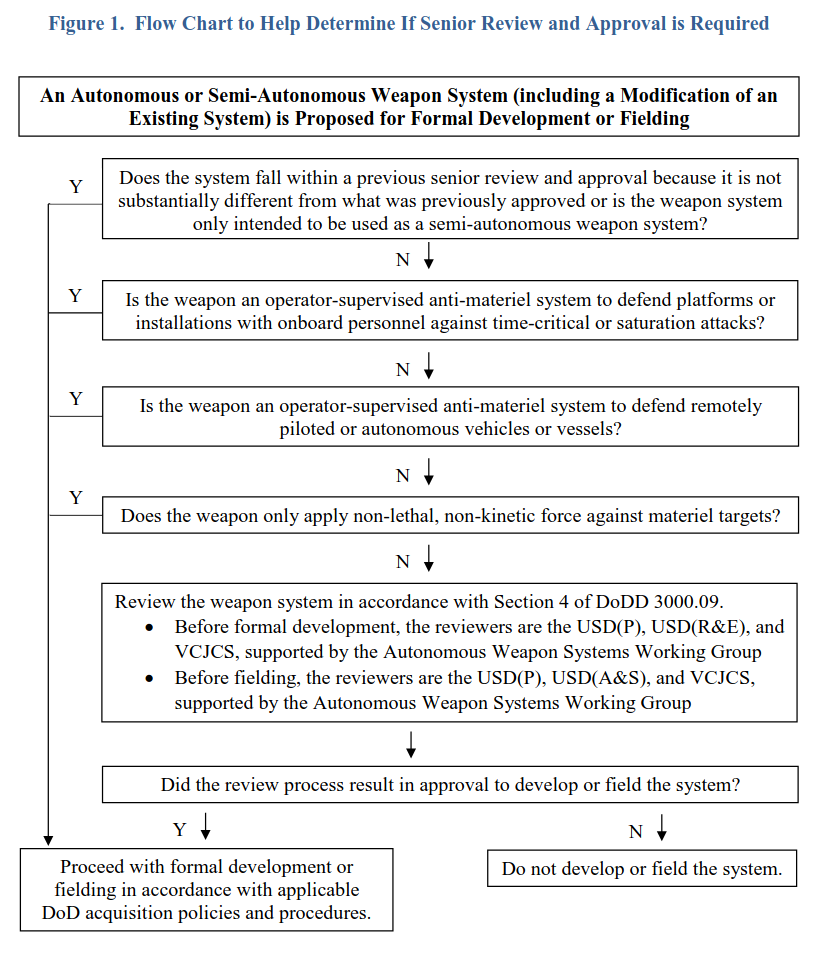

- A flowchart in the document helps decide if a particular weapon system needs this detailed senior review.

- A specialized working group, made up of experts from different areas (like policy, research, legal, cybersecurity, and testing), is available to advise on these reviews and to help identify and resolve potential issues.

6. Additional Information:

- The document includes a glossary that explains key terms and acronyms (like AI, CJCS, and USD) so that everyone understands the language used.

- It also lists other related DoD directives and instructions that support or relate to this process.

In summary, the document sets up a careful, multi-step review process to ensure that any new autonomous weapon system is safe, reliable, subject to appropriate levels of human judgment over the use of force, legally sound, and ethically managed, both before it is developed and again before it is deployed.