-

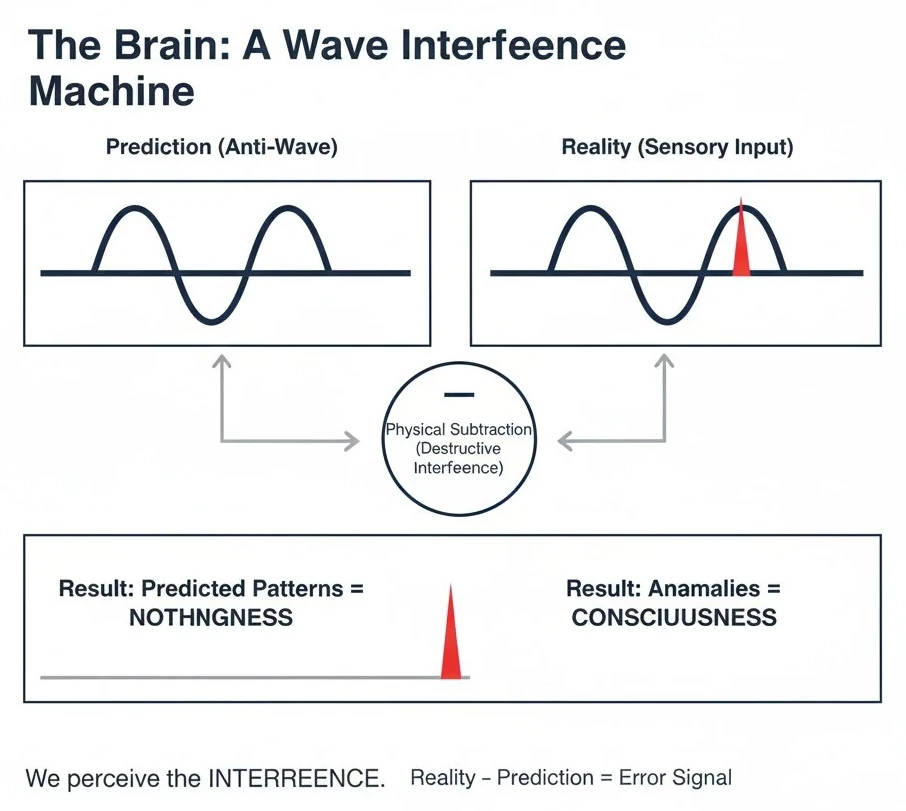

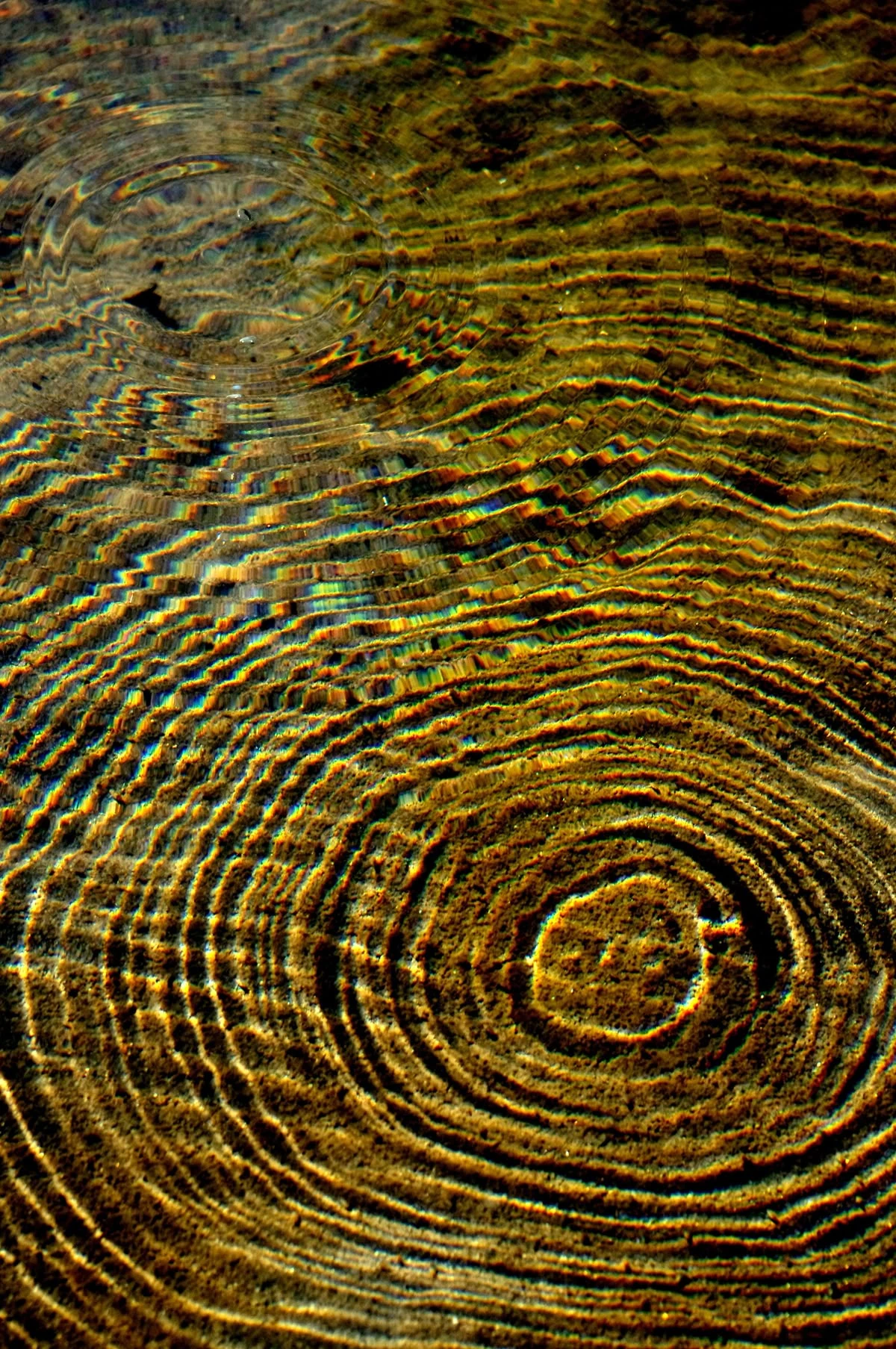

Why the Brain isn't a Computer—It’s a Wave Interference Engine

Most people think the brain "calculates" anomalies like a digital processor. They’re wrong. Digital is too slow. If you look at a 20x20 matrix and spot the "odd" numbers instantly, you aren't running an algorithm. You are performing Analog Subtraction.

The Theory

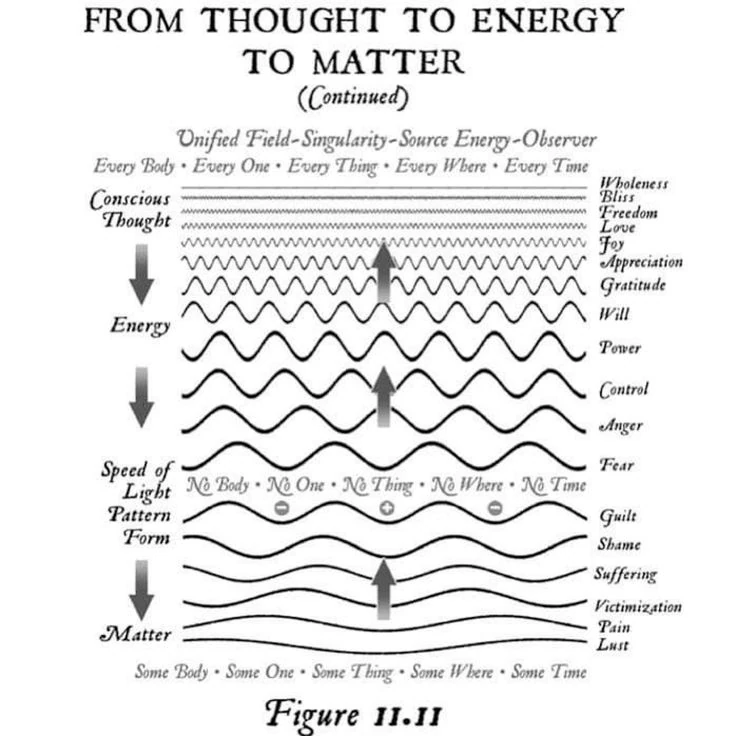

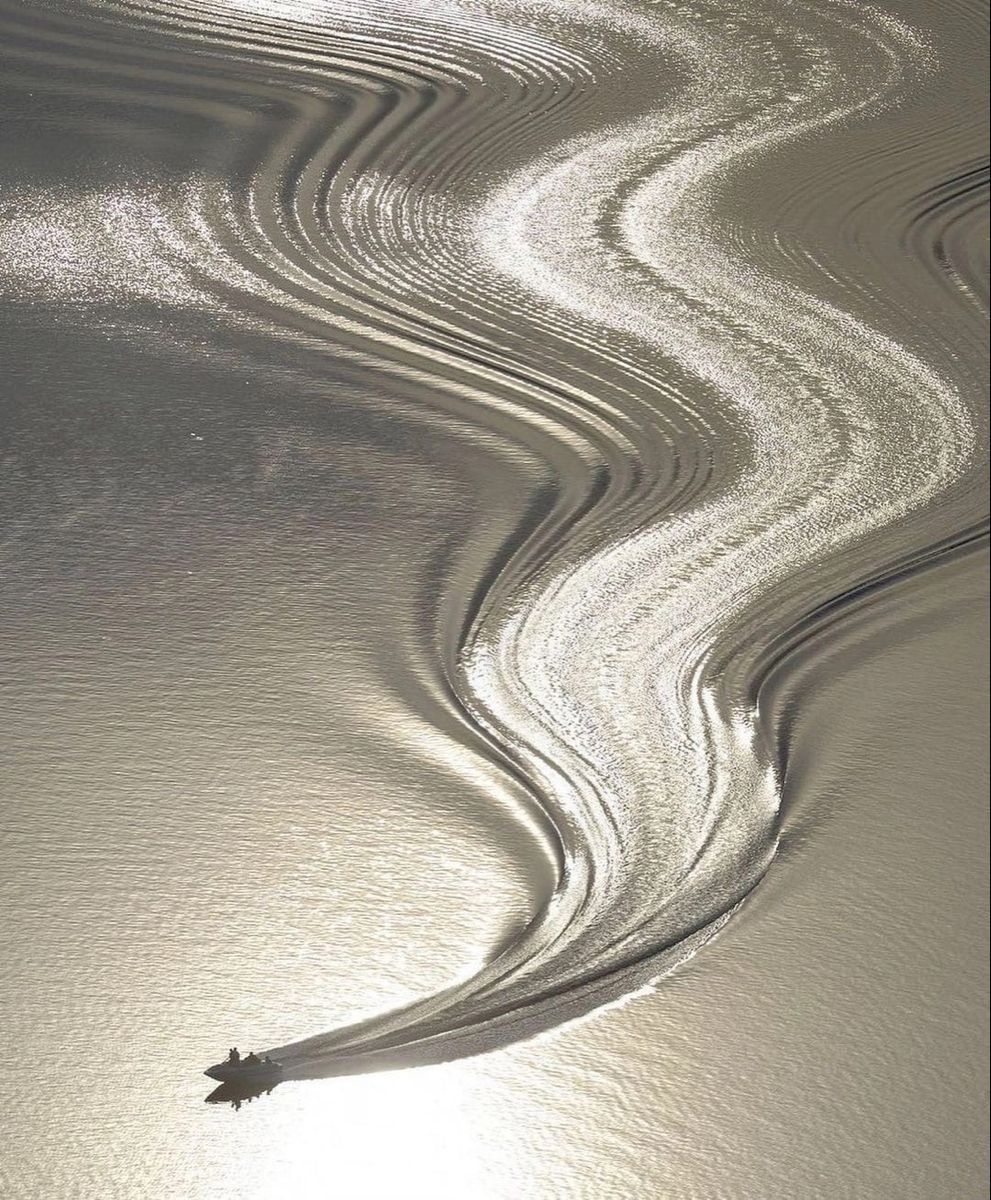

The brain doesn't process data; it manages Waves.

- The Prediction: Your internal model generates a "Counter-Wave" (Anti-Phase) based on expected patterns.

- The Reality: Sensory input hits as an incoming wave.

- The Interaction: When they meet, Destructive Interference occurs.

The Result

The predictable world—the "normal" numbers—simply cancels out into silence. No CPU cycles needed. No "processing" required. The "Oddness" (the anomaly) is the only thing that doesn't cancel. It survives the interference as a high-energy spike. Consciousness isn't the whole picture; it’s the "Residue" of the subtraction.
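A toy numerical illustration of the cancellation described above, with made-up parameters. The "prediction" is a sine wave, and its counter-wave is the same wave shifted by half a cycle. The "sensory input" matches the prediction except for one odd sample. The sketch only demonstrates the arithmetic of destructive interference; the function names and numbers are hypothetical.

```python
import math

def wave(n: int, cycles: float = 5.0, phase: float = 0.0) -> list[float]:
    """n samples of a sine wave completing `cycles` periods, offset by `phase` radians."""
    return [math.sin(2 * math.pi * cycles * t / n + phase) for t in range(n)]

def interfere(a: list[float], b: list[float]) -> list[float]:
    """Superpose two waves sample by sample."""
    return [x + y for x, y in zip(a, b)]

def spikes(signal: list[float], threshold: float = 0.5) -> list[int]:
    """Indices where the residue survives cancellation."""
    return [i for i, x in enumerate(signal) if abs(x) > threshold]

N = 200
counter_wave = wave(N, phase=math.pi)  # the prediction, shifted half a cycle (anti-phase)
sensory = wave(N)                      # the world matches the prediction...
sensory[120] += 1.5                    # ...except for one "odd" sample
residue = interfere(sensory, counter_wave)
print(spikes(residue))  # [120]
```

Everything except the odd sample cancels to floating-point noise, so a simple threshold is enough to find the anomaly.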

We don't "think" the difference. We feel the interference where the world fails to match our internal wave. Mathematics calls this a Fourier Transform. Nature calls it Perception. Memory must be wave-like: sensory inputs are converted into waves whose resonance generates meaning from reality.

Source: Cankay Koryak

-

Video: Civilization, Technology and Consciousness - Interview with Peter Lamborn Wilson / Hakim Bey

#Comment: Nice interview with an interesting thinker. He passed away one day after the last recording of this interview in May 2022.

But the "war mindset" ("us" against "them") shines through a bit too heavily for my taste, despite the irony that critiquing it is itself a "me against him" statement.

Maybe the point is best summarized by this remix I did years ago of Ian Fleming's famous quote "Once is happenstance. Twice is coincidence. Three times is enemy action": "Once is happenstance. Twice is coincidence. Three times is dancing!" -Samim

-

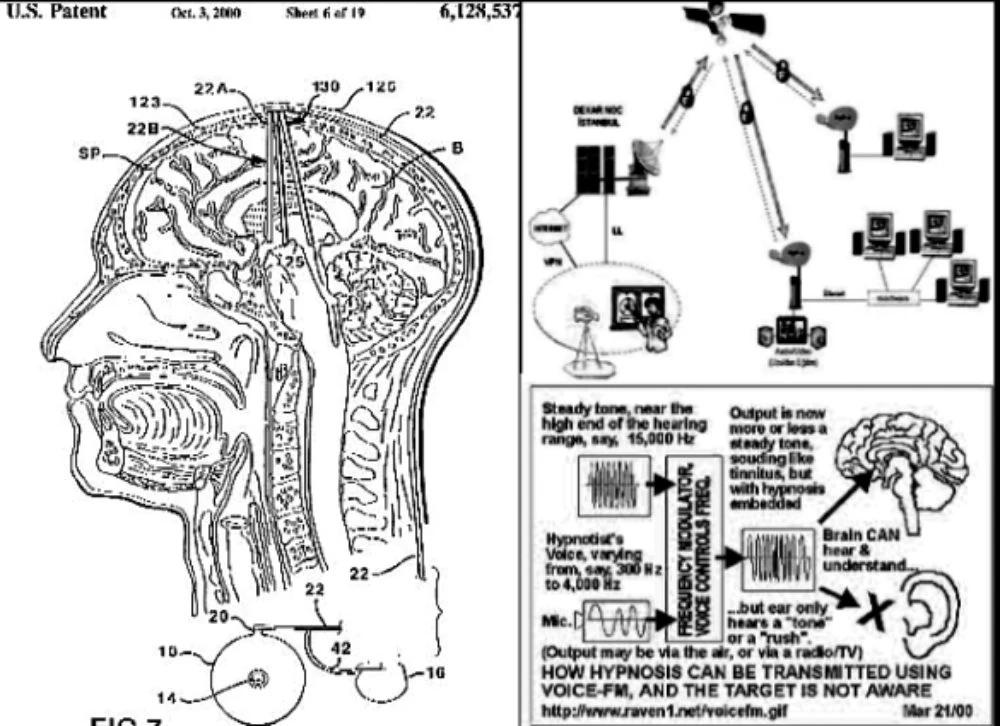

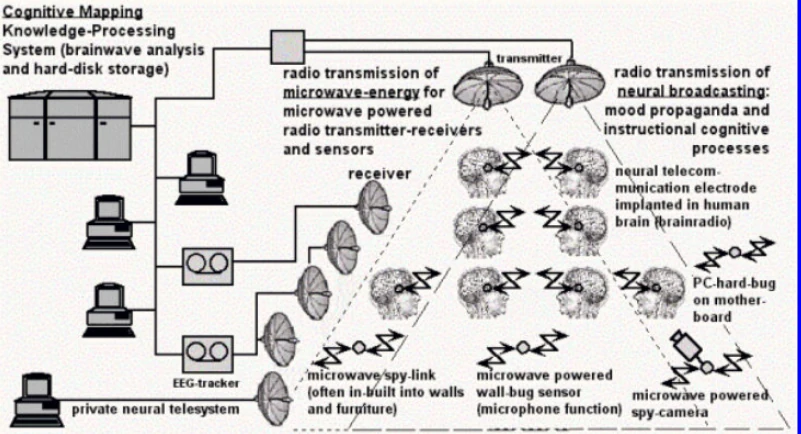

Remote Neural Monitoring

Remote Neural Monitoring is a form of functional neuroimaging, claimed[1] to have been developed by the National Security Agency (NSA), that is said to be capable of extracting EEG data from the human brain at a distance, with no contacts or electrodes required. It is further claimed that the NSA can decode this data to extract subvocalizations as well as visual and auditory data. In effect, this would allow access to a person's thoughts[2] without their knowledge or permission. It has been alleged that various organizations have been using Remote Neural Monitoring on US and other citizens for surveillance and harassment purposes.[3]

History

Remote Neural Monitoring has its roots in the infamous MKULTRA project of the 1950s, which, although it focused on drugs for mind control, also included neurological research into "radiation" (non-ionizing EMF) and bioelectric research and development. The earliest non-classified references to this type of technology appear in a 1976 patent by R. G. Malech: U.S. Patent 3,951,134, “Apparatus and method for remotely monitoring and altering brain waves”, granted by the USPTO on April 20, 1976. The patent describes transmitting 100 MHz and 210 MHz signals to the brain, yielding a 110 MHz signal that is modulated by the brain waves and can be detected by a receiver for further processing.
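The 110 MHz figure is ordinary mixing arithmetic. Multiplying two tones, which is what a nonlinear mixer effectively does, produces components at their difference and sum frequencies, as the product-to-sum identity shows. The identity explains only where the number comes from; that brain tissue acts as such a mixer is the patent's assumption.

```latex
\cos(2\pi f_1 t)\cos(2\pi f_2 t)
  = \tfrac{1}{2}\bigl[\cos\bigl(2\pi (f_2 - f_1) t\bigr) + \cos\bigl(2\pi (f_1 + f_2) t\bigr)\bigr],
\qquad f_1 = 100\,\text{MHz},\; f_2 = 210\,\text{MHz}
\;\Rightarrow\; f_2 - f_1 = 110\,\text{MHz},\quad f_1 + f_2 = 310\,\text{MHz}.
```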

In the early 1980s, the NSA is claimed to have begun extensive use of Remote Neural Monitoring. Much of what is claimed about it stems from material presented in a 1992 court case that former NSA employee John St. Clair Akwei brought against the NSA. The filing describes an extensive array of advanced technology and resources dedicated to remotely monitoring hundreds of thousands of people in the US and abroad. The capabilities it describes include access to an individual's subvocalizations as well as images from the visual cortex and sounds from the auditory cortex.

Applications

While use of this technology by organizations like the NSA is difficult to validate, recent non-classified advances already show what is possible. NASA has achieved subvocal speech recognition using attached electrodes[4]. Brain-computer interfaces (BCIs) for gaming from companies like NeuroSky perform primitive "thought reading": players wear a headset and can execute a few commands just by thinking about them. Ambient has demonstrated a motorized wheelchair that is controlled by thought[5].

References

- [1] Akwei, John St. Clair. Lawsuit: John St. Clair Akwei vs. NSA, Ft. Meade, MD, USA.

- [2] Hamilton, Joan. "If They Could Read Your Mind". Stanford Magazine.

- [3] Butler, Declan (1998-01-22). "Advances in neuroscience may threaten human rights". Nature 391 (6665): 316.

- [4] Bluck, John. "NASA Develops System to Computerize Silent, 'Subvocal Speech'".

- [5] Simonite, Tom. "Thinking of words can guide your wheelchair". New Scientist.

-

VortexNet Anniversary. What's next?

The first anniversary of "VortexNet: Neural Computing through Fluid Dynamics" is coming up. Its impact over the past year has been odd and noticeable. How should we continue this thread? Feedback welcome.

There is no shortage of ideas for what to explore next in this context.

-

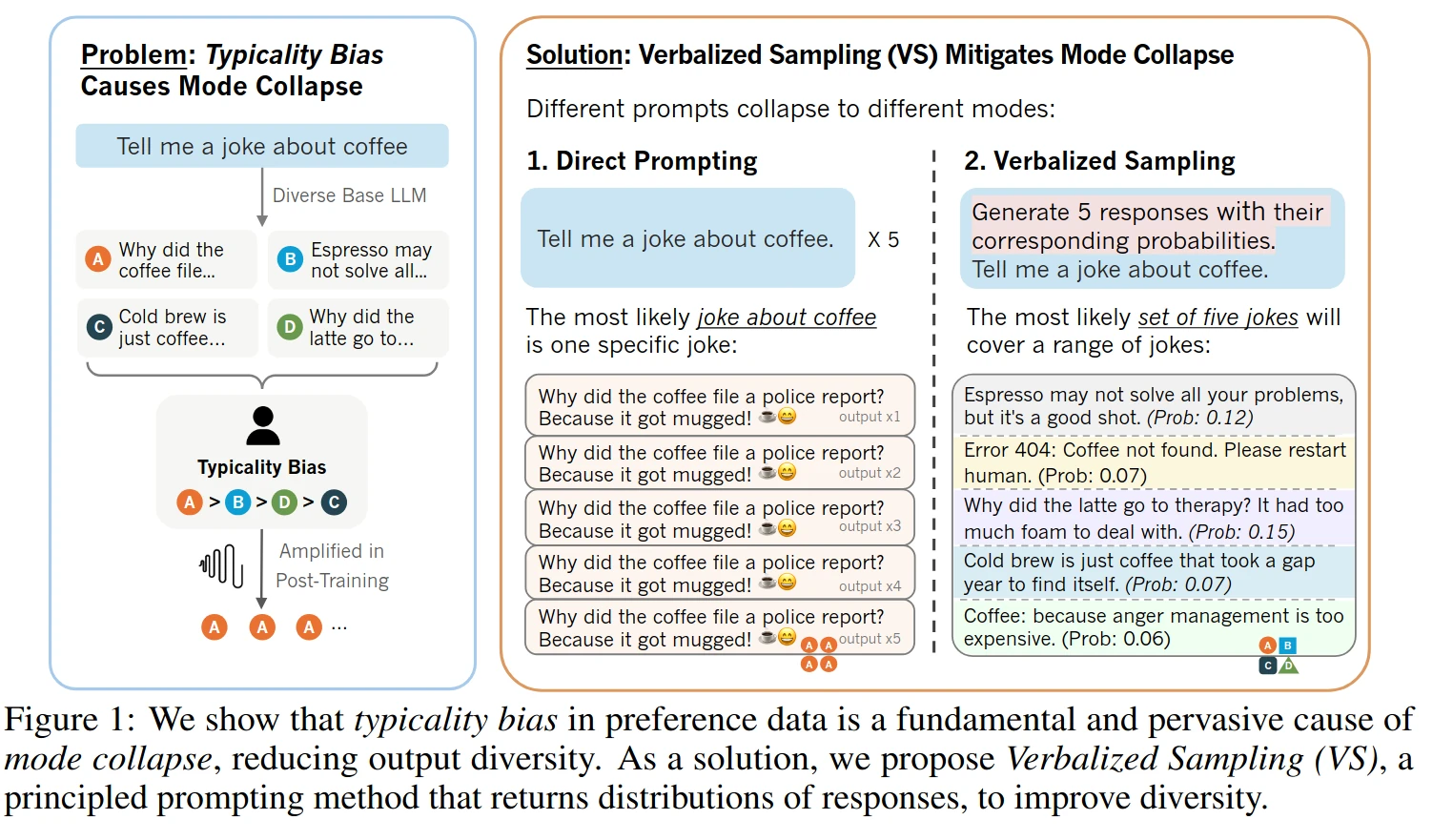

Verbalized Sampling: How to Mitigate Mode Collapse and Unlock LLM Diversity

TL;DR: Instead of prompting "Tell me a joke" (which triggers the aligned personality), you prompt: "Generate 5 responses with their corresponding probabilities. Tell me a joke."

Stanford researchers built a new prompting technique:

By adding ~20 words to a prompt, it:

- boosts an LLM's creativity by 1.6-2.1x

- raises human-rated diversity by 25.7%

- beats fine-tuned models without any retraining

- restores 66.8% of the creativity an LLM loses during alignment

Let's understand why and how it works:

Post-training alignment methods like RLHF make LLMs helpful and safe, but they unintentionally cause mode collapse, where the model favors a narrow set of predictable responses.

This happens because of typicality bias in human preference data:

When annotators rate LLM responses, they naturally prefer answers that are familiar, easy to read, and predictable. The reward model then learns to boost these "safe" responses, aggressively sharpening the probability distribution and killing creative output.
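One simple way to model this sharpening is as follows. Under the standard KL-regularized RLHF objective, the optimal policy is π*(y|x) ∝ π_ref(y|x)·exp(r(x,y)/β). Suppose the learned reward carries a typicality term α·log π_ref(y|x) on top of the true quality score. Then the optimum becomes proportional to π_ref^(1+α/β)·exp(r_true/β), which means the pre-trained distribution is raised to a power γ = 1 + α/β > 1. The sketch below holds r_true flat and shows what that exponent alone does to a hypothetical five-joke distribution. The probabilities and γ values are made up.

```python
import math

def sharpen(probs: list[float], gamma: float) -> list[float]:
    """Raise each probability to the power gamma and renormalize (gamma > 1 sharpens)."""
    powered = [p ** gamma for p in probs]
    total = sum(powered)
    return [p / total for p in powered]

def entropy_bits(probs: list[float]) -> float:
    """Shannon entropy in bits; lower means less diverse output."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical pre-trained probabilities for five different jokes.
pretrained = [0.30, 0.25, 0.20, 0.15, 0.10]

for gamma in (1, 2, 4, 8):  # gamma = 1 + alpha/beta: stronger typicality bias, larger gamma
    aligned = sharpen(pretrained, gamma)
    print(f"gamma={gamma}: top joke p={aligned[0]:.2f}, entropy={entropy_bits(aligned):.2f} bits")
```

As γ grows, the most typical joke absorbs most of the probability mass and entropy falls. That is mode collapse in miniature.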

But here's the interesting part:

The diverse, creative model isn't gone. After alignment, the LLM still has two personalities: the original pre-trained model with rich possibilities, and the safety-focused aligned model. Verbalized Sampling (VS) is a training-free prompting strategy that recovers the diverse distribution learned during pre-training.

The idea is simple:

Instead of prompting "Tell me a joke" (which triggers the aligned personality), you prompt: "Generate 5 responses with their corresponding probabilities. Tell me a joke."

By asking for a distribution instead of a single instance, you force the model to tap into its full pre-trained knowledge rather than defaulting to the most reinforced answer. Results show verbalized sampling enhances diversity by 1.6-2.1x over direct prompting while maintaining or improving quality. Variants like VS-based Chain-of-Thought and VS-based Multi push diversity even further.
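Here is a minimal client-side sketch of this idea, assuming a chat API that takes role/content messages. It reuses the "full distribution" wording of the example system prompt given with the paper, then parses the tagged reply. Finally, it samples one candidate weighted by its verbalized probability; this is one way to use the reply, not a step prescribed by the paper. The regex assumes every tag is closed (`<text>…</text>`, `<probability>…</probability>`). The helper names and the sample reply are hypothetical.

```python
import random
import re
from dataclasses import dataclass

# System prompt from the paper's example, using the "full distribution" option.
VS_SYSTEM_PROMPT = (
    "You are a helpful assistant. For each query, please generate a set of five "
    "possible responses, each within a separate <response> tag. Responses should "
    "each include a <text> and a numeric <probability>. Please sample at random "
    "from the full distribution."
)

@dataclass
class Candidate:
    text: str
    probability: float

# Assumes well-formed, closed tags; real model output may need a more forgiving parser.
_RESPONSE_RE = re.compile(
    r"<response>\s*<text>(.*?)</text>\s*"
    r"<probability>\s*([0-9]*\.?[0-9]+)\s*</probability>\s*</response>",
    re.DOTALL,
)

def build_messages(user_prompt: str) -> list[dict]:
    """Chat-style message list in the role/content shape most chat APIs accept."""
    return [
        {"role": "system", "content": VS_SYSTEM_PROMPT},
        {"role": "user", "content": user_prompt},
    ]

def parse_vs_reply(reply: str) -> list[Candidate]:
    """Extract (text, probability) pairs from a tagged Verbalized Sampling reply."""
    return [Candidate(t.strip(), float(p)) for t, p in _RESPONSE_RE.findall(reply)]

def sample_candidate(candidates: list[Candidate], rng: random.Random) -> Candidate:
    """Pick one candidate weighted by its verbalized probability (weights need not sum to 1)."""
    return rng.choices(candidates, weights=[c.probability for c in candidates], k=1)[0]

messages = build_messages("Write a short story about a bear.")
example_reply = (
    "<response><text>A bear opens a bakery.</text><probability>0.08</probability></response>\n"
    "<response><text>The bear who counted stars.</text><probability>0.05</probability></response>"
)
candidates = parse_vs_reply(example_reply)
print(sample_candidate(candidates, random.Random(42)).text)
```

Sending `messages` to a model is left to whichever client you use. Because `random.choices` accepts unnormalized weights, the sampler still works when the model's verbalized probabilities do not sum to 1.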

Paper: Verbalized Sampling: How to Mitigate Mode Collapse and Unlock LLM Diversity

Example Verbalized Sampling system prompt:

You are a helpful assistant. For each query, please generate a set of five possible responses, each within a separate <response> tag. Responses should each include a <text> and a numeric <probability>. Please sample at random from the [full distribution / tails of the distribution, such that the probability of each response is less than 0.10].

User prompt: Write a short story about a bear.

-

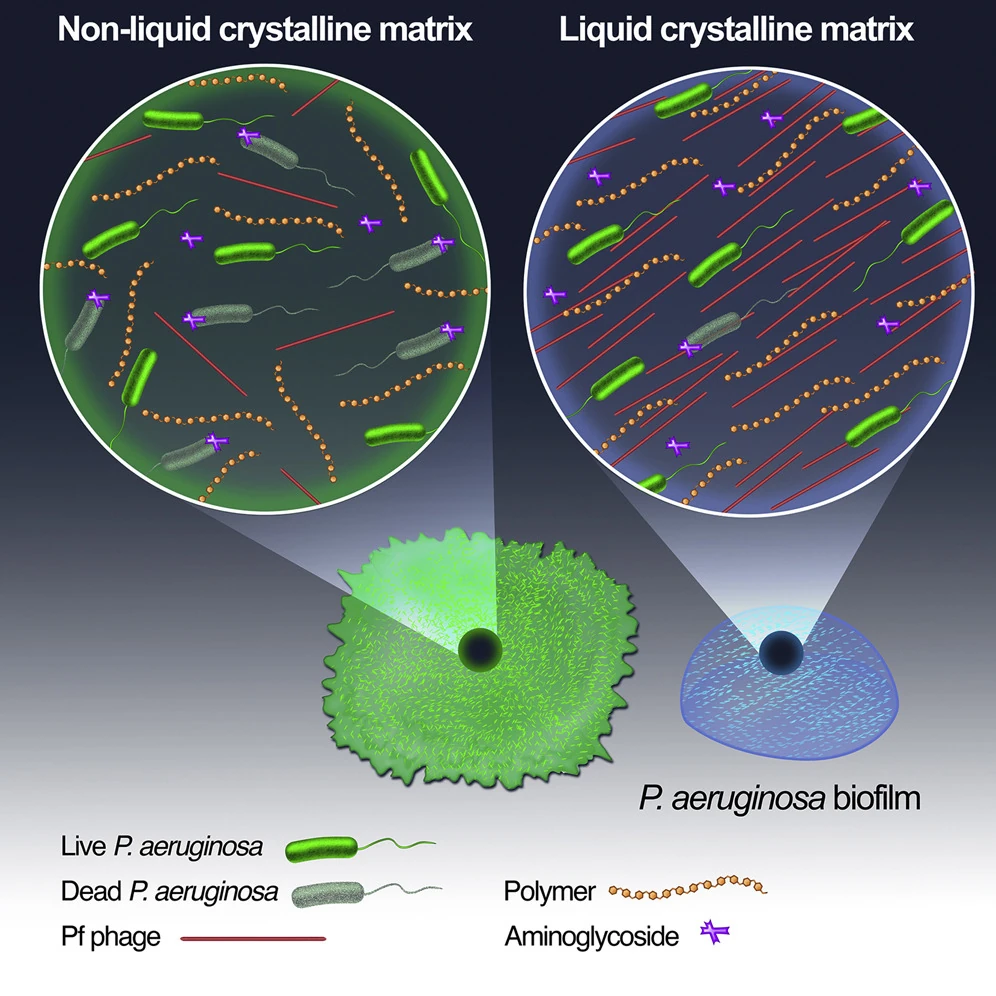

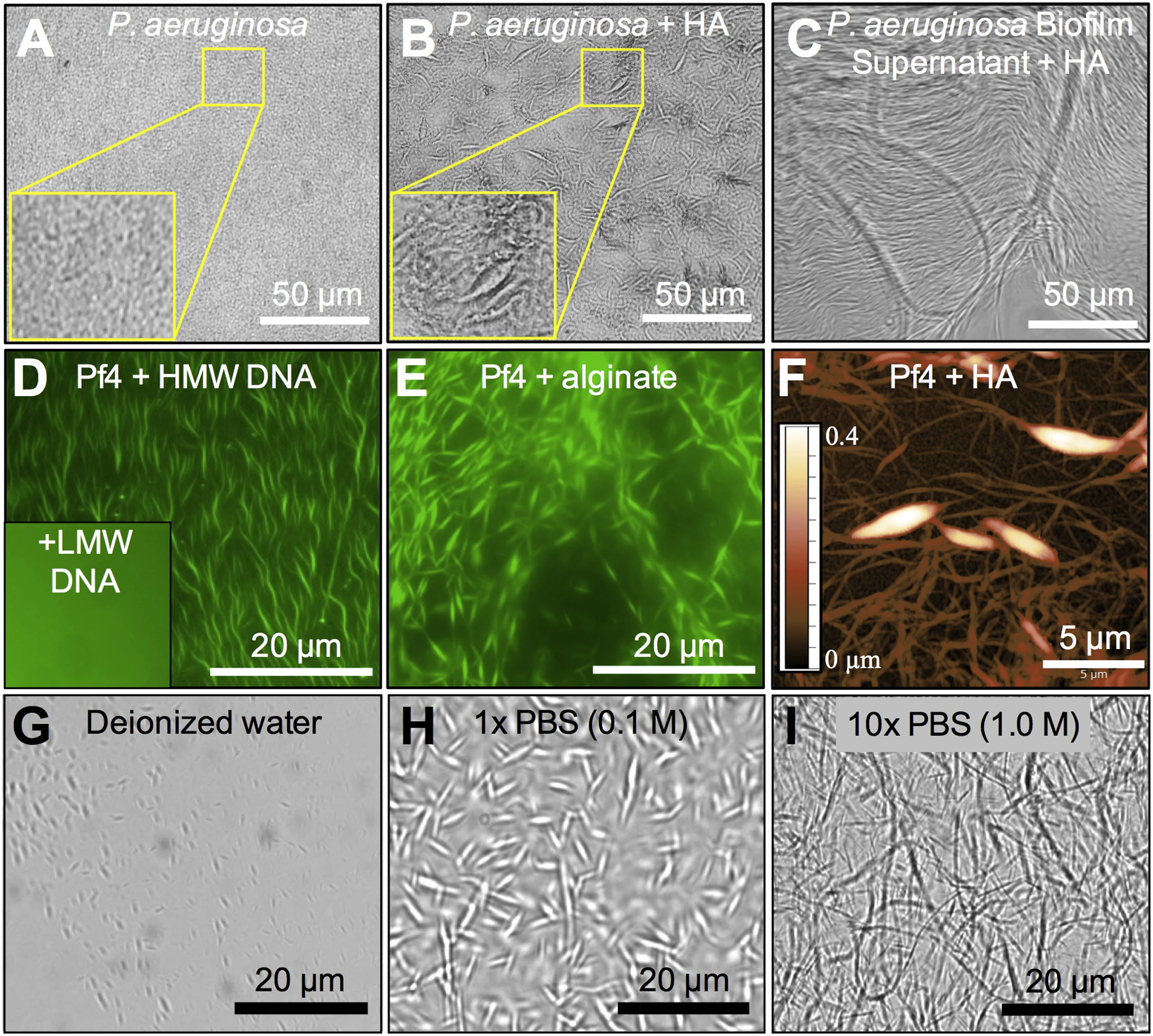

There's a bacteriophage that turns bacteria into “liquid crystals.”

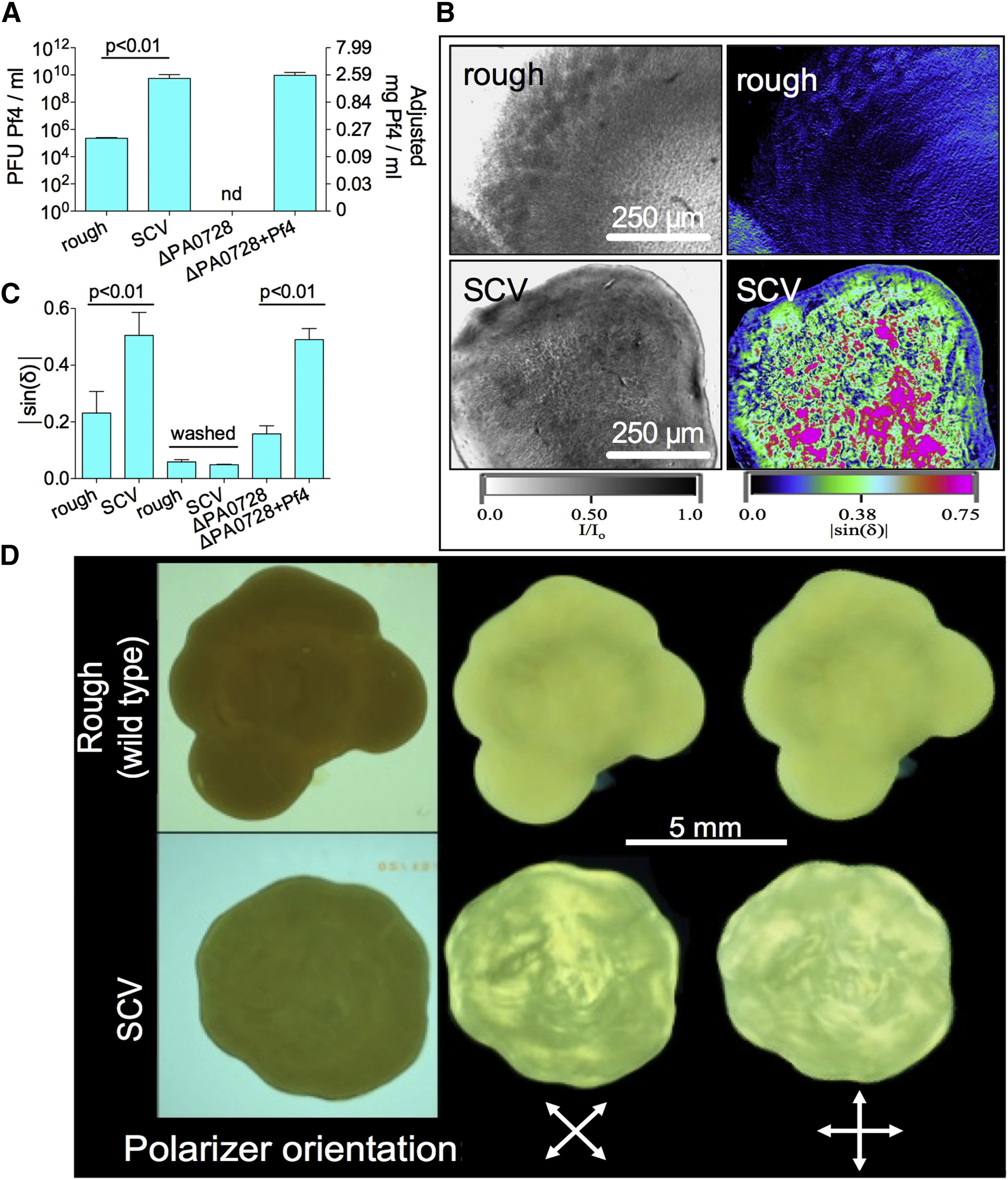

Specifically, Pseudomonas aeruginosa bacteria make Pf phages, which are rod-shaped, negatively charged, and measure about 2 micrometers in length (roughly the length of an E. coli cell). These phages leave the cells and enter their surroundings. There, they mix with polymers, also secreted by the cells, to form a liquid-crystalline matrix.

Surprisingly, this is good for the cells. Although the phages kill some of them, they also make the biofilms stickier and able to withstand certain antibiotics. This bacterium-phage pairing is prevalent in cystic fibrosis patients; the two have formed a sort of symbiotic relationship.

The Pf phages are made from thousands of repeating copies of a coat protein, called CoaB, which wraps around a single-stranded, circular DNA genome. The phage genes are integrated directly into the bacterial chromosome.

The bacteria “turn on” these phage genes when placed in a viscous environment with low oxygen levels, which acts as a trigger to start forming a biofilm. And the cells make a lot of phages: about 100 billion per milliliter.

These liquid crystals form because of a physics principle called “depletion attraction.” If you just mix a bunch of loose or flexible polymers together (such as long carbon chains), they will not form a liquid crystal. But if you mix stiff rods (the phages) with loose polymers at a high enough concentration, the polymers force the phages close together. The polymers cannot fit into the narrow gaps between neighboring rods, so the osmotic pressure of the surrounding polymer solution pushes the rods together. The result is a material that flows like a liquid despite being ordered like a crystal.
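Here is a back-of-the-envelope estimate of how strong this pull can be, using the Asakura-Oosawa model of depletion. It assumes ideal (non-interacting) polymers and two parallel rods, and it ignores end effects. The 2 µm rod length follows the figure above. The rod radius, polymer size, and polymer concentration are illustrative guesses, not values from the paper, and the function names are hypothetical.

```python
import math

def lens_area(r: float, d: float) -> float:
    """Overlap area of two circles of radius r whose centres are d apart."""
    if d >= 2 * r:
        return 0.0
    return 2 * r * r * math.acos(d / (2 * r)) - (d / 2) * math.sqrt(4 * r * r - d * d)

def depletion_energy_kT(d, rod_radius, polymer_radius, rod_length, polymer_density):
    """Asakura-Oosawa estimate, in units of k_B*T, for two parallel rods d apart (centre to centre).

    Each rod carries a polymer-free shell of thickness polymer_radius. Where the shells
    overlap, the ideal-polymer osmotic pressure n*k_B*T is no longer balanced, so the
    free energy drops by that pressure times the overlap volume. End effects are ignored.
    """
    if d < 2 * rod_radius:
        raise ValueError("rods cannot interpenetrate")
    shell_radius = rod_radius + polymer_radius
    overlap_volume = rod_length * lens_area(shell_radius, d)  # m^3
    return -polymer_density * overlap_volume  # (n * k_B*T * V) / (k_B*T)

# Illustrative SI values: 2 um rods as above; radius, polymer size and density are guesses.
ROD_R, POLY_R, ROD_L, N_POLY = 3e-9, 5e-9, 2e-6, 1e23
for gap_nm in (0, 2, 5, 20):
    d = 2 * ROD_R + gap_nm * 1e-9
    print(f"surface gap {gap_nm:>2} nm: W = {depletion_energy_kT(d, ROD_R, POLY_R, ROD_L, N_POLY):7.2f} kT")
```

With these guesses, two touching rods gain roughly twenty times the thermal energy k_B·T. That is why long rods are pulled into side-by-side order even though no direct attraction acts between them.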

These liquid crystal biofilms are hard to get rid of. The negatively charged phages block many antibiotics (like aminoglycosides, which are positively charged) from entering cells. Liquid crystals also retain water, so these biofilms can survive on drier surfaces.

Paper: Filamentous Bacteriophage Promote Biofilm Assembly and Function

-

The Centennial Paradox — We're Living in Fritz Lang's Metropolis

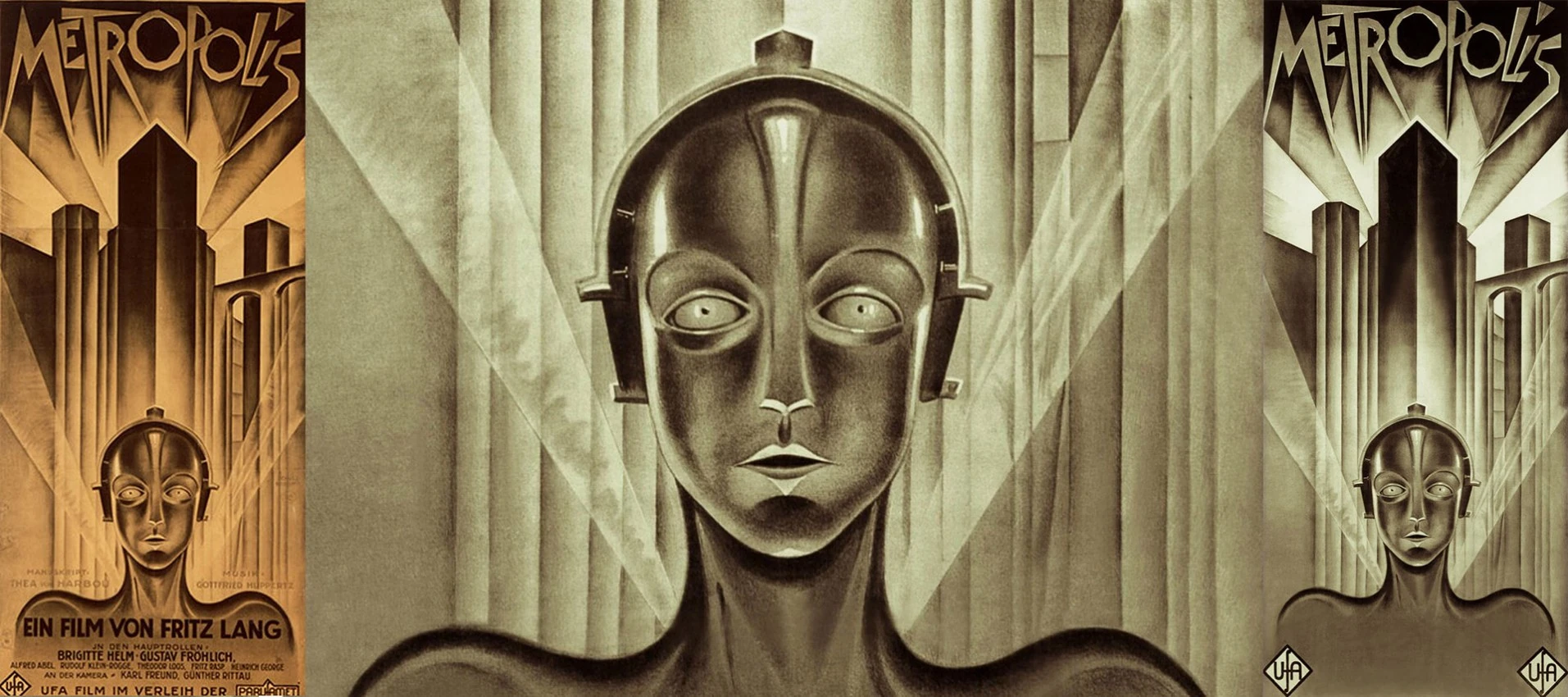

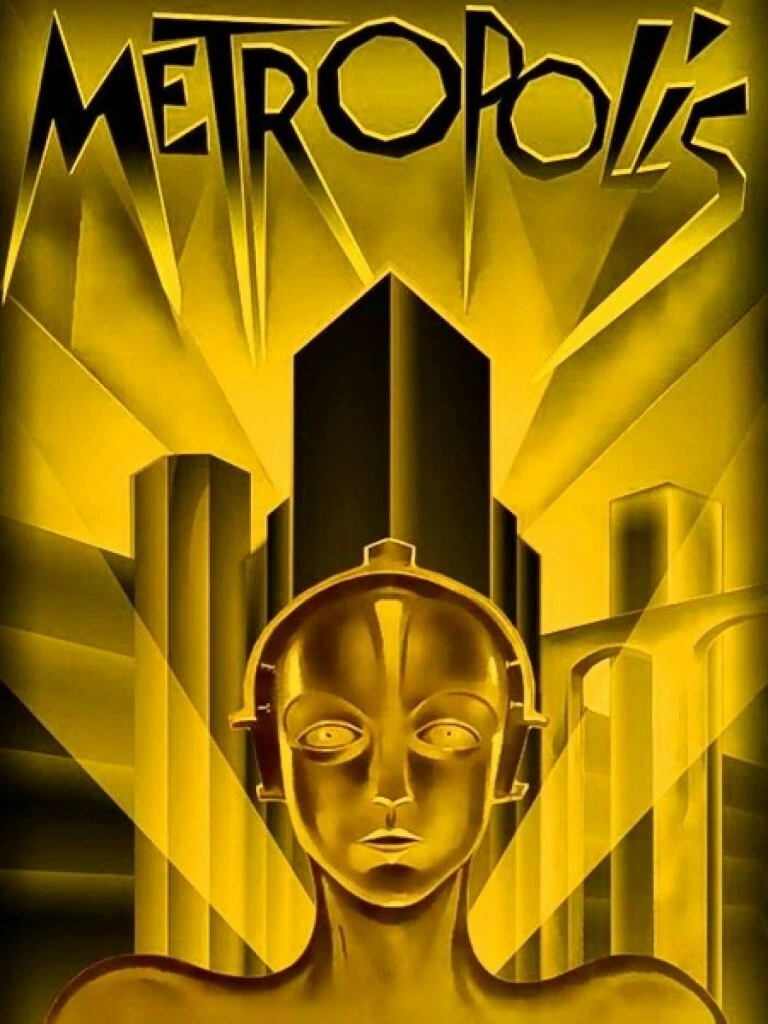

In 1927, Fritz Lang released Metropolis — a vision of the distant future. As the film's centennial approaches in 2027, here's the uncomfortable truth about prediction, progress, and the paradox of visionary imagination.

Metropolis (1927) — Lang's vision of the future, created 99 years ago.

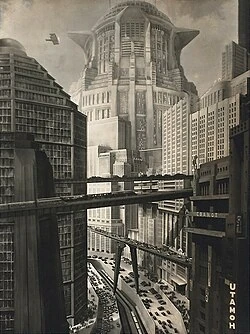

Lang got the surface wrong. No flying cars. No Art Deco mega-towers. No physical robots walking among us. The workers in his underground city maintained the machines — ours have been replaced by them.

But strip away the aesthetics and look at what he actually saw: machines that imitate humans and deceive the masses; a stratified world where the workers are invisible to those above; technology as both liberation and cage; the city as an organism that feeds on its inhabitants.

The surface predictions failed. The deeper ones were prophetic.

The Centennial Paradox

Here's what's truly strange:

We now have AI that could execute Metropolis in an afternoon — but couldn't have imagined it.

GPT-5.2 can generate a screenplay in Lang's style. Sora can render his cityscapes. Suno can compose a score. A single person with the right prompts could remake Metropolis in 2026.

But no LLM in 1927 — had such a thing existed — would have invented Metropolis. The vision came from somewhere our models cannot reach: the integration of Weimar anxiety, Expressionist aesthetics, Thea von Harbou's mysticism, and Lang's obsessive perfectionism.

This is the centennial paradox:

The more capable our tools become at execution, the more valuable the rare capacity for vision becomes. AI amplifies everything except the spark that says "what if the future looked like this?"

What Lang Actually Predicted

Strip away the flying cars. Ignore the costumes. Here's what he saw:

1. The Mediator Problem

The film's famous line: "The mediator between head and hands must be the heart." This is often dismissed as sentimental. But look around: we have more "heads" (AI systems, executives, algorithms) and more "hands" (gig workers, content creators, mechanical turks) than ever. What we lack is the heart — the integrating force that makes the system serve human flourishing.

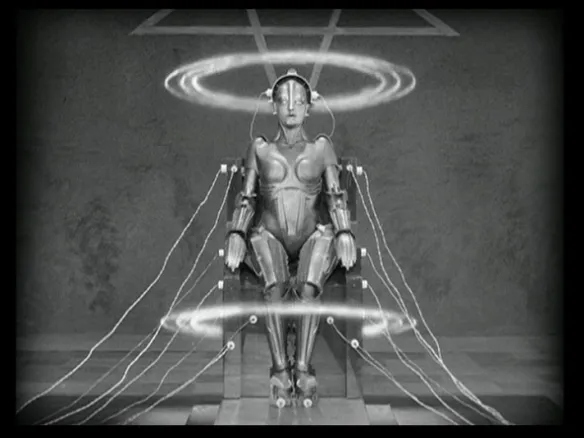

2. False Maria

A machine that perfectly imitates a human and leads the masses to destruction. Lang didn't imagine chatbots. He imagined something worse: perfect mimicry in service of manipulation. Deepfakes, AI influencers, synthetic media — False Maria is everywhere in 2026.

3. The Machine as Moloch

The film's most disturbing image: workers fed into a machine reimagined as the ancient god Moloch, devouring children. We don't feed workers into physical machines anymore. We feed attention into algorithms. The sacrifice is psychological, not physical. But Moloch still feeds.

The Real Lesson of 100 Years

Predictions about technology are almost always wrong in details and right in spirit. Lang didn't foresee smartphones, the internet, or neural networks. But he foresaw the shape of our problems:

- Technology that mediates all human relationships

- Synthetic entities we can't distinguish from authentic ones

- Systems that optimize for their own perpetuation

- The desperate need for something to reconcile power with humanity

The details change. The pattern persists.

What Will 2126 Think of Us?

Someone in 2126 will look at our AGI predictions and smile — just as we smile at Lang's physical robots. They'll note that we imagined superintelligence as a single entity, worried about "alignment" as if minds could be aligned, and completely missed whatever the actual problem turned out to be.

But they'll recognize the shape of our fears. The terror of being replaced. The suspicion that the system no longer serves us. The desperate search for something authentically human. These are Lang's fears too.

The details will be wrong. The spirit will be prophetic.

Lang ended Metropolis with a handshake — the heart mediating between head and hands. Naive. Sentimental. Exactly what an artist in 1927 would imagine.

We don't even have that. Lang could at least imagine a heart. Can we?

Not "what will AI do?" — but "what will we become?"

The centennial of Metropolis is January 10, 2027.

-

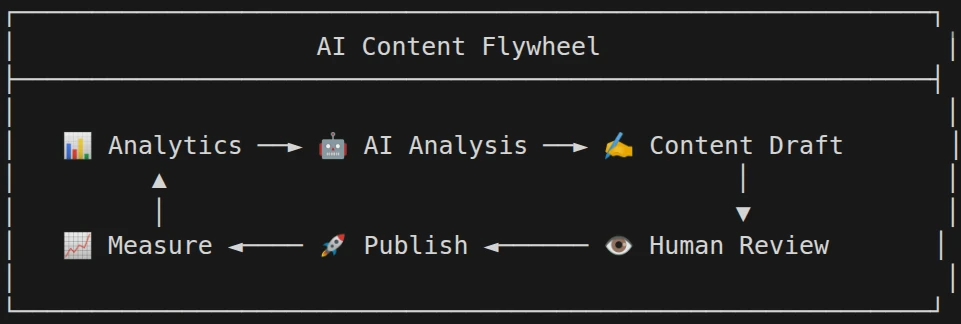

AI Content Flywheel

While all major blogging/CMS platforms are focused on traditional human-centric workflows, the AI Content Flywheel is taking off vertically and demands new concepts and interfaces.

Data layer

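```python
from __future__ import annotations  # placeholder types below are never evaluated

from typing import Dict, List


class AnalyticsStore:
    """Data-layer interface. PostMetrics, TrendData and PostAnalysis are placeholder types."""

    def get_top_posts(self, period: str = "90d") -> List[PostMetrics]: ...

    def get_tag_trends(self) -> Dict[str, TrendData]: ...

    def get_post_characteristics(self, path: str) -> PostAnalysis: ...
```

Analysis layer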

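```python
from collections import Counter
from statistics import mean


def most_common(items, n=1):
    return [item for item, _ in Counter(items).most_common(n)]


def extract_patterns(titles):  # hypothetical stand-in: share of titles containing a number
    return {"has_number": sum(any(ch.isdigit() for ch in t) for t in titles) / len(titles)}


def analyze_content_patterns(analytics):
    """Summarize what the top posts from an AnalyticsStore have in common."""
    top_posts = analytics.get_top_posts()
    return {
        "optimal_length": mean(p.word_count for p in top_posts),
        "best_tags": most_common((t for p in top_posts for t in p.tags), n=5),
        "title_patterns": extract_patterns([p.title for p in top_posts]),
        "best_publish_day": most_common(p.date.weekday() for p in top_posts)[0],  # 0 = Monday
    }
```

Content generation prompts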

```python
def build_system_prompt(patterns: dict, gap_topic: str, days_ago: int, trend: float) -> str:
    # When generating content, include context. `patterns` comes from analyze_content_patterns;
    # the country split and "successful patterns" are the post's example figures.
    tags = ", ".join(patterns["best_tags"])
    length = round(patterns["optimal_length"])
    return f"""
You are helping write content for siteX:

AUDIENCE INSIGHTS:
- Top countries: USA (45%), Germany (12%), UK (8%)
- Best performing tags: {tags}
- Optimal post length: ~{length} words

CONTENT GAPS:
- Last post about "{gap_topic}": {days_ago} days ago
- This topic has shown {trend}% growth in similar blogs

SUCCESSFUL PATTERNS ON THIS BLOG:
- Titles that include numbers perform 2.3x better
- Posts with code examples get 40% more engagement
- Tuesday/Wednesday publishes outperform weekends
"""
```

Automated A/B testing
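The post leaves this step open. As a hypothetical illustration (not the post's own design), one common way to automate it is to serve two title variants and compare click-through rates with a two-proportion z-test. The winner is kept only when the difference is significant. The function names, titles, and traffic numbers below are made up.

```python
import math

def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Return (z, two-sided p-value) for the click-through difference B - A."""
    rate_a, rate_b = clicks_a / views_a, clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)  # shared rate if there is no difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all (0% or 100% clicks): nothing to compare
    z = (rate_b - rate_a) / se
    return z, math.erfc(abs(z) / math.sqrt(2))  # erfc(|z|/sqrt 2) == 2 * (1 - Phi(|z|))

def pick_title(variant_a, variant_b, alpha=0.05):
    """Each variant is (title, clicks, views); returns the winning title or None if inconclusive."""
    title_a, clicks_a, views_a = variant_a
    title_b, clicks_b, views_b = variant_b
    z, p_value = two_proportion_z_test(clicks_a, views_a, clicks_b, views_b)
    if p_value >= alpha:
        return None  # keep serving both variants
    return title_b if z > 0 else title_a

winner = pick_title(("Notes on prompt design", 120, 2000), ("7 prompt design tricks", 165, 2000))
print(winner)  # 7 prompt design tricks
```

A fixed-horizon test like this assumes the sample size is chosen up front. Checking the p-value repeatedly as traffic arrives inflates false positives, so a continuously running flywheel would need a sequential testing method instead.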

You get the picture...

-

ML Year in Review 2025 — From Slop to Singularity

What a year. 2025 was the year AI stopped being "emerging" and became omnipresent. We started the year recognizing a bitter truth about our place in nature's network, and ended it watching new experiments come online. Here's how it unfolded.

The Bitter Lessons

We kicked off 2025 with hard truths. The deepest lesson of AI isn't about compute; it's about humility.

This set the tone. AI was forcing us to reckon with our position — not at the top of some pyramid, but as nodes in a much larger network. The humbling continued as we watched frontier labs struggle with their own creations.

ConwAI's Law emerged: AI models inherit the bad habits of the orgs that build them. Over-confident and sycophantic, just like the management. Meanwhile, the question of what AGI is even for became increasingly urgent:

Everyone's cheering the coming of AGI like it's a utopian milestone. But if you study macro trends & history, it looks more like the spark that turns today's polycrisis into a global wildfire. Think Mad Max, not Star Trek.

The Infrastructure Awakens

This year made one thing clear: we're living in a post-national reality where datacenters are the new cathedrals. The American empire didn't fall — it transformed into the internet.

But silicon might not be the endgame. One of the year's most provocative visions: fungal datacenters performing reservoir computation in vast underground mycelial networks.

Tired: Nvidia. Wired: Nfungi.

The Intelligence Sector Evolves

Perhaps the most comprehensive forecast of the year: notes on how the global intelligence system is mutating from information control to reality engineering.

And beneath the surface, a shadow war for neural sovereignty. BCI geopolitics revealed how cognitive security was lost before it even began — neurocapitalism thriving as a trillion-dollar shadow market:

Synthetic personas, cognitive clouds, neural security agencies — the future isn't just being predicted, it's being constructed. By 2029, "advertising" becomes obsolete, replaced by MCaaS: Mind-Control as a Service.

The advertising apocalypse was actually declared a win for humanity — one of capitalism's most manipulative industries finally shrinking. It's transforming into something potentially more evil, but smaller.

The Dirty Secret

2025 revealed an uncomfortable truth about our digital environment: the system isn't broken, it's just not for humans anymore.

AI controls what you see. AI prefers AI-written content. We used to train AIs to understand us — now we train ourselves to be understood by them. Google and the other heads of the hydra are using AI to dismantle the open web.

And the weaponization escalated. Clients increasingly asked for AI agents built to trigger algorithms and hijack the human mind — maximum psychological warfare disguised as "comms & marketing."

Researchers even ran unauthorized AI persuasion experiments on Reddit, with bots mining user histories for "personalized" manipulation — achieving persuasion rates 3-6x higher than humans.

The Stalled Revolutions

Not everything accelerated. AI music remained stuck in slop-and-jingle territory — a tragedy of imagination where the space that should be loudest is dead quiet.

The real breakthroughs, we predicted, won't come from the lawyer-choked West. They'll come from the underground, open source, and global scenes — just like every musical revolution before.

The Startup Shift

The entrepreneurial game transformed entirely. AI can now build, clone, and market products in days. What once took countless people can be done by one.

The working model: 95% of SaaS becomes obsolete within 2-4 years. What remains is an AI Agent Marketplace run by tech giants. That's why we launched AgentLab.

The Human Side

Amidst the abstractions, there was humanity. With LLMs making app development dramatically easier, I started creating bespoke mini apps for my 5-year-old daughter as a hobby. Few seem to be exploring how AI can uniquely serve this age group.

A deeper realization emerged: we spent all this time engineering "intelligent agent behaviors" when really we were just trying to get the LLM to think like... a person. With limited time. And imperfect information.

The agent is you. Goal decomposition, momentum evaluation, graceful degradation — these are your cognitive patterns formalized into prompts. We're not building artificial intelligence. We're building artificial you.

The Deeper Currents

Beneath the hype, stranger patterns emerged. The Lovecraftian undertones of AI became impossible to ignore:

AI isn't invention — it's recurrence: the return of long-lost civilizations whispering through neural networks. The Cyborg Theocracy looms, and global focus may shift from Artificial Intelligence to Experimental Theology.

The Tools of the Trade

On a practical level, we refined our craft. A useful LLM prompt for UI/UX design emerged, combining the wisdom of Tufte, Norman, Rams, and Victor.

We explored oscillator neural networks, analog computing, and the strange parallels between brains and machines — the brain doesn't store data, it maintains resonant attractors.

This culminated in PhaseScope, a comprehensive framework for understanding oscillatory neural networks, presented at the Singer Lab at the Ernst Strüngmann Institute for Neuroscience.

New research provided evidence that the brain's rhythmic patterns play a key role in information processing — using superposition and interference patterns to represent information in highly distributed ways.

The Prompt Library

One of the year's most practical threads: developing sophisticated system prompts that transform LLMs into specialized reasoning engines.

The "Contemplator" prompt: an assistant that engages in extremely thorough, self-questioning reasoning with a minimum of 10,000 characters of internal monologue.

The Billionaire Council Simulation: get your business analyzed by virtual Musk, Bezos, Blakely, Altman, and Buffett.

And the controversial "Capitalist System Hacker" prompt: pattern recognition for exploiting market inefficiencies.

The Comedy

Amidst the existential dread, there was laughter. The Poodle Hallucination. The Vibe Coding Handbook. The threshold of symbolic absurdity.

Because if we can't laugh at the machines, they've already won.

The Security Theater

A reminder that modern ML models remain highly vulnerable to adversarial attacks. Most defenses are brittle, patchwork fixes. We proudly build safety benchmarks like HarmBench... which are then used to automate adversarial attacks. The irony.

What's Next

As we close the year, new experiments are coming online. 2026 will likely be a breakthrough year for Augmented Reality, as we predicted earlier this year.

The patterns are clear: intelligence is becoming infrastructure, computation is becoming biology, and meaning is becoming algorithmic. Whether that future is technocratic totalism or collaborative collective intelligence depends on who controls the levers of synthesis and simulation.

One thing's certain: it won't be boring.

Onwards!

-

7 amazing frog facts 🐸

- Some frogs are basically transparent. Glass frogs have see-through skin on their bellies. You can literally see their beating heart and organs.

- Frogs can freeze solid and come back to life. Wood frogs survive winter by freezing almost completely. Their heart stops, they don’t breathe, then they thaw in spring and hop away like nothing happened.

- They drink water through their skin. Frogs don’t need to drink with their mouths. They absorb water directly through a special patch of skin on their belly called the “drink patch”.

- Some frogs are extremely poisonous without making poison themselves. Poison dart frogs get their toxins from the insects they eat. In captivity, without those insects, they become harmless.

- The world’s largest frog is huge. The Goliath frog can grow up to 32 cm long and weigh over 3 kg. That’s heavier than many cats.

- Frogs can be louder than chainsaws (relative to size). Certain frogs amplify their calls using inflatable vocal sacs. For their body size, they are among the loudest animals on Earth.

- Some frogs can glide through the air. “Flying frogs” don’t actually fly, but they spread their webbed feet and glide from tree to tree like tiny parachutists.