-

UK government using confidential patient data in coronavirus response (Guardian)

"Technology firms are processing large volumes of confidential UK patient information in a data-mining operation as part of the government’s response to the coronavirus outbreak"

-

Microsoft exec says coronavirus could spark big shift for AI in health care (techexplorer)

Microsoft chief technology officer Kevin Scott grew up fascinated by the 1960s Apollo space program and then-President John F. Kennedy's vision of a moon shot. Now, he envisions just as ambitious a project taking shape as a consequence of the coronavirus pandemic. Just as the U.S. government significantly invested to put Neil Armstrong and others on the moon by 1969—$200 billion in today's dollars by his estimate—Scott said similar funding in artificial intelligence technology could be a difference-maker for our nation's battered health care system.

-

Stanford researchers propose AI in-home system that can monitor for coronavirus symptoms

During the “COVID-19 and AI” livestream event run by the Stanford Institute of Human-Centered AI (HAI), Stanford professor and HAI codirector Dr. Fei-Fei Li presented a concept for an AI-powered in-home system that could track a resident’s health, including for signs of COVID-19, while ensuring privacy.

#Comment: A highly intrusive "AI" in your living room, constantly analysing your bio-signals and sending them to its shadowy corporate owners. What could possibly go wrong? But don't worry and smile, 'cause comments like this will soon be banned by A.I., so we can feel better:

Microsoft claims its AI framework spots fake news better than state-of-the-art baselines

In a study, Microsoft researchers propose an AI framework, Multiple sources of Weak Social Supervision (MWSS), that leverages engagement and social media signals to detect fake news. They say that after training and testing the model on a real-world data set, it outperforms a number of state-of-the-art baselines for early fake news detection.

-

In other news Stanford has made a toilet that identifies you based on your butthole (Nature)

Here, we describe easily deployable hardware and software for the long-term analysis of a user's excreta through data collection and models of human health. The 'smart' toilet, which is self-contained and operates autonomously by leveraging pressure and motion sensors, analyses the user's urine using a standard-of-care colorimetric assay that traces red-green-blue values from images of urinalysis strips, calculates the flow rate and volume of urine using computer vision as a uroflowmeter, and classifies stool according to the Bristol stool form scale using deep learning [...] Each user of the toilet is identified through their fingerprint and the distinctive features of their anoderm, and the data are securely stored and analysed in an encrypted cloud server. The toilet may find uses in the screening, diagnosis and longitudinal monitoring of specific patient populations.

-

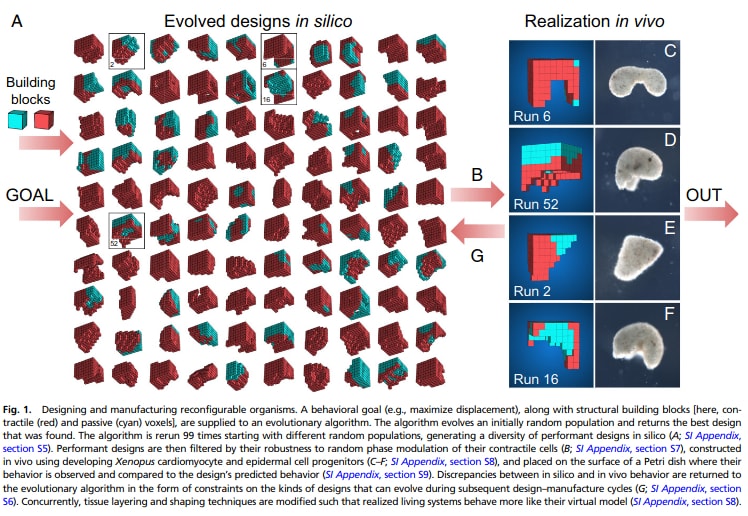

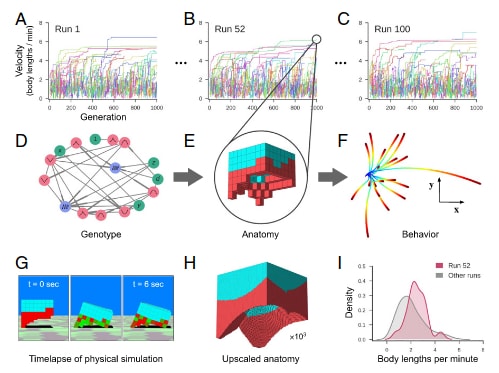

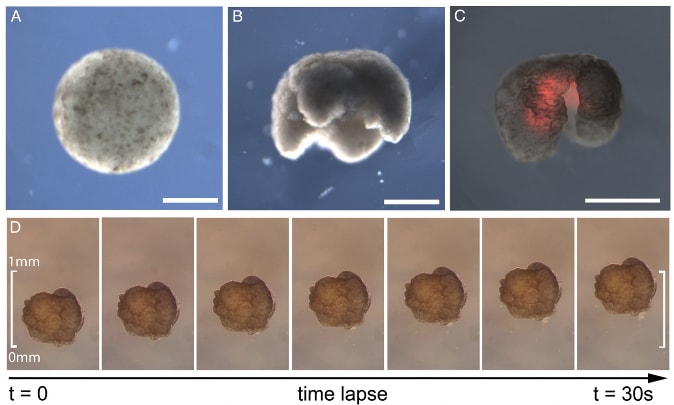

Scientists Create 'Xenobots' -- Virtual Creatures Brought to Life (nytimes.com)

Strictly speaking, these life-forms do not have sex organs, or stomachs, brains or nervous systems. The one under the microscope consisted of about 2,000 living skin cells taken from a frog embryo. Bigger specimens, albeit still smaller than a millimeter-wide poppy seed, have skin cells and heart muscle cells that will begin pulsating by the end of the day. These are all programmable organisms called xenobots, the creation of which was revealed in a scientific paper in January by Sam Kriegman, Douglas Blackiston, Michael Levin, and Josh Bongard.

A xenobot lives for only about a week, feeding on the small platelets of yolk that fill each of its cells and would normally fuel embryonic development. Because its building blocks are living cells, the entity can heal from injury, even after being torn almost in half. But what it does during its short life is decreed not by the ineffable frogginess etched into its DNA — which has not been genetically modified — but by its physical shape. And xenobots come in many shapes, all designed by roboticists in computer simulations, using physics engines similar to those in video games like Fortnite and Minecraft...

All of which makes xenobots amazing and maybe slightly unsettling: golems dreamed in silicon and then written into flesh. The implications of their existence could spill from artificial-intelligence research to fundamental questions in biology and ethics. "We are witnessing almost the birth of a new discipline of synthetic organisms," said Hod Lipson, a roboticist at Columbia University who was not part of the research team. "I don't know if that's robotics, or zoology or something else."

An algorithm running for about 24 hours iterated through possible body shapes, after which the two researchers tried "to sculpt cellular figurines that resembled those designs." They're now considering how the process might be automated with 3-D cell printers, and the Times ponders other future possibilities the researchers have hinted at for their Xenobots. ("Sweep up ocean microplastics into a larger, collectible ball? Deliver drugs to a specific tumor? Scrape plaque from the walls of our arteries?")

-

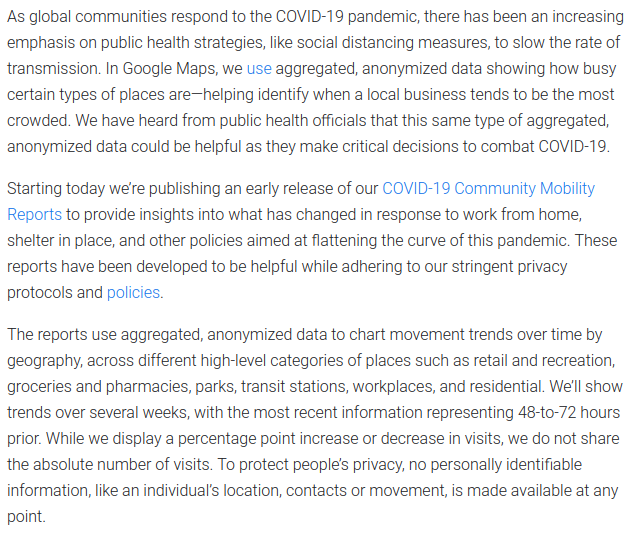

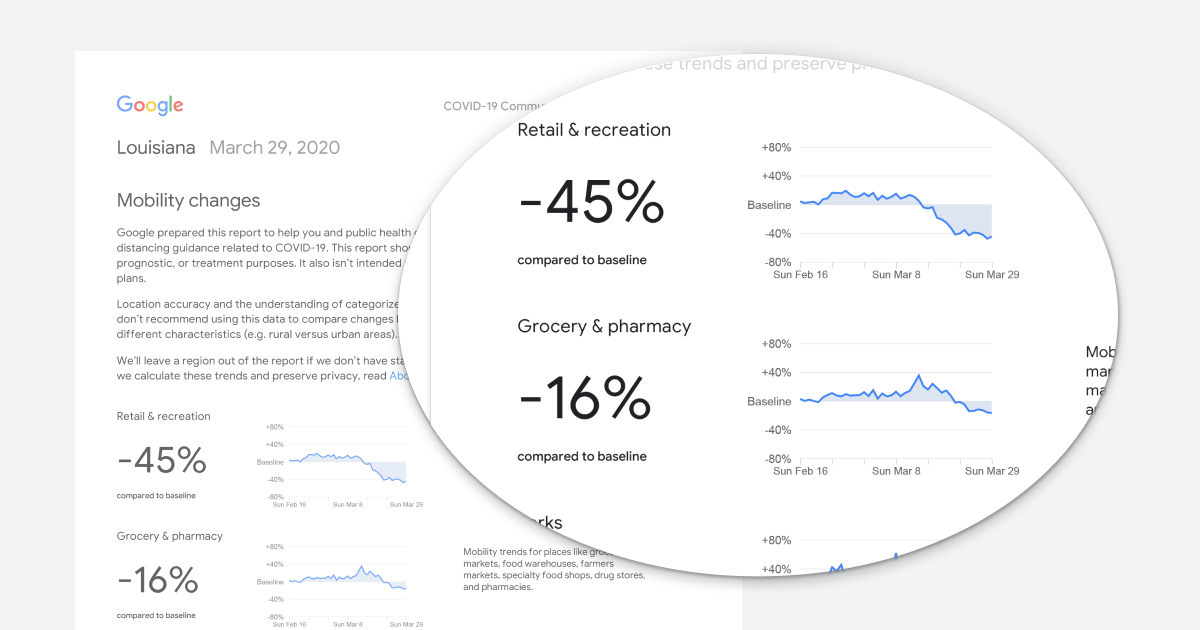

Google Is Publishing Location Data From 131 Countries To Show How Coronavirus Lockdowns Are Working (Buzzfeed) - COVID-19 Community Mobility Reports (Google)

These Community Mobility Reports aim to provide insights into what has changed in response to policies aimed at combating COVID-19. The reports chart movement trends over time by geography, across different categories of places such as retail and recreation, groceries and pharmacies, parks, transit stations, workplaces, and residential. The Reports were developed to be helpful while adhering to our stringent privacy protocols and protecting people’s privacy.

Related: Moscow To Launch Mandatory Surveillance App To Track Residents In Corona Lockdown

Related: S Korea unleashes all-out policy assault on virus - ‘Big Brother’ on steroids.

#Health #Technology #InfoSec #SE #Augmentation #ML #Cryptocracy

-

Neuroevolution of Self-Interpretable Agents (Yujin Tang, Duong Nguyen, David Ha - Google Brain, 2020)

Abstract: Inattentional blindness is the psychological phenomenon that causes one to miss things in plain sight. It is a consequence of the selective attention in perception that lets us remain focused on important parts of our world without distraction from irrelevant details. Motivated by selective attention, we study the properties of artificial agents that perceive the world through the lens of a self-attention bottleneck. By constraining access to only a small fraction of the visual input, we show that their policies are directly interpretable in pixel space. We find neuroevolution ideal for training self-attention architectures for vision-based reinforcement learning tasks, allowing us to incorporate modules that can include discrete, non-differentiable operations which are useful for our agent. We argue that self-attention has similar properties as indirect encoding, in the sense that large implicit weight matrices are generated from a small number of key-query parameters, thus enabling our agent to solve challenging vision based tasks with at least 1000x fewer parameters than existing methods. Since our agent attends to only task-critical visual hints, they are able to generalize to environments where task irrelevant elements are modified while conventional methods fail.
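
The bottleneck the abstract describes can be sketched in a few lines. This is only an illustration under stated assumptions, not the authors' code: the image is assumed to be already cut into flattened patches, the key and query matrices are random rather than evolved, and the column-sum "voting" rule and all names (`select_patches`, `random_matrix`) are hypothetical choices made for this sketch. It uses only the standard library so it runs anywhere; a real implementation would use an array library.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def select_patches(patches, w_key, w_query, k):
    """Return the indices of the k most attended patches and the vote vector.

    patches: N rows, one flattened image patch per row.
    w_key, w_query: small projection matrices (the only learned parameters here).
    """
    keys = matmul(patches, w_key)
    queries = matmul(patches, w_query)
    scale = 1.0 / math.sqrt(len(w_key[0]))
    # N x N attention matrix, generated implicitly from the two small matrices.
    attention = [
        softmax([scale * sum(a * b for a, b in zip(key, query)) for query in queries])
        for key in keys
    ]
    # Assumed voting rule: a patch's importance is the sum of its column.
    votes = [sum(column) for column in zip(*attention)]
    # Hard top-k selection is discrete and non-differentiable, which is why
    # a gradient-free method such as neuroevolution is a natural fit.
    ranked = sorted(range(len(votes)), key=votes.__getitem__, reverse=True)
    return ranked[:k], votes


def random_matrix(rows, cols, rng):
    """Gaussian random matrix, standing in for real patches or evolved weights."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    rng = random.Random(0)
    n_patches, patch_dim, d = 64, 12, 4
    patches = random_matrix(n_patches, patch_dim, rng)
    w_key = random_matrix(patch_dim, d, rng)
    w_query = random_matrix(patch_dim, d, rng)
    chosen, votes = select_patches(patches, w_key, w_query, k=5)
    print("patches kept:", chosen)
    print("learned parameters:", 2 * patch_dim * d)
    print("implicit attention entries:", n_patches * n_patches)
```

With these toy sizes, 96 key-query parameters generate a 64 x 64 attention matrix, which is the "indirect encoding" point in miniature. Only the selected patch indices would be passed on to the controller, so the agent's focus can be read directly off the image.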

-

Intel is using A.I. to build smell-o-vision chips (Intel, 2020)

Smell-O-Vision machines of the past

With machine learning, Loihi can recognize hazardous chemicals “in the presence of significant noise and occlusion,” Intel said, suggesting the chip can be used in the real world where smells (such as perfumes, food, and other odors) are often found in the same area as a harmful chemical. Machine learning trained Loihi to learn and identify each hazardous odor with just a single sample, and learning a new smell didn’t disrupt previously learned scents. Intel claims Loihi can learn 10 different odors right now.

How smell, emotion, and memory are intertwined, and exploited (Harvard, 2020)

Researchers explore how certain scents often elicit specific emotions and memories in people, and how marketing companies are manipulating the link for branding.

-

New blood test can detect 50 types of cancer (Bombastic Misleading Headline, by The Guardian)

A new blood test that can detect more than 50 types of cancer has been revealed by researchers in the latest study. Writing in the journal Annals of Oncology, the team reveal how the test was developed using a machine learning algorithm. The team initially fed the system with data on methylation patterns in DNA from within blood samples taken from more than 2,800 patients, before further training it with data from 3,052 participants.

When it came to identifying people with cancers the team found that, across more than 50 different types of cancer, the system correctly detected that the disease was present 44% of the time – although the team stress that figure could differ if the test was used to screen a general population, rather than those known to have cancer. Detection was better the more advanced the disease was. Overall, cancer was correctly detected in 18% of those with stage I cancer, but in 93% of those with stage IV cancer.
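
To see why the 44% figure says little about screening a general population, here is a rough back-of-the-envelope sketch. The sensitivities come from the excerpt above; the specificity and prevalence are NOT given there and are purely assumed values for illustration, as are the function names.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Share of positive test results that are true cancers (Bayes' rule)."""
    true_positives = sensitivity * prevalence
    false_positives = (1.0 - specificity) * (1.0 - prevalence)
    return true_positives / (true_positives + false_positives)


def expected_counts(n_people, sensitivity, specificity, prevalence):
    """Expected outcomes when n_people are screened once."""
    with_cancer = n_people * prevalence
    without_cancer = n_people - with_cancer
    return {
        "detected": sensitivity * with_cancer,
        "missed": (1.0 - sensitivity) * with_cancer,
        "false_alarms": (1.0 - specificity) * without_cancer,
    }


# Sensitivities reported in the article.
SENSITIVITY = {"overall": 0.44, "stage I": 0.18, "stage IV": 0.93}

# Assumptions for illustration only; neither number is from the article.
ASSUMED_SPECIFICITY = 0.99
ASSUMED_PREVALENCE = 0.01

if __name__ == "__main__":
    for label, sens in SENSITIVITY.items():
        ppv = positive_predictive_value(sens, ASSUMED_SPECIFICITY, ASSUMED_PREVALENCE)
        print(f"{label}: PPV = {ppv:.1%}")
    print(expected_counts(100_000, SENSITIVITY["overall"],
                          ASSUMED_SPECIFICITY, ASSUMED_PREVALENCE))
```

Under these assumed numbers, screening 100,000 people would find about 440 cancers, miss about 560, and raise about 990 false alarms, so only roughly 31% of positive results would be real. Different assumptions shift the numbers considerably, which is the point of the caveat in the article.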

-

S Korea unleashes all-out policy assault on virus - ‘Big Brother’ on steroids (Asia Times)

The Korea Center for Disease Control and Prevention is in charge of the new digital surveillance system. It combines information from 27 public and private organizations including the National Police Agency, the Credit Finance Association, three mobile carriers and 22 credit card firms. This vast collection of big data is amassed, trawled through and analyzed by AI. The system is a comprehensive upgrade to a previous program under which “data detectives” combined CCTV footage, GPS location information, credit card payments and other metrics to track down persons who may have been in contact with the infected person.

-

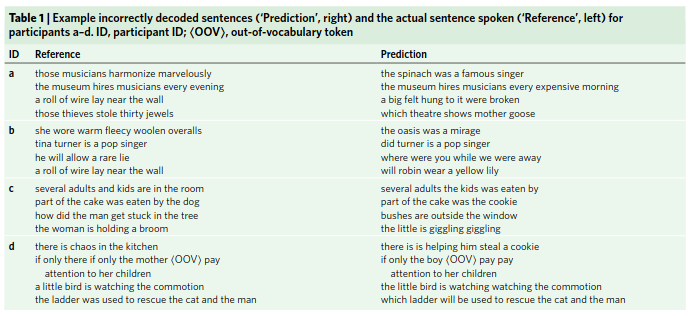

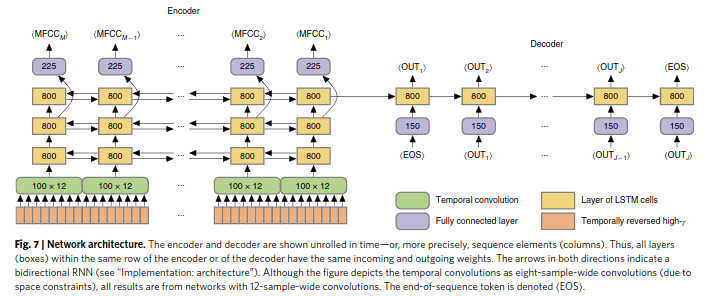

Machine translation of cortical activity to text with an encoder–decoder framework (Nature Neuroscience)

Reading minds has just come a step closer to reality: scientists have developed artificial intelligence that can turn brain activity into text. “We are not there yet but we think this could be the basis of a speech prosthesis,” said Dr Joseph Makin, co-author of the research from the University of California, San Francisco.

Writing in the journal Nature Neuroscience (unpaywalled), Makin and colleagues reveal how they developed their system by recruiting four participants who had electrode arrays implanted in their brain to monitor epileptic seizures. These participants were asked to read aloud from 50 set sentences multiple times, including “Tina Turner is a pop singer”, and “Those thieves stole 30 jewels”. The team tracked their neural activity while they were speaking. This data was then fed into a machine-learning algorithm, a type of artificial intelligence system that converted the brain activity data for each spoken sentence into a string of numbers.

-

Tiny Qoobo - The headless robot cat

-

UK has enough intensive care units for coronavirus, expert predicts (New Scientist)

The UK should now be able to cope with the spread of the covid-19 virus, according to one of the epidemiologists advising the government. Neil Ferguson at Imperial College London said that expected increases in National Health Service capacity and ongoing restrictions to people’s movements make him “reasonably confident” the health service can cope when the predicted peak of the epidemic arrives in two or three weeks. UK deaths from the disease are now unlikely to exceed 20,000, he said, and could be much lower.

Comment by @AlexBerenson: This is a remarkable turn from Neil Ferguson, who led the @imperialcollege authors who warned of 500,000 UK deaths - and who has now himself tested positive for COVID. He now says both that the U.K. should have enough ICU beds and that the coronavirus will probably kill under 20,000 people in the U.K. - more than 1/2 of whom would have died by the end of the year in any case because they were so old and sick. Essentially, what has happened is that estimates of the virus's transmissibility have increased - which implies that many more people have already gotten it than we realize - which in turn implies it is less dangerous. Ferguson now predicts that the epidemic in the U.K. will peak and subside within “two to three weeks” - last week’s paper said 18+ months of quarantine would be necessary. One last point here: Ferguson gives the lockdown credit, which is *interesting* - the UK only began its lockdown 2 days ago, and the theory is that lockdowns take 2 weeks or more to work. Not surprisingly, this testimony has received no attention in the US - I found it only in UK papers. Team Apocalypse is not interested.

More from Imperial

- Coronavirus pandemic could have caused 40 million deaths if left unchecked (Imperial)

- COVID-19: Imperial researchers model likely impact of public health measures (Imperial)

-

COVID-19 & AI - Privacy & Ethical Considerations (RE-Work)

Extraordinary circumstances require extraordinary measures - as we battle the ongoing COVID-19 pandemic, governments, industry and citizens around the world have kicked efforts into high gear to find creative and effective ways to curb the spread of the disease. A variety of technological and AI-enabled solutions are being proposed and piloted with varying degrees of success. What's being discussed less is how this might alter what precedents get set and how the technology landscape will change once the pandemic has subsided.

Finally, we must pay attention to the creeping deployment of surveillance infrastructure under the guise of fighting the pandemic. Particular attention needs to be focused on powers being granted to the government, the technological solutions being deployed and the legal and social precedents being set. We don't want to emerge from the pandemic crisis with a privacy and ethical crisis on our hands. The solutions being deployed to combat COVID-19 need to be purpose- and time-limited to hold our rights and freedoms in place.

-

Japan’s Prime Minister Shinzo Abe attends a G7 leaders' video conference

In such extraordinary circumstances, forecasts and predictions by clever computers or AI-driven algorithms cannot provide specific solutions. These can only come about by putting our smartest thinkers together through multilateral action and global cooperation.

-

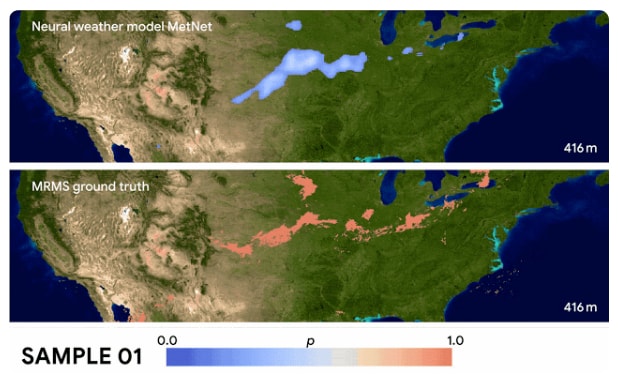

Google details MetNet, an AI model better than NOAA at predicting precipitation (Venturebeat)

In a blog post and accompanying paper, researchers at Google detail an AI system — MetNet — that can predict precipitation up to eight hours into the future. They say that it outperforms the current state-of-the-art physics model in use by the U.S. National Oceanic and Atmospheric Administration (NOAA) and that it makes a prediction over the entire U.S. in seconds as opposed to an hour.

-

ANML Learning to Continually Learn - talk by Jeff Clune (OpenAI)